
This Colab shows how to use the SPICE model downloaded from TensorFlow Hub.

sudo apt-get install -q -y timidity libsndfile1


pip install pydub librosa music21

# All the imports to deal with sound data

import tensorflow as tf

import tensorflow_hub as hub

import numpy as np

import matplotlib.pyplot as plt

import librosa

from librosa import display as librosadisplay

import logging

import math

import statistics

import sys

from IPython.display import Audio, Javascript

from scipy.io import wavfile

from base64 import b64decode

import music21

from pydub import AudioSegment

logger = logging.getLogger()

logger.setLevel(logging.ERROR)

print("tensorflow: %s" % tf.__version__)

#print("librosa: %s" % librosa.__version__)


tensorflow: 2.15.0


The audio input file

Now the hardest part: record your singing! :)

There are four ways to obtain an audio file:

- Record audio directly in Colab

- Upload a file from your computer

- Use a file saved on Google Drive

- Download a file from the web

Choose one of the four methods below.

[Run this] Definition of the JS code to record audio straight from the browser

RECORD = """
const sleep = time => new Promise(resolve => setTimeout(resolve, time))
const b2text = blob => new Promise(resolve => {
  const reader = new FileReader()
  reader.onloadend = e => resolve(e.srcElement.result)
  reader.readAsDataURL(blob)
})
var record = time => new Promise(async resolve => {
  stream = await navigator.mediaDevices.getUserMedia({ audio: true })
  recorder = new MediaRecorder(stream)
  chunks = []
  recorder.ondataavailable = e => chunks.push(e.data)
  recorder.start()
  await sleep(time)
  recorder.onstop = async ()=>{
    blob = new Blob(chunks)
    text = await b2text(blob)
    resolve(text)
  }
  recorder.stop()
})
"""

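def record(sec=5):
    # Records `sec` seconds of audio in the browser and saves it to a file.
    # This only works inside Colab, where google.colab.output is available.
    try:
        from google.colab import output
    except ImportError:
        print('Not possible to import output from google.colab')
        return ''
    else:
        print('Recording')
        display(Javascript(RECORD))
        s = output.eval_js('record(%d)' % (sec*1000))
        fname = 'recorded_audio.wav'
        print('Saving to', fname)
        # The browser returns a data URL; the payload follows the comma.
        b = b64decode(s.split(',')[1])
        with open(fname, 'wb') as f:
            f.write(b)
        return fname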

Select how to input your audio

INPUT_SOURCE = 'https://storage.googleapis.com/download.tensorflow.org/data/c-scale-metronome.wav'

print('You selected', INPUT_SOURCE)

if INPUT_SOURCE == 'RECORD':
    uploaded_file_name = record(5)
elif INPUT_SOURCE == 'UPLOAD':
    try:
        from google.colab import files
    except ImportError:
        print("ImportError: files from google.colab seems to not be available")
    else:
        uploaded = files.upload()
        for fn in uploaded.keys():
            print('User uploaded file "{name}" with length {length} bytes'.format(
                name=fn, length=len(uploaded[fn])))
        uploaded_file_name = next(iter(uploaded))
        print('Uploaded file: ' + uploaded_file_name)
elif INPUT_SOURCE.startswith('./drive/'):
    try:
        from google.colab import drive
    except ImportError:
        print("ImportError: drive from google.colab seems to not be available")
    else:
        drive.mount('/content/drive')
        # don't forget to change the name of the file you
        # will use here!
        gdrive_audio_file = 'YOUR_MUSIC_FILE.wav'
        uploaded_file_name = INPUT_SOURCE
elif INPUT_SOURCE.startswith('http'):
    !wget --no-check-certificate 'https://storage.googleapis.com/download.tensorflow.org/data/c-scale-metronome.wav' -O c-scale.wav
    uploaded_file_name = 'c-scale.wav'
else:
    print('Unrecognized input format!')
    print('Please select "RECORD", "UPLOAD", or specify a file hosted on Google Drive or a file from the web to download')

You selected https://storage.googleapis.com/download.tensorflow.org/data/c-scale-metronome.wav

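Preparing the audio data

Now that we have the audio, let's convert it to the expected format and then listen to it!

The SPICE model needs as input an audio file with a sampling rate of 16 kHz and a single channel (mono).

To help with this part, we provide a function (convert_audio_for_model) that converts any wav file to the format the model expects.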

# Function that converts the user-created audio to the format that the model
# expects: sample rate 16 kHz and only one channel (mono).

EXPECTED_SAMPLE_RATE = 16000

def convert_audio_for_model(user_file, output_file='converted_audio_file.wav'):
    audio = AudioSegment.from_file(user_file)
    audio = audio.set_frame_rate(EXPECTED_SAMPLE_RATE).set_channels(1)
    audio.export(output_file, format="wav")
    return output_file

# Convert to the format the model expects.
# Whichever of the four input methods was used, the file name is stored
# in the variable uploaded_file_name.
converted_audio_file = convert_audio_for_model(uploaded_file_name)

# Loading audio samples from the wav file:
sample_rate, audio_samples = wavfile.read(converted_audio_file)

# Show some basic information about the audio.
duration = len(audio_samples)/sample_rate
print(f'Sample rate: {sample_rate} Hz')
print(f'Total duration: {duration:.2f}s')
print(f'Size of the input: {len(audio_samples)}')

# Let's listen to the wav file.
Audio(audio_samples, rate=sample_rate)

Sample rate: 16000 Hz
Total duration: 11.89s
Size of the input: 190316
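As a quick sanity check on the converted file, the sketch below reads a WAV header with Python's standard `wave` module and reports whether it matches the 16 kHz mono format described above. The helper name `is_model_ready` and the temporary demo file are illustrative assumptions and are not part of the original notebook.

```python
import os
import tempfile
import wave

EXPECTED_SAMPLE_RATE = 16000  # Hz, the rate the SPICE model expects

def is_model_ready(path):
    """Return True if the WAV file at `path` is 16 kHz mono (hypothetical helper)."""
    with wave.open(path, "rb") as wav:
        return (wav.getframerate() == EXPECTED_SAMPLE_RATE
                and wav.getnchannels() == 1)

# Demo: write one second of 16-bit silence at 16 kHz mono, then check it.
demo_path = os.path.join(tempfile.mkdtemp(), "demo.wav")
with wave.open(demo_path, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(2)  # 2 bytes per sample = int16
    wav.setframerate(EXPECTED_SAMPLE_RATE)
    wav.writeframes(b"\x00\x00" * EXPECTED_SAMPLE_RATE)

demo_ok = is_model_ready(demo_path)
print("Ready for SPICE:", demo_ok)
```

In the notebook itself, the same check could be pointed at `converted_audio_file` instead of the demo file.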

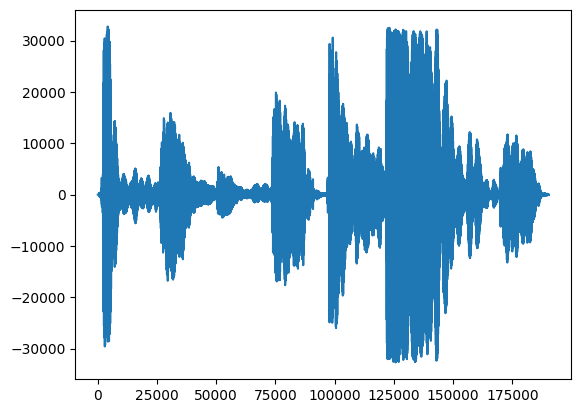

First things first, let's take a look at the waveform of our singing.

# We can visualize the audio as a waveform.

_ = plt.plot(audio_samples)

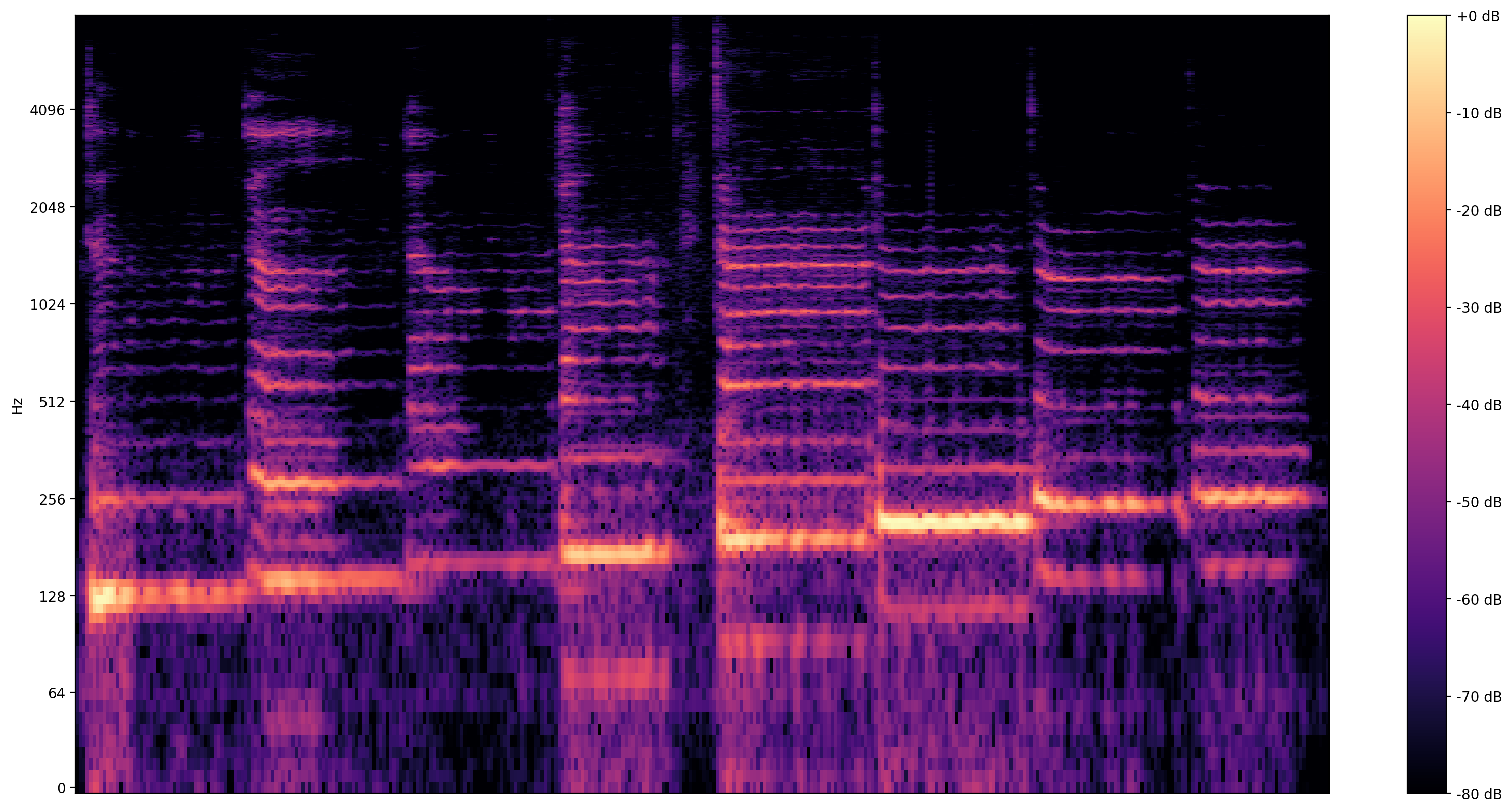

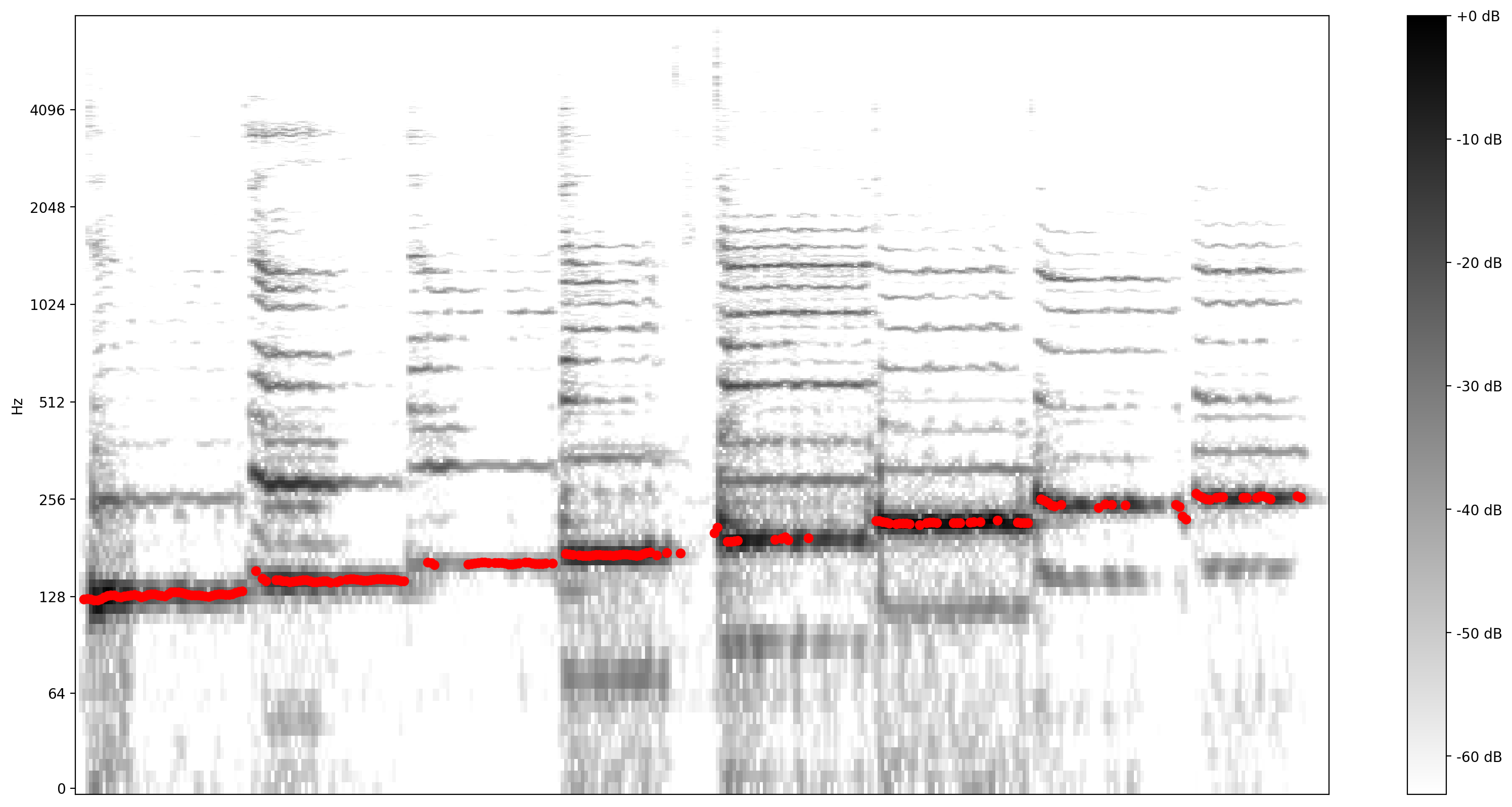

A more informative visualization is the spectrogram, which shows the frequencies present over time.

Here, we use a logarithmic frequency scale to make the singing more clearly visible.

MAX_ABS_INT16 = 32768.0

def plot_stft(x, sample_rate, show_black_and_white=False):
    x_stft = np.abs(librosa.stft(x, n_fft=2048))
    fig, ax = plt.subplots()
    fig.set_size_inches(20, 10)
    x_stft_db = librosa.amplitude_to_db(x_stft, ref=np.max)
    if show_black_and_white:
        librosadisplay.specshow(data=x_stft_db, y_axis='log',
                                sr=sample_rate, cmap='gray_r')
    else:
        librosadisplay.specshow(data=x_stft_db, y_axis='log', sr=sample_rate)

    plt.colorbar(format='%+2.0f dB')

plot_stft(audio_samples / MAX_ABS_INT16, sample_rate=EXPECTED_SAMPLE_RATE)
plt.show()

We need one last conversion here. The audio samples are in int16 format and must be normalized to floating-point values between -1 and 1.

audio_samples = audio_samples / float(MAX_ABS_INT16)
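To make the normalization concrete, here is a minimal sketch that applies the same division to a handful of toy int16 values. It uses plain Python lists so it runs without NumPy; the helper name `normalize_int16` and the sample values are assumptions chosen for illustration.

```python
MAX_ABS_INT16 = 32768.0

def normalize_int16(samples):
    """Scale int16 sample values into the range [-1.0, 1.0)."""
    return [s / MAX_ABS_INT16 for s in samples]

# The extreme int16 values, zero, and a mid-range value as a toy input.
toy_samples = [-32768, 0, 16384, 32767]
normalized = normalize_int16(toy_samples)
print(normalized)
```

Note that the largest positive int16 value (32767) maps to just under 1.0, while the most negative value (-32768) maps to exactly -1.0.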

モデルを実行する

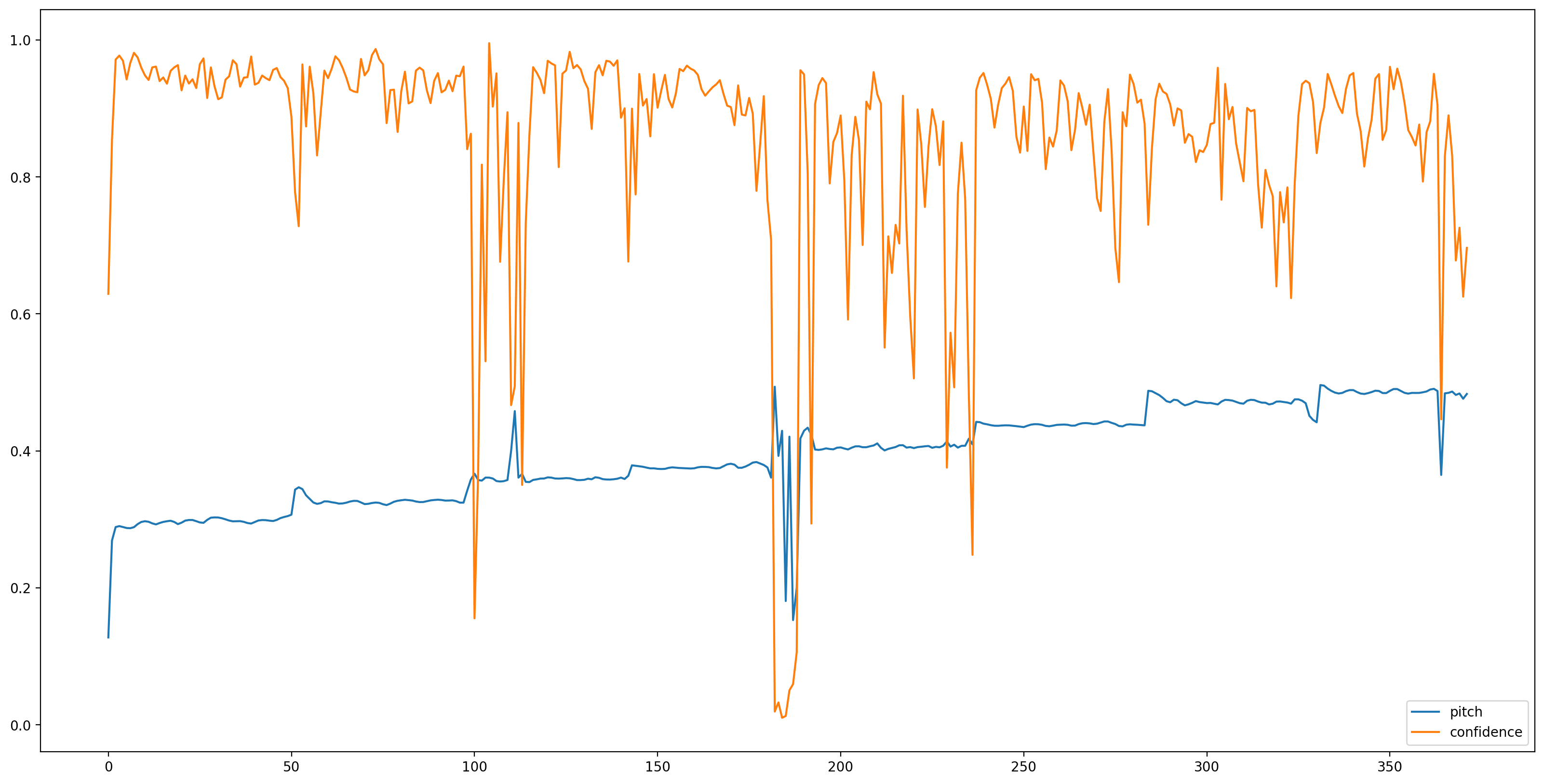

ようやく簡単な作業です。TensorFlow Hub でモデルを読み込み、音声をフィードしましょう。SPICE から ピッチと不確実性の 2 つの出力が得られます。

TensorFlow Hub は、機械学習モデルの再利用可能な部分の公開、発見、および消費のためのライブラリです。ユーザーの抱える課題の解決する機械学習の使用を簡単にすることができます。

モデルを読み込むには、Hub モジュールと、モデルにポイントする URL のみが必要です。

# Loading the SPICE model is easy:

model = hub.load("https://tfhub.dev/google/spice/2")


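Note: An interesting detail here is that every model URL on Hub can be used both to download the model and to read its documentation. If you open the link in your browser, you can read how to use the model and learn more about how it was trained.

With the model loaded and the data prepared, we need three lines to get the result.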

# We now feed the audio to the SPICE tf.hub model to obtain pitch and uncertainty outputs as tensors.

model_output = model.signatures["serving_default"](tf.constant(audio_samples, tf.float32))

pitch_outputs = model_output["pitch"]

uncertainty_outputs = model_output["uncertainty"]

# 'Uncertainty' basically means the inverse of confidence.

confidence_outputs = 1.0 - uncertainty_outputs

fig, ax = plt.subplots()

fig.set_size_inches(20, 10)

plt.plot(pitch_outputs, label='pitch')

plt.plot(confidence_outputs, label='confidence')

plt.legend(loc="lower right")

plt.show()

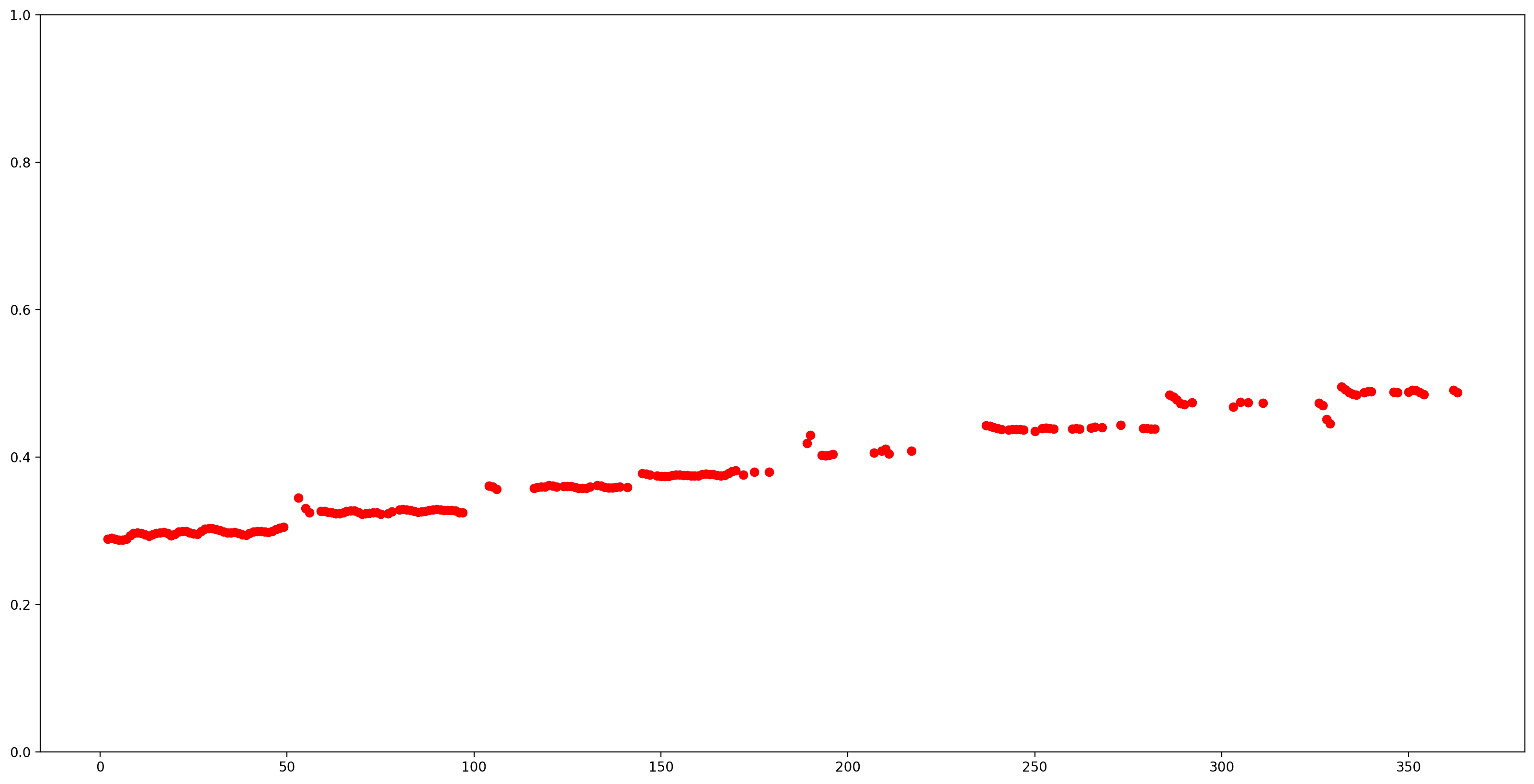

Let's make the results easier to understand by removing all pitch estimates with low confidence (confidence < 0.9) and plotting the remaining ones.

confidence_outputs = list(confidence_outputs)
pitch_outputs = [float(x) for x in pitch_outputs]

indices = range(len(pitch_outputs))
# Keep only the (frame index, pitch) pairs with confidence of at least 0.9.
confident_pitch_outputs = [
    (i, p)
    for i, p, c in zip(indices, pitch_outputs, confidence_outputs)
    if c >= 0.9
]
confident_pitch_outputs_x, confident_pitch_outputs_y = zip(*confident_pitch_outputs)

fig, ax = plt.subplots()
fig.set_size_inches(20, 10)
ax.set_ylim([0, 1])
plt.scatter(confident_pitch_outputs_x, confident_pitch_outputs_y, c="r")
plt.show()
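The filtering step can be tried in isolation on a few made-up values. The sketch below is an illustration only: the helper name `keep_confident` and the toy pitch and confidence lists are assumptions, while the 0.9 threshold is the one used above.

```python
CONFIDENCE_THRESHOLD = 0.9

def keep_confident(pitches, confidences, threshold=CONFIDENCE_THRESHOLD):
    """Return (frame_index, pitch) pairs whose confidence meets the threshold."""
    return [
        (i, p)
        for i, (p, c) in enumerate(zip(pitches, confidences))
        if c >= threshold
    ]

# Toy model outputs: the third frame has low confidence and is dropped.
toy_pitches = [0.41, 0.42, 0.10, 0.43]
toy_confidences = [0.95, 0.97, 0.30, 0.90]
kept = keep_confident(toy_pitches, toy_confidences)
print(kept)
```

Keeping the frame index next to each pitch is what lets the surviving points be plotted at their original positions in time.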

The pitch values returned by SPICE are in the range from 0 to 1. Let's convert them to absolute pitch values in Hz.

def output2hz(pitch_output):
    # Constants taken from https://tfhub.dev/google/spice/2
    PT_OFFSET = 25.58
    PT_SLOPE = 63.07
    FMIN = 10.0
    BINS_PER_OCTAVE = 12.0
    cqt_bin = pitch_output * PT_SLOPE + PT_OFFSET
    return FMIN * 2.0 ** (1.0 * cqt_bin / BINS_PER_OCTAVE)

confident_pitch_values_hz = [output2hz(p) for p in confident_pitch_outputs_y]
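Written out, the conversion is f = 10 · 2^((63.07 · p + 25.58) / 12), where p is the model output. One way to build intuition for this mapping is to invert it and ask which output value corresponds to a familiar note. The sketch below repeats `output2hz` so that it runs on its own; `hz2output` is a hypothetical inverse that is not part of the notebook or the model's documentation.

```python
import math

# Constants as quoted in the notebook from https://tfhub.dev/google/spice/2
PT_OFFSET = 25.58
PT_SLOPE = 63.07
FMIN = 10.0
BINS_PER_OCTAVE = 12.0

def output2hz(pitch_output):
    """Map a SPICE pitch output in [0, 1] to a frequency in Hz."""
    cqt_bin = pitch_output * PT_SLOPE + PT_OFFSET
    return FMIN * 2.0 ** (cqt_bin / BINS_PER_OCTAVE)

def hz2output(freq_hz):
    """Inverse of output2hz (hypothetical helper for illustration)."""
    cqt_bin = BINS_PER_OCTAVE * math.log2(freq_hz / FMIN)
    return (cqt_bin - PT_OFFSET) / PT_SLOPE

# Which model output corresponds to concert pitch A4 (440 Hz)?
a4_output = hz2output(440.0)
round_trip_hz = output2hz(a4_output)
print(f"A4 (440 Hz) corresponds to a model output of about {a4_output:.3f}")
print(f"Round trip back to Hz: {round_trip_hz:.2f}")
```

Because the mapping is exponential in the output value, equal steps in the model output correspond to equal musical intervals rather than equal steps in Hz.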

Now, let's see how good the prediction is. We overlay the predicted pitches on the original spectrogram. To make the pitch predictions easier to see, the spectrogram is drawn in black and white.

# audio_samples was already normalized to [-1, 1] above, so it is passed as-is.
plot_stft(audio_samples,
          sample_rate=EXPECTED_SAMPLE_RATE, show_black_and_white=True)

# Note: conveniently, since the plot is in log scale, the pitch outputs
# also get converted to the log scale automatically by matplotlib.
plt.scatter(confident_pitch_outputs_x, confident_pitch_values_hz, c="r")
plt.show()

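Converting to musical notes

Now that we have the pitch values, let's convert them to notes! This part is challenging in its own right. We have to take two things into account:

- the rests (where there is no singing)

- the size of each note (offsets)

1: Adding zeros to the output to indicate where there is no singing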

pitch_outputs_and_rests = [
    output2hz(p) if c >= 0.9 else 0
    for i, p, c in zip(indices, pitch_outputs, confidence_outputs)
]

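2: Adding note offsets

When a person sings freely, the melody may have an offset relative to the absolute pitch values that notes can represent. Hence, to convert predictions to notes, we need to correct for this possible offset. This is what the following code computes.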

A4 = 440
C0 = A4 * pow(2, -4.75)
note_names = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def hz2offset(freq):
    # This measures the quantization error for a single note.
    if freq == 0:  # Rests have no quantization error to measure.
        return None
    # Quantized note.
    h = round(12 * math.log2(freq / C0))
    return 12 * math.log2(freq / C0) - h

# The ideal offset is the mean quantization error for all the notes
# (excluding rests):
offsets = [hz2offset(p) for p in pitch_outputs_and_rests if p != 0]
print("offsets: ", offsets)

ideal_offset = statistics.mean(offsets)
print("ideal offset: ", ideal_offset)

offsets: [0.2851075707500712, 0.3700368844097355, 0.2861620445674262, ..., 0.011681766969516616, -0.1931417836124183]
ideal offset: -0.16889355771202758
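To see what the offset means, consider a toy melody in which every note is sung about 0.2 semitones flat. The sketch below is an assumption-laden illustration: the three frequencies and the detune amount are made up, and `semitones_from_c0` is a helper introduced here for readability. It computes the same per-note quantization error and mean as the code above.

```python
import math
import statistics

A4 = 440
C0 = A4 * pow(2, -4.75)

def semitones_from_c0(freq):
    """Continuous semitone distance of `freq` above C0."""
    return 12 * math.log2(freq / C0)

def hz2offset(freq):
    """Signed distance (in semitones) from the nearest equal-tempered note."""
    exact = semitones_from_c0(freq)
    return exact - round(exact)

# Toy melody (illustrative assumption): A4, B4 and C#5,
# each sung about 0.2 semitones flat.
detune = 2 ** (-0.2 / 12)
toy_freqs = [440.0 * detune, 493.88 * detune, 554.37 * detune]
toy_offsets = [hz2offset(f) for f in toy_freqs]
toy_ideal_offset = statistics.mean(toy_offsets)
print(f"ideal offset: {toy_ideal_offset:.3f} semitones")
```

Subtracting this mean from every prediction before rounding shifts the whole melody back onto the note grid, which is how `ideal_offset` is used in the quantization step.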

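We can now use some heuristics to estimate the most likely sequence of notes that were sung. The ideal offset computed above is one ingredient, but we also need to know the speed (how many predictions make up, say, an eighth note?) and the time offset at which to start quantizing. To keep it simple, we try different speeds and time offsets, measure the quantization error for each, and in the end use the values that minimize this error.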

def quantize_predictions(group, ideal_offset):
    # Group values are either 0, or a pitch in Hz.
    non_zero_values = [v for v in group if v != 0]
    zero_values_count = len(group) - len(non_zero_values)

    # Create a rest if 80% is silent, otherwise create a note.
    if zero_values_count > 0.8 * len(group):
        # Interpret as a rest. Count each dropped note as an error, weighted a bit
        # worse than a badly sung note (which would 'cost' 0.5).
        return 0.51 * len(non_zero_values), "Rest"
    else:
        # Interpret as note, estimating as mean of non-rest predictions.
        h = round(
            statistics.mean([
                12 * math.log2(freq / C0) - ideal_offset for freq in non_zero_values
            ]))
        octave = h // 12
        n = h % 12
        note = note_names[n] + str(octave)
        # Quantization error is the total difference from the quantized note.
        error = sum([
            abs(12 * math.log2(freq / C0) - ideal_offset - h)
            for freq in non_zero_values
        ])
        return error, note

def get_quantization_and_error(pitch_outputs_and_rests, predictions_per_eighth,

prediction_start_offset, ideal_offset):

# Apply the start offset - we can just add the offset as rests.

pitch_outputs_and_rests = [0] * prediction_start_offset + \

pitch_outputs_and_rests

# Collect the predictions for each note (or rest).

groups = [

pitch_outputs_and_rests[i:i + predictions_per_eighth]

for i in range(0, len(pitch_outputs_and_rests), predictions_per_eighth)

]

quantization_error = 0

notes_and_rests = []

for group in groups:

error, note_or_rest = quantize_predictions(group, ideal_offset)

quantization_error += error

notes_and_rests.append(note_or_rest)

return quantization_error, notes_and_rests

best_error = float("inf")

best_notes_and_rests = None

best_predictions_per_note = None

for predictions_per_note in range(20, 65, 1):

for prediction_start_offset in range(predictions_per_note):

error, notes_and_rests = get_quantization_and_error(

pitch_outputs_and_rests, predictions_per_note,

prediction_start_offset, ideal_offset)

if error < best_error:

best_error = error

best_notes_and_rests = notes_and_rests

best_predictions_per_note = predictions_per_note

# At this point, best_notes_and_rests contains the best quantization.

# Since we don't need to have rests at the beginning, let's remove these:

while best_notes_and_rests[0] == 'Rest':

best_notes_and_rests = best_notes_and_rests[1:]

# Also remove silence at the end.

while best_notes_and_rests[-1] == 'Rest':

best_notes_and_rests = best_notes_and_rests[:-1]
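To see how the search over speeds and start offsets behaves, it can help to run the same idea on a tiny synthetic input. The following is a minimal sketch, not the notebook's own code: the constants `A4`, `C0` and `NOTE_NAMES` are assumed to mirror the ones defined earlier in the notebook, the helper names (`quantize_group`, `search`, `hz`) are hypothetical, the ideal offset is taken to be 0, and the speed range is shrunk to 4..7 because the toy notes are only 5 predictions long.

```python
import math
import statistics

# Assumption: these mirror the constants defined earlier in the notebook.
A4 = 440.0
C0 = A4 * 2 ** (-4.75)
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def semitones_above_c0(freq_hz):
    """Continuous pitch of a frequency, in semitones above C0."""
    return 12 * math.log2(freq_hz / C0)


def quantize_group(group):
    """Return (error, label) for one group of predictions (0 means silence)."""
    voiced = [f for f in group if f != 0]
    if len(group) - len(voiced) > 0.8 * len(group):
        # Mostly silent: a rest, penalised per dropped voiced prediction.
        return 0.51 * len(voiced), "Rest"
    pitches = [semitones_above_c0(f) for f in voiced]
    h = round(statistics.mean(pitches))
    return sum(abs(p - h) for p in pitches), NOTE_NAMES[h % 12] + str(h // 12)


def search(predictions, speeds):
    """Try every (speed, start offset) pair and keep the lowest total error."""
    best = (float("inf"), None, None, None)
    for per_note in speeds:
        for offset in range(per_note):
            # A start offset is modelled by prepending silent predictions.
            padded = [0] * offset + predictions
            groups = [padded[i:i + per_note]
                      for i in range(0, len(padded), per_note)]
            results = [quantize_group(g) for g in groups]
            error = sum(e for e, _ in results)
            if error < best[0]:
                best = (error, per_note, offset, [n for _, n in results])
    return best


def hz(semitone):
    """Frequency of the pitch that lies `semitone` semitones above C0."""
    return C0 * 2 ** (semitone / 12)


# Synthetic input: 2 silent predictions, then A4, B4, C5 at 5 predictions each.
toy = [0, 0] + [hz(57)] * 5 + [hz(59)] * 5 + [hz(60)] * 5
error, per_note, offset, notes = search(toy, range(4, 8))
print(per_note, offset, notes)
```

On this input, only 5 predictions per note with a start offset of 3 lines every group up with a single pitch, so that combination has an error close to zero and every other one pays for mixed groups. Very small group sizes would trivially reach zero error as well, which is presumably why the real search starts at 20 predictions per note.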

Now let's write the quantized notes as a sheet music score!

To do this, we will use two libraries: music21 and Open Sheet Music Display.

Note: for simplicity, we assume here that all notes have the same duration (a half note).

# Creating the sheet music score.
sc = music21.stream.Score()
# Adjust the speed to match the actual singing.
bpm = 60 * 60 / best_predictions_per_note
print('bpm: ', bpm)
a = music21.tempo.MetronomeMark(number=bpm)
sc.insert(0,a)

for snote in best_notes_and_rests:
  d = 'half'
  if snote == 'Rest':
    sc.append(music21.note.Rest(type=d))
  else:
    sc.append(music21.note.Note(snote, type=d))

bpm: 78.26086956521739
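The tempo formula can be checked by hand. The sketch below assumes that `best_predictions_per_note` was 46 (a value inferred from the printed bpm, not shown in the output) and that the metronome mark counts quarter notes, so a half note lasts two beats. The function names are hypothetical.

```python
def bpm_for(predictions_per_note):
    """Tempo used in the score for a given number of predictions per note."""
    return 60 * 60 / predictions_per_note


def half_note_seconds(bpm):
    """Duration of a half note (two quarter-note beats) at the given tempo."""
    return 2 * 60 / bpm


predictions_per_note = 46  # assumption: inferred from the printed bpm
bpm = bpm_for(predictions_per_note)
seconds = half_note_seconds(bpm)
print(bpm, seconds)
```

Under these assumptions each written half note lasts `predictions_per_note / 30` seconds, so the formula effectively treats the model as producing about 30 predictions per second.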

[Run this] Helper function to use Open Sheet Music Display (JS code) to show a music score

from IPython.display import display, HTML, Javascript
import json, random

def showScore(score):
  # score.write() returns the path of the MusicXML file it created.
  with open(score.write('musicxml')) as f:
    xml = f.read()
  showMusicXML(xml)

def showMusicXML(xml):
  DIV_ID = "OSMD_div"
  display(HTML('<div id="'+DIV_ID+'">loading OpenSheetMusicDisplay</div>'))
  script = """
  var div_id = %%DIV_ID%%;
  function loadOSMD() {
    return new Promise(function(resolve, reject){
      if (window.opensheetmusicdisplay) {
        return resolve(window.opensheetmusicdisplay)
      }
      // OSMD script has a 'define' call which conflicts with requirejs
      var _define = window.define // save the define object
      window.define = undefined // now the loaded script will ignore requirejs
      var s = document.createElement( 'script' );
      s.setAttribute( 'src', "https://cdn.jsdelivr.net/npm/opensheetmusicdisplay@0.7.6/build/opensheetmusicdisplay.min.js" );
      //s.setAttribute( 'src', "/custom/opensheetmusicdisplay.js" );
      s.onload=function(){
        window.define = _define
        resolve(opensheetmusicdisplay);
      };
      document.body.appendChild( s ); // browser will try to load the new script tag
    })
  }
  loadOSMD().then((OSMD)=>{
    window.openSheetMusicDisplay = new OSMD.OpenSheetMusicDisplay(div_id, {
      drawingParameters: "compacttight"
    });
    openSheetMusicDisplay
      .load(%%data%%)
      .then(
        function() {
          openSheetMusicDisplay.render();
        }
      );
  })
  """.replace('%%DIV_ID%%',DIV_ID).replace('%%data%%',json.dumps(xml))
  display(Javascript(script))
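The helper passes the MusicXML to the browser by substituting `json.dumps(xml)` into the script text. The sketch below illustrates why that works, using a hypothetical `fill_template` function and a made-up XML snippet: `json.dumps` returns a quoted string with quotes and newlines escaped, which JavaScript can read as an ordinary string literal.

```python
import json


def fill_template(template, values):
    """Replace each %%name%% placeholder with a JSON-encoded value."""
    for name, value in values.items():
        template = template.replace("%%" + name + "%%", json.dumps(value))
    return template


# Made-up snippet containing double quotes and line breaks.
xml = '<note id="n1">\n  <pitch>C</pitch>\n</note>'
script = fill_template("render(%%data%%);", {"data": xml})
print(script)
```

Pasting the raw XML between quotes by hand would break as soon as the document contained a double quote or a line break, which is the case for any real MusicXML file.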

# rendering the music score

showScore(sc)

print(best_notes_and_rests)

/tmpfs/src/tf_docs_env/lib/python3.9/site-packages/music21/musicxml/m21ToXml.py:510: MusicXMLWarning: <music21.stream.Score 0x7f33ea3fa550> is not well-formed; see isWellFormedNotation()

warnings.warn(f'{scOut} is not well-formed; see isWellFormedNotation()',

<IPython.core.display.Javascript object>
['C3', 'D3', 'E3', 'F3', 'G3', 'A3', 'B3', 'C4']
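A quick sanity check on the printed list is to look at the intervals between consecutive notes. The sketch below is an illustration only: `midi_number` is a hypothetical helper, it assumes the common convention that C4 is MIDI note 60, and it only handles single-digit octaves.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_number(note):
    """Map a name such as 'C#3' to a MIDI number, taking C4 as 60."""
    name, octave = note[:-1], int(note[-1])
    return 12 * (octave + 1) + NOTE_NAMES.index(name)


notes = ['C3', 'D3', 'E3', 'F3', 'G3', 'A3', 'B3', 'C4']
numbers = [midi_number(n) for n in notes]
# Semitone steps between neighbouring notes.
steps = [b - a for a, b in zip(numbers, numbers[1:])]
print(numbers)
print(steps)
```

The steps come out as whole, whole, half, whole, whole, whole, half, which is the pattern of a major scale, so the recognized sequence is a C major scale from C3 to C4.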

Let's convert the notes to a MIDI file and listen to it.

To create this file, we can use the stream we created earlier.

# Saving the recognized musical notes as a MIDI file

converted_audio_file_as_midi = converted_audio_file[:-4] + '.mid'

fp = sc.write('midi', fp=converted_audio_file_as_midi)

wav_from_created_midi = converted_audio_file_as_midi.replace(' ', '_') + "_midioutput.wav"

print(wav_from_created_midi)

converted_audio_file.mid_midioutput.wav
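The slice `converted_audio_file[:-4]` relies on the extension being exactly four characters long (such as `.wav`). As a hedged alternative, the sketch below derives the same two names with `pathlib`; the function name `midi_and_wav_names` is hypothetical.

```python
from pathlib import Path


def midi_and_wav_names(audio_path):
    """Return the MIDI file name and the name of the WAV rendered from it."""
    # with_suffix swaps the extension whatever its length (.wav, .flac, ...).
    midi = str(Path(audio_path).with_suffix(".mid"))
    wav = midi.replace(" ", "_") + "_midioutput.wav"
    return midi, wav


midi_name, wav_name = midi_and_wav_names("converted_audio_file.wav")
print(midi_name)
print(wav_name)
```

For the file used in this Colab the result matches the names printed above; the difference only shows up for inputs whose extension is not three letters long.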

To listen to it in Colab, we need to convert it back to wav. An easy way to do that is to use TiMidity.

timidity $converted_audio_file_as_midi -Ow -o $wav_from_created_midi

Playing converted_audio_file.mid
MIDI file: converted_audio_file.mid
Format: 1 Tracks: 2 Divisions: 1024
Track name:
Playing time: ~16 seconds
Notes cut: 0
Notes lost totally: 0

Finally, let's listen to the audio that was created from the notes, which were in turn created via MIDI from the pitches predicted by the model!

Audio(wav_from_created_midi)