
Overview

In this codelab you'll train a simple image classification model on the CIFAR-10 dataset. You'll then run a "membership inference attack" against this model to assess whether an attacker can "guess" if a particular sample was present in the training set. You will use the TF Privacy Report to visualize results from multiple models and model checkpoints.

Setup

import numpy as np

from typing import Tuple

from scipy import special

from sklearn import metrics

import tensorflow as tf

import tensorflow_datasets as tfds

# Set verbosity.

tf.compat.v1.logging.set_verbosity(tf.compat.v1.logging.ERROR)

from sklearn.exceptions import ConvergenceWarning

import warnings

warnings.simplefilter(action="ignore", category=ConvergenceWarning)

warnings.simplefilter(action="ignore", category=FutureWarning)


Install TensorFlow Privacy.

pip install tensorflow_privacy

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack import membership_inference_attack as mia

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import AttackInputData

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import AttackResultsCollection

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import AttackType

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import PrivacyMetric

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import PrivacyReportMetadata

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack.data_structures import SlicingSpec

from tensorflow_privacy.privacy.privacy_tests.membership_inference_attack import privacy_report

import tensorflow_privacy

Train two models, with privacy metrics

This section trains a pair of keras.Model classifiers on the CIFAR-10 dataset. During training it collects privacy metrics, which will be used to generate reports in the next section.

The first step is to define some hyperparameters:

dataset = 'cifar10'
num_classes = 10
activation = 'relu'
num_conv = 3
batch_size = 50
epochs_per_report = 2
total_epochs = 50
lr = 0.001
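As a quick sanity check on these values, the arithmetic below shows how many optimizer steps and privacy reports to expect. It is a sketch that assumes the standard CIFAR-10 split sizes of 50,000 training and 10,000 test images, which this notebook does not define itself. The resulting 1000 and 200 match the 1000/1000 and 200/200 progress bars in the training logs further down.

```python
# Standard CIFAR-10 split sizes (an assumption here; tfds provides the real data).
num_train_examples = 50_000
num_test_examples = 10_000

batch_size = 50
epochs_per_report = 2
total_epochs = 50

# Mirrors the full-batch assert in the data-loading code.
assert num_train_examples % batch_size == 0

steps_per_epoch = num_train_examples // batch_size     # training steps per epoch
test_predict_steps = num_test_examples // batch_size   # predict() steps on the test split
num_reports = total_epochs // epochs_per_report        # privacy reports per model

print(steps_per_epoch, test_predict_steps, num_reports)  # 1000 200 25
```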

Next, load the dataset. There's nothing privacy-specific in this code.

print('Loading the dataset.')

train_ds = tfds.as_numpy(
    tfds.load(dataset, split=tfds.Split.TRAIN, batch_size=-1))
test_ds = tfds.as_numpy(
    tfds.load(dataset, split=tfds.Split.TEST, batch_size=-1))

x_train = train_ds['image'].astype('float32') / 255.

y_train_indices = train_ds['label'][:, np.newaxis]

x_test = test_ds['image'].astype('float32') / 255.

y_test_indices = test_ds['label'][:, np.newaxis]

# Convert class vectors to binary class matrices.

y_train = tf.keras.utils.to_categorical(y_train_indices, num_classes)

y_test = tf.keras.utils.to_categorical(y_test_indices, num_classes)

input_shape = x_train.shape[1:]

assert x_train.shape[0] % batch_size == 0, "The tensorflow_privacy optimizer doesn't handle partial batches"

Loading the dataset.
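For reference, the label preparation can be mirrored in plain NumPy. This sketch assumes integer class labels of shape (N, 1), as produced by [:, np.newaxis] above. For such input it should give the same float32 one-hot matrix as tf.keras.utils.to_categorical. It also shows the remainder that the full-batch assert guards against. The labels and the partial_batch_size helper are hypothetical illustrations.

```python
import numpy as np

num_classes = 10
batch_size = 50

# Hypothetical integer labels shaped (N, 1), like y_train_indices above.
y_indices = np.array([[3], [0], [9], [3]])

# One-hot encode: row i of the identity matrix is the one-hot vector for class i.
y_one_hot = np.eye(num_classes, dtype='float32')[y_indices[:, 0]]

print(y_one_hot.shape)        # (4, 10)
print(y_one_hot[0].argmax())  # 3

def partial_batch_size(num_examples: int, batch_size: int) -> int:
  """Number of examples left over for a final, partial batch (hypothetical helper)."""
  return num_examples % batch_size

print(partial_batch_size(50_000, batch_size))  # 0: the CIFAR-10 train split divides evenly
```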

Next define a function to build the models.

def small_cnn(input_shape: Tuple[int, ...],
              num_classes: int,
              num_conv: int,
              activation: str = 'relu') -> tf.keras.models.Sequential:
  """Set up a small CNN for image classification.

  Args:
    input_shape: Integer tuple for the shape of the images.
    num_classes: Number of prediction classes.
    num_conv: Number of convolutional layers.
    activation: The activation function to use for conv and dense layers.

  Returns:
    The Keras model.
  """
  model = tf.keras.models.Sequential()
  model.add(tf.keras.layers.Input(shape=input_shape))

  # Conv layers: each 3x3 convolution is followed by 2x2 max pooling.
  for _ in range(num_conv):
    model.add(tf.keras.layers.Conv2D(32, (3, 3), activation=activation))
    model.add(tf.keras.layers.MaxPooling2D())

  model.add(tf.keras.layers.Flatten())
  model.add(tf.keras.layers.Dense(64, activation=activation))
  # Output raw logits; the loss applies the softmax (from_logits=True).
  model.add(tf.keras.layers.Dense(num_classes))

  model.compile(
      loss=tf.keras.losses.CategoricalCrossentropy(from_logits=True),
      # Uses the module-level lr hyperparameter defined above.
      optimizer=tf.keras.optimizers.Adam(learning_rate=lr),
      metrics=['accuracy'])

  return model
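To see how num_conv changes the network, the sketch below traces the spatial size and trainable-parameter count by hand for a 32x32x3 CIFAR-10 input. It assumes the Keras defaults ('valid' padding for Conv2D and a 2x2 pool for MaxPooling2D) together with the layer sizes used in small_cnn. Calling model.summary() on the real models remains the authoritative check. The helper names are hypothetical.

```python
def conv_block_output(size: int, kernel: int = 3, pool: int = 2) -> int:
  """Spatial size after a 'valid' conv followed by non-overlapping max pooling."""
  size = size - kernel + 1  # 'valid' padding shrinks each spatial dim by kernel - 1
  return size // pool       # 2x2 pooling halves the size, rounding down

def small_cnn_shape_and_params(num_conv: int, input_hw: int = 32, channels: int = 3,
                               filters: int = 32, dense_units: int = 64,
                               num_classes: int = 10):
  """Returns (final spatial size, Flatten() width, trainable parameter count)."""
  hw, in_ch, params = input_hw, channels, 0
  for _ in range(num_conv):
    params += (3 * 3 * in_ch + 1) * filters  # 3x3 kernels plus one bias per filter
    in_ch = filters
    hw = conv_block_output(hw)
  flat = hw * hw * filters                           # size of the Flatten() output
  params += flat * dense_units + dense_units         # Dense(64)
  params += dense_units * num_classes + num_classes  # Dense(num_classes)
  return hw, flat, params

for n in (2, 3):
  print(n, small_cnn_shape_and_params(n))
# 2 (6, 1152, 84586)
# 3 (2, 128, 28298)
```

The extra conv-and-pool stage shrinks the Flatten() output from 1152 to 128 values. As a result, the three-layer model has roughly a third as many parameters as the two-layer one, and most of the two-layer model's weights sit in its first Dense layer.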

Build two CNN models with that function: one with two convolutional layers and one with three.

Both models use the same Adam optimizer and hyperparameters. Only the number of convolutional layers differs, so you can compare the results.

model_2layers = small_cnn(
    input_shape, num_classes, num_conv=2, activation=activation)
model_3layers = small_cnn(
    input_shape, num_classes, num_conv=3, activation=activation)

Define a callback to collect privacy metrics

Next, define a keras.callbacks.Callback that periodically runs some privacy attacks against the model and logs the results.

The Keras fit method calls the callback's on_epoch_end method after each training epoch. Its epoch argument is the 0-based epoch number, so the callback adds 1 before checking whether a report is due.

You could also implement this procedure by writing a loop that repeatedly calls Model.fit(..., epochs=epochs_per_report) and then runs the attack code. A callback is used here simply because it cleanly separates the training logic from the privacy evaluation logic.
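The loop-based alternative might look roughly like the sketch below. It relies on Model.fit's initial_epoch argument so that epoch numbering continues across calls; with initial_epoch set, Keras treats epochs as the index of the final epoch. StubModel and run_privacy_attacks are hypothetical stand-ins, not part of Keras or TF Privacy. They let the control flow run without training anything. A real call would also pass batch_size and validation_data.

```python
from typing import Callable, List, Tuple

def train_with_periodic_reports(model, x, y, total_epochs: int, epochs_per_report: int,
                                run_privacy_attacks: Callable[[object, int], object]) -> List[object]:
  """Alternates chunks of training with privacy evaluation."""
  results = []
  for start in range(0, total_epochs, epochs_per_report):
    end = min(start + epochs_per_report, total_epochs)
    # Train epochs start..end-1 (0-based); `end` is the 1-based epoch count so far.
    model.fit(x, y, initial_epoch=start, epochs=end, verbose=0)
    results.append(run_privacy_attacks(model, end))
  return results

class StubModel:
  """Hypothetical stand-in that records the epoch ranges it was asked to train."""

  def __init__(self):
    self.calls: List[Tuple[int, int]] = []

  def fit(self, x, y, initial_epoch=0, epochs=1, verbose=0):
    self.calls.append((initial_epoch, epochs))

stub = StubModel()
reports = train_with_periodic_reports(
    stub, x=None, y=None, total_epochs=50, epochs_per_report=2,
    run_privacy_attacks=lambda m, epoch: epoch)

print(stub.calls[:3])             # [(0, 2), (2, 4), (4, 6)]
print(reports[:3], len(reports))  # [2, 4, 6] 25
```

This produces the same schedule as the callback: reports after epochs 2, 4, ..., 50.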

class PrivacyMetrics(tf.keras.callbacks.Callback):
  def __init__(self, epochs_per_report, model_name):
    super().__init__()
    self.epochs_per_report = epochs_per_report
    self.model_name = model_name
    self.attack_results = []

  def on_epoch_end(self, epoch, logs=None):
    # Keras passes a 0-based epoch index; convert it to a 1-based count.
    epoch = epoch + 1

    if epoch % self.epochs_per_report != 0:
      return

    print(f'\nRunning privacy report for epoch: {epoch}\n')

    logits_train = self.model.predict(x_train, batch_size=batch_size)
    logits_test = self.model.predict(x_test, batch_size=batch_size)

    prob_train = special.softmax(logits_train, axis=1)
    prob_test = special.softmax(logits_test, axis=1)

    # Add metadata to generate a privacy report.
    privacy_report_metadata = PrivacyReportMetadata(
        # Show the validation accuracy on the plot.
        # The report plots whatever is passed as accuracy_train.
        accuracy_train=logs['val_accuracy'],
        accuracy_test=logs['val_accuracy'],
        epoch_num=epoch,
        model_variant_label=self.model_name)

    attack_results = mia.run_attacks(
        AttackInputData(
            labels_train=y_train_indices[:, 0],
            labels_test=y_test_indices[:, 0],
            probs_train=prob_train,
            probs_test=prob_test),
        SlicingSpec(entire_dataset=True, by_class=True),
        attack_types=(AttackType.THRESHOLD_ATTACK,
                      AttackType.LOGISTIC_REGRESSION),
        privacy_report_metadata=privacy_report_metadata)

    self.attack_results.append(attack_results)
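The intuition behind a threshold attack is that a model tends to assign lower loss to examples it was trained on. The NumPy sketch below illustrates a loss-threshold attack scored by ROC AUC, starting from logits as the callback does. It is a simplified illustration in that spirit, not TF Privacy's implementation; the library also slices the data and runs other attack types, such as logistic regression. An AUC near 0.5 means the attacker does no better than chance, while an AUC near 1.0 means training membership is easy to infer. All function names here are hypothetical.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
  # Subtract the row max for numerical stability before exponentiating.
  z = logits - logits.max(axis=1, keepdims=True)
  e = np.exp(z)
  return e / e.sum(axis=1, keepdims=True)

def per_example_loss(probs: np.ndarray, labels: np.ndarray) -> np.ndarray:
  # Cross-entropy of the true class: -log p(label | x).
  p_true = probs[np.arange(len(labels)), labels]
  return -np.log(np.clip(p_true, 1e-12, 1.0))

def auc_members_vs_nonmembers(member_scores, nonmember_scores) -> float:
  """ROC AUC via the Mann-Whitney U statistic, using average ranks for ties."""
  scores = np.concatenate([member_scores, nonmember_scores])
  order = np.argsort(scores, kind='mergesort')
  sorted_scores = scores[order]
  _, first, counts = np.unique(sorted_scores, return_index=True, return_counts=True)
  ranks = np.empty(len(scores))
  ranks[order] = np.repeat(first + (counts + 1) / 2.0, counts)  # 1-based average ranks
  n_m, n_n = len(member_scores), len(nonmember_scores)
  u = ranks[:n_m].sum() - n_m * (n_m + 1) / 2.0
  return u / (n_m * n_n)

def loss_threshold_attack_auc(logits_train, labels_train, logits_test, labels_test) -> float:
  loss_train = per_example_loss(softmax(logits_train), labels_train)
  loss_test = per_example_loss(softmax(logits_test), labels_test)
  # Lower loss suggests "member", so score each example by its negative loss.
  return auc_members_vs_nonmembers(-loss_train, -loss_test)

# Toy data: "training" examples are confidently correct, "test" examples are not.
labels = np.array([0, 1, 0, 1])
confident = np.array([[4.0, 0.0], [0.0, 4.0], [4.0, 0.0], [0.0, 4.0]])
uncertain = np.array([[0.5, 0.0], [0.0, 0.5], [0.0, 0.5], [0.5, 0.0]])
print(loss_threshold_attack_auc(confident, labels, uncertain, labels))  # 1.0
```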

Train the models

The next code block trains the two models. The all_reports list collects the results from both models' training runs. Each report is tagged with its model_name, so there's no confusion about which model generated which report.

all_reports = []

callback = PrivacyMetrics(epochs_per_report, "2 Layers")
history = model_2layers.fit(
    x_train,
    y_train,
    batch_size=batch_size,
    epochs=total_epochs,
    validation_data=(x_test, y_test),
    callbacks=[callback],
    shuffle=True)

all_reports.extend(callback.attack_results)

Epoch 1/50 - loss: 1.5649 - accuracy: 0.4351 - val_loss: 1.2904 - val_accuracy: 0.5383
Epoch 2/50 - loss: 1.2357 - accuracy: 0.5652 - val_loss: 1.2187 - val_accuracy: 0.5630
Epoch 3/50 - loss: 1.1003 - accuracy: 0.6162 - val_loss: 1.0723 - val_accuracy: 0.6251
Epoch 4/50 - loss: 1.0172 - accuracy: 0.6451 - val_loss: 1.0015 - val_accuracy: 0.6496
Epoch 5/50 - loss: 0.9590 - accuracy: 0.6676 - val_loss: 1.0388 - val_accuracy: 0.6423
Epoch 6/50 - loss: 0.9153 - accuracy: 0.6836 - val_loss: 0.9783 - val_accuracy: 0.6641
Epoch 7/50 - loss: 0.8771 - accuracy: 0.6975 - val_loss: 0.9397 - val_accuracy: 0.6778
Epoch 8/50 - loss: 0.8452 - accuracy: 0.7051 - val_loss: 0.9455 - val_accuracy: 0.6803
Epoch 9/50 - loss: 0.8066 - accuracy: 0.7198 - val_loss: 0.9285 - val_accuracy: 0.6818
Epoch 10/50 - loss: 0.7843 - accuracy: 0.7264 - val_loss: 0.9228 - val_accuracy: 0.6852
Epoch 11/50 - loss: 0.7545 - accuracy: 0.7370 - val_loss: 0.9160 - val_accuracy: 0.6894
Epoch 12/50 - loss: 0.7280 - accuracy: 0.7468 - val_loss: 0.8930 - val_accuracy: 0.7064
Epoch 13/50 - loss: 0.7038 - accuracy: 0.7532 - val_loss: 0.9070 - val_accuracy: 0.6988
Epoch 14/50 - loss: 0.6826 - accuracy: 0.7613 - val_loss: 0.9246 - val_accuracy: 0.6932
Epoch 15/50 - loss: 0.6600 - accuracy: 0.7696 - val_loss: 0.9641 - val_accuracy: 0.6936
Epoch 16/50 - loss: 0.6447 - accuracy: 0.7760 - val_loss: 0.9312 - val_accuracy: 0.7003
Epoch 17/50 - loss: 0.6262 - accuracy: 0.7814 - val_loss: 0.9573 - val_accuracy: 0.6950
Epoch 18/50 - loss: 0.6082 - accuracy: 0.7868 - val_loss: 0.9419 - val_accuracy: 0.7011
Epoch 19/50 - loss: 0.5935 - accuracy: 0.7921 - val_loss: 0.9571 - val_accuracy: 0.6925
Epoch 20/50 - loss: 0.5743 - accuracy: 0.7995 - val_loss: 0.9609 - val_accuracy: 0.6989
Epoch 21/50 - loss: 0.5621 - accuracy: 0.8033 - val_loss: 0.9695 - val_accuracy: 0.6963
Epoch 22/50 - loss: 0.5457 - accuracy: 0.8093 - val_loss: 0.9815 - val_accuracy: 0.6956
Epoch 23/50 - loss: 0.5383 - accuracy: 0.8110 - val_loss: 0.9856 - val_accuracy: 0.6919
Epoch 24/50 - loss: 0.5219 - accuracy: 0.8162 - val_loss: 1.0300 - val_accuracy: 0.6919
Epoch 25/50 - loss: 0.5085 - accuracy: 0.8195 - val_loss: 1.0299 - val_accuracy: 0.6950
Epoch 26/50 - loss: 0.5001 - accuracy: 0.8234 - val_loss: 1.0387 - val_accuracy: 0.6934
Epoch 27/50 - loss: 0.4877 - accuracy: 0.8275 - val_loss: 1.0503 - val_accuracy: 0.6883
Epoch 28/50 - loss: 0.4768 - accuracy: 0.8326 - val_loss: 1.0804 - val_accuracy: 0.6926
Epoch 29/50 - loss: 0.4560 - accuracy: 0.8401 - val_loss: 1.1016 - val_accuracy: 0.6916
Epoch 30/50 - loss: 0.4512 - accuracy: 0.8405 - val_loss: 1.1585 - val_accuracy: 0.6826
Epoch 31/50 - loss: 0.4377 - accuracy: 0.8435 - val_loss: 1.1852 - val_accuracy: 0.6817
Epoch 32/50 - loss: 0.4346 - accuracy: 0.8446 - val_loss: 1.1789 - val_accuracy: 0.6828
Epoch 33/50 - loss: 0.4200 - accuracy: 0.8493 - val_loss: 1.1821 - val_accuracy: 0.6839
Epoch 34/50 - loss: 0.4103 - accuracy: 0.8532 - val_loss: 1.1683 - val_accuracy: 0.6915
Epoch 35/50 - loss: 0.3989 - accuracy: 0.8582 - val_loss: 1.2722 - val_accuracy: 0.6754
Epoch 36/50 - loss: 0.3935 - accuracy: 0.8597 - val_loss: 1.2278 - val_accuracy: 0.6824
Epoch 37/50 - loss: 0.3800 - accuracy: 0.8641 - val_loss: 1.3000 - val_accuracy: 0.6755
Epoch 38/50 - loss: 0.3742 - accuracy: 0.8655 - val_loss: 1.2690 - val_accuracy: 0.6831
Epoch 39/50 - loss: 0.3626 - accuracy: 0.8710 - val_loss: 1.3669 - val_accuracy: 0.6685
Epoch 40/50 - loss: 0.3559 - accuracy: 0.8714 - val_loss: 1.3724 - val_accuracy: 0.6762
Epoch 41/50 - loss: 0.3463 - accuracy: 0.8763 - val_loss: 1.4895 - val_accuracy: 0.6636
Epoch 42/50 - loss: 0.3326 - accuracy: 0.8808 - val_loss: 1.4031 - val_accuracy: 0.6827
Epoch 43/50 - loss: 0.3343 - accuracy: 0.8802 - val_loss: 1.3989 - val_accuracy: 0.6731
Epoch 44/50 - loss: 0.3276 - accuracy: 0.8816 - val_loss: 1.4769 - val_accuracy: 0.6752
Epoch 45/50 - loss: 0.3167 - accuracy: 0.8859 - val_loss: 1.4796 - val_accuracy: 0.6738
Epoch 46/50 - loss: 0.3104 - accuracy: 0.8899 - val_loss: 1.4881 - val_accuracy: 0.6705
Epoch 47/50 - loss: 0.3008 - accuracy: 0.8912 - val_loss: 1.5639 - val_accuracy: 0.6753
Epoch 48/50 - loss: 0.2929 - accuracy: 0.8943 - val_loss: 1.5777 - val_accuracy: 0.6676
Epoch 49/50 - loss: 0.2943 - accuracy: 0.8924 - val_loss: 1.6487 - val_accuracy: 0.6646
Epoch 50/50 - loss: 0.2795 - accuracy: 0.8981 - val_loss: 1.6146 - val_accuracy: 0.6679

callback = PrivacyMetrics(epochs_per_report, "3 Layers")
history = model_3layers.fit(
    x_train,
    y_train,
    batch_size=batch_size,
    epochs=total_epochs,
    validation_data=(x_test, y_test),
    callbacks=[callback],
    shuffle=True)

all_reports.extend(callback.attack_results)

Epoch 1/50 - loss: 1.6493 - accuracy: 0.3968 - val_loss: 1.4011 - val_accuracy: 0.4976
Epoch 2/50 - loss: 1.3302 - accuracy: 0.5236 - val_loss: 1.2646 - val_accuracy: 0.5475
Epoch 3/50 - loss: 1.2050 - accuracy: 0.5712 - val_loss: 1.1931 - val_accuracy: 0.5687
Epoch 4/50 - loss: 1.1279 - accuracy: 0.6006 - val_loss: 1.1270 - val_accuracy: 0.6036
Epoch 5/50 - loss: 1.0594 - accuracy: 0.6287 - val_loss: 1.0538 - val_accuracy: 0.6290
Epoch 6/50 - loss: 1.0090 - accuracy: 0.6466 - val_loss: 1.0629 - val_accuracy: 0.6370
Epoch 7/50 - loss: 0.9690 - accuracy: 0.6632 - val_loss: 1.0139 - val_accuracy: 0.6395
Epoch 8/50 - loss: 0.9303 - accuracy: 0.6737 - val_loss: 0.9682 - val_accuracy: 0.6622
Epoch 9/50 - loss: 0.9035 - accuracy: 0.6831 - val_loss: 1.0037 - val_accuracy: 0.6497
Epoch 10/50 - loss: 0.8712 - accuracy: 0.6971 - val_loss: 0.9455 - val_accuracy: 0.6727
Epoch 11/50 - loss: 0.8457 - accuracy: 0.7061 - val_loss: 0.9383 - val_accuracy: 0.6731
Epoch 12/50 - loss: 0.8277 - accuracy: 0.7107 - val_loss: 0.9382 - val_accuracy: 0.6737
Epoch 13/50 - loss: 0.8013 - accuracy: 0.7194 - val_loss: 0.9203 - val_accuracy: 0.6827
Epoch 14/50 - loss: 0.7849 - accuracy: 0.7259 - val_loss: 0.9031 - val_accuracy: 0.6917
Epoch 15/50 - loss: 0.7728 - accuracy: 0.7297 - val_loss: 0.9353 - val_accuracy: 0.6772
Epoch 16/50 - loss: 0.7504 - accuracy: 0.7377 - val_loss: 0.8779 - val_accuracy: 0.7059
Epoch 17/50 - loss: 0.7352 - accuracy: 0.7434 - val_loss: 0.8919 - val_accuracy: 0.6940
Epoch 18/50 - loss: 0.7237 - accuracy: 0.7459 - val_loss: 0.8733 - val_accuracy: 0.7102
Epoch 19/50 - loss: 0.7058 - accuracy: 0.7508 - val_loss: 0.8981 - val_accuracy: 0.6971
Epoch 20/50 - loss: 0.6964 - accuracy: 0.7545 - val_loss: 0.8978 - val_accuracy: 0.6985
Epoch 21/50 - loss: 0.6821 - accuracy: 0.7609 - val_loss: 0.9203 - val_accuracy: 0.6953
Epoch 22/50 - loss: 0.6712 - accuracy: 0.7612 - val_loss: 0.8934 - val_accuracy: 0.7026
Epoch 23/50 - loss: 0.6609 - accuracy: 0.7691 - val_loss: 0.8827 - val_accuracy: 0.7083
Epoch 24/50 - loss: 0.6497 - accuracy: 0.7715 - val_loss: 0.9050 - val_accuracy: 0.7000
Epoch 25/50 - loss: 0.6384 - accuracy: 0.7756 - val_loss: 0.9388 - val_accuracy: 0.6930
Epoch 26/50 - loss: 0.6330 - accuracy: 0.7776 - val_loss: 0.9033 - val_accuracy: 0.7001
Epoch 27/50 - loss: 0.6236 - accuracy: 0.7811 - val_loss: 0.8921 - val_accuracy: 0.7045
Epoch 28/50 - loss: 0.6132 - accuracy: 0.7844 - val_loss: 0.9148 - val_accuracy: 0.7010
Epoch 29/50 - loss: 0.6057 - accuracy: 0.7846 - val_loss: 0.9259 - val_accuracy: 0.6993
Epoch 30/50 - loss: 0.5960 - accuracy: 0.7883 - val_loss: 0.9197 - val_accuracy: 0.7083
Epoch 31/50 - loss: 0.5872 - accuracy: 0.7920 - val_loss: 0.9272 - val_accuracy: 0.7102
Epoch 32/50 - loss: 0.5798 - accuracy: 0.7943 - val_loss: 0.9030 - val_accuracy: 0.7069
Epoch 33/50 - loss: 0.5740 - accuracy: 0.7965 - val_loss: 0.9242 - val_accuracy: 0.7097
Epoch 34/50 - loss: 0.5647 - accuracy: 0.8006 - val_loss: 0.9156 - val_accuracy: 0.7129
Epoch 35/50 - loss: 0.5574 - accuracy: 0.8013 - val_loss: 0.9191 - val_accuracy: 0.7082
Epoch 36/50 - loss: 0.5592 - accuracy: 0.8023 - val_loss: 0.9431 - val_accuracy: 0.7045
Epoch 37/50 - loss: 0.5490 - accuracy: 0.8067 - val_loss: 0.9823 - val_accuracy: 0.6963
Epoch 38/50 - loss: 0.5402 - accuracy: 0.8085 - val_loss: 0.9820 - val_accuracy: 0.6983
Epoch 39/50 - loss: 0.5368 - accuracy: 0.8102 - val_loss: 0.9567 - val_accuracy: 0.7085
Epoch 40/50 - loss: 0.5323 - accuracy: 0.8130 - val_loss: 0.9361 - val_accuracy: 0.7132
Epoch 41/50 - loss: 0.5299 - accuracy: 0.8123 - val_loss: 0.9987 - val_accuracy: 0.7062
Epoch 42/50 - loss: 0.5232 - accuracy: 0.8140 - val_loss: 0.9999 - val_accuracy: 0.7019
Epoch 43/50 - loss: 0.5143 - accuracy: 0.8169 - val_loss: 0.9726 - val_accuracy: 0.7089
Epoch 44/50 - loss: 0.5086 - accuracy: 0.8194 - val_loss: 1.0347 - val_accuracy: 0.6967
Epoch 45/50 - loss: 0.5071 - accuracy: 0.8188 - val_loss: 0.9906 - val_accuracy: 0.6986
Epoch 46/50 - loss: 0.4980 - accuracy: 0.8205 - val_loss: 0.9928 - val_accuracy: 0.7034
Epoch 47/50 - loss: 0.4928 - accuracy: 0.8234 - val_loss: 1.0239 - val_accuracy: 0.7011
Epoch 48/50 - loss: 0.4911 - accuracy: 0.8253 - val_loss: 1.0298 - val_accuracy: 0.6963
Epoch 49/50 - loss: 0.4884 - accuracy: 0.8270 - val_loss: 1.0199 - val_accuracy: 0.7032
Epoch 50/50 - loss: 0.4857 - accuracy: 0.8268 - val_loss: 1.0268 - val_accuracy: 0.7100

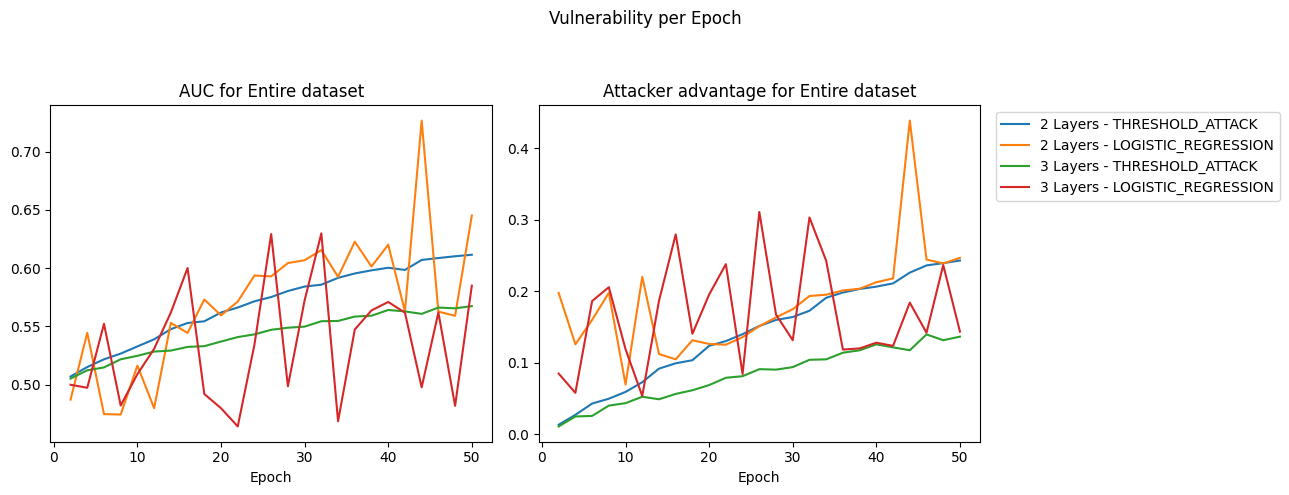

Epoch Plots

You can visualize how privacy risk evolves during training by probing the model periodically (e.g. every 5 epochs; the run above probes every 2 epochs). This lets you pick the point in time with the best performance/privacy trade-off.
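One simple way to make "best trade-off" concrete is to fix a privacy budget and take the most accurate checkpoint that stays within it. The sketch below assumes that selection rule; `pick_checkpoint`, the `(epoch, validation_accuracy, attacker_advantage)` record layout, and the numbers are all hypothetical and not part of TF Privacy.

```python
from typing import List, Optional, Tuple

# Hypothetical checkpoint record: (epoch, validation_accuracy, attacker_advantage).
Checkpoint = Tuple[int, float, float]


def pick_checkpoint(checkpoints: List[Checkpoint],
                    max_advantage: float) -> Optional[Checkpoint]:
  """Returns the most accurate checkpoint whose attacker advantage is within budget."""
  eligible = [c for c in checkpoints if c[2] <= max_advantage]
  if not eligible:
    return None  # No checkpoint satisfies the privacy budget.
  # Highest accuracy wins; on a tie, prefer the earlier (less-trained) epoch.
  return max(eligible, key=lambda c: (c[1], -c[0]))


# Placeholder numbers for illustration only, not results from this tutorial.
checkpoints = [
    (10, 0.62, 0.05),
    (20, 0.68, 0.09),
    (30, 0.70, 0.14),
    (40, 0.71, 0.21),
]
print(pick_checkpoint(checkpoints, max_advantage=0.15))  # (30, 0.7, 0.14)
```

Preferring the earlier epoch on ties reflects the observation that vulnerability tends to grow with training; other rules (for example, a weighted score) are equally reasonable.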

Use the TF Privacy Membership Inference Attack module to generate AttackResults, then combine them into an AttackResultsCollection. The TF Privacy Report is designed to analyze such a collection.
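Conceptually, an epoch plot needs the collected results grouped by model variant and ordered by epoch. The sketch below mimics only that bookkeeping with a hypothetical `EpochResult` record; it is not the TF Privacy data structure (the real AttackResultsCollection and its report metadata carry more fields, such as several metrics and attack types).

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class EpochResult:
  """Hypothetical, simplified record: one attack result plus its metadata."""
  model_variant: str
  epoch: int
  auc: float


def series_by_variant(
    results: List[EpochResult]) -> Dict[str, List[Tuple[int, float]]]:
  """Groups results into one (epoch, AUC) series per model variant, sorted by epoch."""
  series: Dict[str, List[Tuple[int, float]]] = defaultdict(list)
  for r in results:
    series[r.model_variant].append((r.epoch, r.auc))
  return {variant: sorted(points) for variant, points in series.items()}


# Placeholder values for illustration only.
collected = [
    EpochResult('two layer', 4, 0.60),
    EpochResult('two layer', 2, 0.55),
    EpochResult('three layer', 2, 0.52),
]
print(series_by_variant(collected))
# {'two layer': [(2, 0.55), (4, 0.6)], 'three layer': [(2, 0.52)]}
```

Each resulting series corresponds to one line on an epoch plot.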

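# Combine the AttackResults gathered during training into a single collection.

results = AttackResultsCollection(all_reports)

privacy_metrics = (PrivacyMetric.AUC, PrivacyMetric.ATTACKER_ADVANTAGE)

# Plot each selected privacy metric against the training epoch.

epoch_plot = privacy_report.plot_by_epochs(

    results, privacy_metrics=privacy_metrics)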
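The two metrics requested through `privacy_metrics` both summarize an attack's ROC curve: AUC is the area under it, and attacker advantage is the largest gap between true- and false-positive rates, max |TPR - FPR|. The numpy sketch below computes both for a simple loss-threshold attack. The threshold attack and the toy losses are assumptions for illustration; TF Privacy trains its own attackers and computes these metrics internally.

```python
import numpy as np


def roc_curve_for_threshold_attack(member_scores, nonmember_scores):
  """ROC points for an attack that predicts 'member' whenever score >= threshold."""
  member_scores = np.asarray(member_scores, dtype=float)
  nonmember_scores = np.asarray(nonmember_scores, dtype=float)
  all_scores = np.concatenate([member_scores, nonmember_scores])
  # Sweep thresholds from +inf (predict nobody) down to the lowest score (predict everyone).
  thresholds = np.concatenate([[np.inf], np.unique(all_scores)[::-1]])
  tpr = np.array([np.mean(member_scores >= t) for t in thresholds])
  fpr = np.array([np.mean(nonmember_scores >= t) for t in thresholds])
  return fpr, tpr


def auc_and_advantage(fpr, tpr):
  """Area under the ROC curve (trapezoid rule) and max |TPR - FPR|."""
  auc = float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
  advantage = float(np.max(np.abs(tpr - fpr)))
  return auc, advantage


# Hypothetical per-example losses. Lower loss suggests "seen in training",
# so the attack score is the negated loss.
train_losses = np.array([0.1, 0.2, 0.3])  # members
test_losses = np.array([0.4, 0.5, 0.25])  # non-members
fpr, tpr = roc_curve_for_threshold_attack(-train_losses, -test_losses)
auc, advantage = auc_and_advantage(fpr, tpr)
print(round(auc, 4), round(advantage, 4))  # 0.8889 0.6667
```

An AUC of 0.5 and an advantage of 0 mean the attacker does no better than chance; both rise as the model separates training examples from unseen ones.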

As a rule, privacy vulnerability tends to increase as the number of epochs goes up. This holds across model variants as well as across attacker types.

Two-layer models (with fewer convolutional layers) are generally more vulnerable than their three-layer counterparts.

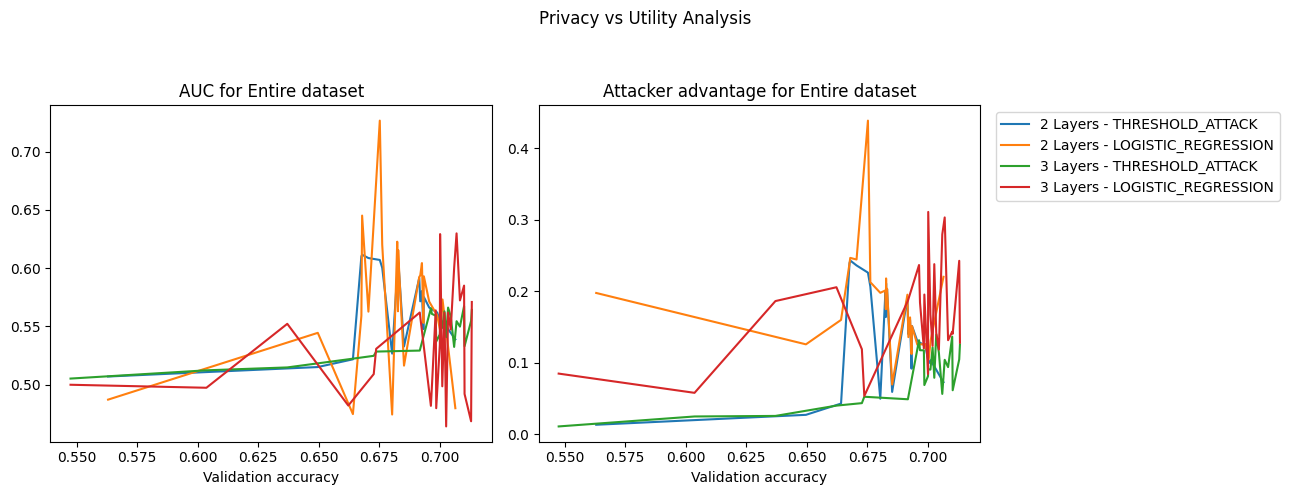

Now let's see how model performance changes with respect to privacy risk.

Privacy vs Utility

privacy_metrics = (PrivacyMetric.AUC, PrivacyMetric.ATTACKER_ADVANTAGE)

utility_privacy_plot = privacy_report.plot_privacy_vs_accuracy(

    results, privacy_metrics=privacy_metrics)

# Relabel the x-axis of every subplot in the returned figure.

for axis in utility_privacy_plot.axes:

  axis.set_xlabel('Validation accuracy')

Three-layer models (perhaps because they have too many parameters) only reach a train accuracy of 0.85. The two-layer models achieve roughly equal performance at that level of privacy risk, but their accuracy continues to improve beyond it.

You can also see that the line for two-layer models gets steeper. This means that further marginal gains in train accuracy come at the expense of much larger privacy vulnerability.
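A line that "gets steeper" means each additional unit of accuracy buys a larger increase in the privacy metric. The sketch below makes that reading concrete by computing the slope between consecutive checkpoints; the helper `marginal_privacy_cost` and the placeholder points are hypothetical, not output from the privacy report.

```python
from typing import List, Tuple


def marginal_privacy_cost(points: List[Tuple[float, float]]) -> List[float]:
  """Slopes d(privacy metric)/d(accuracy) between consecutive checkpoints.

  `points` holds (accuracy, privacy_metric) pairs and is sorted by accuracy first.
  """
  pts = sorted(points)
  slopes = []
  for (acc0, risk0), (acc1, risk1) in zip(pts, pts[1:]):
    if acc1 == acc0:
      continue  # Skip zero-width steps to avoid dividing by zero.
    slopes.append((risk1 - risk0) / (acc1 - acc0))
  return slopes


# Placeholder (accuracy, attacker advantage) pairs for one hypothetical model variant.
two_layer = [(0.70, 0.15), (0.60, 0.05), (0.65, 0.07)]
print([round(s, 2) for s in marginal_privacy_cost(two_layer)])  # [0.4, 1.6]
```

Growing slopes (0.4, then 1.6 in this toy case) are exactly what a steepening curve on the privacy-vs-utility plot looks like.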

This is the end of the tutorial. Feel free to analyze your own results.