TensorBoard can be used directly within notebook experiences such as Colab and Jupyter. This can be helpful for sharing results, integrating TensorBoard into existing workflows, and using TensorBoard without installing anything locally.

Setup

Start by installing TF 2.0 and loading the TensorBoard notebook extension:

For Jupyter users: If you’ve installed Jupyter and TensorBoard into the same virtualenv, then you should be good to go. If you’re using a more complicated setup, like a global Jupyter installation and kernels for different Conda/virtualenv environments, then you must ensure that the tensorboard binary is on your PATH inside the Jupyter notebook context. One way to do this is to modify the kernel_spec to prepend the environment’s bin directory to PATH, as described here.
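One way to picture the kernel_spec change is as an edit to the `env` entry of the kernel's kernel.json file. The following is a minimal sketch, assuming the kernel spec has already been loaded as a dictionary. The helper name `prepend_bin_to_path`, the example spec, and the directory paths are hypothetical, and the PATH value is computed when the spec is edited rather than when the kernel starts.

```python
import copy
import os


def prepend_bin_to_path(kernel_spec, bin_dir, current_path=None):
    """Return a copy of a kernel spec dict whose env PATH starts with bin_dir."""
    if current_path is None:
        current_path = os.environ.get("PATH", "")
    updated = copy.deepcopy(kernel_spec)
    env = updated.setdefault("env", {})
    # Put the environment's bin directory first so its tensorboard binary wins.
    env["PATH"] = os.pathsep.join(p for p in (bin_dir, current_path) if p)
    return updated


# Hypothetical kernel spec and environment location, for illustration only.
spec = {
    "argv": ["python", "-m", "ipykernel_launcher", "-f", "{connection_file}"],
    "display_name": "Python (my-env)",
    "language": "python",
}
new_spec = prepend_bin_to_path(spec, "/opt/envs/my-env/bin", "/usr/bin")
print(new_spec["env"]["PATH"])
```

The updated dictionary would then be written back to that kernel's kernel.json. Running `shutil.which("tensorboard")` in a notebook cell afterwards is a quick way to check that the binary resolves.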

For Docker users: If you are running a Docker image of the Jupyter Notebook server using TensorFlow's nightly build, you must expose not only the notebook's port but also TensorBoard's port. Run the container with the following command:

docker run -it -p 8888:8888 -p 6006:6006 \
    tensorflow/tensorflow:nightly-py3-jupyter

Here, -p 6006:6006 publishes port 6006, the default port of TensorBoard. This allocates one port, which is enough to run a single TensorBoard instance. To run concurrent instances, allocate more ports. Also, pass --bind_all to %tensorboard to expose the port outside the container.
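To make the "allocate more ports" step concrete, here is a small sketch that builds the docker run argument list with one published port per TensorBoard instance. The helper `docker_run_args` is hypothetical, and the use of consecutive ports starting at 6006 is an assumption; each instance would then need to be started on one of the published ports, for example by passing --port to %tensorboard.

```python
def docker_run_args(image, notebook_port=8888, first_tb_port=6006, tb_instances=1):
    """Build a `docker run` argument list publishing one port per TensorBoard instance."""
    args = ["docker", "run", "-it", "-p", f"{notebook_port}:{notebook_port}"]
    for offset in range(tb_instances):
        port = first_tb_port + offset
        # Publish the same port number on the host and in the container.
        args += ["-p", f"{port}:{port}"]
    args.append(image)
    return args


cmd = docker_run_args("tensorflow/tensorflow:nightly-py3-jupyter", tb_instances=3)
print(" ".join(cmd))
```

With `tb_instances=1` the list matches the command shown above.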

# Load the TensorBoard notebook extension
%load_ext tensorboard

Import TensorFlow, datetime, and os:

import tensorflow as tf
import datetime, os

TensorBoard in notebooks

Download the FashionMNIST dataset and scale it:

fashion_mnist = tf.keras.datasets.fashion_mnist

(x_train, y_train),(x_test, y_test) = fashion_mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz
32768/29515 [=================================] - 0s 0us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz
26427392/26421880 [==============================] - 0s 0us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz
8192/5148 [===============================================] - 0s 0us/step
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz
4423680/4422102 [==============================] - 0s 0us/step
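The scaling step divides 8-bit pixel values by 255.0 so that they fall in the range 0 to 1. A minimal sketch of the effect, using a tiny made-up array in place of the real images (the values below are illustrative, not Fashion-MNIST data):

```python
import numpy as np

# Stand-in for a tiny batch of 8-bit grayscale pixels (not real dataset values).
images = np.array([[0, 128, 255]], dtype=np.uint8)

# Dividing by a Python float promotes the result to floating point.
scaled = images / 255.0
print(scaled.dtype, scaled.min(), scaled.max())
```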

Create a very simple model:

def create_model():
  return tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28), name='layers_flatten'),
    tf.keras.layers.Dense(512, activation='relu', name='layers_dense'),
    tf.keras.layers.Dropout(0.2, name='layers_dropout'),
    tf.keras.layers.Dense(10, activation='softmax', name='layers_dense_2')
  ])
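As a quick sanity check on the size of this model, the trainable parameter count can be worked out by hand: a dense layer has one weight per input-unit pair plus one bias per unit, while the Flatten and Dropout layers have no parameters. The helper `dense_params` below is a hypothetical name used only for this arithmetic.

```python
def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units


flatten_outputs = 28 * 28                     # Flatten turns 28x28 into 784 values
hidden = dense_params(flatten_outputs, 512)   # layers_dense
output = dense_params(512, 10)                # layers_dense_2
total = hidden + output
print(hidden, output, total)  # 401920 5130 407050
```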

Train the model using Keras and the TensorBoard callback:

def train_model():

  model = create_model()
  model.compile(optimizer='adam',
                loss='sparse_categorical_crossentropy',
                metrics=['accuracy'])

  logdir = os.path.join("logs", datetime.datetime.now().strftime("%Y%m%d-%H%M%S"))
  tensorboard_callback = tf.keras.callbacks.TensorBoard(logdir, histogram_freq=1)

  model.fit(x=x_train,
            y=y_train,
            epochs=5,
            validation_data=(x_test, y_test),
            callbacks=[tensorboard_callback])

train_model()

Train on 60000 samples, validate on 10000 samples
Epoch 1/5
60000/60000 [==============================] - 11s 182us/sample - loss: 0.4976 - accuracy: 0.8204 - val_loss: 0.4143 - val_accuracy: 0.8538
Epoch 2/5
60000/60000 [==============================] - 10s 174us/sample - loss: 0.3845 - accuracy: 0.8588 - val_loss: 0.3855 - val_accuracy: 0.8626
Epoch 3/5
60000/60000 [==============================] - 10s 175us/sample - loss: 0.3513 - accuracy: 0.8705 - val_loss: 0.3740 - val_accuracy: 0.8607
Epoch 4/5
60000/60000 [==============================] - 11s 177us/sample - loss: 0.3287 - accuracy: 0.8793 - val_loss: 0.3596 - val_accuracy: 0.8719
Epoch 5/5
60000/60000 [==============================] - 11s 178us/sample - loss: 0.3153 - accuracy: 0.8825 - val_loss: 0.3360 - val_accuracy: 0.8782
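Because train_model builds its log directory from the current time, every call writes to its own subdirectory under logs, and TensorBoard pointed at logs can show each one as a separate run. The sketch below isolates that naming step; the helper `run_logdir` and the fixed timestamps are hypothetical, chosen only to make the result reproducible.

```python
import datetime
import os


def run_logdir(root="logs", now=None):
    """Return a per-run log directory named after the given (or current) time."""
    now = now or datetime.datetime.now()
    return os.path.join(root, now.strftime("%Y%m%d-%H%M%S"))


# Two made-up start times, a few minutes apart.
first = run_logdir(now=datetime.datetime(2024, 1, 2, 3, 4, 5))
second = run_logdir(now=datetime.datetime(2024, 1, 2, 3, 9, 30))
print(first)
print(second)
```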

Start TensorBoard within the notebook using magics:

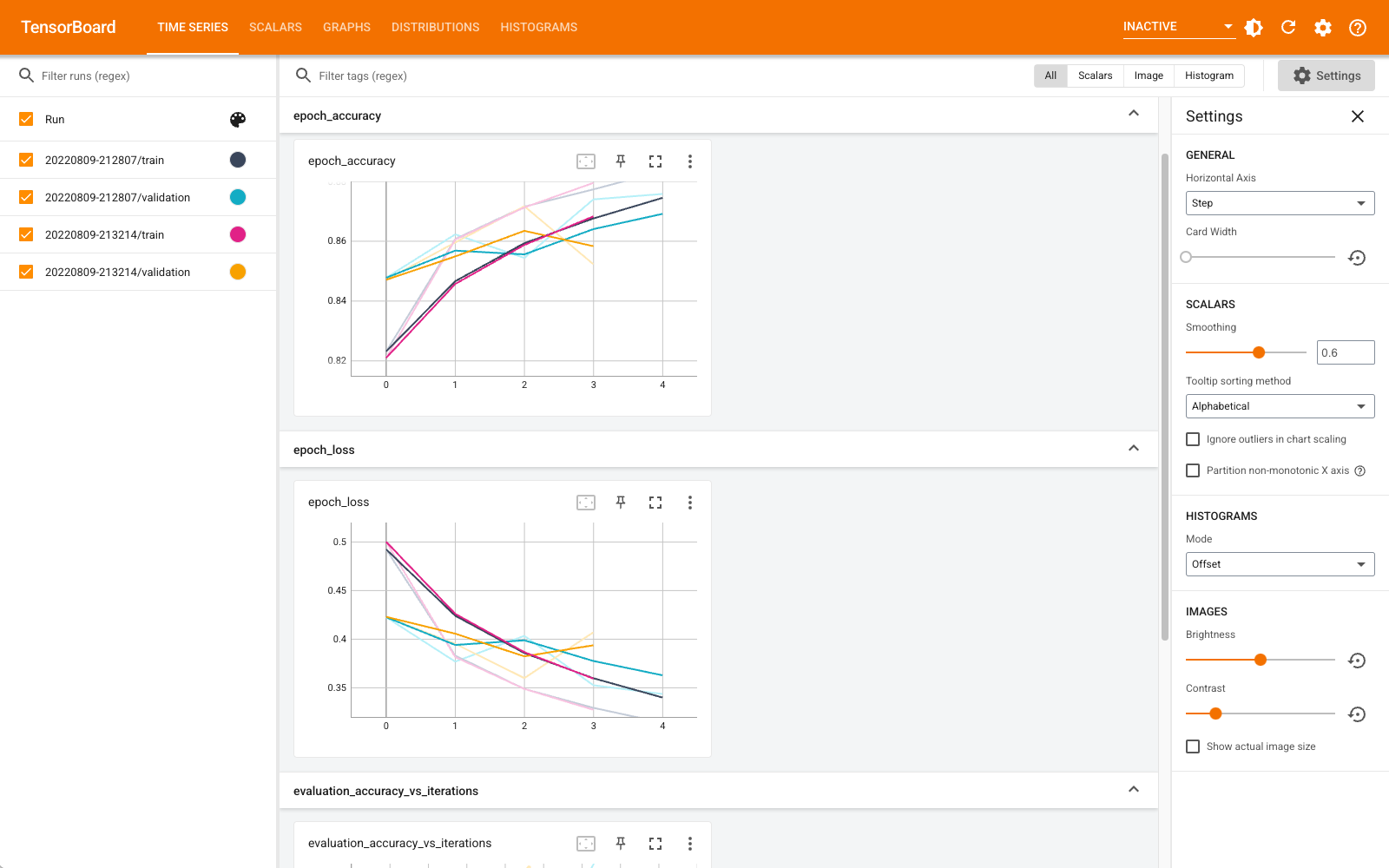

%tensorboard --logdir logs

You can now view dashboards such as Time Series, Graphs, Distributions, and others. Some dashboards are not available yet in Colab (such as the profile plugin).

The %tensorboard magic has exactly the same format as the TensorBoard command line invocation, but with a %-sign in front of it.
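The correspondence between the magic and the command line can be illustrated with a short sketch that turns a %tensorboard line into the argument list of the equivalent tensorboard command. The function `magic_to_argv` is hypothetical and only demonstrates the mapping; it is not how TensorBoard itself implements the magic.

```python
import shlex


def magic_to_argv(line):
    """Translate a `%tensorboard ...` line into the equivalent CLI argv."""
    tokens = shlex.split(line)
    if not tokens or tokens[0] != "%tensorboard":
        raise ValueError("expected a line starting with %tensorboard")
    # Drop the %-sign form of the name; the remaining flags are unchanged.
    return ["tensorboard"] + tokens[1:]


print(magic_to_argv("%tensorboard --logdir logs"))
# ['tensorboard', '--logdir', 'logs']
```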

You can also start TensorBoard before training to monitor it in progress:

%tensorboard --logdir logs

Issuing the same command reuses the same TensorBoard backend. If you choose a different logs directory, a new TensorBoard instance is opened instead. Ports are managed automatically.

Start training a new model and watch TensorBoard update automatically every 30 seconds, or refresh it with the button on the top right:

train_model()

Train on 60000 samples, validate on 10000 samples
Epoch 1/5
60000/60000 [==============================] - 11s 184us/sample - loss: 0.4968 - accuracy: 0.8223 - val_loss: 0.4216 - val_accuracy: 0.8481
Epoch 2/5
60000/60000 [==============================] - 11s 176us/sample - loss: 0.3847 - accuracy: 0.8587 - val_loss: 0.4056 - val_accuracy: 0.8545
Epoch 3/5
60000/60000 [==============================] - 11s 176us/sample - loss: 0.3495 - accuracy: 0.8727 - val_loss: 0.3600 - val_accuracy: 0.8700
Epoch 4/5
60000/60000 [==============================] - 11s 179us/sample - loss: 0.3282 - accuracy: 0.8795 - val_loss: 0.3636 - val_accuracy: 0.8694
Epoch 5/5
60000/60000 [==============================] - 11s 176us/sample - loss: 0.3115 - accuracy: 0.8839 - val_loss: 0.3438 - val_accuracy: 0.8764

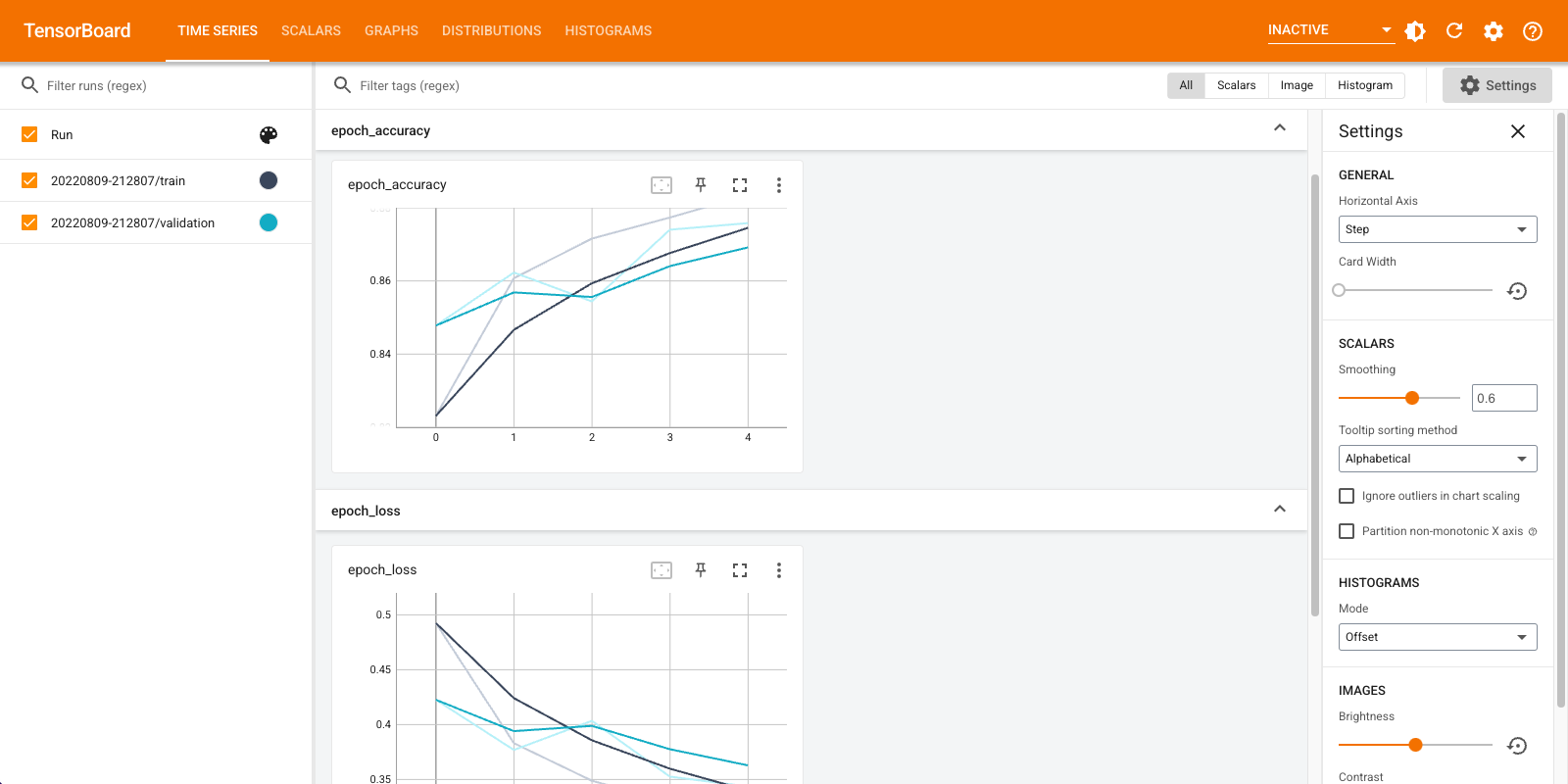

You can use the tensorboard.notebook APIs for a bit more control:

from tensorboard import notebook

notebook.list() # View open TensorBoard instances

Known TensorBoard instances:
  - port 6006: logdir logs (started 0:00:54 ago; pid 265)

# Control TensorBoard display. If no port is provided,
# the most recently launched TensorBoard is used
notebook.display(port=6006, height=1000)