TensorFlow.org'da görüntüleyin TensorFlow.org'da görüntüleyin |  Google Colab'da çalıştırın Google Colab'da çalıştırın |  Kaynağı GitHub'da görüntüleyin Kaynağı GitHub'da görüntüleyin |  Not defterini indir Not defterini indir |

genel bakış

tf.distribute.Strategy API, eğitiminizi birden çok işlem birimi arasında dağıtmak için bir soyutlama sağlar. Mevcut modelleri ve eğitim kodunu kullanarak en az değişiklikle dağıtılmış eğitim gerçekleştirmenizi sağlar.

Bu öğretici , tek bir makinede birçok GPU'da eşzamanlı eğitimle grafik içi çoğaltma gerçekleştirmek için tf.distribute.MirroredStrategy nasıl kullanılacağını gösterir. Strateji esasen modelin tüm değişkenlerini her işlemciye kopyalar. Ardından, tüm işlemcilerden gelen gradyanları birleştirmek için all-reduce'u kullanır ve birleştirilmiş değeri modelin tüm kopyalarına uygular.

Modeli oluşturmak için tf.keras API'lerini ve onu eğitmek için Model.fit . (Özel bir eğitim döngüsü ve MirroredStrategy ile dağıtılmış eğitim hakkında bilgi edinmek için bu eğiticiye göz atın.)

MirroredStrategy , modelinizi tek bir makinede birden çok GPU üzerinde eğitir. Birden çok çalışan üzerinde birçok GPU üzerinde eşzamanlı eğitim için , tf.distribute.MultiWorkerMirroredStrategy Model.fit veya özel bir eğitim döngüsü ile tf.distribute.MultiWorkerMirrorredStrategy'yi kullanın. Diğer seçenekler için Dağıtılmış eğitim kılavuzuna bakın.

Diğer çeşitli stratejiler hakkında bilgi edinmek için TensorFlow kılavuzu ile Dağıtılmış eğitim bulunmaktadır .

Kurmak

import tensorflow_datasets as tfds

import tensorflow as tf

import os

# Load the TensorBoard notebook extension.

%load_ext tensorboard

print(tf.__version__)

2.8.0-rc1

Veri kümesini indirin

MNIST veri kümesini TensorFlow Veri Kümeleri'nden yükleyin. Bu, tf.data biçiminde bir veri kümesi döndürür.

with_info bağımsız değişkenini True olarak ayarlamak, burada info öğesine kaydedilen tüm veri kümesinin meta verilerini içerir. Diğer şeylerin yanı sıra, bu meta veri nesnesi, tren ve test örneklerinin sayısını içerir.

datasets, info = tfds.load(name='mnist', with_info=True, as_supervised=True)

mnist_train, mnist_test = datasets['train'], datasets['test']

Dağıtım stratejisini tanımlayın

Bir MirroredStrategy nesnesi oluşturun. Bu, dağıtımı yönetecek ve modelinizi içeride oluşturmak için bir bağlam yöneticisi ( MirroredStrategy.scope ) sağlayacaktır.

strategy = tf.distribute.MirroredStrategy()

INFO:tensorflow:Using MirroredStrategy with devices ('/job:localhost/replica:0/task:0/device:GPU:0',)

INFO:tensorflow:Using MirroredStrategy with devices ('/job:localhost/replica:0/task:0/device:GPU:0',)

yer tutucu6 l10n-yerprint('Number of devices: {}'.format(strategy.num_replicas_in_sync))

Number of devices: 1

Giriş ardışık düzenini ayarlayın

Birden çok GPU'lu bir modeli eğitirken, parti boyutunu artırarak ekstra bilgi işlem gücünü etkin bir şekilde kullanabilirsiniz. Genel olarak, GPU belleğine uyan en büyük toplu iş boyutunu kullanın ve öğrenme hızını buna göre ayarlayın.

# You can also do info.splits.total_num_examples to get the total

# number of examples in the dataset.

num_train_examples = info.splits['train'].num_examples

num_test_examples = info.splits['test'].num_examples

BUFFER_SIZE = 10000

BATCH_SIZE_PER_REPLICA = 64

BATCH_SIZE = BATCH_SIZE_PER_REPLICA * strategy.num_replicas_in_sync

[0, 255] aralığından [0, 1] aralığına ( özellik ölçekleme ) görüntü piksel değerlerini normalleştiren bir işlev tanımlayın:

def scale(image, label):

image = tf.cast(image, tf.float32)

image /= 255

return image, label

Bu scale işlevini eğitim ve test verilerine uygulayın ve ardından eğitim verilerini karıştırmak ( Dataset.shuffle ) ve toplu hale getirmek ( Dataset.batch ) için tf.data.Dataset API'lerini kullanın. Performansı artırmak için eğitim verilerinin bir bellek içi önbelleğini de tuttuğunuza dikkat edin ( Dataset.cache ).

train_dataset = mnist_train.map(scale).cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE)

eval_dataset = mnist_test.map(scale).batch(BATCH_SIZE)

modeli oluştur

Keras modelini Strategy.scope bağlamında oluşturun ve derleyin:

with strategy.scope():

model = tf.keras.Sequential([

tf.keras.layers.Conv2D(32, 3, activation='relu', input_shape=(28, 28, 1)),

tf.keras.layers.MaxPooling2D(),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(64, activation='relu'),

tf.keras.layers.Dense(10)

])

model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

optimizer=tf.keras.optimizers.Adam(),

metrics=['accuracy'])

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

Geri aramaları tanımlayın

Aşağıdaki tf.keras.callbacks tanımlayın:

-

tf.keras.callbacks.TensorBoard: TensorBoard için grafikleri görselleştirmenizi sağlayan bir günlük yazar. -

tf.keras.callbacks.ModelCheckpoint: modeli her epoch sonrası gibi belirli bir frekansta kaydeder. -

tf.keras.callbacks.LearningRateScheduler: öğrenme oranını, örneğin her dönem/gruptan sonra değişecek şekilde programlar.

Açıklama amacıyla, not defterinde öğrenme oranını görüntülemek için PrintLR adlı özel bir geri arama ekleyin.

# Define the checkpoint directory to store the checkpoints.

checkpoint_dir = './training_checkpoints'

# Define the name of the checkpoint files.

checkpoint_prefix = os.path.join(checkpoint_dir, "ckpt_{epoch}")

# Define a function for decaying the learning rate.

# You can define any decay function you need.

def decay(epoch):

if epoch < 3:

return 1e-3

elif epoch >= 3 and epoch < 7:

return 1e-4

else:

return 1e-5

# Define a callback for printing the learning rate at the end of each epoch.

class PrintLR(tf.keras.callbacks.Callback):

def on_epoch_end(self, epoch, logs=None):

print('\nLearning rate for epoch {} is {}'.format(epoch + 1,

model.optimizer.lr.numpy()))

# Put all the callbacks together.

callbacks = [

tf.keras.callbacks.TensorBoard(log_dir='./logs'),

tf.keras.callbacks.ModelCheckpoint(filepath=checkpoint_prefix,

save_weights_only=True),

tf.keras.callbacks.LearningRateScheduler(decay),

PrintLR()

]

Eğitin ve değerlendirin

Şimdi, modelde Model.fit çağırarak ve öğreticinin başında oluşturulan veri kümesini ileterek modeli olağan şekilde eğitin. Eğitimi dağıtsanız da dağıtmasanız da bu adım aynıdır.

EPOCHS = 12

model.fit(train_dataset, epochs=EPOCHS, callbacks=callbacks)

2022-01-26 05:38:28.865380: W tensorflow/core/grappler/optimizers/data/auto_shard.cc:547] The `assert_cardinality` transformation is currently not handled by the auto-shard rewrite and will be removed.

Epoch 1/12

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

INFO:tensorflow:Reduce to /job:localhost/replica:0/task:0/device:CPU:0 then broadcast to ('/job:localhost/replica:0/task:0/device:CPU:0',).

933/938 [============================>.] - ETA: 0s - loss: 0.2029 - accuracy: 0.9399

Learning rate for epoch 1 is 0.0010000000474974513

938/938 [==============================] - 10s 4ms/step - loss: 0.2022 - accuracy: 0.9401 - lr: 0.0010

Epoch 2/12

930/938 [============================>.] - ETA: 0s - loss: 0.0654 - accuracy: 0.9813

Learning rate for epoch 2 is 0.0010000000474974513

938/938 [==============================] - 3s 3ms/step - loss: 0.0652 - accuracy: 0.9813 - lr: 0.0010

Epoch 3/12

931/938 [============================>.] - ETA: 0s - loss: 0.0453 - accuracy: 0.9864

Learning rate for epoch 3 is 0.0010000000474974513

938/938 [==============================] - 3s 3ms/step - loss: 0.0453 - accuracy: 0.9864 - lr: 0.0010

Epoch 4/12

923/938 [============================>.] - ETA: 0s - loss: 0.0246 - accuracy: 0.9933

Learning rate for epoch 4 is 9.999999747378752e-05

938/938 [==============================] - 3s 3ms/step - loss: 0.0244 - accuracy: 0.9934 - lr: 1.0000e-04

Epoch 5/12

929/938 [============================>.] - ETA: 0s - loss: 0.0211 - accuracy: 0.9944

Learning rate for epoch 5 is 9.999999747378752e-05

938/938 [==============================] - 3s 3ms/step - loss: 0.0212 - accuracy: 0.9944 - lr: 1.0000e-04

Epoch 6/12

930/938 [============================>.] - ETA: 0s - loss: 0.0192 - accuracy: 0.9950

Learning rate for epoch 6 is 9.999999747378752e-05

938/938 [==============================] - 3s 3ms/step - loss: 0.0194 - accuracy: 0.9950 - lr: 1.0000e-04

Epoch 7/12

927/938 [============================>.] - ETA: 0s - loss: 0.0179 - accuracy: 0.9953

Learning rate for epoch 7 is 9.999999747378752e-05

938/938 [==============================] - 3s 3ms/step - loss: 0.0179 - accuracy: 0.9953 - lr: 1.0000e-04

Epoch 8/12

938/938 [==============================] - ETA: 0s - loss: 0.0153 - accuracy: 0.9966

Learning rate for epoch 8 is 9.999999747378752e-06

938/938 [==============================] - 3s 3ms/step - loss: 0.0153 - accuracy: 0.9966 - lr: 1.0000e-05

Epoch 9/12

927/938 [============================>.] - ETA: 0s - loss: 0.0151 - accuracy: 0.9966

Learning rate for epoch 9 is 9.999999747378752e-06

938/938 [==============================] - 3s 3ms/step - loss: 0.0150 - accuracy: 0.9966 - lr: 1.0000e-05

Epoch 10/12

935/938 [============================>.] - ETA: 0s - loss: 0.0148 - accuracy: 0.9966

Learning rate for epoch 10 is 9.999999747378752e-06

938/938 [==============================] - 3s 3ms/step - loss: 0.0148 - accuracy: 0.9966 - lr: 1.0000e-05

Epoch 11/12

937/938 [============================>.] - ETA: 0s - loss: 0.0146 - accuracy: 0.9967

Learning rate for epoch 11 is 9.999999747378752e-06

938/938 [==============================] - 3s 3ms/step - loss: 0.0146 - accuracy: 0.9967 - lr: 1.0000e-05

Epoch 12/12

926/938 [============================>.] - ETA: 0s - loss: 0.0145 - accuracy: 0.9967

Learning rate for epoch 12 is 9.999999747378752e-06

938/938 [==============================] - 3s 3ms/step - loss: 0.0144 - accuracy: 0.9967 - lr: 1.0000e-05

<keras.callbacks.History at 0x7fad70067c10>

Kaydedilmiş kontrol noktalarını kontrol edin:

tutucu20 l10n-yer# Check the checkpoint directory.ls {checkpoint_dir}

checkpoint ckpt_4.data-00000-of-00001 ckpt_1.data-00000-of-00001 ckpt_4.index ckpt_1.index ckpt_5.data-00000-of-00001 ckpt_10.data-00000-of-00001 ckpt_5.index ckpt_10.index ckpt_6.data-00000-of-00001 ckpt_11.data-00000-of-00001 ckpt_6.index ckpt_11.index ckpt_7.data-00000-of-00001 ckpt_12.data-00000-of-00001 ckpt_7.index ckpt_12.index ckpt_8.data-00000-of-00001 ckpt_2.data-00000-of-00001 ckpt_8.index ckpt_2.index ckpt_9.data-00000-of-00001 ckpt_3.data-00000-of-00001 ckpt_9.index ckpt_3.index

Modelin ne kadar iyi performans gösterdiğini kontrol etmek için en son kontrol noktasını yükleyin ve test verilerine Model.evaluate çağırın:

model.load_weights(tf.train.latest_checkpoint(checkpoint_dir))

eval_loss, eval_acc = model.evaluate(eval_dataset)

print('Eval loss: {}, Eval accuracy: {}'.format(eval_loss, eval_acc))

2022-01-26 05:39:15.260539: W tensorflow/core/grappler/optimizers/data/auto_shard.cc:547] The `assert_cardinality` transformation is currently not handled by the auto-shard rewrite and will be removed. 157/157 [==============================] - 2s 4ms/step - loss: 0.0373 - accuracy: 0.9879 Eval loss: 0.03732967749238014, Eval accuracy: 0.9879000186920166

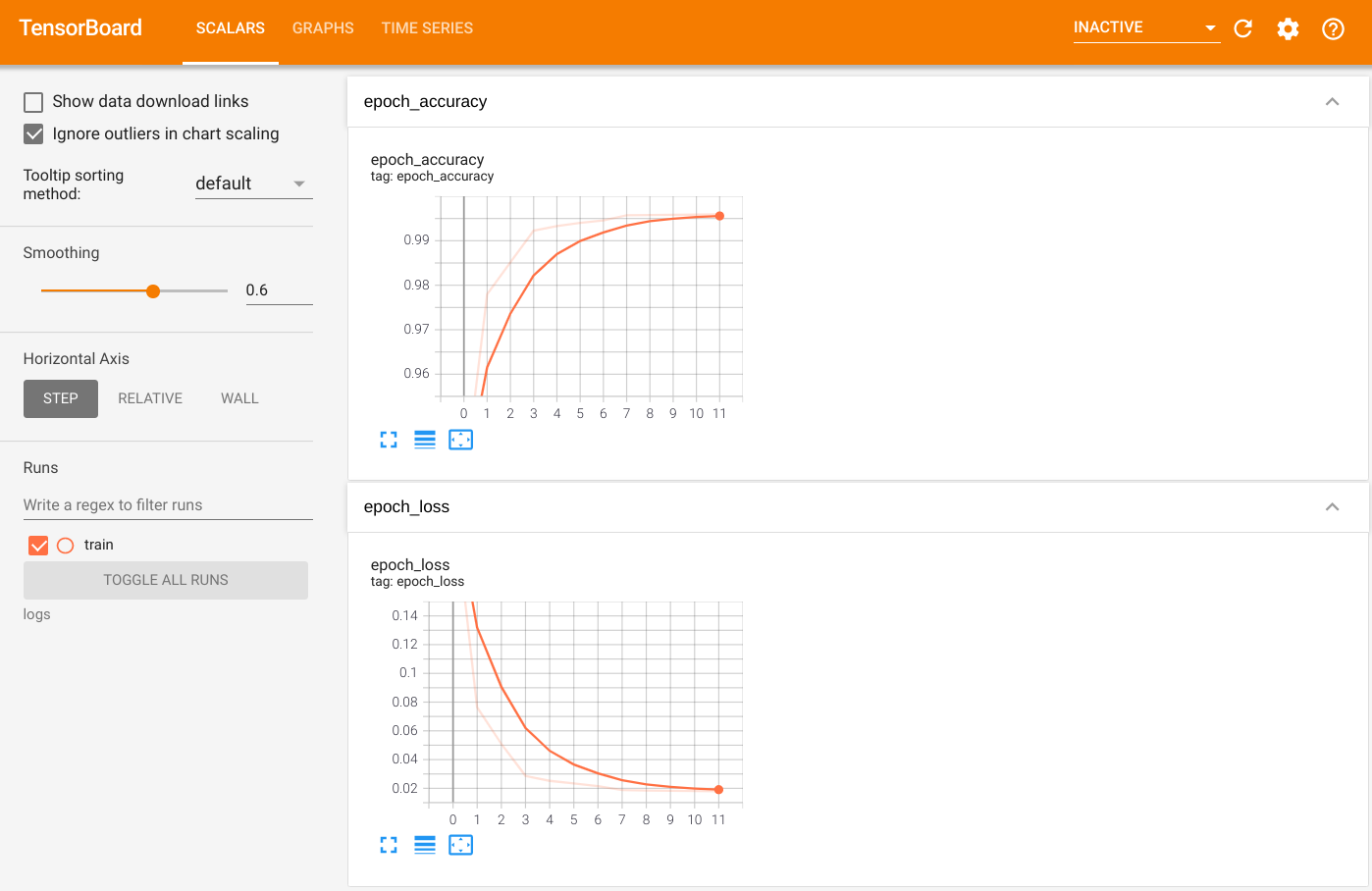

Çıktıyı görselleştirmek için TensorBoard'u başlatın ve günlükleri görüntüleyin:

%tensorboard --logdir=logs

ls -sh ./logs

tutucu25 l10n-yertotal 4.0K 4.0K train

SavedModel'e Aktar

Model.save kullanarak grafiği ve değişkenleri platformdan bağımsız SavedModel biçimine Model.save . Modeliniz kaydedildikten sonra, onu Strategy.scope ile veya onsuz yükleyebilirsiniz.

path = 'saved_model/'

model.save(path, save_format='tf')

2022-01-26 05:39:18.012847: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them. INFO:tensorflow:Assets written to: saved_model/assets INFO:tensorflow:Assets written to: saved_model/assets

Şimdi, modeli Strategy.scope olmadan yükleyin:

unreplicated_model = tf.keras.models.load_model(path)

unreplicated_model.compile(

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

optimizer=tf.keras.optimizers.Adam(),

metrics=['accuracy'])

eval_loss, eval_acc = unreplicated_model.evaluate(eval_dataset)

print('Eval loss: {}, Eval Accuracy: {}'.format(eval_loss, eval_acc))

157/157 [==============================] - 1s 2ms/step - loss: 0.0373 - accuracy: 0.9879 Eval loss: 0.03732967749238014, Eval Accuracy: 0.9879000186920166

Modeli Strategy.scope ile yükleyin:

with strategy.scope():

replicated_model = tf.keras.models.load_model(path)

replicated_model.compile(loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

optimizer=tf.keras.optimizers.Adam(),

metrics=['accuracy'])

eval_loss, eval_acc = replicated_model.evaluate(eval_dataset)

print ('Eval loss: {}, Eval Accuracy: {}'.format(eval_loss, eval_acc))

2022-01-26 05:39:19.489971: W tensorflow/core/grappler/optimizers/data/auto_shard.cc:547] The `assert_cardinality` transformation is currently not handled by the auto-shard rewrite and will be removed. 157/157 [==============================] - 3s 3ms/step - loss: 0.0373 - accuracy: 0.9879 Eval loss: 0.03732967749238014, Eval Accuracy: 0.9879000186920166

Ek kaynaklar

Model.fit API ile farklı dağıtım stratejileri kullanan daha fazla örnek:

- TPU'da BERT kullanarak GLUE görevlerini

tf.distribute.MirroredStrategyöğreticisi, GPU'larda eğitim için tf.distribute.MirroredStrategy'yi vetf.distribute.TPUStrategykullanır. - Bir modeli bir dağıtım stratejisi kullanarak kaydet ve yükle öğreticisi, SavedModel API'lerinin

tf.distribute.Strategyile nasıl kullanılacağını gösterir. - Resmi TensorFlow modelleri , birden çok dağıtım stratejisini çalıştıracak şekilde yapılandırılabilir.

TensorFlow dağıtım stratejileri hakkında daha fazla bilgi edinmek için:

- tf.distribute.Strategy ile Özel eğitim öğreticisi, özel bir eğitim döngüsüyle tek çalışan eğitimi için

tf.distribute.MirroredStrategynasıl kullanılacağını gösterir. - Keras öğreticisiyle çok çalışanlı eğitim ,

Model.fitMultiWorkerMirroredStrategyile nasıl kullanılacağını gösterir. - Keras ve MultiWorkerMirrorredStrategy ile Özel eğitim döngüsü ,

MultiWorkerMirroredStrategyile nasıl kullanılacağını ve özel bir eğitim döngüsünü gösterir. - TensorFlow kılavuzundaki Dağıtılmış eğitim , mevcut dağıtım stratejilerine genel bir bakış sağlar.

- tf.fonksiyon kılavuzu ile daha iyi performans, TensorFlow modellerinizin performansını optimize etmek için kullanabileceğiniz TensorFlow Profiler gibi diğer stratejiler ve araçlar hakkında bilgi sağlar.