Neural Structured Learning: Training with Structured Signals

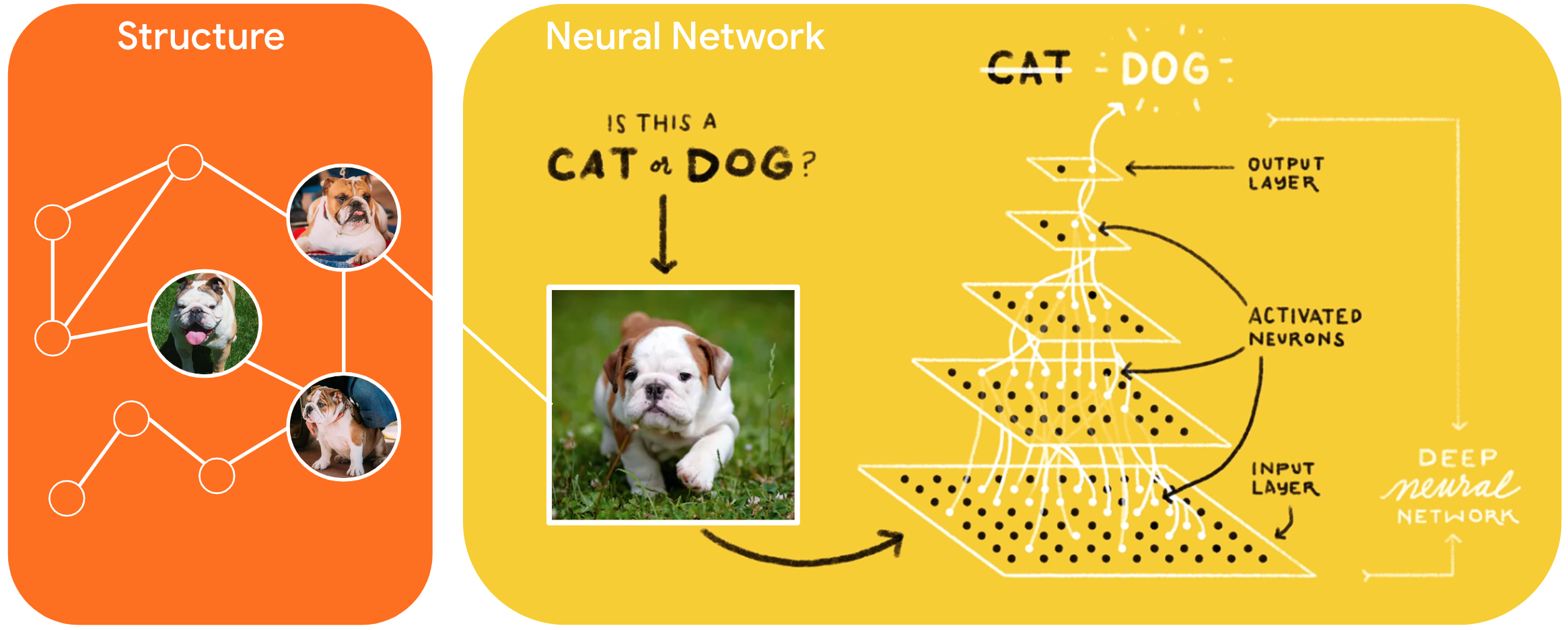

Neural Structured Learning (NSL) is a new learning paradigm for training neural networks by leveraging structured signals in addition to feature inputs. Structure can be explicit, as represented by a graph, or implicit, as induced by adversarial perturbation.

Structured signals are commonly used to represent relations or similarity among samples, which may be labeled or unlabeled. Leveraging these signals during neural network training therefore harnesses both labeled and unlabeled data, which can improve model accuracy, particularly when the amount of labeled data is relatively small. Additionally, models trained with samples generated by adding adversarial perturbation have been shown to be robust against malicious attacks, which are designed to mislead a model's prediction or classification.
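
To make the role of similarity between samples concrete, the following is a minimal pure-Python sketch of a graph-regularized loss. It is not NSL's implementation: the function names, the squared L2 distance, and the summed (rather than averaged) neighbor penalty are illustrative assumptions. The point it shows is that an unlabeled sample can still contribute to the loss through its edge to a labeled neighbor.

```python
def squared_l2(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def graph_regularized_loss(supervised_losses, embeddings, edges, multiplier=0.1):
    """Mean supervised loss plus a weighted neighbor-distance penalty.

    supervised_losses: dict of sample id -> loss, for labeled samples only.
    embeddings: dict of sample id -> embedding, for labeled and unlabeled samples.
    edges: list of (id_a, id_b, weight) tuples describing the graph.
    All names here are hypothetical and chosen for illustration.
    """
    supervised = sum(supervised_losses.values()) / len(supervised_losses)
    neighbor_penalty = sum(
        weight * squared_l2(embeddings[a], embeddings[b])
        for a, b, weight in edges
    )
    return supervised + multiplier * neighbor_penalty


# 'a' is labeled; 'b' is unlabeled but linked to 'a' in the graph.
losses = {'a': 0.5}
embeddings = {'a': [1.0, 0.0], 'b': [0.0, 0.0]}
edges = [('a', 'b', 1.0)]

total = graph_regularized_loss(losses, embeddings, edges, multiplier=0.5)
print(total)  # 0.5 + 0.5 * 1.0 = 1.0
```

Minimizing such a loss pulls the embeddings of connected samples together, which is how unlabeled neighbors can influence what the network learns.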

NSL generalizes to Neural Graph Learning as well as Adversarial Learning. The NSL framework in TensorFlow provides the following easy-to-use APIs and tools for developers to train models with structured signals:

- Keras APIs to enable training with graphs (explicit structure) and adversarial perturbations (implicit structure).

- TF ops and functions to enable training with structure when using lower-level TensorFlow APIs.

- Tools to build graphs and construct graph inputs for training.
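
As a rough illustration of what building a graph from data can mean, the sketch below connects samples whose embeddings have cosine similarity above a threshold. This is a conceptual pure-Python example, not the NSL tooling itself: the function names, the threshold value, and the toy embeddings are assumptions made for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_similarity_graph(embeddings, threshold=0.8):
    """Return (id_a, id_b, similarity) edges for pairs at or above the threshold.

    embeddings: dict of sample id -> embedding (list of floats).
    Compares every pair, so this is only suitable for small toy inputs.
    """
    ids = sorted(embeddings)
    edges = []
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            similarity = cosine_similarity(embeddings[a], embeddings[b])
            if similarity >= threshold:
                edges.append((a, b, similarity))
    return edges


# Hypothetical embeddings: doc1 and doc2 point in nearly the same direction.
toy_embeddings = {
    'doc1': [1.0, 0.0],
    'doc2': [0.9, 0.1],
    'doc3': [0.0, 1.0],
}
graph_edges = build_similarity_graph(toy_embeddings, threshold=0.8)
print(graph_edges)
```

Only the `doc1`/`doc2` pair clears the threshold here, so the resulting graph has a single edge; such edges are the explicit structure that graph-based training consumes.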

Structured signals are incorporated only during training, so the performance of the serving/inference workflow remains unchanged. More information on neural structured learning can be found in our framework description. To get started, please see our install guide, and for a practical introduction to NSL, check out our tutorials.
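
The training-only nature of the structured signal can be sketched with a tiny logistic regression in pure Python. This is an illustrative assumption-laden example, not NSL code: it uses a sign-based (FGSM-style) perturbation, hypothetical function names, and hand-picked weights. The adversarial term appears only in the training loss, while `predict` is the same function that serving would call.

```python
import math


def predict(weights, bias, x):
    """Sigmoid output of the base model; this is all that inference needs."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy(p, y):
    """Binary cross-entropy for a predicted probability p and label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(value):
    """Return -1.0, 0.0, or 1.0 according to the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_training_loss(weights, bias, x, y, multiplier=0.2, step_size=0.05):
    """Clean loss plus a weighted loss on an adversarially perturbed input."""
    p = predict(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad_x = [(p - y) * w for w in weights]
    # Step each feature in the direction that increases the loss.
    x_adv = [xi + step_size * sign(g) for xi, g in zip(x, grad_x)]
    clean_loss = cross_entropy(p, y)
    adv_loss = cross_entropy(predict(weights, bias, x_adv), y)
    return clean_loss + multiplier * adv_loss


weights, bias = [1.0, -2.0], 0.0
x, y = [0.5, 0.25], 1

clean = cross_entropy(predict(weights, bias, x), y)
train_loss = adversarial_training_loss(weights, bias, x, y)
serving_output = predict(weights, bias, x)  # no perturbation at inference time
print(clean, train_loss, serving_output)
```

The extra term makes each training step more expensive, but nothing about the perturbation is needed once the weights are learned.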

```python
import tensorflow as tf
import neural_structured_learning as nsl

# Prepare data.
(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
x_train, x_test = x_train / 255.0, x_test / 255.0

# Create a base model -- sequential, functional, or subclass.
model = tf.keras.Sequential([
    tf.keras.Input((28, 28), name='feature'),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation=tf.nn.relu),
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)
])

# Wrap the model with adversarial regularization.
adv_config = nsl.configs.make_adv_reg_config(multiplier=0.2, adv_step_size=0.05)
adv_model = nsl.keras.AdversarialRegularization(model, adv_config=adv_config)

# Compile, train, and evaluate.
adv_model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])
adv_model.fit({'feature': x_train, 'label': y_train}, batch_size=32, epochs=5)
adv_model.evaluate({'feature': x_test, 'label': y_test})
```