The task of identifying what an image represents is called image classification. An image classification model is trained to recognize various classes of images. For example, you may train a model to recognize photos representing three different types of animals: rabbits, hamsters, and dogs. TensorFlow Lite provides optimized pre-trained models that you can deploy in your mobile applications. Learn more about image classification using TensorFlow here.

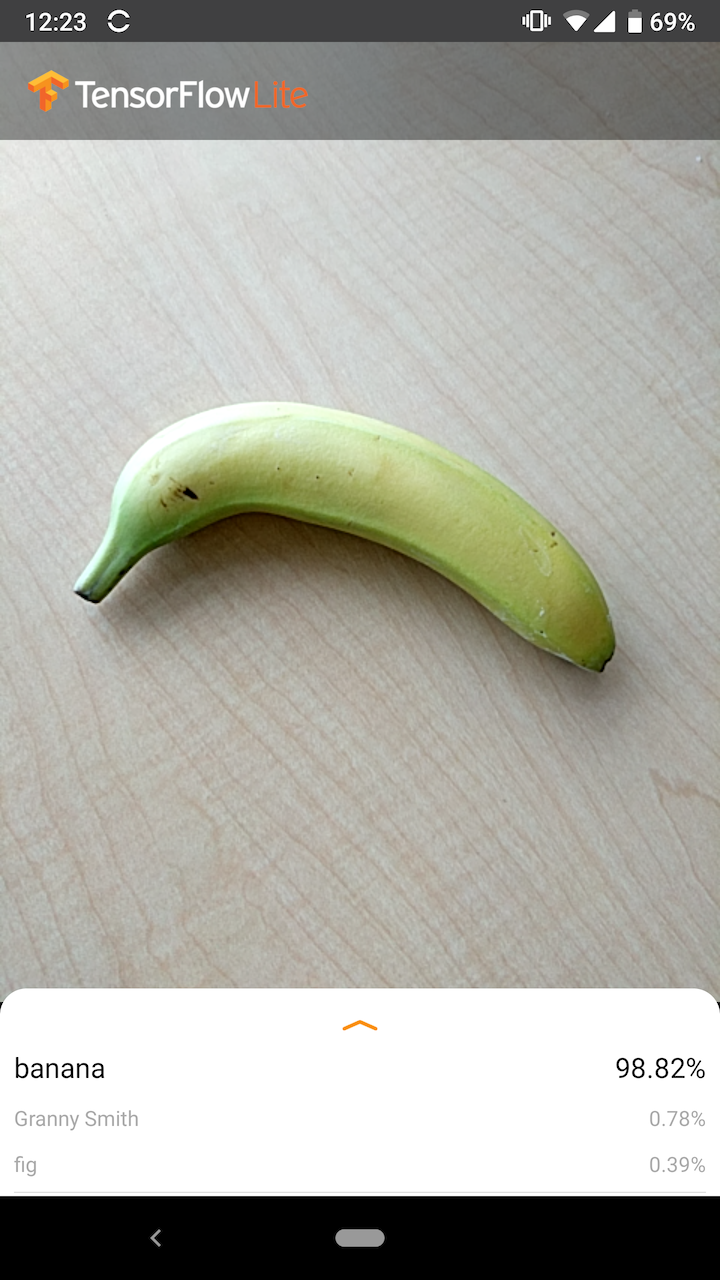

The following image shows the output of the image classification model on Android.

Get started

If you are new to TensorFlow Lite and are working with Android or iOS, it is recommended you explore the following example applications that can help you get started.

You can leverage the out-of-box API from TensorFlow Lite Task Library to integrate image classification models in just a few lines of code. You can also build your own custom inference pipeline using the TensorFlow Lite Support Library.

The Android example below demonstrates the implementation for both methods as lib_task_api and lib_support, respectively.

If you are using a platform other than Android/iOS, or if you are already familiar with the TensorFlow Lite APIs, download the starter model and supporting files (if applicable).

Model description

How it works

During training, an image classification model is fed images and their associated labels. Each label is the name of a distinct concept, or class, that the model will learn to recognize.

Given sufficient training data (often hundreds or thousands of images per label), an image classification model can learn to predict whether new images belong to any of the classes it has been trained on. This process of prediction is called inference. Note that you can also use transfer learning to identify new classes of images by using a pre-existing model. Transfer learning does not require a very large training dataset.

When you subsequently provide a new image as input to the model, it will output the probabilities of the image representing each of the types of animal it was trained on. An example output might be as follows:

| Animal type | Probability |

|---|---|

| Rabbit | 0.07 |

| Hamster | 0.02 |

| Dog | 0.91 |

Each number in the output corresponds to a label in the training data. Associating the output with the three labels the model was trained on, you can see that the model has predicted a high probability that the image represents a dog.

You might notice that the sum of all the probabilities (for rabbit, hamster, and dog) is equal to 1. This is a common type of output for models with multiple classes (see Softmax for more information).

Ambiguous results

Since the output probabilities will always sum to 1, if an image is not confidently recognized as belonging to any of the classes the model was trained on you may see the probability distributed throughout the labels without any one value being significantly larger.

For example, the following might indicate an ambiguous result:

| Label | Probability |

|---|---|

| rabbit | 0.31 |

| hamster | 0.35 |

| dog | 0.34 |

Choosing a model architecture

TensorFlow Lite provides you with a variety of image classification models which are all trained on the original dataset. Model architectures like MobileNet, Inception, and NASNet are available on TensorFlow Hub. To choose the best model for your use case, you need to consider the individual architectures as well as some of the tradeoffs between various models. Some of these model tradeoffs are based on metrics such as performance, accuracy, and model size. For example, you might need a faster model for building a bar code scanner while you might prefer a slower, more accurate model for a medical imaging app. Note that the image classification models provided accept varying sizes of input. For some models, this is indicated in the filename. For example, the Mobilenet_V1_1.0_224 model accepts an input of 224x224 pixels. All of the models require three color channels per pixel (red, green, and blue). Quantized models require 1 byte per channel, and float models require 4 bytes per channel. The Android and iOS code samples demonstrate how to process full-sized camera images into the required format for each model.Uses and limitations

The TensorFlow Lite image classification models are useful for single-label classification; that is, predicting which single label the image is most likely to represent. They are trained to recognize 1000 image classes. For a full list of classes, see the labels file in the model zip. If you want to train a model to recognize new classes, see Customize model. For the following use cases, you should use a different type of model:- Predicting the type and position of one or more objects within an image (see Object detection)

- Predicting the composition of an image, for example subject versus background (see Segmentation)

Customize model

The pre-trained models provided are trained to recognize 1000 classes of images. For a full list of classes, see the labels file in the model zip. You can also use transfer learning to re-train a model to recognize classes not in the original set. For example, you could re-train the model to distinguish between different species of tree, despite there being no trees in the original training data. To do this, you will need a set of training images for each of the new labels you wish to train. Learn how to perform transfer learning with the TFLite Model Maker, or in the Recognize flowers with TensorFlow codelab.Performance benchmarks

Model performance is measured in terms of the amount of time it takes for a model to run inference on a given piece of hardware. The lower the time, the faster the model. The performance you require depends on your application. Performance can be important for applications like real-time video, where it may be important to analyze each frame in the time before the next frame is drawn (e.g. inference must be faster than 33ms to perform real-time inference on a 30fps video stream). The TensorFlow Lite quantized MobileNet models' performance range from 3.7ms to 80.3 ms. Performance benchmark numbers are generated with the benchmarking tool.| Model Name | Model size | Device | NNAPI | CPU |

|---|---|---|---|---|

| Mobilenet_V1_1.0_224_quant | 4.3 Mb | Pixel 3 (Android 10) | 6ms | 13ms* |

| Pixel 4 (Android 10) | 3.3ms | 5ms* | ||

| iPhone XS (iOS 12.4.1) | 11ms** |

* 4 threads used.

** 2 threads used on iPhone for the best performance result.

Model accuracy

Accuracy is measured in terms of how often the model correctly classifies an image. For example, a model with a stated accuracy of 60% can be expected to classify an image correctly an average of 60% of the time.

The most relevant accuracy metrics are Top-1 and Top-5. Top-1 refers to how often the correct label appears as the label with the highest probability in the model’s output. Top-5 refers to how often the correct label appears in the 5 highest probabilities in the model’s output.

The TensorFlow Lite quantized MobileNet models’ Top-5 accuracy range from 64.4 to 89.9%.

Model size

The size of a model on-disk varies with its performance and accuracy. Size may be important for mobile development (where it might impact app download sizes) or when working with hardware (where available storage might be limited).

The TensorFlow Lite quantized MobileNet models' sizes range from 0.5 to 3.4 MB.

Further reading and resources

Use the following resources to learn more about concepts related to image classification: