在 TensorFlow.org上查看 在 TensorFlow.org上查看 |

在 Google Colab 中运行 在 Google Colab 中运行 |

在 GitHub 中查看源代码 在 GitHub 中查看源代码 |

文本特征向量

此笔记本利用评论文本将电影评论分类为正面或负面评价。这是一个二元分类示例,也是一个重要且应用广泛的机器学习问题。

在此笔记本中,我们将通过根据给定的输入构建计算图来演示如何使用计算图正则化。当输入不包含显式计算图时,使用神经结构学习 (NSL) 框架构建计算图正则化模型的一般方法如下:

- 为输入中的每个文本样本创建嵌入向量。该操作可使用 word2vec、Swivel、BERT 等预训练模型来完成。

- 通过使用诸如“L2”距离、“余弦”距离等相似度指标,基于这些嵌入向量构建计算图。计算图中的节点对应于样本,计算图中的边对应于样本对之间的相似度。

- 基于上述合成计算图和样本特征生成训练数据。除原始节点特征外,所得的训练数据还将包含近邻特征。

- 使用 Keras 序列式、函数式或子类 API 作为基础模型创建神经网络。

- 使用 NSL 框架提供的 GraphRegularization 包装器类包装基础模型,以创建新的计算图 Keras 模型。这个新模型将包含计算图正则化损失作为其训练目标中的一个正规化项。

- 训练和评估计算图 Keras 模型。

注:我们预计读者阅读本教程所需时间为 1 小时左右。

要求

- 安装 Neural Structured Learning 软件包。

- 安装 tensorflow-hub。

pip install --quiet neural-structured-learningpip install --quiet tensorflow-hub

依赖项和导入

import matplotlib.pyplot as plt

import numpy as np

import neural_structured_learning as nsl

import tensorflow as tf

import tensorflow_hub as hub

# Resets notebook state

tf.keras.backend.clear_session()

print("Version: ", tf.__version__)

print("Eager mode: ", tf.executing_eagerly())

print("Hub version: ", hub.__version__)

print(

"GPU is",

"available" if tf.config.list_physical_devices("GPU") else "NOT AVAILABLE")

Version: 2.6.0 Eager mode: True Hub version: 0.12.0 GPU is available

IMDB 数据集

IMDB 数据集包含 Internet Movie Database 中的 50,000 条电影评论文本 。我们将这些评论分为两组,其中 25,000 条用于训练,另外 25,000 条用于测试。训练组和测试组是均衡的,也就是说其中包含相等数量的正面评价和负面评价。

在本教程中,我们将使用 IMDB 数据集的预处理版本。

下载预处理的 IMDB 数据集

TensorFlow 随附 IMDB 数据集。该数据集经过预处理,已将评论(单词序列)转换为整数序列,其中每个整数均代表字典中的特定单词。

以下代码可下载 IMDB 数据集(如已下载,则使用缓存副本):

imdb = tf.keras.datasets.imdb

(pp_train_data, pp_train_labels), (pp_test_data, pp_test_labels) = (

imdb.load_data(num_words=10000))

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/imdb.npz 17465344/17464789 [==============================] - 0s 0us/step 17473536/17464789 [==============================] - 0s 0us/step

参数 num_words=10000 会将训练数据中的前 10,000 个最频繁出现的单词保留下来。稀有单词将被丢弃以保持词汇量的可管理性。

探索数据

我们花一点时间来了解数据的格式。数据集经过预处理:每个样本都是一个整数数组,每个整数代表电影评论中的单词。每个标签是一个整数值(0 或 1),其中 0 表示负面评价,而 1 表示正面评价。

print('Training entries: {}, labels: {}'.format(

len(pp_train_data), len(pp_train_labels)))

training_samples_count = len(pp_train_data)

Training entries: 25000, labels: 25000

评论文本已转换为整数,其中每个整数均代表字典中的特定单词。第一条评论如下所示:

print(pp_train_data[0])

[1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32]

电影评论的长度可能各不相同。以下代码显示了第一条评论和第二条评论中的单词数。由于神经网络的输入必须具有相同的长度,因此我们稍后需要解决长度问题。

len(pp_train_data[0]), len(pp_train_data[1])

(218, 189)

将整数重新转换为单词

了解如何将整数重新转换为相应的文本可能非常实用。在这里,我们将创建一个辅助函数来查询包含整数到字符串映射的字典对象:

def build_reverse_word_index():

# A dictionary mapping words to an integer index

word_index = imdb.get_word_index()

# The first indices are reserved

word_index = {k: (v + 3) for k, v in word_index.items()}

word_index['<PAD>'] = 0

word_index['<START>'] = 1

word_index['<UNK>'] = 2 # unknown

word_index['<UNUSED>'] = 3

return dict((value, key) for (key, value) in word_index.items())

reverse_word_index = build_reverse_word_index()

def decode_review(text):

return ' '.join([reverse_word_index.get(i, '?') for i in text])

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/imdb_word_index.json 1646592/1641221 [==============================] - 0s 0us/step 1654784/1641221 [==============================] - 0s 0us/step

现在,我们可以使用 decode_review 函数来显示第一条评论的文本:

decode_review(pp_train_data[0])

"<START> this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert <UNK> is an amazing actor and now the same being director <UNK> father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for <UNK> and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also <UNK> to the two little boy's that played the <UNK> of norman and paul they were just brilliant children are often left out of the <UNK> list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all"

计算图构造

计算图的构造涉及为文本样本创建嵌入向量,然后使用相似度函数比较嵌入向量。

在继续之前,我们先创建一个目录来存储在本教程中创建的工件。

mkdir -p /tmp/imdb创建样本嵌入向量

我们将使用预训练的 Swivel 嵌入向量为输入中的每个样本创建 tf.train.Example 格式的嵌入向量。我们将以 TFRecord 格式存储生成的嵌入向量以及代表每个样本 ID 的附加特征。这有助于我们在未来能够将样本嵌入向量与计算图中的相应节点进行匹配。

pretrained_embedding = 'https://tfhub.dev/google/tf2-preview/gnews-swivel-20dim/1'

hub_layer = hub.KerasLayer(

pretrained_embedding, input_shape=[], dtype=tf.string, trainable=True)

def _int64_feature(value):

"""Returns int64 tf.train.Feature."""

return tf.train.Feature(int64_list=tf.train.Int64List(value=value.tolist()))

def _bytes_feature(value):

"""Returns bytes tf.train.Feature."""

return tf.train.Feature(

bytes_list=tf.train.BytesList(value=[value.encode('utf-8')]))

def _float_feature(value):

"""Returns float tf.train.Feature."""

return tf.train.Feature(float_list=tf.train.FloatList(value=value.tolist()))

def create_embedding_example(word_vector, record_id):

"""Create tf.Example containing the sample's embedding and its ID."""

text = decode_review(word_vector)

# Shape = [batch_size,].

sentence_embedding = hub_layer(tf.reshape(text, shape=[-1,]))

# Flatten the sentence embedding back to 1-D.

sentence_embedding = tf.reshape(sentence_embedding, shape=[-1])

features = {

'id': _bytes_feature(str(record_id)),

'embedding': _float_feature(sentence_embedding.numpy())

}

return tf.train.Example(features=tf.train.Features(feature=features))

def create_embeddings(word_vectors, output_path, starting_record_id):

record_id = int(starting_record_id)

with tf.io.TFRecordWriter(output_path) as writer:

for word_vector in word_vectors:

example = create_embedding_example(word_vector, record_id)

record_id = record_id + 1

writer.write(example.SerializeToString())

return record_id

# Persist TF.Example features containing embeddings for training data in

# TFRecord format.

create_embeddings(pp_train_data, '/tmp/imdb/embeddings.tfr', 0)

25000

构建计算图

现在有了样本嵌入向量,我们将使用它们来构建相似度计算图:此计算图中的节点将与样本对应,此计算图中的边将与节点对之间的相似度对应。

神经结构学习提供了一个计算图构建库,用于基于样本嵌入向量构建计算图。它使用余弦相似度作为相似度指标来比较嵌入向量并在它们之间构建边。它还支持指定相似度阈值,用于从最终计算图中丢弃不相似的边。在本示例中,使用 0.99 作为相似度阈值,使用 12345 作为随机种子,我们最终得到一个具有 429,415 条双向边的计算图。在这里,我们借助计算图构建器对局部敏感哈希 (LSH) 算法的支持来加快计算图构建。有关使用计算图构建器的 LSH 支持的详细信息,请参阅 build_graph_from_config API 文档。

graph_builder_config = nsl.configs.GraphBuilderConfig(

similarity_threshold=0.99, lsh_splits=32, lsh_rounds=15, random_seed=12345)

nsl.tools.build_graph_from_config(['/tmp/imdb/embeddings.tfr'],

'/tmp/imdb/graph_99.tsv',

graph_builder_config)

在输出 TSV 文件中,每条双向边均由两条有向边表示,因此该文件共含 429,415 * 2 = 858,830 行:

wc -l /tmp/imdb/graph_99.tsv858828 /tmp/imdb/graph_99.tsv

注:计算图质量以及与之相关的嵌入向量质量对于计算图正则化非常重要。虽然我们在此笔记本中使用了 Swivel 嵌入向量,但如果使用 BERT 等嵌入向量,可能会更准确地捕获评论语义。我们鼓励用户根据自身需求选用合适的嵌入向量。

样本特征

我们使用 tf.train.Example 格式为问题创建样本特征,并将其保留为 TFRecord 格式。每个样本将包含以下三个特征:

- id:样本的节点 ID。

- words:包含单词 ID 的 int64 列表。

- label:用于标识评论的目标类的单例 int64。

def create_example(word_vector, label, record_id):

"""Create tf.Example containing the sample's word vector, label, and ID."""

features = {

'id': _bytes_feature(str(record_id)),

'words': _int64_feature(np.asarray(word_vector)),

'label': _int64_feature(np.asarray([label])),

}

return tf.train.Example(features=tf.train.Features(feature=features))

def create_records(word_vectors, labels, record_path, starting_record_id):

record_id = int(starting_record_id)

with tf.io.TFRecordWriter(record_path) as writer:

for word_vector, label in zip(word_vectors, labels):

example = create_example(word_vector, label, record_id)

record_id = record_id + 1

writer.write(example.SerializeToString())

return record_id

# Persist TF.Example features (word vectors and labels) for training and test

# data in TFRecord format.

next_record_id = create_records(pp_train_data, pp_train_labels,

'/tmp/imdb/train_data.tfr', 0)

create_records(pp_test_data, pp_test_labels, '/tmp/imdb/test_data.tfr',

next_record_id)

50000

使用计算图近邻增强训练数据

拥有样本特征与合成计算图后,我们可以生成用于神经结构学习的增强训练数据。NSL 框架提供了一个将计算图和样本特征相结合的库,二者结合可生成用于计算图正则化的最终训练数据。所得的训练数据将包括原始样本特征及其相应近邻的特征。

在本教程中,我们考虑无向边并为每个样本最多使用 3 个近邻,以使用计算图近邻来增强训练数据。

nsl.tools.pack_nbrs(

'/tmp/imdb/train_data.tfr',

'',

'/tmp/imdb/graph_99.tsv',

'/tmp/imdb/nsl_train_data.tfr',

add_undirected_edges=True,

max_nbrs=3)

基础模型

现在,我们已准备好构建无计算图正则化的基础模型。为了构建此模型,我们可以使用在构建计算图时使用的嵌入向量,也可以与分类任务一起学习新的嵌入向量。在此笔记本中,我们将使用后者。

全局变量

NBR_FEATURE_PREFIX = 'NL_nbr_'

NBR_WEIGHT_SUFFIX = '_weight'

超参数

我们将使用 HParams 的实例来包含用于训练和评估的各种超参数和常量。以下为各项内容的简要介绍:

num_classes:有 2 个 类 - 正面和负面。

max_seq_length:在本示例中,此参数为每条电影评论中考虑的最大单词数。

vocab_size:此参数为本示例考虑的词汇量。

distance_type:此参数为用于正则化样本与其近邻的距离指标。

graph_regularization_multiplier:此参数控制计算图正则化项在总体损失函数中的相对权重。

num_neighbors:用于计算图正则化的近邻数。此值必须小于或等于调用

nsl.tools.pack_nbrs时上文使用的max_nbrs参数。num_fc_units:神经网络的全连接层中的单元数。

train_epochs:训练周期数。

batch_size:用于训练和评估的批次大小。

eval_steps:认定评估完成之前需要处理的批次数。如果设置为

None,则将评估测试集中的所有实例。

class HParams(object):

"""Hyperparameters used for training."""

def __init__(self):

### dataset parameters

self.num_classes = 2

self.max_seq_length = 256

self.vocab_size = 10000

### neural graph learning parameters

self.distance_type = nsl.configs.DistanceType.L2

self.graph_regularization_multiplier = 0.1

self.num_neighbors = 2

### model architecture

self.num_embedding_dims = 16

self.num_lstm_dims = 64

self.num_fc_units = 64

### training parameters

self.train_epochs = 10

self.batch_size = 128

### eval parameters

self.eval_steps = None # All instances in the test set are evaluated.

HPARAMS = HParams()

准备数据

评论(整数数组)必须先转换为张量,然后才能馈入神经网络。可以通过以下两种方式完成此转换:

将数组转换为指示单词是否出现的

0和1向量,类似于独热编码。例如,序列[3, 5]将成为10000-维向量,除了索引3和5为 1 之外,其余均为 0。然后,使其成为我们网络中的第一层(Dense层),可以处理浮点向量数据。但是,此方法需要占用大量内存,需要num_words * num_reviews大小的矩阵。另外,我们可以填充数组以使其均具有相同的长度,然后创建形状为

max_length * num_reviews的整数张量。我们可以使用能够处理此形状的嵌入向量层作为网络中的第一层。

在本教程中,我们将使用第二种方法。

由于电影评论长度必须相同,因此我们将使用如下定义的 pad_sequence 函数来标准化长度。

def make_dataset(file_path, training=False):

"""Creates a `tf.data.TFRecordDataset`.

Args:

file_path: Name of the file in the `.tfrecord` format containing

`tf.train.Example` objects.

training: Boolean indicating if we are in training mode.

Returns:

An instance of `tf.data.TFRecordDataset` containing the `tf.train.Example`

objects.

"""

def pad_sequence(sequence, max_seq_length):

"""Pads the input sequence (a `tf.SparseTensor`) to `max_seq_length`."""

pad_size = tf.maximum([0], max_seq_length - tf.shape(sequence)[0])

padded = tf.concat(

[sequence.values,

tf.fill((pad_size), tf.cast(0, sequence.dtype))],

axis=0)

# The input sequence may be larger than max_seq_length. Truncate down if

# necessary.

return tf.slice(padded, [0], [max_seq_length])

def parse_example(example_proto):

"""Extracts relevant fields from the `example_proto`.

Args:

example_proto: An instance of `tf.train.Example`.

Returns:

A pair whose first value is a dictionary containing relevant features

and whose second value contains the ground truth labels.

"""

# The 'words' feature is a variable length word ID vector.

feature_spec = {

'words': tf.io.VarLenFeature(tf.int64),

'label': tf.io.FixedLenFeature((), tf.int64, default_value=-1),

}

# We also extract corresponding neighbor features in a similar manner to

# the features above during training.

if training:

for i in range(HPARAMS.num_neighbors):

nbr_feature_key = '{}{}_{}'.format(NBR_FEATURE_PREFIX, i, 'words')

nbr_weight_key = '{}{}{}'.format(NBR_FEATURE_PREFIX, i,

NBR_WEIGHT_SUFFIX)

feature_spec[nbr_feature_key] = tf.io.VarLenFeature(tf.int64)

# We assign a default value of 0.0 for the neighbor weight so that

# graph regularization is done on samples based on their exact number

# of neighbors. In other words, non-existent neighbors are discounted.

feature_spec[nbr_weight_key] = tf.io.FixedLenFeature(

[1], tf.float32, default_value=tf.constant([0.0]))

features = tf.io.parse_single_example(example_proto, feature_spec)

# Since the 'words' feature is a variable length word vector, we pad it to a

# constant maximum length based on HPARAMS.max_seq_length

features['words'] = pad_sequence(features['words'], HPARAMS.max_seq_length)

if training:

for i in range(HPARAMS.num_neighbors):

nbr_feature_key = '{}{}_{}'.format(NBR_FEATURE_PREFIX, i, 'words')

features[nbr_feature_key] = pad_sequence(features[nbr_feature_key],

HPARAMS.max_seq_length)

labels = features.pop('label')

return features, labels

dataset = tf.data.TFRecordDataset([file_path])

if training:

dataset = dataset.shuffle(10000)

dataset = dataset.map(parse_example)

dataset = dataset.batch(HPARAMS.batch_size)

return dataset

train_dataset = make_dataset('/tmp/imdb/nsl_train_data.tfr', True)

test_dataset = make_dataset('/tmp/imdb/test_data.tfr')

构建模型

神经网络是通过堆叠层创建的,这需要确定两个主要架构决策:

- 在模型中使用多少个层?

- 为每个层使用多少个隐藏单元?

在本示例中,输入数据由单词索引数组组成。要预测的标签为 0 或 1。

在本教程中,我们将使用双向 LSTM 作为基础模型。

# This function exists as an alternative to the bi-LSTM model used in this

# notebook.

def make_feed_forward_model():

"""Builds a simple 2 layer feed forward neural network."""

inputs = tf.keras.Input(

shape=(HPARAMS.max_seq_length,), dtype='int64', name='words')

embedding_layer = tf.keras.layers.Embedding(HPARAMS.vocab_size, 16)(inputs)

pooling_layer = tf.keras.layers.GlobalAveragePooling1D()(embedding_layer)

dense_layer = tf.keras.layers.Dense(16, activation='relu')(pooling_layer)

outputs = tf.keras.layers.Dense(1, activation='sigmoid')(dense_layer)

return tf.keras.Model(inputs=inputs, outputs=outputs)

def make_bilstm_model():

"""Builds a bi-directional LSTM model."""

inputs = tf.keras.Input(

shape=(HPARAMS.max_seq_length,), dtype='int64', name='words')

embedding_layer = tf.keras.layers.Embedding(HPARAMS.vocab_size,

HPARAMS.num_embedding_dims)(

inputs)

lstm_layer = tf.keras.layers.Bidirectional(

tf.keras.layers.LSTM(HPARAMS.num_lstm_dims))(

embedding_layer)

dense_layer = tf.keras.layers.Dense(

HPARAMS.num_fc_units, activation='relu')(

lstm_layer)

outputs = tf.keras.layers.Dense(1, activation='sigmoid')(dense_layer)

return tf.keras.Model(inputs=inputs, outputs=outputs)

# Feel free to use an architecture of your choice.

model = make_bilstm_model()

model.summary()

Model: "model" _________________________________________________________________ Layer (type) Output Shape Param # ================================================================= words (InputLayer) [(None, 256)] 0 _________________________________________________________________ embedding (Embedding) (None, 256, 16) 160000 _________________________________________________________________ bidirectional (Bidirectional (None, 128) 41472 _________________________________________________________________ dense (Dense) (None, 64) 8256 _________________________________________________________________ dense_1 (Dense) (None, 1) 65 ================================================================= Total params: 209,793 Trainable params: 209,793 Non-trainable params: 0 _________________________________________________________________

按顺序有效堆叠层以构建分类器:

- 第一层为接受整数编码词汇的

Input层。 - 第二层为

Embedding层,该层接受整数编码词汇并查找嵌入向量中的每个单词索引。在模型训练时会学习这些向量。向量会向输出数组添加维度。得到的维度为:(batch, sequence, embedding)。 - 接下来,双向 LSTM 层会为每个样本返回固定长度的输出向量。

- 此固定长度的输出向量穿过一个包含 64 个隐藏单元的全连接 (

Dense) 层。 - 最后一层与单个输出节点密集连接。利用

sigmoid激活函数,得出此值是 0 到 1 之间的浮点数,表示概率或置信度。

隐藏单元

上述模型在输入和输出之间有两个中间(或称“隐藏”)层(不包括 Embedding 层)。输出(单元、节点或神经元)的数量是层的表示空间的维度。换言之,即网络学习内部表示时允许的自由度。

模型的隐藏单元越多(更高维度的表示空间)和/或层越多,则网络可以学习的表示越复杂。但是,这会导致网络的计算开销增加,并且可能导致学习不需要的模式——提高在训练数据(而不是测试数据)上的性能的模式。这就叫过拟合。

损失函数和优化器

模型训练需要一个损失函数和一个优化器。由于这是二元分类问题,并且模型输出概率(具有 Sigmoid 激活的单一单元层),我们将使用 binary_crossentropy 损失函数。

model.compile(

optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

创建验证集

训练时,我们希望检验该模型在未见过的数据上的准确率。为此,需要将原始训练数据中的一部分分离出来,创建一个验证集。(为何现在不使用测试集?因为我们的目标是仅使用训练数据开发和调整模型,然后只使用一次测试数据来评估准确率)。

在本教程中,我们将大约 10% 的初始训练样本(25000 的 10%)作为用于训练的带标签数据,其余作为验证数据。由于初始训练/测试数据集以 50/50 的比例拆分(每个数据集 25000 个样本),因此我们现在的有效训练/验证/测试数据集拆分比例为 5/45/50。

请注意,“train_dataset”已进行批处理并且已打乱顺序。

validation_fraction = 0.9

validation_size = int(validation_fraction *

int(training_samples_count / HPARAMS.batch_size))

print(validation_size)

validation_dataset = train_dataset.take(validation_size)

train_dataset = train_dataset.skip(validation_size)

175

训练模型。

以 mini-batch 训练模型。训练时,基于验证集监测模型的损失和准确率:

history = model.fit(

train_dataset,

validation_data=validation_dataset,

epochs=HPARAMS.train_epochs,

verbose=1)

Epoch 1/10 /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/keras/engine/functional.py:585: UserWarning: Input dict contained keys ['NL_nbr_0_words', 'NL_nbr_1_words', 'NL_nbr_0_weight', 'NL_nbr_1_weight'] which did not match any model input. They will be ignored by the model. [n for n in tensors.keys() if n not in ref_input_names]) 21/21 [==============================] - 9s 146ms/step - loss: 0.6931 - accuracy: 0.5085 - val_loss: 0.6928 - val_accuracy: 0.4989 Epoch 2/10 21/21 [==============================] - 3s 119ms/step - loss: 0.6903 - accuracy: 0.5200 - val_loss: 0.6726 - val_accuracy: 0.5987 Epoch 3/10 21/21 [==============================] - 3s 115ms/step - loss: 0.6142 - accuracy: 0.6700 - val_loss: 0.5208 - val_accuracy: 0.7495 Epoch 4/10 21/21 [==============================] - 3s 119ms/step - loss: 0.5798 - accuracy: 0.7031 - val_loss: 0.5288 - val_accuracy: 0.7393 Epoch 5/10 21/21 [==============================] - 3s 117ms/step - loss: 0.4258 - accuracy: 0.8088 - val_loss: 0.4528 - val_accuracy: 0.7882 Epoch 6/10 21/21 [==============================] - 3s 117ms/step - loss: 0.3700 - accuracy: 0.8469 - val_loss: 0.3157 - val_accuracy: 0.8724 Epoch 7/10 21/21 [==============================] - 3s 118ms/step - loss: 0.3016 - accuracy: 0.8815 - val_loss: 0.3324 - val_accuracy: 0.8650 Epoch 8/10 21/21 [==============================] - 3s 118ms/step - loss: 0.2782 - accuracy: 0.8846 - val_loss: 0.2791 - val_accuracy: 0.8908 Epoch 9/10 21/21 [==============================] - 3s 115ms/step - loss: 0.2848 - accuracy: 0.8908 - val_loss: 0.3076 - val_accuracy: 0.8850 Epoch 10/10 21/21 [==============================] - 3s 116ms/step - loss: 0.2376 - accuracy: 0.9115 - val_loss: 0.2608 - val_accuracy: 0.9019

评估模型

现在,我们来看看模型的表现。模型将返回两个值:损失(表示错误的数字,值越低越好)和准确率。

results = model.evaluate(test_dataset, steps=HPARAMS.eval_steps)

print(results)

196/196 [==============================] - 3s 10ms/step - loss: 0.3607 - accuracy: 0.8494 [0.3607163727283478, 0.8494399785995483]

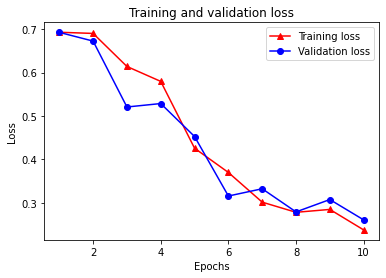

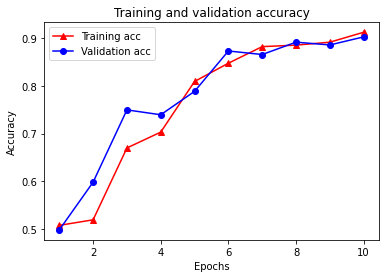

Create a graph of accuracy/loss over time

model.fit() 会返回包含一个字典的 History 对象。该字典包含训练过程中产生的所有信息:

history_dict = history.history

history_dict.keys()

dict_keys(['loss', 'accuracy', 'val_loss', 'val_accuracy'])

其中有四个条目:每个条目代表训练和验证过程中的一项监测指标。我们可以使用这些指标来绘制用于比较的训练和验证图表,以及训练和验证准确率图表:

acc = history_dict['accuracy']

val_acc = history_dict['val_accuracy']

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "-r^" is for solid red line with triangle markers.

plt.plot(epochs, loss, '-r^', label='Training loss')

# "-b0" is for solid blue line with circle markers.

plt.plot(epochs, val_loss, '-bo', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc='best')

plt.show()

plt.clf() # clear figure

plt.plot(epochs, acc, '-r^', label='Training acc')

plt.plot(epochs, val_acc, '-bo', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc='best')

plt.show()

请注意,训练损失会逐周期下降,而训练准确率则逐周期上升。使用梯度下降优化时,这是预期结果——它应该在每次迭代中最大限度减少所需的数量。

计算图正则化

现在,我们已准备好尝试使用上面构建的基础模型来执行计算图正则化。我们将使用神经结构学习框架提供的 GraphRegularization 包装器类来包装基础 (bi-LSTM) 模型以包含计算图正则化。训练和评估计算图正则化模型的其余步骤与基础模型相似。

创建计算图正则化模型

为了评估计算图正则化的增量收益,我们将创建一个新的基础模型实例。这是因为 model 已完成了几次训练迭代,重用这个经过训练的模型来创建计算图正则化模型对于 model 的比较而言,结果将有失偏颇。

# Build a new base LSTM model.

base_reg_model = make_bilstm_model()

# Wrap the base model with graph regularization.

graph_reg_config = nsl.configs.make_graph_reg_config(

max_neighbors=HPARAMS.num_neighbors,

multiplier=HPARAMS.graph_regularization_multiplier,

distance_type=HPARAMS.distance_type,

sum_over_axis=-1)

graph_reg_model = nsl.keras.GraphRegularization(base_reg_model,

graph_reg_config)

graph_reg_model.compile(

optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

训练模型。

graph_reg_history = graph_reg_model.fit(

train_dataset,

validation_data=validation_dataset,

epochs=HPARAMS.train_epochs,

verbose=1)

Epoch 1/10

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/tensorflow/python/framework/indexed_slices.py:449: UserWarning: Converting sparse IndexedSlices(IndexedSlices(indices=Tensor("gradient_tape/GraphRegularization/graph_loss/Reshape_1:0", shape=(None,), dtype=int32), values=Tensor("gradient_tape/GraphRegularization/graph_loss/Reshape:0", shape=(None, 1), dtype=float32), dense_shape=Tensor("gradient_tape/GraphRegularization/graph_loss/Cast:0", shape=(2,), dtype=int32))) to a dense Tensor of unknown shape. This may consume a large amount of memory.

"shape. This may consume a large amount of memory." % value)

21/21 [==============================] - 10s 198ms/step - loss: 0.6939 - accuracy: 0.5046 - scaled_graph_loss: 6.9759e-07 - val_loss: 0.6928 - val_accuracy: 0.4982

Epoch 2/10

21/21 [==============================] - 3s 147ms/step - loss: 0.6863 - accuracy: 0.5692 - scaled_graph_loss: 1.0529e-04 - val_loss: 0.6651 - val_accuracy: 0.6108

Epoch 3/10

21/21 [==============================] - 4s 149ms/step - loss: 0.6368 - accuracy: 0.6619 - scaled_graph_loss: 0.0021 - val_loss: 0.5921 - val_accuracy: 0.6766

Epoch 4/10

21/21 [==============================] - 4s 150ms/step - loss: 0.5739 - accuracy: 0.7512 - scaled_graph_loss: 0.0028 - val_loss: 0.4775 - val_accuracy: 0.7827

Epoch 5/10

21/21 [==============================] - 4s 150ms/step - loss: 0.4458 - accuracy: 0.8112 - scaled_graph_loss: 0.0125 - val_loss: 0.3648 - val_accuracy: 0.8467

Epoch 6/10

21/21 [==============================] - 4s 151ms/step - loss: 0.3891 - accuracy: 0.8392 - scaled_graph_loss: 0.0175 - val_loss: 0.3937 - val_accuracy: 0.8268

Epoch 7/10

21/21 [==============================] - 4s 152ms/step - loss: 0.3802 - accuracy: 0.8477 - scaled_graph_loss: 0.0167 - val_loss: 0.3406 - val_accuracy: 0.8646

Epoch 8/10

21/21 [==============================] - 4s 149ms/step - loss: 0.3556 - accuracy: 0.8608 - scaled_graph_loss: 0.0170 - val_loss: 0.3115 - val_accuracy: 0.8771

Epoch 9/10

21/21 [==============================] - 4s 155ms/step - loss: 0.2992 - accuracy: 0.8935 - scaled_graph_loss: 0.0220 - val_loss: 0.2904 - val_accuracy: 0.8891

Epoch 10/10

21/21 [==============================] - 4s 149ms/step - loss: 0.3050 - accuracy: 0.8919 - scaled_graph_loss: 0.0237 - val_loss: 0.2651 - val_accuracy: 0.8999

评估模型

graph_reg_results = graph_reg_model.evaluate(test_dataset, steps=HPARAMS.eval_steps)

print(graph_reg_results)

196/196 [==============================] - 3s 10ms/step - loss: 0.3608 - accuracy: 0.8490 [0.36080455780029297, 0.8490399718284607]

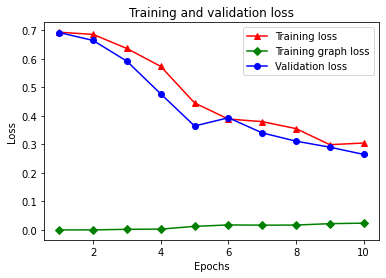

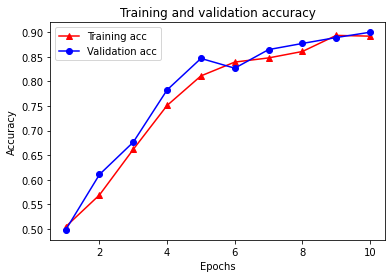

创建准确率/损失随时间变化的图表

graph_reg_history_dict = graph_reg_history.history

graph_reg_history_dict.keys()

dict_keys(['loss', 'accuracy', 'scaled_graph_loss', 'val_loss', 'val_accuracy'])

字典中共有五个条目:训练损失、训练准确率、训练计算图损失、验证损失和验证准确率。我们可以共同绘制这些条目以便比较。请注意,计算图损失仅在训练期间计算。

acc = graph_reg_history_dict['accuracy']

val_acc = graph_reg_history_dict['val_accuracy']

loss = graph_reg_history_dict['loss']

graph_loss = graph_reg_history_dict['scaled_graph_loss']

val_loss = graph_reg_history_dict['val_loss']

epochs = range(1, len(acc) + 1)

plt.clf() # clear figure

# "-r^" is for solid red line with triangle markers.

plt.plot(epochs, loss, '-r^', label='Training loss')

# "-gD" is for solid green line with diamond markers.

plt.plot(epochs, graph_loss, '-gD', label='Training graph loss')

# "-b0" is for solid blue line with circle markers.

plt.plot(epochs, val_loss, '-bo', label='Validation loss')

plt.title('Training and validation loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend(loc='best')

plt.show()

plt.clf() # clear figure

plt.plot(epochs, acc, '-r^', label='Training acc')

plt.plot(epochs, val_acc, '-bo', label='Validation acc')

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend(loc='best')

plt.show()

半监督学习的能力

当训练数据量很少时,半监督学习(更具体地说,即本教程背景中的计算图正则化)将非常实用。可通过利用训练样本之间的相似度来弥补缺乏训练数据的不足,这在传统的监督学习中是无法实现的。

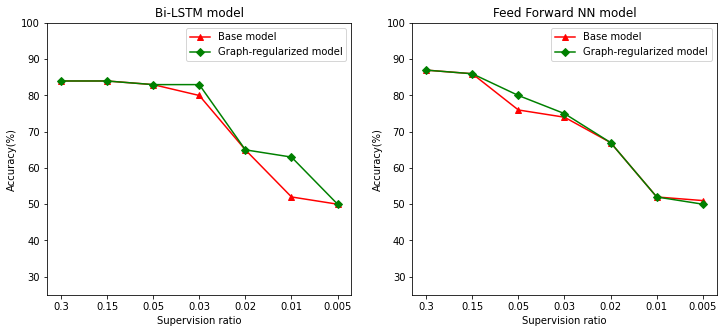

我们将监督比率定义为训练样本与样本总数(包括训练样本、验证样本和测试样本)之间的比率。在此笔记本中,我们使用了 0.05 的监督比率(即带标签数据的 5%)来训练基础模型和计算图正则化模型。我们在下面的单元中展示了监督比率对模型准确率的影响。

# Accuracy values for both the Bi-LSTM model and the feed forward NN model have

# been precomputed for the following supervision ratios.

supervision_ratios = [0.3, 0.15, 0.05, 0.03, 0.02, 0.01, 0.005]

model_tags = ['Bi-LSTM model', 'Feed Forward NN model']

base_model_accs = [[84, 84, 83, 80, 65, 52, 50], [87, 86, 76, 74, 67, 52, 51]]

graph_reg_model_accs = [[84, 84, 83, 83, 65, 63, 50],

[87, 86, 80, 75, 67, 52, 50]]

plt.clf() # clear figure

fig, axes = plt.subplots(1, 2)

fig.set_size_inches((12, 5))

for ax, model_tag, base_model_acc, graph_reg_model_acc in zip(

axes, model_tags, base_model_accs, graph_reg_model_accs):

# "-r^" is for solid red line with triangle markers.

ax.plot(base_model_acc, '-r^', label='Base model')

# "-gD" is for solid green line with diamond markers.

ax.plot(graph_reg_model_acc, '-gD', label='Graph-regularized model')

ax.set_title(model_tag)

ax.set_xlabel('Supervision ratio')

ax.set_ylabel('Accuracy(%)')

ax.set_ylim((25, 100))

ax.set_xticks(range(len(supervision_ratios)))

ax.set_xticklabels(supervision_ratios)

ax.legend(loc='best')

plt.show()

<Figure size 432x288 with 0 Axes>

可以观察到,随着监督比率的降低,模型的准确率也会降低。这一规律对于基础模型和计算图正则化模型均是如此,无论使用哪种模型架构。但请注意,在两种架构中,计算图正则化模型的性能均优于基础模型。特别是对于 Bi-LSTM 模型,当监督比率为 0.01 时,计算图正则化模型的准确率将比基础模型高 20% 左右。这主要归功于计算图正则化模型的半监督学习,除训练样本本身之外,半监督学习还使用了训练样本之间的结构相似度。

结论

我们演示了如何使用计算图正则化来实现利用神经结构学习 (NSL) 框架的情感分类(即使在输入不包含显式计算图时)。我们选取了 IMDB 电影评论的情感分类任务,为此,我们基于评论嵌入向量合成了相似度计算图。我们鼓励用户通过更改超参数、监督量以及使用不同的模型架构来进行进一步实验。