Bayesian model selection

View on TensorFlow.org View on TensorFlow.org

|

Run in Google Colab Run in Google Colab

|

View source on GitHub View source on GitHub

|

Download notebook Download notebook

|

Imports

import numpy as np

import tensorflow as tf

import tf_keras

import tensorflow_probability as tfp

from tensorflow_probability import distributions as tfd

from matplotlib import pylab as plt

%matplotlib inline

import scipy.stats

Task: changepoint detection with multiple changepoints

Consider a changepoint detection task: events happen at a rate that changes over time, driven by sudden shifts in the (unobserved) state of some system or process generating the data.

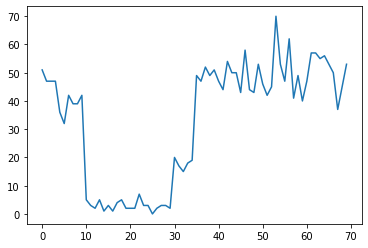

For example, we might observe a series of counts like the following:

true_rates = [40, 3, 20, 50]

true_durations = [10, 20, 5, 35]

observed_counts = tf.concat(

[tfd.Poisson(rate).sample(num_steps)

for (rate, num_steps) in zip(true_rates, true_durations)], axis=0)

plt.plot(observed_counts)

[<matplotlib.lines.Line2D at 0x7f7589bdae10>]

These could represent the number of failures in a datacenter, number of visitors to a webpage, number of packets on a network link, etc.

Note it's not entirely apparent how many distinct system regimes there are just from looking at the data. Can you tell where each of the three switchpoints occurs?

Known number of states

We'll first consider the (perhaps unrealistic) case where the number of unobserved states is known a priori. Here, we'd assume we know there are four latent states.

We model this problem as a switching (inhomogeneous) Poisson process: at each point in time, the number of events that occur is Poisson distributed, and the rate of events is determined by the unobserved system state \(z_t\):

\[x_t \sim \text{Poisson}(\lambda_{z_t})\]

The latent states are discrete: \(z_t \in \{1, 2, 3, 4\}\), so \(\lambda = [\lambda_1, \lambda_2, \lambda_3, \lambda_4]\) is a simple vector containing a Poisson rate for each state. To model the evolution of states over time, we'll define a simple transition model \(p(z_t | z_{t-1})\): let's say that at each step we stay in the previous state with some probability \(p\), and with probability \(1-p\) we transition to a different state uniformly at random. The initial state is also chosen uniformly at random, so we have:

\[ \begin{align*} z_1 &\sim \text{Categorical}\left(\left\{\frac{1}{4}, \frac{1}{4}, \frac{1}{4}, \frac{1}{4}\right\}\right)\\ z_t | z_{t-1} &\sim \text{Categorical}\left(\left\{\begin{array}{cc}p & \text{if } z_t = z_{t-1} \\ \frac{1-p}{4-1} & \text{otherwise}\end{array}\right\}\right) \end{align*}\]

These assumptions correspond to a hidden Markov model with Poisson emissions. We can encode them in TFP using tfd.HiddenMarkovModel. First, we define the transition matrix and the uniform prior on the initial state:

num_states = 4

initial_state_logits = tf.zeros([num_states]) # uniform distribution

daily_change_prob = 0.05

transition_probs = tf.fill([num_states, num_states],

daily_change_prob / (num_states - 1))

transition_probs = tf.linalg.set_diag(transition_probs,

tf.fill([num_states],

1 - daily_change_prob))

print("Initial state logits:\n{}".format(initial_state_logits))

print("Transition matrix:\n{}".format(transition_probs))

Initial state logits: [0. 0. 0. 0.] Transition matrix: [[0.95 0.01666667 0.01666667 0.01666667] [0.01666667 0.95 0.01666667 0.01666667] [0.01666667 0.01666667 0.95 0.01666667] [0.01666667 0.01666667 0.01666667 0.95 ]]

Next, we build a tfd.HiddenMarkovModel distribution, using a trainable variable to represent the rates associated with each system state. We parameterize the rates in log-space to ensure they are positive-valued.

# Define variable to represent the unknown log rates.

trainable_log_rates = tf.Variable(

tf.math.log(tf.reduce_mean(observed_counts)) +

tf.random.stateless_normal([num_states], seed=(42, 42)),

name='log_rates')

hmm = tfd.HiddenMarkovModel(

initial_distribution=tfd.Categorical(

logits=initial_state_logits),

transition_distribution=tfd.Categorical(probs=transition_probs),

observation_distribution=tfd.Poisson(log_rate=trainable_log_rates),

num_steps=len(observed_counts))

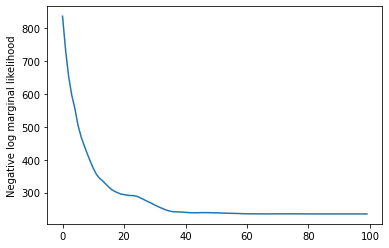

Finally, we define the model's total log density, including a weakly-informative LogNormal prior on the rates, and run an optimizer to compute the maximum a posteriori (MAP) fit to the observed count data.

rate_prior = tfd.LogNormal(5, 5)

def log_prob():

return (tf.reduce_sum(rate_prior.log_prob(tf.math.exp(trainable_log_rates))) +

hmm.log_prob(observed_counts))

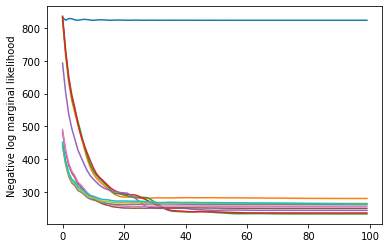

losses = tfp.math.minimize(

lambda: -log_prob(),

optimizer=tf_keras.optimizers.Adam(learning_rate=0.1),

num_steps=100)

plt.plot(losses)

plt.ylabel('Negative log marginal likelihood')

Text(0, 0.5, 'Negative log marginal likelihood')

rates = tf.exp(trainable_log_rates)

print("Inferred rates: {}".format(rates))

print("True rates: {}".format(true_rates))

Inferred rates: [ 2.8302798 49.58499 41.928307 17.35112 ] True rates: [40, 3, 20, 50]

It worked! Note that the latent states in this model are identifiable only up to permutation, so the rates we recovered are in a different order, and there's a bit of noise, but generally they match pretty well.

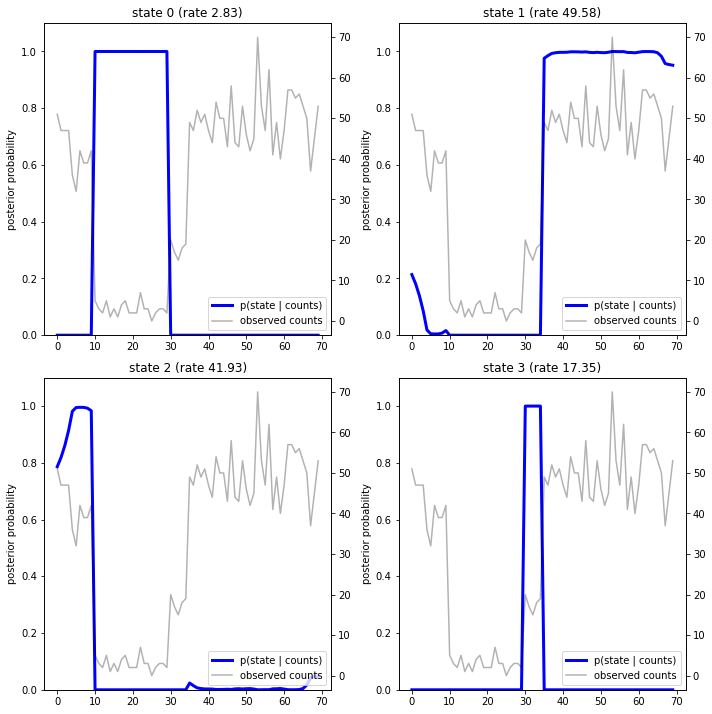

Recovering the state trajectory

Now that we've fit the model, we might want to reconstruct which state the model believes the system was in at each timestep.

This is a posterior inference task: given the observed counts \(x_{1:T}\) and model parameters (rates) \(\lambda\), we want to infer the sequence of discrete latent variables, following the posterior distribution \(p(z_{1:T} | x_{1:T}, \lambda)\). In a hidden Markov model, we can efficiently compute marginals and other properties of this distribution using standard message-passing algorithms. In particular, the posterior_marginals method will efficiently compute (using the forward-backward algorithm) the marginal probability distribution \(p(Z_t = z_t | x_{1:T})\) over the discrete latent state \(Z_t\) at each timestep \(t\).

# Runs forward-backward algorithm to compute marginal posteriors.

posterior_dists = hmm.posterior_marginals(observed_counts)

posterior_probs = posterior_dists.probs_parameter().numpy()

Plotting the posterior probabilities, we recover the model's "explanation" of the data: at which points in time is each state active?

def plot_state_posterior(ax, state_posterior_probs, title):

ln1 = ax.plot(state_posterior_probs, c='blue', lw=3, label='p(state | counts)')

ax.set_ylim(0., 1.1)

ax.set_ylabel('posterior probability')

ax2 = ax.twinx()

ln2 = ax2.plot(observed_counts, c='black', alpha=0.3, label='observed counts')

ax2.set_title(title)

ax2.set_xlabel("time")

lns = ln1+ln2

labs = [l.get_label() for l in lns]

ax.legend(lns, labs, loc=4)

ax.grid(True, color='white')

ax2.grid(False)

fig = plt.figure(figsize=(10, 10))

plot_state_posterior(fig.add_subplot(2, 2, 1),

posterior_probs[:, 0],

title="state 0 (rate {:.2f})".format(rates[0]))

plot_state_posterior(fig.add_subplot(2, 2, 2),

posterior_probs[:, 1],

title="state 1 (rate {:.2f})".format(rates[1]))

plot_state_posterior(fig.add_subplot(2, 2, 3),

posterior_probs[:, 2],

title="state 2 (rate {:.2f})".format(rates[2]))

plot_state_posterior(fig.add_subplot(2, 2, 4),

posterior_probs[:, 3],

title="state 3 (rate {:.2f})".format(rates[3]))

plt.tight_layout()

In this (simple) case, we see that the model is usually quite confident: at most timesteps it assigns essentially all probability mass to a single one of the four states. Luckily, the explanations look reasonable!

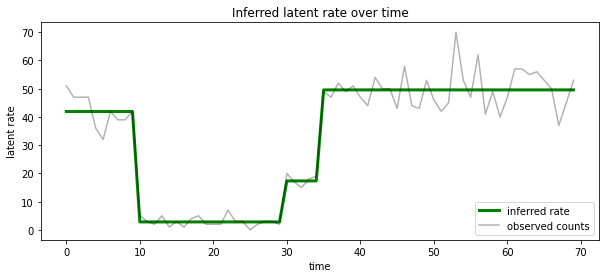

We can also visualize this posterior in terms of the rate associated with the most likely latent state at each timestep, condensing the probabilistic posterior into a single explanation:

most_probable_states = hmm.posterior_mode(observed_counts)

most_probable_rates = tf.gather(rates, most_probable_states)

fig = plt.figure(figsize=(10, 4))

ax = fig.add_subplot(1, 1, 1)

ax.plot(most_probable_rates, c='green', lw=3, label='inferred rate')

ax.plot(observed_counts, c='black', alpha=0.3, label='observed counts')

ax.set_ylabel("latent rate")

ax.set_xlabel("time")

ax.set_title("Inferred latent rate over time")

ax.legend(loc=4)

<matplotlib.legend.Legend at 0x7f75849e70f0>

Unknown number of states

In real problems, we may not know the 'true' number of states in the system we're modeling. This may not always be a concern: if you don't particularly care about the identities of the unknown states, you could just run a model with more states than you know the model will need, and learn (something like) a bunch of duplicate copies of the actual states. But let's assume you do care about inferring the 'true' number of latent states.

We can view this as a case of Bayesian model selection: we have a set of candidate models, each with a different number of latent states, and we want to choose the one that is most likely to have generated the observed data. To do this, we compute the marginal likelihood of the data under each model (we could also add a prior on the models themselves, but that won't be necessary in this analysis; the Bayesian Occam's razor turns out to be sufficient to encode a preference towards simpler models).

Unfortunately, the true marginal likelihood, which integrates over both the discrete states \(z_{1:T}\) and the (vector of) rate parameters \(\lambda\), \(p(x_{1:T}) = \int p(x_{1:T}, z_{1:T}, \lambda) dz d\lambda,\) is not tractable for this model. For convenience, we'll approximate it using a so-called "empirical Bayes" or "type II maximum likelihood" estimate: instead of fully integrating out the (unknown) rate parameters \(\lambda\) associated with each system state, we'll optimize over their values:

\[\tilde{p}(x_{1:T}) = \max_\lambda \int p(x_{1:T}, z_{1:T}, \lambda) dz\]

This approximation may overfit, i.e., it will prefer more complex models than the true marginal likelihood would. We could consider more faithful approximations, e.g., optimizing a variational lower bound, or using a Monte Carlo estimator such as annealed importance sampling; these are (sadly) beyond the scope of this notebook. (For more on Bayesian model selection and approximations, chapter 7 of the excellent Machine Learning: a Probabilistic Perspective is a good reference.)

In principle, we could do this model comparison simply by rerunning the optimization above many times with different values of num_states, but that would be a lot of work. Here we'll show how to consider multiple models in parallel, using TFP's batch_shape mechanism for vectorization.

Transition matrix and initial state prior: rather than building a single model description, now we'll build a batch of transition matrices and prior logits, one for each candidate model up to max_num_states. For easy batching we'll need to ensure that all computations have the same 'shape': this must correspond to the dimensions of the largest model we'll fit. To handle smaller models, we can 'embed' their descriptions in the topmost dimensions of the state space, effectively treating the remaining dimensions as dummy states that are never used.

max_num_states = 10

def build_latent_state(num_states, max_num_states, daily_change_prob=0.05):

# Give probability exp(-100) ~= 0 to states outside of the current model.

active_states_mask = tf.concat([tf.ones([num_states]),

tf.zeros([max_num_states - num_states])],

axis=0)

initial_state_logits = -100. * (1 - active_states_mask)

# Build a transition matrix that transitions only within the current

# `num_states` states.

transition_probs = tf.fill([num_states, num_states],

0. if num_states == 1

else daily_change_prob / (num_states - 1))

padded_transition_probs = tf.eye(max_num_states) + tf.pad(

tf.linalg.set_diag(transition_probs,

tf.fill([num_states], - daily_change_prob)),

paddings=[(0, max_num_states - num_states),

(0, max_num_states - num_states)])

return initial_state_logits, padded_transition_probs

# For each candidate model, build the initial state prior and transition matrix.

batch_initial_state_logits = []

batch_transition_probs = []

for num_states in range(1, max_num_states+1):

initial_state_logits, transition_probs = build_latent_state(

num_states=num_states,

max_num_states=max_num_states)

batch_initial_state_logits.append(initial_state_logits)

batch_transition_probs.append(transition_probs)

batch_initial_state_logits = tf.stack(batch_initial_state_logits)

batch_transition_probs = tf.stack(batch_transition_probs)

print("Shape of initial_state_logits: {}".format(batch_initial_state_logits.shape))

print("Shape of transition probs: {}".format(batch_transition_probs.shape))

print("Example initial state logits for num_states==3:\n{}".format(batch_initial_state_logits[2, :]))

print("Example transition_probs for num_states==3:\n{}".format(batch_transition_probs[2, :, :]))

Shape of initial_state_logits: (10, 10) Shape of transition probs: (10, 10, 10) Example initial state logits for num_states==3: [ -0. -0. -0. -100. -100. -100. -100. -100. -100. -100.] Example transition_probs for num_states==3: [[0.95 0.025 0.025 0. 0. 0. 0. 0. 0. 0. ] [0.025 0.95 0.025 0. 0. 0. 0. 0. 0. 0. ] [0.025 0.025 0.95 0. 0. 0. 0. 0. 0. 0. ] [0. 0. 0. 1. 0. 0. 0. 0. 0. 0. ] [0. 0. 0. 0. 1. 0. 0. 0. 0. 0. ] [0. 0. 0. 0. 0. 1. 0. 0. 0. 0. ] [0. 0. 0. 0. 0. 0. 1. 0. 0. 0. ] [0. 0. 0. 0. 0. 0. 0. 1. 0. 0. ] [0. 0. 0. 0. 0. 0. 0. 0. 1. 0. ] [0. 0. 0. 0. 0. 0. 0. 0. 0. 1. ]]

Now we proceed similarly as above. This time we'll use an extra batch dimension in trainable_rates to separately fit the rates for each model under consideration.

trainable_log_rates = tf.Variable(

tf.fill([batch_initial_state_logits.shape[0], max_num_states],

tf.math.log(tf.reduce_mean(observed_counts))) +

tf.random.stateless_normal([1, max_num_states], seed=(42, 42)),

name='log_rates')

hmm = tfd.HiddenMarkovModel(

initial_distribution=tfd.Categorical(

logits=batch_initial_state_logits),

transition_distribution=tfd.Categorical(probs=batch_transition_probs),

observation_distribution=tfd.Poisson(log_rate=trainable_log_rates),

num_steps=len(observed_counts))

print("Defined HMM with batch shape: {}".format(hmm.batch_shape))

Defined HMM with batch shape: (10,)

In computing the total log prob, we are careful to sum over only the priors for the rates actually used by each model component:

rate_prior = tfd.LogNormal(5, 5)

def log_prob():

prior_lps = rate_prior.log_prob(tf.math.exp(trainable_log_rates))

prior_lp = tf.stack(

[tf.reduce_sum(prior_lps[i, :i+1]) for i in range(max_num_states)])

return prior_lp + hmm.log_prob(observed_counts)

Now we optimize the batch objective we've constructed, fitting all candidate models simultaneously:

losses = tfp.math.minimize(

lambda: -log_prob(),

optimizer=tf_keras.optimizers.Adam(0.1),

num_steps=100)

plt.plot(losses)

plt.ylabel('Negative log marginal likelihood')

Text(0, 0.5, 'Negative log marginal likelihood')

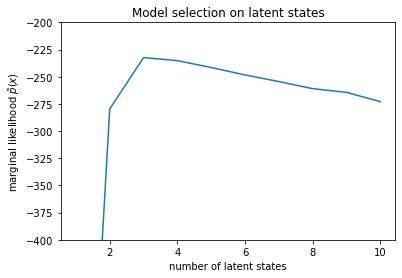

num_states = np.arange(1, max_num_states+1)

plt.plot(num_states, -losses[-1])

plt.ylim([-400, -200])

plt.ylabel("marginal likelihood $\\tilde{p}(x)$")

plt.xlabel("number of latent states")

plt.title("Model selection on latent states")

Text(0.5, 1.0, 'Model selection on latent states')

Examining the likelihoods, we see that the (approximate) marginal likelihood tends to prefer a three-state model. This seems quite plausible -- the 'true' model had four states, but from just looking at the data it's hard to rule out a three-state explanation.

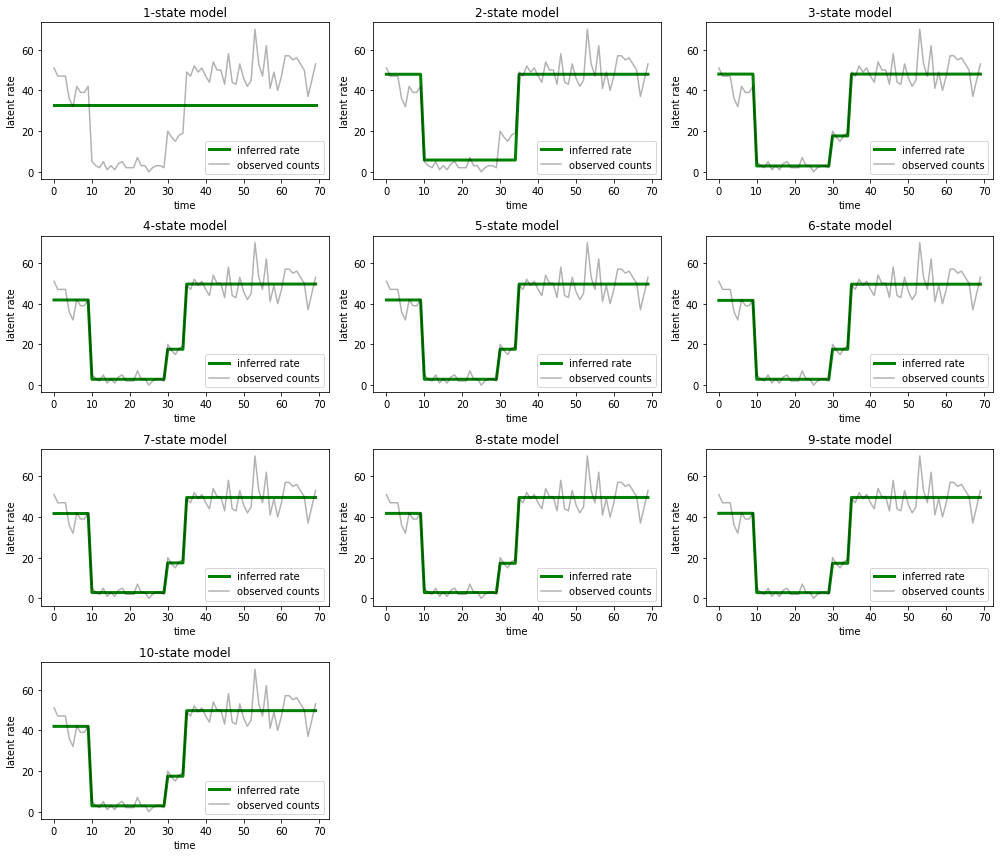

We can also extract the rates fit for each candidate model:

rates = tf.exp(trainable_log_rates)

for i, learned_model_rates in enumerate(rates):

print("rates for {}-state model: {}".format(i+1, learned_model_rates[:i+1]))

rates for 1-state model: [32.968506] rates for 2-state model: [ 5.789209 47.948917] rates for 3-state model: [ 2.841977 48.057507 17.958897] rates for 4-state model: [ 2.8302798 49.585037 41.928406 17.351114 ] rates for 5-state model: [17.399694 77.83679 41.975216 49.62771 2.8256145] rates for 6-state model: [41.63677 77.20768 49.570934 49.557076 17.630419 2.8713436] rates for 7-state model: [41.711704 76.405945 49.581184 49.561283 17.451889 2.8722699 17.43608 ] rates for 8-state model: [41.771793 75.41323 49.568714 49.591846 17.2523 17.247969 17.231388 2.830598] rates for 9-state model: [41.83378 74.50916 49.619488 49.622494 2.8369408 17.254414 17.21532 2.5904858 17.252514 ] rates for 10-state model: [4.1886074e+01 7.3912338e+01 4.1940136e+01 4.9652588e+01 2.8485537e+00 1.7433832e+01 6.7564294e-02 1.9590002e+00 1.7430998e+01 7.8838937e-02]

And plot the explanations each model provides for the data:

most_probable_states = hmm.posterior_mode(observed_counts)

fig = plt.figure(figsize=(14, 12))

for i, learned_model_rates in enumerate(rates):

ax = fig.add_subplot(4, 3, i+1)

ax.plot(tf.gather(learned_model_rates, most_probable_states[i]), c='green', lw=3, label='inferred rate')

ax.plot(observed_counts, c='black', alpha=0.3, label='observed counts')

ax.set_ylabel("latent rate")

ax.set_xlabel("time")

ax.set_title("{}-state model".format(i+1))

ax.legend(loc=4)

plt.tight_layout()

It's easy to see how the one-, two-, and (more subtly) three-state models provide inadequate explanations. Interestingly, all models above four states provide essentially the same explanation! This is likely because our 'data' is relatively clean and leaves little room for alternative explanations; on messier real-world data we would expect the higher-capacity models to provide progressively better fits to the data, with some tradeoff point where the improved fit is outweighted by model complexity.

Extensions

The models in this notebook could be straightforwardly extended in many ways. For example:

- allowing latent states to have different probabilities (some states may be common vs rare)

- allowing nonuniform transitions between latent states (e.g., to learn that a machine crash is usually followed by a system reboot is usually followed by a period of good performance, etc.)

- other emission models, e.g.

NegativeBinomialto model varying dispersions in count data, or continous distributions such asNormalfor real-valued data.