Introduction

Decision Forests (DF) are a family of Machine Learning algorithms for supervised classification, regression and ranking. As the name suggests, DFs use decision trees as a building block. Today, the two most popular DF training algorithms are Random Forests and Gradient Boosted Decision Trees.

TensorFlow Decision Forests (TF-DF) is a library for the training, evaluation, interpretation and inference of Decision Forest models.

In this tutorial, you will learn how to:

- Train a multi-class classification Random Forest on a dataset containing numerical, categorical and missing features.

- Evaluate the model on a test dataset.

- Prepare the model for TensorFlow Serving.

- Examine the overall structure of the model and the importance of each feature.

- Re-train the model with a different learning algorithm (Gradient Boosted Decision Trees).

- Use a different set of input features.

- Change the hyperparameters of the model.

- Preprocess the features.

- Train a model for regression.

Detailed documentation is available in the user manual. The example directory contains other end-to-end examples.

Installing TensorFlow Decision Forests

Install TF-DF by running the following cell.

pip install tensorflow_decision_forests

Wurlitzer is needed to display the detailed training logs in Colabs (when using verbose=2 in the model constructor).

pip install wurlitzer

Importing libraries

import os

# Keep using Keras 2

os.environ['TF_USE_LEGACY_KERAS'] = '1'

import tensorflow_decision_forests as tfdf

import numpy as np

import pandas as pd

import tensorflow as tf

import tf_keras

import math


The hidden code cell limits the output height in Colab.

from IPython.core.magic import register_line_magic

from IPython.display import Javascript

from IPython.display import display as ipy_display

# Some of the model training logs can cover the full

# screen if not compressed to a smaller viewport.

# This magic allows setting a max height for a cell.

@register_line_magic

def set_cell_height(size):

  ipy_display(

      Javascript("google.colab.output.setIframeHeight(0, true, {maxHeight: " +

                 str(size) + "})"))

# Check the version of TensorFlow Decision Forests

print("Found TensorFlow Decision Forests v" + tfdf.__version__)

Found TensorFlow Decision Forests v1.12.0

Training a Random Forest model

In this section, we train, evaluate, analyse and export a multi-class classification Random Forest trained on the Palmer's Penguins dataset.

Load the dataset and convert it into a tf.data.Dataset

This dataset is very small (344 examples) and stored as a .csv-like file. Therefore, use Pandas to load it.

Let's download the dataset and load it:

# Download the dataset

!wget -q https://storage.googleapis.com/download.tensorflow.org/data/palmer_penguins/penguins.csv -O /tmp/penguins.csv

# Load a dataset into a Pandas Dataframe.

dataset_df = pd.read_csv("/tmp/penguins.csv")

# Display the first 3 examples.

dataset_df.head(3)

The dataset contains a mix of numerical (e.g. bill_depth_mm), categorical (e.g. island) and missing features. TF-DF supports all these feature types natively, unlike neural-network-based models, so there is no need for preprocessing such as one-hot encoding, normalization or an extra is_present feature.

Labels are a bit different: Keras metrics expect integers. The label (species) is stored as a string, so let's convert it into an integer.

# Encode the categorical labels as integers.

#

# Details:

# This stage is necessary if your classification label is represented as a

# string since Keras expects integer classification labels.

# When using `pd_dataframe_to_tf_dataset` (see below), this step can be skipped.

# Name of the label column.

label = "species"

classes = dataset_df[label].unique().tolist()

print(f"Label classes: {classes}")

dataset_df[label] = dataset_df[label].map(classes.index)

Label classes: ['Adelie', 'Gentoo', 'Chinstrap']
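The encoding above assigns each class an integer in order of first appearance. As a minimal sketch in pure Python (the `encode_labels` helper and the five-row sample are hypothetical, not part of TF-DF or the dataset), the same mapping and its inverse look like this:

```python
def encode_labels(values):
    """Returns (classes, encoded) with classes ordered by first appearance."""
    classes = []
    for value in values:
        if value not in classes:
            classes.append(value)
    # A dictionary lookup avoids the repeated linear scan of `classes.index`.
    class_to_index = {name: index for index, name in enumerate(classes)}
    encoded = [class_to_index[value] for value in values]
    return classes, encoded


# Hypothetical mini-sample of the `species` column.
species = ["Adelie", "Adelie", "Gentoo", "Chinstrap", "Gentoo"]
classes, encoded = encode_labels(species)

# Invert the mapping to turn integer predictions back into class names.
decoded = [classes[index] for index in encoded]

print(classes)
print(encoded)
```

Keeping the `classes` list around is what makes it possible to report predictions by name later on.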

Next split the dataset into training and testing:

# Split the dataset into a training and a testing dataset.

def split_dataset(dataset, test_ratio=0.30):

  """Splits a pandas dataframe in two."""

  test_indices = np.random.rand(len(dataset)) < test_ratio

  return dataset[~test_indices], dataset[test_indices]

train_ds_pd, test_ds_pd = split_dataset(dataset_df)

print("{} examples in training, {} examples for testing.".format(

len(train_ds_pd), len(test_ds_pd)))

250 examples in training, 94 examples for testing.
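Because the split draws fresh random numbers on every run, the exact training and testing sizes change from one execution to the next. The sketch below shows one way to make such a split reproducible, assuming a fixed seed is acceptable; `split_indices` and the seed value are illustrative choices, and the standard library `random` module stands in for NumPy so the snippet runs on its own.

```python
import random


def split_indices(num_examples, test_ratio=0.30, seed=1234):
    """Assigns each row index to train or test with a seeded generator."""
    rng = random.Random(seed)
    train, test = [], []
    for index in range(num_examples):
        # Each row independently lands in the test set with prob. test_ratio.
        (test if rng.random() < test_ratio else train).append(index)
    return train, test


train_idx, test_idx = split_indices(344)
print(len(train_idx), "training rows,", len(test_idx), "testing rows")
```

With a dataframe, the resulting index lists could be passed to `dataset.iloc[...]` to select the rows. Seeding NumPy's generator before calling the tutorial's own `split_dataset` should have a similar effect.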

And finally, convert the pandas dataframes (pd.DataFrame) into TensorFlow datasets (tf.data.Dataset):

train_ds = tfdf.keras.pd_dataframe_to_tf_dataset(train_ds_pd, label=label)

test_ds = tfdf.keras.pd_dataframe_to_tf_dataset(test_ds_pd, label=label)


Notes: Recall that pd_dataframe_to_tf_dataset converts string labels to integers if necessary.

If you want to create the tf.data.Dataset yourself, there are a couple of things to remember:

- The learning algorithms work with a one-epoch dataset and without shuffling.

- The batch size does not impact the training algorithm, but a small value might slow down reading the dataset.

Train the model

%set_cell_height 300

# Specify the model.

model_1 = tfdf.keras.RandomForestModel(verbose=2)

# Train the model.

model_1.fit(train_ds)

<IPython.core.display.Javascript object>

Warning: The `num_threads` constructor argument is not set and the number of CPU is os.cpu_count()=32 > 32. Setting num_threads to 32. Set num_threads manually to use more than 32 cpus.


Use /tmpfs/tmp/tmpauvzz185 as temporary training directory

Reading training dataset...

Training tensor examples:

Features: {'island': <tf.Tensor 'data:0' shape=(None,) dtype=string>, 'bill_length_mm': <tf.Tensor 'data_1:0' shape=(None,) dtype=float64>, 'bill_depth_mm': <tf.Tensor 'data_2:0' shape=(None,) dtype=float64>, 'flipper_length_mm': <tf.Tensor 'data_3:0' shape=(None,) dtype=float64>, 'body_mass_g': <tf.Tensor 'data_4:0' shape=(None,) dtype=float64>, 'sex': <tf.Tensor 'data_5:0' shape=(None,) dtype=string>, 'year': <tf.Tensor 'data_6:0' shape=(None,) dtype=int64>}

Label: Tensor("data_7:0", shape=(None,), dtype=int64)

Weights: None

Normalized tensor features:

{'island': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data:0' shape=(None,) dtype=string>), 'bill_length_mm': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast:0' shape=(None,) dtype=float32>), 'bill_depth_mm': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_1:0' shape=(None,) dtype=float32>), 'flipper_length_mm': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_2:0' shape=(None,) dtype=float32>), 'body_mass_g': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_3:0' shape=(None,) dtype=float32>), 'sex': SemanticTensor(semantic=<Semantic.CATEGORICAL: 2>, tensor=<tf.Tensor 'data_5:0' shape=(None,) dtype=string>), 'year': SemanticTensor(semantic=<Semantic.NUMERICAL: 1>, tensor=<tf.Tensor 'Cast_4:0' shape=(None,) dtype=float32>)}

Training dataset read in 0:00:03.851266. Found 250 examples.

Training model...

Standard output detected as not visible to the user e.g. running in a notebook. Creating a training log redirection. If training gets stuck, try calling tfdf.keras.set_training_logs_redirection(False).

Model trained in 0:00:00.058962

Compiling model...

Model compiled.

<tf_keras.src.callbacks.History at 0x7f85d804e670>

Remarks

- No input features are specified. Therefore, all the columns will be used as input features except for the label. The features used by the model are shown in the training logs and in the model.summary().

- DFs natively consume numerical, categorical, categorical-set features and missing values. Numerical features do not need to be normalized. Categorical string values do not need to be encoded in a dictionary.

- No training hyper-parameters are specified. Therefore the default hyper-parameters will be used. Default hyper-parameters provide reasonable results in most situations.

- Calling compile on the model before the fit is optional. Compile can be used to provide extra evaluation metrics.

- Training algorithms do not need validation datasets. If a validation dataset is provided, it will only be used to show metrics.

- Tweak the verbose argument to RandomForestModel to control the amount of displayed training logs. Set verbose=0 to hide most of the logs. Set verbose=2 to show all the logs.

Evaluate the model

Let's evaluate our model on the test dataset.

model_1.compile(metrics=["accuracy"])

evaluation = model_1.evaluate(test_ds, return_dict=True)

print()

for name, value in evaluation.items():

print(f"{name}: {value:.4f}")

1/1 [==============================] - 0s 290ms/step - loss: 0.0000e+00 - accuracy: 1.0000

loss: 0.0000

accuracy: 1.0000

Remark: The test accuracy is close to the Out-of-bag accuracy shown in the training logs.

See the Model Self Evaluation section below for more evaluation methods.
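To make the accuracy metric concrete, here is a small sketch of how it can be computed from per-class probabilities: pick the most probable class for each example and count the matches with the labels. The probability rows and labels below are made up for illustration, and `accuracy_from_probabilities` is a hypothetical helper, not a Keras or TF-DF function.

```python
def accuracy_from_probabilities(probabilities, labels):
    """Fraction of examples whose most probable class equals the label."""
    correct = 0
    for row, label in zip(probabilities, labels):
        # Index of the largest probability, i.e. the predicted class.
        predicted = max(range(len(row)), key=row.__getitem__)
        if predicted == label:
            correct += 1
    return correct / len(labels)


# Hypothetical predictions for four examples over the three classes.
probabilities = [
    [0.9, 0.1, 0.0],
    [0.2, 0.7, 0.1],
    [0.1, 0.3, 0.6],
    [0.5, 0.4, 0.1],
]
labels = [0, 1, 2, 1]

accuracy = accuracy_from_probabilities(probabilities, labels)
print(f"accuracy: {accuracy:.4f}")
```

On a test set as small as this one, a single misclassified example moves the accuracy by about one percentage point, so small differences between runs should be read with care.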

Prepare this model for TensorFlow Serving

Export the model to the SavedModel format for later reuse, e.g. with TensorFlow Serving.

model_1.save("/tmp/my_saved_model")

INFO:tensorflow:Assets written to: /tmp/my_saved_model/assets

Plot the model

Plotting a decision tree and following its first branches helps in learning about decision forests. In some cases, plotting a model can even be used for debugging.

Because of differences in the way they are trained, some models are more interesting to plot than others. Because of the noise injected during training and the depth of the trees, plotting a Random Forest is less informative than plotting a CART or the first tree of a Gradient Boosted Tree.

Nevertheless, let's plot the first tree of our Random Forest model:

tfdf.model_plotter.plot_model_in_colab(model_1, tree_idx=0, max_depth=3)

The root node on the left contains the first condition (bill_depth_mm >= 16.55), the number of examples (240) and the label distribution (the red-blue-green bar).

Examples for which bill_depth_mm >= 16.55 evaluates to true are routed to the green path. The others are routed to the red path.

The deeper the node, the purer it becomes, i.e. its label distribution is biased toward a subset of classes.
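The routing described above can be sketched in a few lines of pure Python. Only the root condition (bill_depth_mm >= 16.55) comes from the plot; the second condition, its threshold and all leaf distributions are hypothetical values chosen for illustration, and the dictionary layout is not TF-DF's internal representation. The sketch also ignores missing values.

```python
def route(node, example):
    """Follows the conditions from the root until a leaf is reached."""
    while "leaf" not in node:
        value = example[node["feature"]]
        # A true condition follows the positive (green) branch.
        if value >= node["threshold"]:
            node = node["positive"]
        else:
            node = node["negative"]
    return node["leaf"]


tree = {
    "feature": "bill_depth_mm",
    "threshold": 16.55,  # root condition shown in the plot
    "positive": {
        "feature": "bill_length_mm",
        "threshold": 43.0,  # hypothetical threshold
        "positive": {"leaf": [0.05, 0.0, 0.95]},
        "negative": {"leaf": [0.98, 0.0, 0.02]},
    },
    "negative": {"leaf": [0.02, 0.97, 0.01]},
}

example = {"bill_depth_mm": 18.7, "bill_length_mm": 39.1}
distribution = route(tree, example)
print(distribution)
```

Each leaf stores a label distribution, which is why deeper and purer nodes translate into more confident predictions.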

Model structure and feature importance

The overall structure of the model is shown with .summary(). You will see:

- Type: The learning algorithm used to train the model (Random Forest in our case).

- Task: The problem solved by the model (Classification in our case).

- Input Features: The input features of the model.

- Variable Importance: Different measures of the importance of each feature for the model.

- Out-of-bag evaluation: The out-of-bag evaluation of the model. This is a cheap and efficient alternative to cross-validation.

- Number of {trees, nodes} and other metrics: Statistics about the structure of the decision forest.

Remark: The summary's content depends on the learning algorithm (e.g. Out-of-bag is only available for Random Forest) and the hyper-parameters (e.g. the mean-decrease-in-accuracy variable importance can be disabled in the hyper-parameters).

%set_cell_height 300

model_1.summary()

<IPython.core.display.Javascript object>

Model: "random_forest_model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

=================================================================

Total params: 1 (1.00 Byte)

Trainable params: 0 (0.00 Byte)

Non-trainable params: 1 (1.00 Byte)

_________________________________________________________________

Type: "RANDOM_FOREST"

Task: CLASSIFICATION

Label: "__LABEL"

Input Features (7):

bill_depth_mm

bill_length_mm

body_mass_g

flipper_length_mm

island

sex

year

No weights

Variable Importance: INV_MEAN_MIN_DEPTH:

1. "flipper_length_mm" 0.435977 ################

2. "bill_length_mm" 0.396917 ############

3. "bill_depth_mm" 0.327861 #######

4. "island" 0.309749 ######

5. "body_mass_g" 0.258320 ##

6. "sex" 0.234113

7. "year" 0.231849

Variable Importance: NUM_AS_ROOT:

1. "flipper_length_mm" 151.000000 ################

2. "bill_depth_mm" 75.000000 #######

3. "bill_length_mm" 58.000000 #####

4. "island" 13.000000 #

5. "body_mass_g" 3.000000

Variable Importance: NUM_NODES:

1. "bill_length_mm" 707.000000 ################

2. "bill_depth_mm" 416.000000 #########

3. "flipper_length_mm" 394.000000 ########

4. "island" 297.000000 ######

5. "body_mass_g" 290.000000 ######

6. "sex" 52.000000

7. "year" 19.000000

Variable Importance: SUM_SCORE:

1. "flipper_length_mm" 24627.319999 ################

2. "bill_length_mm" 24086.337571 ###############

3. "bill_depth_mm" 14073.983456 #########

4. "island" 10081.743781 ######

5. "body_mass_g" 2495.079835 #

6. "sex" 442.453467

7. "year" 56.784963

Winner takes all: true

Out-of-bag evaluation: accuracy:0.98 logloss:0.0970684

Number of trees: 300

Total number of nodes: 4650

Number of nodes by tree:

Count: 300 Average: 15.5 StdDev: 3.15859

Min: 9 Max: 27 Ignored: 0

----------------------------------------------

[ 9, 10) 1 0.33% 0.33%

[ 10, 11) 0 0.00% 0.33%

[ 11, 12) 29 9.67% 10.00% ###

[ 12, 13) 0 0.00% 10.00%

[ 13, 14) 72 24.00% 34.00% ########

[ 14, 15) 0 0.00% 34.00%

[ 15, 16) 94 31.33% 65.33% ##########

[ 16, 17) 0 0.00% 65.33%

[ 17, 18) 48 16.00% 81.33% #####

[ 18, 19) 0 0.00% 81.33%

[ 19, 20) 28 9.33% 90.67% ###

[ 20, 21) 0 0.00% 90.67%

[ 21, 22) 15 5.00% 95.67% ##

[ 22, 23) 0 0.00% 95.67%

[ 23, 24) 8 2.67% 98.33% #

[ 24, 25) 0 0.00% 98.33%

[ 25, 26) 3 1.00% 99.33%

[ 26, 27) 0 0.00% 99.33%

[ 27, 27] 2 0.67% 100.00%

Depth by leafs:

Count: 2475 Average: 3.41293 StdDev: 1.08618

Min: 1 Max: 7 Ignored: 0

----------------------------------------------

[ 1, 2) 5 0.20% 0.20%

[ 2, 3) 599 24.20% 24.40% ########

[ 3, 4) 711 28.73% 53.13% #########

[ 4, 5) 754 30.46% 83.60% ##########

[ 5, 6) 351 14.18% 97.78% #####

[ 6, 7) 45 1.82% 99.60% #

[ 7, 7] 10 0.40% 100.00%

Number of training obs by leaf:

Count: 2475 Average: 30.303 StdDev: 32.0454

Min: 5 Max: 112 Ignored: 0

----------------------------------------------

[ 5, 10) 1257 50.79% 50.79% ##########

[ 10, 15) 120 4.85% 55.64% #

[ 15, 21) 79 3.19% 58.83% #

[ 21, 26) 59 2.38% 61.21%

[ 26, 32) 88 3.56% 64.77% #

[ 32, 37) 66 2.67% 67.43% #

[ 37, 42) 74 2.99% 70.42% #

[ 42, 48) 76 3.07% 73.49% #

[ 48, 53) 53 2.14% 75.64%

[ 53, 59) 32 1.29% 76.93%

[ 59, 64) 31 1.25% 78.18%

[ 64, 69) 42 1.70% 79.88%

[ 69, 75) 56 2.26% 82.14%

[ 75, 80) 61 2.46% 84.61%

[ 80, 86) 113 4.57% 89.17% #

[ 86, 91) 105 4.24% 93.41% #

[ 91, 96) 77 3.11% 96.53% #

[ 96, 102) 63 2.55% 99.07% #

[ 102, 107) 18 0.73% 99.80%

[ 107, 112] 5 0.20% 100.00%

Attribute in nodes:

707 : bill_length_mm [NUMERICAL]

416 : bill_depth_mm [NUMERICAL]

394 : flipper_length_mm [NUMERICAL]

297 : island [CATEGORICAL]

290 : body_mass_g [NUMERICAL]

52 : sex [CATEGORICAL]

19 : year [NUMERICAL]

Attribute in nodes with depth <= 0:

151 : flipper_length_mm [NUMERICAL]

75 : bill_depth_mm [NUMERICAL]

58 : bill_length_mm [NUMERICAL]

13 : island [CATEGORICAL]

3 : body_mass_g [NUMERICAL]

Attribute in nodes with depth <= 1:

250 : flipper_length_mm [NUMERICAL]

223 : bill_length_mm [NUMERICAL]

206 : bill_depth_mm [NUMERICAL]

152 : island [CATEGORICAL]

63 : body_mass_g [NUMERICAL]

1 : year [NUMERICAL]

Attribute in nodes with depth <= 2:

437 : bill_length_mm [NUMERICAL]

329 : flipper_length_mm [NUMERICAL]

299 : bill_depth_mm [NUMERICAL]

249 : island [CATEGORICAL]

160 : body_mass_g [NUMERICAL]

9 : sex [CATEGORICAL]

3 : year [NUMERICAL]

Attribute in nodes with depth <= 3:

619 : bill_length_mm [NUMERICAL]

381 : bill_depth_mm [NUMERICAL]

370 : flipper_length_mm [NUMERICAL]

289 : island [CATEGORICAL]

249 : body_mass_g [NUMERICAL]

38 : sex [CATEGORICAL]

11 : year [NUMERICAL]

Attribute in nodes with depth <= 5:

704 : bill_length_mm [NUMERICAL]

414 : bill_depth_mm [NUMERICAL]

394 : flipper_length_mm [NUMERICAL]

297 : island [CATEGORICAL]

290 : body_mass_g [NUMERICAL]

52 : sex [CATEGORICAL]

19 : year [NUMERICAL]

Condition type in nodes:

1826 : HigherCondition

349 : ContainsBitmapCondition

Condition type in nodes with depth <= 0:

287 : HigherCondition

13 : ContainsBitmapCondition

Condition type in nodes with depth <= 1:

743 : HigherCondition

152 : ContainsBitmapCondition

Condition type in nodes with depth <= 2:

1228 : HigherCondition

258 : ContainsBitmapCondition

Condition type in nodes with depth <= 3:

1630 : HigherCondition

327 : ContainsBitmapCondition

Condition type in nodes with depth <= 5:

1821 : HigherCondition

349 : ContainsBitmapCondition

Node format: NOT_SET

Training OOB:

trees: 1, Out-of-bag evaluation: accuracy:0.947917 logloss:1.87727

trees: 11, Out-of-bag evaluation: accuracy:0.943775 logloss:0.660757

trees: 21, Out-of-bag evaluation: accuracy:0.968 logloss:0.506145

trees: 31, Out-of-bag evaluation: accuracy:0.956 logloss:0.241419

trees: 42, Out-of-bag evaluation: accuracy:0.956 logloss:0.240549

trees: 52, Out-of-bag evaluation: accuracy:0.964 logloss:0.234582

trees: 63, Out-of-bag evaluation: accuracy:0.964 logloss:0.23867

trees: 73, Out-of-bag evaluation: accuracy:0.972 logloss:0.235637

trees: 83, Out-of-bag evaluation: accuracy:0.972 logloss:0.232233

trees: 93, Out-of-bag evaluation: accuracy:0.972 logloss:0.228022

trees: 103, Out-of-bag evaluation: accuracy:0.976 logloss:0.0953604

trees: 114, Out-of-bag evaluation: accuracy:0.972 logloss:0.0925293

trees: 124, Out-of-bag evaluation: accuracy:0.976 logloss:0.0922547

trees: 134, Out-of-bag evaluation: accuracy:0.976 logloss:0.0932468

trees: 144, Out-of-bag evaluation: accuracy:0.976 logloss:0.0949779

trees: 154, Out-of-bag evaluation: accuracy:0.976 logloss:0.0958475

trees: 165, Out-of-bag evaluation: accuracy:0.976 logloss:0.096324

trees: 175, Out-of-bag evaluation: accuracy:0.976 logloss:0.0955369

trees: 186, Out-of-bag evaluation: accuracy:0.98 logloss:0.0917101

trees: 196, Out-of-bag evaluation: accuracy:0.98 logloss:0.091533

trees: 208, Out-of-bag evaluation: accuracy:0.98 logloss:0.092786

trees: 218, Out-of-bag evaluation: accuracy:0.98 logloss:0.092438

trees: 228, Out-of-bag evaluation: accuracy:0.98 logloss:0.0941152

trees: 238, Out-of-bag evaluation: accuracy:0.98 logloss:0.0955081

trees: 249, Out-of-bag evaluation: accuracy:0.98 logloss:0.0950918

trees: 259, Out-of-bag evaluation: accuracy:0.98 logloss:0.0952201

trees: 269, Out-of-bag evaluation: accuracy:0.98 logloss:0.0941477

trees: 279, Out-of-bag evaluation: accuracy:0.98 logloss:0.0946589

trees: 289, Out-of-bag evaluation: accuracy:0.98 logloss:0.0960823

trees: 299, Out-of-bag evaluation: accuracy:0.98 logloss:0.0960505

trees: 300, Out-of-bag evaluation: accuracy:0.98 logloss:0.0970684

The information in the summary is also available programmatically using the model inspector:

# The input features

model_1.make_inspector().features()

["bill_depth_mm" (1; #1), "bill_length_mm" (1; #2), "body_mass_g" (1; #3), "flipper_length_mm" (1; #4), "island" (4; #5), "sex" (4; #6), "year" (1; #7)]

# The feature importances

model_1.make_inspector().variable_importances()

{'INV_MEAN_MIN_DEPTH': [("flipper_length_mm" (1; #4), 0.43597672269512794),

("bill_length_mm" (1; #2), 0.3969174550696071),

("bill_depth_mm" (1; #1), 0.3278611602910266),

("island" (4; #5), 0.3097488348618274),

("body_mass_g" (1; #3), 0.25832019126054884),

("sex" (4; #6), 0.23411343302569165),

("year" (1; #7), 0.23184865027854668)],

'SUM_SCORE': [("flipper_length_mm" (1; #4), 24627.319999137893),

("bill_length_mm" (1; #2), 24086.33757083863),

("bill_depth_mm" (1; #1), 14073.983455628157),

("island" (4; #5), 10081.743780997582),

("body_mass_g" (1; #3), 2495.07983523421),

("sex" (4; #6), 442.45346712321043),

("year" (1; #7), 56.7849625647068)],

'NUM_AS_ROOT': [("flipper_length_mm" (1; #4), 151.0),

("bill_depth_mm" (1; #1), 75.0),

("bill_length_mm" (1; #2), 58.0),

("island" (4; #5), 13.0),

("body_mass_g" (1; #3), 3.0)],

'NUM_NODES': [("bill_length_mm" (1; #2), 707.0),

("bill_depth_mm" (1; #1), 416.0),

("flipper_length_mm" (1; #4), 394.0),

("island" (4; #5), 297.0),

("body_mass_g" (1; #3), 290.0),

("sex" (4; #6), 52.0),

("year" (1; #7), 19.0)]}

The content of the summary and the inspector depends on the learning algorithm (tfdf.keras.RandomForestModel in this case) and its hyper-parameters (e.g. compute_oob_variable_importances=True will trigger the computation of Out-of-bag variable importances for the Random Forest learner).
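Two of the structural importances reported above have a simple reading: NUM_AS_ROOT counts how often a feature is used in a root condition, and NUM_NODES counts how often it is used in any condition. In the summary, these numbers match the "Attribute in nodes with depth <= 0" and "Attribute in nodes" tables. The following sketch reproduces that counting on three toy trees; the tree layout and the `count_features` helper are assumptions made for illustration, not how TF-DF stores or computes them.

```python
from collections import Counter


def count_features(trees):
    """Counts feature usage at the root and across all non-leaf nodes."""
    num_as_root = Counter()
    num_nodes = Counter()
    for root in trees:
        if "feature" in root:
            num_as_root[root["feature"]] += 1
        stack = [root]
        while stack:
            node = stack.pop()
            if "feature" not in node:
                continue  # Leaves carry no condition.
            num_nodes[node["feature"]] += 1
            stack.extend(node["children"])
    return num_as_root, num_nodes


LEAF = {}
# Hypothetical forest of three small trees.
trees = [
    {"feature": "flipper_length_mm", "children": [
        {"feature": "bill_length_mm", "children": [LEAF, LEAF]}, LEAF]},
    {"feature": "bill_depth_mm", "children": [
        LEAF, {"feature": "bill_length_mm", "children": [LEAF, LEAF]}]},
    {"feature": "flipper_length_mm", "children": [LEAF, LEAF]},
]

num_as_root, num_nodes = count_features(trees)
print(num_as_root.most_common())
print(num_nodes.most_common())
```

A feature can rank differently under the two measures, as bill_length_mm does in the real model: it appears in the most nodes overall but is the root condition less often than flipper_length_mm.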

Model Self Evaluation

During training, TF-DF models can self-evaluate even if no validation dataset is provided to the fit() method. The exact logic depends on the model. For example, Random Forest uses out-of-bag evaluation, while Gradient Boosted Trees use an internal train-validation split.

The model self evaluation is available with the inspector's evaluation():

model_1.make_inspector().evaluation()

Evaluation(num_examples=250, accuracy=0.98, loss=0.09706837522983551, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)
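Out-of-bag evaluation relies on bootstrapping: each tree is trained on a sample drawn with replacement from the training set, so some examples are never seen by that tree and can be used to evaluate it. The sketch below simulates only the sampling step, assuming one draw per training example for each tree; it is a conceptual illustration, not TF-DF's implementation, and `bootstrap_oob` is a hypothetical helper.

```python
import random


def bootstrap_oob(num_examples, rng):
    """Draws a bootstrap sample and returns the indices never drawn."""
    drawn = {rng.randrange(num_examples) for _ in range(num_examples)}
    return [index for index in range(num_examples) if index not in drawn]


rng = random.Random(0)
num_examples = 250  # size of the training set in this tutorial's run
num_trees = 300

# Fraction of examples left out of the bag, one value per simulated tree.
oob_fractions = [
    len(bootstrap_oob(num_examples, rng)) / num_examples
    for _ in range(num_trees)
]
mean_oob_fraction = sum(oob_fractions) / len(oob_fractions)
print(f"mean out-of-bag fraction: {mean_oob_fraction:.3f}")
```

Roughly a third of the examples are left out per tree. This is consistent with the first entry of the training logs, where a single tree is evaluated on 96 of the 250 examples, and it explains why the evaluation covers all 250 examples once enough trees have been trained.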

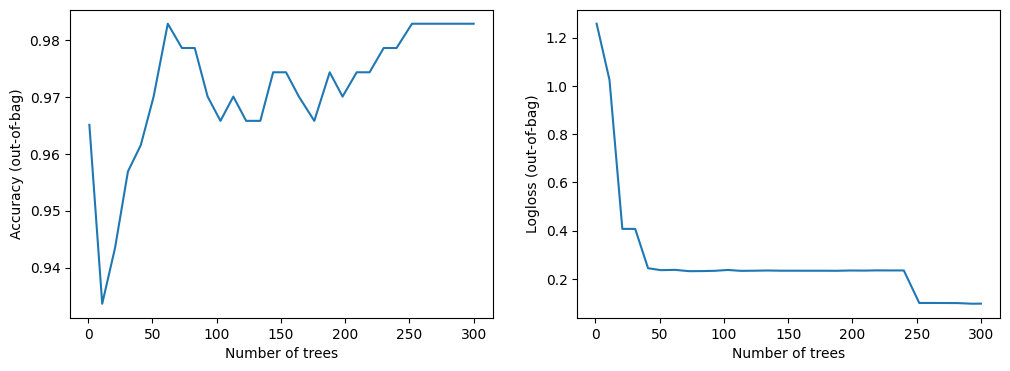

Plotting the training logs

The training logs show the quality of the model (e.g. accuracy evaluated on the out-of-bag or validation dataset) according to the number of trees in the model. These logs are helpful to study the balance between model size and model quality.

The logs are available in multiple ways:

1. Displayed during training if fit() is wrapped in with sys_pipes(): (see example above).

2. At the end of the model summary, i.e. model.summary() (see example above).

3. Programmatically, using the model inspector, i.e. model.make_inspector().training_logs().

4. Using TensorBoard.

Let's try the options 2 and 3:

%set_cell_height 150

model_1.make_inspector().training_logs()

<IPython.core.display.Javascript object> [TrainLog(num_trees=1, evaluation=Evaluation(num_examples=96, accuracy=0.9479166666666666, loss=1.8772735198338826, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=11, evaluation=Evaluation(num_examples=249, accuracy=0.9437751004016064, loss=0.6607572125143795, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=21, evaluation=Evaluation(num_examples=250, accuracy=0.968, loss=0.506145211815834, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=31, evaluation=Evaluation(num_examples=250, accuracy=0.956, loss=0.24141885021328927, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=42, evaluation=Evaluation(num_examples=250, accuracy=0.956, loss=0.2405486009567976, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=52, evaluation=Evaluation(num_examples=250, accuracy=0.964, loss=0.23458186860382557, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=63, evaluation=Evaluation(num_examples=250, accuracy=0.964, loss=0.23867048719525338, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=73, evaluation=Evaluation(num_examples=250, accuracy=0.972, loss=0.2356372931599617, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=83, evaluation=Evaluation(num_examples=250, accuracy=0.972, loss=0.2322330457419157, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=93, evaluation=Evaluation(num_examples=250, accuracy=0.972, loss=0.22802215158939362, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=103, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.0953604139611125, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=114, evaluation=Evaluation(num_examples=250, accuracy=0.972, loss=0.09252933283150196, rmse=None, ndcg=None, aucs=None, auuc=None, 
qini=None)), TrainLog(num_trees=124, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.0922547305226326, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=134, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.09324676994979382, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=144, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.09497785523533821, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=154, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.09584752155095339, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=165, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.09632397620007396, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=175, evaluation=Evaluation(num_examples=250, accuracy=0.976, loss=0.09553687626868486, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=186, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09171005741134286, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=196, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09153303373605012, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=208, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09278595473244786, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=218, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09243803822994232, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=228, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09411523111537098, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=238, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.0955081114768982, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=249, 
evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09509177429974079, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=259, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.0952201502174139, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=269, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.0941477091498673, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=279, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09465892957895994, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=289, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09608231737092138, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=299, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09605051735416055, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None)), TrainLog(num_trees=300, evaluation=Evaluation(num_examples=250, accuracy=0.98, loss=0.09706837522983551, rmse=None, ndcg=None, aucs=None, auuc=None, qini=None))]

Let's plot it:

import matplotlib.pyplot as plt

logs = model_1.make_inspector().training_logs()

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot([log.num_trees for log in logs], [log.evaluation.accuracy for log in logs])

plt.xlabel("Number of trees")

plt.ylabel("Accuracy (out-of-bag)")

plt.subplot(1, 2, 2)

plt.plot([log.num_trees for log in logs], [log.evaluation.loss for log in logs])

plt.xlabel("Number of trees")

plt.ylabel("Logloss (out-of-bag)")

plt.show()

This dataset is small. You can see the model converging almost immediately.

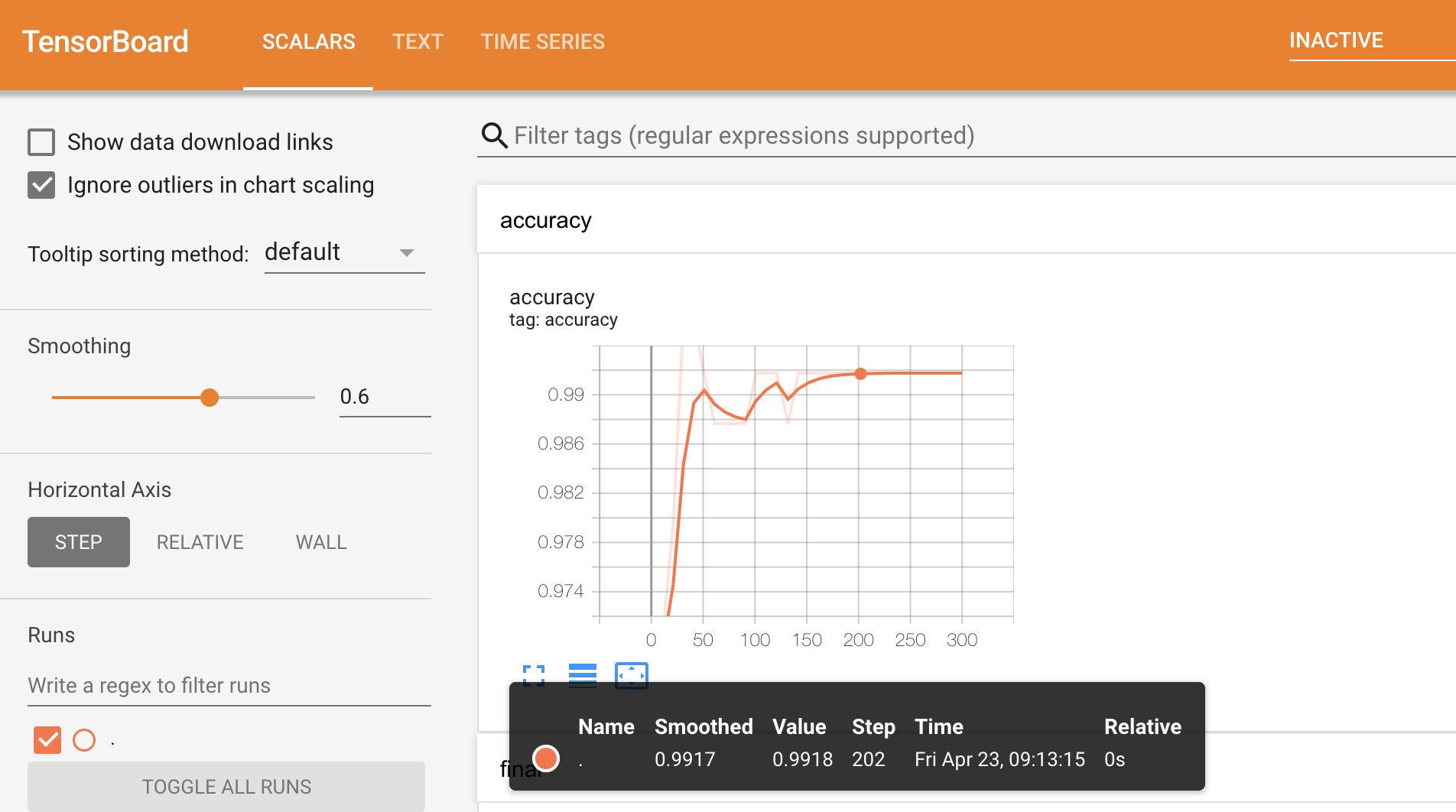
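The same logs can also answer how many trees are needed before the out-of-bag accuracy reaches its final level. The sketch below uses a subset of the (number of trees, accuracy) pairs printed in the training logs above; `trees_needed` and the tolerance parameter are hypothetical, and the function returns the first log entry that reaches the target rather than checking that the accuracy stays there.

```python
def trees_needed(logs, tolerance=0.0):
    """First logged tree count whose accuracy is within tolerance of the final one."""
    final_accuracy = logs[-1][1]
    for num_trees, accuracy in logs:
        if accuracy >= final_accuracy - tolerance:
            return num_trees
    return logs[-1][0]


# Subset of the out-of-bag accuracies reported in the training logs.
logs = [
    (1, 0.947917), (11, 0.943775), (21, 0.968), (31, 0.956),
    (73, 0.972), (103, 0.976), (114, 0.972), (124, 0.976),
    (186, 0.98), (300, 0.98),
]

print(trees_needed(logs))
print(trees_needed(logs, tolerance=0.005))
```

With the inspector, the pairs could be built as `[(log.num_trees, log.evaluation.accuracy) for log in logs]`, using the same attributes as the plotting code.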

Let's use TensorBoard:

# This cell starts TensorBoard, which can be slow.

# Load the TensorBoard notebook extension

%load_ext tensorboard

# Google internal version

# %load_ext google3.learning.brain.tensorboard.notebook.extension

# Clear existing results (if any)

!rm -fr "/tmp/tensorboard_logs"

# Export the meta-data to tensorboard.

model_1.make_inspector().export_to_tensorboard("/tmp/tensorboard_logs")

# docs_infra: no_execute

# Start a tensorboard instance.

%tensorboard --logdir "/tmp/tensorboard_logs"

Re-train the model with a different learning algorithm

The learning algorithm is defined by the model class. For example, tfdf.keras.RandomForestModel() trains a Random Forest, while tfdf.keras.GradientBoostedTreesModel() trains Gradient Boosted Decision Trees.

The learning algorithms are listed by calling tfdf.keras.get_all_models() or in the learner list.

tfdf.keras.get_all_models()

[tensorflow_decision_forests.keras.RandomForestModel, tensorflow_decision_forests.keras.GradientBoostedTreesModel, tensorflow_decision_forests.keras.CartModel, tensorflow_decision_forests.keras.DistributedGradientBoostedTreesModel]

Descriptions of the learning algorithms and their hyper-parameters are also available in the API reference and the built-in help:

# help works anywhere.

help(tfdf.keras.RandomForestModel)

# ? only works in ipython or notebooks, it usually opens on a separate panel.

tfdf.keras.RandomForestModel?

Help on class RandomForestModel in module tensorflow_decision_forests.keras:

class RandomForestModel(tensorflow_decision_forests.keras.wrappers.RandomForestModel)

| RandomForestModel(*args, **kwargs)

|

| Method resolution order:

| RandomForestModel

| tensorflow_decision_forests.keras.wrappers.RandomForestModel

| tensorflow_decision_forests.keras.core.CoreModel

| tensorflow_decision_forests.keras.core_inference.InferenceCoreModel

| tf_keras.src.engine.training.Model

| tf_keras.src.engine.base_layer.Layer

| tensorflow.python.module.module.Module

| tensorflow.python.trackable.autotrackable.AutoTrackable

| tensorflow.python.trackable.base.Trackable

| tf_keras.src.utils.version_utils.LayerVersionSelector

| tf_keras.src.utils.version_utils.ModelVersionSelector

| builtins.object

|

| Methods inherited from tensorflow_decision_forests.keras.wrappers.RandomForestModel:

|

| __init__(self, task: Optional[ForwardRef('abstract_model_pb2.Task')] = 1, features: Optional[List[tensorflow_decision_forests.keras.core.FeatureUsage]] = None, exclude_non_specified_features: Optional[bool] = False, preprocessing: Optional[ForwardRef('tf_keras.models.Functional')] = None, postprocessing: Optional[ForwardRef('tf_keras.models.Functional')] = None, training_preprocessing: Optional[ForwardRef('tf_keras.models.Functional')] = None, ranking_group: Optional[str] = None, uplift_treatment: Optional[str] = None, temp_directory: Optional[str] = None, verbose: int = 1, hyperparameter_template: Optional[str] = None, advanced_arguments: Optional[tensorflow_decision_forests.keras.core_inference.AdvancedArguments] = None, num_threads: Optional[int] = None, name: Optional[str] = None, max_vocab_count: Optional[int] = 2000, try_resume_training: Optional[bool] = True, check_dataset: Optional[bool] = True, tuner: Optional[tensorflow_decision_forests.component.tuner.tuner.Tuner] = None, discretize_numerical_features: bool = False, num_discretized_numerical_bins: int = 255, multitask: Optional[List[tensorflow_decision_forests.keras.core_inference.MultiTaskItem]] = None, adapt_bootstrap_size_ratio_for_maximum_training_duration: Optional[bool] = False, allow_na_conditions: Optional[bool] = False, bootstrap_size_ratio: Optional[float] = 1.0, bootstrap_training_dataset: Optional[bool] = True, categorical_algorithm: Optional[str] = 'CART', categorical_set_split_greedy_sampling: Optional[float] = 0.1, categorical_set_split_max_num_items: Optional[int] = -1, categorical_set_split_min_item_frequency: Optional[int] = 1, compute_oob_performances: Optional[bool] = True, compute_oob_variable_importances: Optional[bool] = False, growing_strategy: Optional[str] = 'LOCAL', honest: Optional[bool] = False, honest_fixed_separation: Optional[bool] = False, honest_ratio_leaf_examples: Optional[float] = 0.5, in_split_min_examples_check: Optional[bool] = True, 
keep_non_leaf_label_distribution: Optional[bool] = True, max_depth: Optional[int] = 16, max_num_nodes: Optional[int] = None, maximum_model_size_in_memory_in_bytes: Optional[float] = -1.0, maximum_training_duration_seconds: Optional[float] = -1.0, mhld_oblique_max_num_attributes: Optional[int] = None, mhld_oblique_sample_attributes: Optional[bool] = None, min_examples: Optional[int] = 5, missing_value_policy: Optional[str] = 'GLOBAL_IMPUTATION', num_candidate_attributes: Optional[int] = 0, num_candidate_attributes_ratio: Optional[float] = -1.0, num_oob_variable_importances_permutations: Optional[int] = 1, num_trees: Optional[int] = 300, numerical_vector_sequence_num_examples: Optional[int] = 1000, numerical_vector_sequence_num_random_anchors: Optional[int] = 100, pure_serving_model: Optional[bool] = False, random_seed: Optional[int] = 123456, sampling_with_replacement: Optional[bool] = True, sorting_strategy: Optional[str] = 'PRESORT', sparse_oblique_max_num_features: Optional[int] = None, sparse_oblique_max_num_projections: Optional[int] = None, sparse_oblique_normalization: Optional[str] = None, sparse_oblique_num_projections_exponent: Optional[float] = None, sparse_oblique_projection_density_factor: Optional[float] = None, sparse_oblique_weights: Optional[str] = None, sparse_oblique_weights_integer_maximum: Optional[int] = None, sparse_oblique_weights_integer_minimum: Optional[int] = None, sparse_oblique_weights_power_of_two_max_exponent: Optional[int] = None, sparse_oblique_weights_power_of_two_min_exponent: Optional[int] = None, split_axis: Optional[str] = 'AXIS_ALIGNED', uplift_min_examples_in_treatment: Optional[int] = 5, uplift_split_score: Optional[str] = 'KULLBACK_LEIBLER', winner_take_all: Optional[bool] = True, explicit_args: Optional[Set[str]] = None)

|

| ----------------------------------------------------------------------

| Static methods inherited from tensorflow_decision_forests.keras.wrappers.RandomForestModel:

|

| capabilities() -> yggdrasil_decision_forests.learner.abstract_learner_pb2.LearnerCapabilities

| Lists the capabilities of the learning algorithm.

|

| predefined_hyperparameters() -> List[tensorflow_decision_forests.keras.core.HyperParameterTemplate]

| Returns a better than default set of hyper-parameters.

|

| They can be used directly with the `hyperparameter_template` argument of the

| model constructor.

|

| These hyper-parameters outperform the default hyper-parameters (either

| generally or in specific scenarios). Like default hyper-parameters, existing

| pre-defined hyper-parameters cannot change.

|

| ----------------------------------------------------------------------

| Methods inherited from tensorflow_decision_forests.keras.core.CoreModel:

|

| collect_data_step(self, data, is_training_example)

| Collect examples e.g. training or validation.

|

| fit(self, x=None, y=None, callbacks=None, verbose: Optional[Any] = None, validation_steps: Optional[int] = None, validation_data: Optional[Any] = None, sample_weight: Optional[Any] = None, steps_per_epoch: Optional[Any] = None, class_weight: Optional[Any] = None, **kwargs) -> tf_keras.src.callbacks.History

| Trains the model.

|

| Local training

| ==============

|

| It is recommended to use a Pandas Dataframe dataset and to convert it to

| a TensorFlow dataset with `pd_dataframe_to_tf_dataset()`:

| ```python

| pd_dataset = pandas.Dataframe(...)

| tf_dataset = pd_dataframe_to_tf_dataset(dataset, label="my_label")

| model.fit(pd_dataset)

| ```

|

| The following dataset formats are supported:

|

| 1. "x" is a `tf.data.Dataset` containing a tuple "(features, labels)".

| "features" can be a dictionary a tensor, a list of tensors or a

| dictionary of tensors (recommended). "labels" is a tensor.

|

| 2. "x" is a tensor, list of tensors or dictionary of tensors containing

| the input features. "y" is a tensor.

|

| 3. "x" is a numpy-array, list of numpy-arrays or dictionary of

| numpy-arrays containing the input features. "y" is a numpy-array.

|

| IMPORTANT: This model trains on the entire dataset at once. This has the

| following consequences:

|

| 1. The dataset need to be read exactly once. If you use a TensorFlow

| dataset, make sure NOT to add a "repeat" operation.

| 2. The algorithm does not benefit from shuffling the dataset. If you use a

| TensorFlow dataset, make sure NOT to add a "shuffle" operation.

| 3. The dataset needs to be batched (i.e. with a "batch" operation).

| However, the number of elements per batch has not impact on the model.

| Generally, it is recommended to use batches as large as possible as its

| speeds-up reading the dataset in TensorFlow.

|

| Input features do not need to be normalized (e.g. dividing numerical values

| by the variance) or indexed (e.g. replacing categorical string values by

| an integer). Additionally, missing values can be consumed natively.

|

| Distributed training

| ====================

|

| Some of the learning algorithms will support distributed training with the

| ParameterServerStrategy.

|

| In this case, the dataset is read asynchronously in between the workers. The

| distribution of the training depends on the learning algorithm.

|

| Like for non-distributed training, the dataset should be read exactly once.

| The simplest solution is to divide the dataset into different files (i.e.

| shards) and have each of the worker read a non overlapping subset of shards.

|

| IMPORTANT: The training dataset should not be infinite i.e. the training

| dataset should not contain any repeat operation.

|

| Currently (to be changed), the validation dataset (if provided) is simply

| feed to the `model.evaluate()` method. Therefore, it should satisfy Keras'

| evaluate API. Notably, for distributed training, the validation dataset

| should be infinite (i.e. have a repeat operation).

|

| See https://www.tensorflow.org/decision_forests/distributed_training for

| more details and examples.

|

| Here is a single example of distributed training using PSS for both dataset

| reading and training distribution.

|

| ```python

| def dataset_fn(context, paths, training=True):

| ds_path = tf.data.Dataset.from_tensor_slices(paths)

|

|

| if context is not None:

| # Train on at least 2 workers.

| current_worker = tfdf.keras.get_worker_idx_and_num_workers(context)

| assert current_worker.num_workers > 2

|

| # Split the dataset's examples among the workers.

| ds_path = ds_path.shard(

| num_shards=current_worker.num_workers,

| index=current_worker.worker_idx)

|

| def read_csv_file(path):

| numerical = tf.constant([math.nan], dtype=tf.float32)

| categorical_string = tf.constant([""], dtype=tf.string)

| csv_columns = [

| numerical, # age

| categorical_string, # workclass

| numerical, # fnlwgt

| ...

| ]

| column_names = [

| "age", "workclass", "fnlwgt", ...

| ]

| label_name = "label"

| return tf.data.experimental.CsvDataset(path, csv_columns, header=True)

|

| ds_columns = ds_path.interleave(read_csv_file)

|

| def map_features(*columns):

| assert len(column_names) == len(columns)

| features = {column_names[i]: col for i, col in enumerate(columns)}

| label = label_table.lookup(features.pop(label_name))

| return features, label

|

| ds_dataset = ds_columns.map(map_features)

| if not training:

| dataset = dataset.repeat(None)

| ds_dataset = ds_dataset.batch(batch_size)

| return ds_dataset

|

| strategy = tf.distribute.experimental.ParameterServerStrategy(...)

| sharded_train_paths = [list of dataset files]

| with strategy.scope():

| model = DistributedGradientBoostedTreesModel()

| train_dataset = strategy.distribute_datasets_from_function(

| lambda context: dataset_fn(context, sharded_train_paths))

|

| test_dataset = strategy.distribute_datasets_from_function(

| lambda context: dataset_fn(context, sharded_test_paths))

|

| model.fit(sharded_train_paths)

| evaluation = model.evaluate(test_dataset, steps=num_test_examples //

| batch_size)

| ```

|

| Args:

| x: Training dataset (See details above for the supported formats).

| y: Label of the training dataset. Only used if "x" does not contains the

| labels.

| callbacks: Callbacks triggered during the training. The training runs in a

| single epoch, itself run in a single step. Therefore, callback logic can

| be called equivalently before/after the fit function.

| verbose: Verbosity mode. 0 = silent, 1 = small details, 2 = full details.

| validation_steps: Number of steps in the evaluation dataset when

| evaluating the trained model with `model.evaluate()`. If not specified,

| evaluates the model on the entire dataset (generally recommended; not

| yet supported for distributed datasets).

| validation_data: Validation dataset. If specified, the learner might use

| this dataset to help training e.g. early stopping.

| sample_weight: Training weights. Note: training weights can also be

| provided as the third output in a `tf.data.Dataset` e.g. (features,

| label, weights).

| steps_per_epoch: [Parameter will be removed] Number of training batch to

| load before training the model. Currently, only supported for

| distributed training.

| class_weight: For binary classification only. Mapping class indices

| (integers) to a weight (float) value. Only available for non-Distributed

| training. For maximum compatibility, feed example weights through the

| tf.data.Dataset or using the `weight` argument of

| `pd_dataframe_to_tf_dataset`.

| **kwargs: Extra arguments passed to the core keras model's fit. Note that

| not all keras' model fit arguments are supported.

|

| Returns:

| A `History` object. Its `History.history` attribute is not yet

| implemented for decision forests algorithms, and will return empty.

| All other fields are filled as usual for `Keras.Mode.fit()`.

|

| fit_on_dataset_path(self, train_path: str, label_key: Optional[str] = None, weight_key: Optional[str] = None, valid_path: Optional[str] = None, dataset_format: Optional[str] = 'csv', max_num_scanned_rows_to_accumulate_statistics: Optional[int] = 100000, try_resume_training: Optional[bool] = True, input_model_signature_fn: Optional[Callable[[tensorflow_decision_forests.component.inspector.inspector.AbstractInspector], Any]] = <function build_default_input_model_signature at 0x7f853ea9aca0>, num_io_threads: int = 10)

| Trains the model on a dataset stored on disk.

|

| This solution is generally more efficient and easier than loading the

| dataset with a `tf.Dataset` both for local and distributed training.

|

| Usage example:

|

| # Local training

| ```python

| model = keras.GradientBoostedTreesModel()

| model.fit_on_dataset_path(

| train_path="/path/to/dataset.csv",

| label_key="label",

| dataset_format="csv")

| model.save("/model/path")

| ```

|

| # Distributed training

| ```python

| with tf.distribute.experimental.ParameterServerStrategy(...).scope():

| model = model = keras.DistributedGradientBoostedTreesModel()

| model.fit_on_dataset_path(

| train_path="/path/to/dataset@10",

| label_key="label",

| dataset_format="tfrecord+tfe")

| model.save("/model/path")

| ```

|

| Args:

| train_path: Path to the training dataset. Supports comma separated files,

| shard and glob notation.

| label_key: Name of the label column.

| weight_key: Name of the weighing column.

| valid_path: Path to the validation dataset. If not provided, or if the

| learning algorithm does not supports/needs a validation dataset,

| `valid_path` is ignored.

| dataset_format: Format of the dataset. Should be one of the registered

| dataset format (see [User

| Manual](https://github.com/google/yggdrasil-decision-forests/blob/main/documentation/rtd/cli_user_manual#dataset-path-and-format)

| for more details). The format "csv" is always available but it is

| generally only suited for small datasets.

| max_num_scanned_rows_to_accumulate_statistics: Maximum number of examples

| to scan to determine the statistics of the features (i.e. the dataspec,

| e.g. mean value, dictionaries). (Currently) the "first" examples of the

| dataset are scanned (e.g. the first examples of the dataset is a single

| file). Therefore, it is important that the sampled dataset is relatively

| uniformly sampled, notably the scanned examples should contains all the

| possible categorical values (otherwise the not seen value will be

| treated as out-of-vocabulary). If set to None, the entire dataset is

| scanned. This parameter has no effect if the dataset is stored in a

| format that already contains those values.

| try_resume_training: If true, tries to resume training from the model

| checkpoint stored in the `temp_directory` directory. If `temp_directory`

| does not contain any model checkpoint, start the training from the

| start. Works in the following three situations: (1) The training was

| interrupted by the user (e.g. ctrl+c). (2) the training job was

| interrupted (e.g. rescheduling), ond (3) the hyper-parameter of the

| model were changed such that an initially completed training is now

| incomplete (e.g. increasing the number of trees).

| input_model_signature_fn: A lambda that returns the

| (Dense,Sparse,Ragged)TensorSpec (or structure of TensorSpec e.g.

| dictionary, list) corresponding to input signature of the model. If not

| specified, the input model signature is created by

| `build_default_input_model_signature`. For example, specify

| `input_model_signature_fn` if an numerical input feature (which is

| consumed as DenseTensorSpec(float32) by default) will be feed

| differently (e.g. RaggedTensor(int64)).

| num_io_threads: Number of threads to use for IO operations e.g. reading a

| dataset from disk. Increasing this value can speed-up IO operations when

| IO operations are either latency or cpu bounded.

|

| Returns:

| A `History` object. Its `History.history` attribute is not yet

| implemented for decision forests algorithms, and will return empty.

| All other fields are filled as usual for `Keras.Mode.fit()`.

|

| load_weights(self, *args, **kwargs)

| No-op for TensorFlow Decision Forests models.

|

| `load_weights` is not supported by TensorFlow Decision Forests models.

| To save and restore a model, use the SavedModel API i.e.

| `model.save(...)` and `tf_keras.models.load_model(...)`. To resume the

| training of an existing model, create the model with

| `try_resume_training=True` (default value) and with a similar

| `temp_directory` argument. See documentation of `try_resume_training`

| for more details.

|

| Args:

| *args: Passed through to base `keras.Model` implemenation.

| **kwargs: Passed through to base `keras.Model` implemenation.

|

| save(self, filepath: str, overwrite: Optional[bool] = True, **kwargs)

| Saves the model as a TensorFlow SavedModel.

|

| The exported SavedModel contains a standalone Yggdrasil Decision Forests

| model in the "assets" sub-directory. The Yggdrasil model can be used

| directly using the Yggdrasil API. However, this model does not contain the

| "preprocessing" layer (if any).

|

| Args:

| filepath: Path to the output model.

| overwrite: If true, override an already existing model. If false, raise an

| error if a model already exist.

| **kwargs: Arguments passed to the core keras model's save.

|

| support_distributed_training(self)

|

| train_on_batch(self, *args, **kwargs)

| No supported for Tensorflow Decision Forests models.

|

| Decision forests are not trained in batches the same way neural networks

| are. To avoid confusion, train_on_batch is disabled.

|

| Args:

| *args: Ignored

| **kwargs: Ignored.

|

| train_step(self, data)

| Collects training examples.

|

| valid_step(self, data)

| Collects validation examples.

|

| ----------------------------------------------------------------------

| Readonly properties inherited from tensorflow_decision_forests.keras.core.CoreModel:

|

| exclude_non_specified_features

| If true, only use the features specified in "features".

|

| learner

| Name of the learning algorithm used to train the model.

|

| learner_params

| Gets the dictionary of hyper-parameters passed in the model constructor.

|

| Changing this dictionary will impact the training.

|

| num_threads

| Number of threads used to train the model.

|

| num_training_examples

| Number of training examples.

|

| num_validation_examples

| Number of validation examples.

|

| training_model_id

| Identifier of the model.

|

| ----------------------------------------------------------------------

| Methods inherited from tensorflow_decision_forests.keras.core_inference.InferenceCoreModel:

|

| call(self, inputs, training=False)

| Inference of the model.

|

| This method is used for prediction and evaluation of a trained model.

|

| Args:

| inputs: Input tensors.

| training: Is the model being trained. Always False.

|

| Returns:

| Model predictions.

|

| call_get_leaves(self, inputs)

| Computes the index of the active leaf in each tree.

|

| The active leaf is the leave that that receive the example during inference.

|

| The returned value "leaves[i,j]" is the index of the active leave for the

| i-th example and the j-th tree. Leaves are indexed by depth first

| exploration with the negative child visited before the positive one

| (similarly as "iterate_on_nodes()" iteration). Leaf indices are also

| available with LeafNode.leaf_idx.

|

| Args:

| inputs: Input tensors. Same signature as the model's "call(inputs)".

|

| Returns:

| Index of the active leaf for each tree in the model.

|

| compile(self, metrics=None, weighted_metrics=None, **kwargs)

| Configure the model for training.

|

| Unlike for most Keras model, calling "compile" is optional before calling

| "fit".

|

| Args:

| metrics: List of metrics to be evaluated by the model during training and

| testing.

| weighted_metrics: List of metrics to be evaluated and weighted by

| `sample_weight` or `class_weight` during training and testing.

| **kwargs: Other arguments passed to compile.

|

| Raises:

| ValueError: Invalid arguments.

|

| get_config(self)

| Not supported by TF-DF, returning empty directory to avoid warnings.

|

| make_inspector(self, index: int = 0) -> tensorflow_decision_forests.component.inspector.inspector.AbstractInspector

| Creates an inspector to access the internal model structure.

|

| Usage example:

|

| ```python

| inspector = model.make_inspector()

| print(inspector.num_trees())

| print(inspector.variable_importances())

| ```

|

| Args:

| index: Index of the sub-model. Only used for multitask models.

|

| Returns:

| A model inspector.

|

| make_predict_function(self)

| Prediction of the model (!= evaluation).

|

| make_test_function(self)

| Predictions for evaluation.

|

| predict_get_leaves(self, x)

| Gets the index of the active leaf of each tree.

|

| The active leaf is the leave that that receive the example during inference.

|

| The returned value "leaves[i,j]" is the index of the active leave for the

| i-th example and the j-th tree. Leaves are indexed by depth first

| exploration with the negative child visited before the positive one

| (similarly as "iterate_on_nodes()" iteration). Leaf indices are also

| available with LeafNode.leaf_idx.

|

| Args:

| x: Input samples as a tf.data.Dataset.

|

| Returns:

| Index of the active leaf for each tree in the model.

|

| ranking_group(self) -> Optional[str]

|

| summary(self, line_length=None, positions=None, print_fn=None)

| Shows information about the model.

|

| uplift_treatment(self) -> Optional[str]

|

| yggdrasil_model_path_tensor(self, multitask_model_index: int = 0) -> Optional[tensorflow.python.framework.tensor.Tensor]

| Gets the path to yggdrasil model, if available.

|

| The effective path can be obtained with:

|

| ```python

| yggdrasil_model_path_tensor().numpy().decode("utf-8")

| ```

|

| Args:

| multitask_model_index: Index of the sub-model. Only used for multitask

| models.

|

| Returns:

| Path to the Yggdrasil model.

|

| yggdrasil_model_prefix(self, index: int = 0) -> str

| Gets the prefix of the internal yggdrasil model.

|

| ----------------------------------------------------------------------

| Readonly properties inherited from tensorflow_decision_forests.keras.core_inference.InferenceCoreModel:

|

| multitask

| Tasks to solve.

|

| task

| Task to solve (e.g. CLASSIFICATION, REGRESSION, RANKING).

|

| ----------------------------------------------------------------------

| Methods inherited from tf_keras.src.engine.training.Model:

|

| __call__(self, *args, **kwargs)

|

| __copy__(self)

|

| __deepcopy__(self, memo)

|

| __reduce__(self)

| Helper for pickle.

|

| __setattr__(self, name, value)

| Support self.foo = trackable syntax.

|

| build(self, input_shape)

| Builds the model based on input shapes received.

|

| This is to be used for subclassed models, which do not know at

| instantiation time what their inputs look like.

|

| This method only exists for users who want to call `model.build()` in a

| standalone way (as a substitute for calling the model on real data to

| build it). It will never be called by the framework (and thus it will

| never throw unexpected errors in an unrelated workflow).

|

| Args:

| input_shape: Single tuple, `TensorShape` instance, or list/dict of

| shapes, where shapes are tuples, integers, or `TensorShape`

| instances.

|

| Raises:

| ValueError:

| 1. In case of invalid user-provided data (not of type tuple,

| list, `TensorShape`, or dict).

| 2. If the model requires call arguments that are agnostic

| to the input shapes (positional or keyword arg in call

| signature).

| 3. If not all layers were properly built.

| 4. If float type inputs are not supported within the layers.

|

| In each of these cases, the user should build their model by calling

| it on real tensor data.

|

| compile_from_config(self, config)

| Compiles the model with the information given in config.

|

| This method uses the information in the config (optimizer, loss,

| metrics, etc.) to compile the model.

|

| Args:

| config: Dict containing information for compiling the model.

|

| compute_loss(self, x=None, y=None, y_pred=None, sample_weight=None)

| Compute the total loss, validate it, and return it.

|

| Subclasses can optionally override this method to provide custom loss

| computation logic.

|

| Example:

| ```python

| class MyModel(tf.keras.Model):

|

| def __init__(self, *args, **kwargs):

| super(MyModel, self).__init__(*args, **kwargs)

| self.loss_tracker = tf.keras.metrics.Mean(name='loss')

|

| def compute_loss(self, x, y, y_pred, sample_weight):

| loss = tf.reduce_mean(tf.math.squared_difference(y_pred, y))

| loss += tf.add_n(self.losses)

| self.loss_tracker.update_state(loss)

| return loss

|

| def reset_metrics(self):

| self.loss_tracker.reset_states()

|

| @property

| def metrics(self):

| return [self.loss_tracker]

|

| tensors = tf.random.uniform((10, 10)), tf.random.uniform((10,))

| dataset = tf.data.Dataset.from_tensor_slices(tensors).repeat().batch(1)

|

| inputs = tf.keras.layers.Input(shape=(10,), name='my_input')

| outputs = tf.keras.layers.Dense(10)(inputs)

| model = MyModel(inputs, outputs)

| model.add_loss(tf.reduce_sum(outputs))

|

| optimizer = tf.keras.optimizers.SGD()

| model.compile(optimizer, loss='mse', steps_per_execution=10)

| model.fit(dataset, epochs=2, steps_per_epoch=10)

| print('My custom loss: ', model.loss_tracker.result().numpy())

| ```

|

| Args:

| x: Input data.

| y: Target data.

| y_pred: Predictions returned by the model (output of `model(x)`)

| sample_weight: Sample weights for weighting the loss function.

|

| Returns:

| The total loss as a `tf.Tensor`, or `None` if no loss results (which

| is the case when called by `Model.test_step`).

|

| compute_metrics(self, x, y, y_pred, sample_weight)

| Update metric states and collect all metrics to be returned.

|

| Subclasses can optionally override this method to provide custom metric

| updating and collection logic.

|

| Example:

| ```python

| class MyModel(tf.keras.Sequential):

|

| def compute_metrics(self, x, y, y_pred, sample_weight):

|

| # This super call updates `self.compiled_metrics` and returns

| # results for all metrics listed in `self.metrics`.

| metric_results = super(MyModel, self).compute_metrics(

| x, y, y_pred, sample_weight)

|

| # Note that `self.custom_metric` is not listed in `self.metrics`.

| self.custom_metric.update_state(x, y, y_pred, sample_weight)

| metric_results['custom_metric_name'] = self.custom_metric.result()

| return metric_results

| ```

|

| Args:

| x: Input data.

| y: Target data.

| y_pred: Predictions returned by the model (output of `model.call(x)`)

| sample_weight: Sample weights for weighting the loss function.

|

| Returns:

| A `dict` containing values that will be passed to

| `tf.keras.callbacks.CallbackList.on_train_batch_end()`. Typically, the

| values of the metrics listed in `self.metrics` are returned. Example:

| `{'loss': 0.2, 'accuracy': 0.7}`.

|

| evaluate(self, x=None, y=None, batch_size=None, verbose='auto', sample_weight=None, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False, return_dict=False, **kwargs)

| Returns the loss value & metrics values for the model in test mode.

|

| Computation is done in batches (see the `batch_size` arg.)

|

| Args:

| x: Input data. It could be:

| - A Numpy array (or array-like), or a list of arrays

| (in case the model has multiple inputs).

| - A TensorFlow tensor, or a list of tensors

| (in case the model has multiple inputs).

| - A dict mapping input names to the corresponding array/tensors,

| if the model has named inputs.

| - A `tf.data` dataset. Should return a tuple

| of either `(inputs, targets)` or

| `(inputs, targets, sample_weights)`.

| - A generator or `keras.utils.Sequence` returning `(inputs,

| targets)` or `(inputs, targets, sample_weights)`.

| A more detailed description of unpacking behavior for iterator

| types (Dataset, generator, Sequence) is given in the `Unpacking

| behavior for iterator-like inputs` section of `Model.fit`.

| y: Target data. Like the input data `x`, it could be either Numpy

| array(s) or TensorFlow tensor(s). It should be consistent with `x`

| (you cannot have Numpy inputs and tensor targets, or inversely).

| If `x` is a dataset, generator or `keras.utils.Sequence` instance,

| `y` should not be specified (since targets will be obtained from

| the iterator/dataset).

| batch_size: Integer or `None`. Number of samples per batch of

| computation. If unspecified, `batch_size` will default to 32. Do

| not specify the `batch_size` if your data is in the form of a

| dataset, generators, or `keras.utils.Sequence` instances (since

| they generate batches).

| verbose: `"auto"`, 0, 1, or 2. Verbosity mode.

| 0 = silent, 1 = progress bar, 2 = single line.

| `"auto"` becomes 1 for most cases, and to 2 when used with

| `ParameterServerStrategy`. Note that the progress bar is not

| particularly useful when logged to a file, so `verbose=2` is

| recommended when not running interactively (e.g. in a production

| environment). Defaults to 'auto'.

| sample_weight: Optional Numpy array of weights for the test samples,

| used for weighting the loss function. You can either pass a flat

| (1D) Numpy array with the same length as the input samples

| (1:1 mapping between weights and samples), or in the case of

| temporal data, you can pass a 2D array with shape `(samples,

| sequence_length)`, to apply a different weight to every

| timestep of every sample. This argument is not supported when

| `x` is a dataset, instead pass sample weights as the third

| element of `x`.

| steps: Integer or `None`. Total number of steps (batches of samples)

| before declaring the evaluation round finished. Ignored with the

| default value of `None`. If x is a `tf.data` dataset and `steps`

| is None, 'evaluate' will run until the dataset is exhausted. This

| argument is not supported with array inputs.

| callbacks: List of `keras.callbacks.Callback` instances. List of

| callbacks to apply during evaluation. See

| [callbacks](https://www.tensorflow.org/api_docs/python/tf/tf_keras/callbacks).

| max_queue_size: Integer. Used for generator or

| `keras.utils.Sequence` input only. Maximum size for the generator

| queue. If unspecified, `max_queue_size` will default to 10.

| workers: Integer. Used for generator or `keras.utils.Sequence` input

| only. Maximum number of processes to spin up when using

| process-based threading. If unspecified, `workers` will default to

| 1.

| use_multiprocessing: Boolean. Used for generator or

| `keras.utils.Sequence` input only. If `True`, use process-based

| threading. If unspecified, `use_multiprocessing` will default to

| `False`. Note that because this implementation relies on

| multiprocessing, you should not pass non-pickleable arguments to

| the generator as they can't be passed easily to children

| processes.

| return_dict: If `True`, loss and metric results are returned as a

| dict, with each key being the name of the metric. If `False`, they

| are returned as a list.

| **kwargs: Unused at this time.

|

| See the discussion of `Unpacking behavior for iterator-like inputs` for

| `Model.fit`.

|

| Returns:

| Scalar test loss (if the model has a single output and no metrics)

| or list of scalars (if the model has multiple outputs

| and/or metrics). The attribute `model.metrics_names` will give you

| the display labels for the scalar outputs.

|

| Raises:

| RuntimeError: If `model.evaluate` is wrapped in a `tf.function`.

|
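The list returned by `evaluate` carries no labels of its own. The sketch below shows one way to pair the scalars with their display labels, which gives the same shape as `return_dict=True`. It is an illustration only: the two lists are hypothetical stand-ins for `model.metrics_names` and for the value returned by `model.evaluate(...)`, and `label_results` is a helper made up for this example, not part of Keras or TF-DF.

```python
# Hypothetical stand-ins: in a real session these would come from
# `model.metrics_names` and `model.evaluate(test_ds, return_dict=False)`.
metrics_names = ["loss", "accuracy"]
results = [0.0, 0.93]


def label_results(names, values):
    """Pairs each scalar with its display label (illustrative helper)."""
    if len(names) != len(values):
        raise ValueError("names and values must have the same length")
    return dict(zip(names, values))


labeled = label_results(metrics_names, results)
print(labeled)
```

When the labels are needed anyway, passing `return_dict=True` to `evaluate` avoids this extra step.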

| evaluate_generator(self, generator, steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False, verbose=0)

| Evaluates the model on a data generator.

|

| DEPRECATED:

| `Model.evaluate` now supports generators, so there is no longer any

| need to use this endpoint.

|

| export(self, filepath)

| Create a SavedModel artifact for inference (e.g. via TF-Serving).

|

| This method lets you export a model to a lightweight SavedModel artifact

| that contains the model's forward pass only (its `call()` method)

| and can be served via e.g. TF-Serving. The forward pass is registered

| under the name `serve()` (see example below).

|

| The original code of the model (including any custom layers you may

| have used) is *no longer* necessary to reload the artifact -- it is

| entirely standalone.

|

| Args:

| filepath: `str` or `pathlib.Path` object. Path where to save

| the artifact.

|

| Example:

|

| ```python

| # Create the artifact

| model.export("path/to/location")

|

| # Later, in a different process / environment...

| reloaded_artifact = tf.saved_model.load("path/to/location")

| predictions = reloaded_artifact.serve(input_data)

| ```

|

| If you would like to customize your serving endpoints, you can

| use the lower-level `keras.export.ExportArchive` class. The `export()`

| method relies on `ExportArchive` internally.

|

| fit_generator(self, generator, steps_per_epoch=None, epochs=1, verbose=1, callbacks=None, validation_data=None, validation_steps=None, validation_freq=1, class_weight=None, max_queue_size=10, workers=1, use_multiprocessing=False, shuffle=True, initial_epoch=0)

| Fits the model on data yielded batch-by-batch by a Python generator.

|

| DEPRECATED:

| `Model.fit` now supports generators, so there is no longer any need to

| use this endpoint.

|

| get_compile_config(self)

| Returns a serialized config with information for compiling the model.

|

| This method returns a config dictionary containing all the information

| (optimizer, loss, metrics, etc.) with which the model was compiled.

|

| Returns:

| A dict containing information for compiling the model.

|

| get_layer(self, name=None, index=None)

| Retrieves a layer based on either its name (unique) or index.

|

| If `name` and `index` are both provided, `index` will take precedence.

| Indices are based on order of horizontal graph traversal (bottom-up).

|

| Args:

| name: String, name of layer.

| index: Integer, index of layer.

|

| Returns:

| A layer instance.

|

| get_metrics_result(self)

| Returns the model's metrics values as a dict.

|

| If any of the metric results is a dict (containing multiple metrics),

| each of its entries is added to the top level of the dict returned by this method.

|

| Returns:

| A `dict` containing values of the metrics listed in `self.metrics`.

| Example:

| `{'loss': 0.2, 'accuracy': 0.7}`.

|
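The flattening rule described for `get_metrics_result` can be sketched in plain Python. This is a minimal illustration of the documented behavior, not the library's code: `flatten_metric_results`, the metric name `composite`, and the values are all made up for the example.

```python
def flatten_metric_results(results):
    """Lifts the entries of dict-valued metrics into the top-level dict."""
    flat = {}
    for name, value in results.items():
        if isinstance(value, dict):
            # A metric that reports several values contributes each of them.
            flat.update(value)
        else:
            flat[name] = value
    return flat


# Hypothetical per-metric results; "composite" reports two values at once.
raw = {"loss": 0.2, "composite": {"precision": 0.8, "recall": 0.6}}
flat = flatten_metric_results(raw)
print(flat)
```

Note that the name of the dict-valued metric itself (`composite` here) does not appear in the flattened result.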

| get_weight_paths(self)

| Retrieve all the variables and their paths for the model.

|

| The variable path (string) is a stable key to identify a `tf.Variable`

| instance owned by the model. It can be used to specify variable-specific

| configurations (e.g. DTensor, quantization) from a global view.

|

| This method returns a dict with weight object paths as keys

| and the corresponding `tf.Variable` instances as values.

|

| Note that if the model is a subclassed model and the weights haven't

| been initialized, an empty dict will be returned.

|

| Returns:

| A dict where keys are variable paths and values are `tf.Variable`

| instances.

|

| Example:

|

| ```python

| class SubclassModel(tf.keras.Model):

|

|     def __init__(self, name=None):

|         super().__init__(name=name)

|         self.d1 = tf.keras.layers.Dense(10)

|         self.d2 = tf.keras.layers.Dense(20)

|

|     def call(self, inputs):

|         x = self.d1(inputs)

|         return self.d2(x)

|

| model = SubclassModel()

| model(tf.zeros((10, 10)))

| weight_paths = model.get_weight_paths()

| # weight_paths:

| # {

| # 'd1.kernel': model.d1.kernel,

| # 'd1.bias': model.d1.bias,

| # 'd2.kernel': model.d2.kernel,

| # 'd2.bias': model.d2.bias,

| # }

|

| # Functional model

| inputs = tf.keras.Input((10,), batch_size=10)

| x = tf.keras.layers.Dense(20, name='d1')(inputs)

| output = tf.keras.layers.Dense(30, name='d2')(x)

| model = tf.keras.Model(inputs, output)

| d1 = model.layers[1]

| d2 = model.layers[2]

| weight_paths = model.get_weight_paths()

| # weight_paths:

| # {

| # 'd1.kernel': d1.kernel,

| # 'd1.bias': d1.bias,

| # 'd2.kernel': d2.kernel,

| # 'd2.bias': d2.bias,

| # }

| ```

|

| get_weights(self)

| Retrieves the weights of the model.

|

| Returns:

| A flat list of Numpy arrays.

|

| make_train_function(self, force=False)

| Creates a function that executes one step of training.

|

| This method can be overridden to support custom training logic.

| This method is called by `Model.fit` and `Model.train_on_batch`.

|

| Typically, this method directly controls `tf.function` and

| `tf.distribute.Strategy` settings, and delegates the actual training

| logic to `Model.train_step`.

|

| This function is cached the first time `Model.fit` or

| `Model.train_on_batch` is called. The cache is cleared whenever

| `Model.compile` is called. You can skip the cache and regenerate the

| function with `force=True`.

|

| Args:

| force: Whether to regenerate the train function and skip the cached

| function if available.

|

| Returns:

| Function. The function created by this method should accept a

| `tf.data.Iterator`, and return a `dict` containing values that will

| be passed to `tf.keras.callbacks.Callback.on_train_batch_end`, such as

| `{'loss': 0.2, 'accuracy': 0.7}`.

|
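The caching rules for `make_train_function` (build once, reuse, clear on `compile`, bypass with `force=True`) can be sketched with a small stand-alone class. This is an assumption-laden illustration, not the Keras implementation: `CachedStepBuilder`, its `build_count` attribute, and the toy "loss" (the mean of the batch) are invented for the example.

```python
class CachedStepBuilder:
    """Toy object mimicking the documented caching of the train function."""

    def __init__(self):
        self._train_function = None
        self.build_count = 0  # Illustrative counter, not a Keras attribute.

    def compile(self):
        # Compiling clears the cached function.
        self._train_function = None

    def make_train_function(self, force=False):
        # Reuse the cached function unless the caller forces a rebuild.
        if self._train_function is not None and not force:
            return self._train_function
        self.build_count += 1

        def train_function(iterator):
            # Consumes one batch and returns a dict of logs for callbacks.
            batch = next(iterator)
            return {"loss": sum(batch) / len(batch)}

        self._train_function = train_function
        return train_function


builder = CachedStepBuilder()
first = builder.make_train_function()
second = builder.make_train_function()            # Served from the cache.
forced = builder.make_train_function(force=True)  # Rebuilt on request.
logs = forced(iter([[1.0, 3.0]]))
print(first is second, forced is first, logs)
```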

| predict(self, x, batch_size=None, verbose='auto', steps=None, callbacks=None, max_queue_size=10, workers=1, use_multiprocessing=False)

| Generates output predictions for the input samples.

|

| Computation is done in batches. This method is designed for batch

| processing of large numbers of inputs. It is not intended for use inside

| of loops that iterate over your data and process small numbers of inputs

| at a time.

|

| For small numbers of inputs that fit in one batch,

| directly use `__call__()` for faster execution, e.g.,

| `model(x)`, or `model(x, training=False)` if you have layers such as

| `tf.keras.layers.BatchNormalization` that behave differently during

| inference. You may pair the individual model call with a `tf.function`

| for additional performance inside your inner loop.

| If you need access to numpy array values instead of tensors after your

| model call, you can use `tensor.numpy()` to get the numpy array value of

| an eager tensor.

|

| Also note that the test loss is not affected by

| regularization layers like noise and dropout.

|

| Note: See [this FAQ entry](

| https://keras.io/getting_started/faq/#whats-the-difference-between-model-methods-predict-and-call)

| for more details about the difference between `Model` methods

| `predict()` and `__call__()`.

|
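To make "computation is done in batches" concrete, here is a minimal pure-Python sketch of splitting inputs into batches, running a per-batch function, and concatenating the outputs. It only illustrates the idea and is not how Keras implements `predict`: `iter_batches`, `predict_in_batches`, and the doubling "model" are hypothetical, and the default of 32 mirrors the documented `batch_size` default.

```python
def iter_batches(samples, batch_size=32):
    """Yields consecutive slices of at most `batch_size` samples."""
    if batch_size <= 0:
        raise ValueError("batch_size must be a positive integer")
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


def predict_in_batches(predict_batch, samples, batch_size=32):
    """Applies `predict_batch` to each batch and concatenates the outputs."""
    outputs = []
    for batch in iter_batches(samples, batch_size):
        outputs.extend(predict_batch(batch))
    return outputs


# Hypothetical "model": doubles every input value.
inputs = list(range(70))
batch_sizes = [len(batch) for batch in iter_batches(inputs)]
doubled = predict_in_batches(lambda batch: [2 * v for v in batch], inputs)
print(batch_sizes, doubled[:3])
```

With 70 inputs and the default batch size, the last batch is smaller than the others, which is why per-batch code should not assume a fixed batch length.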

| Args:

| x: Input samples. It could be:

| - A Numpy array (or array-like), or a list of arrays

| (in case the model has multiple inputs).

| - A TensorFlow tensor, or a list of tensors

| (in case the model has multiple inputs).

| - A `tf.data` dataset.

| - A generator or `keras.utils.Sequence` instance.

| A more detailed description of unpacking behavior for iterator

| types (Dataset, generator, Sequence) is given in the `Unpacking

| behavior for iterator-like inputs` section of `Model.fit`.

| batch_size: Integer or `None`.

| Number of samples per batch.

| If unspecified, `batch_size` will default to 32.

| Do not specify the `batch_size` if your data is in the

| form of a dataset, generator, or `keras.utils.Sequence` instance

| (since they generate batches).

| verbose: `"auto"`, 0, 1, or 2. Verbosity mode.

| 0 = silent, 1 = progress bar, 2 = single line.

| `"auto"` becomes 1 in most cases, and 2 when used with

| `ParameterServerStrategy`. Note that the progress bar is not

| particularly useful when logged to a file, so `verbose=2` is

| recommended when not running interactively (e.g. in a production

| environment). Defaults to 'auto'.

| steps: Total number of steps (batches of samples)

| before declaring the prediction round finished.

| Ignored with the default value of `None`. If `x` is a `tf.data`

| dataset and `steps` is `None`, `predict()` will

| run until the input dataset is exhausted.