
This Colab demonstrates how to use a trained TF-Hub module to perform object detection.

Setup

Imports and function definitions

# For running inference on the TF-Hub module.
import tensorflow as tf
import tensorflow_hub as hub

# For downloading and displaying the image.
import matplotlib.pyplot as plt
import tempfile
from urllib.request import urlopen
from io import BytesIO

# For drawing onto the image.
import numpy as np
from PIL import Image
from PIL import ImageColor
from PIL import ImageDraw
from PIL import ImageFont
from PIL import ImageOps

# For measuring the inference time.
import time

# Print the TensorFlow version.
print(tf.__version__)

# Check available GPU devices.
print("The following GPU devices are available: %s" % tf.test.gpu_device_name())

2.7.0
The following GPU devices are available: /device:GPU:0

Example use

Helper functions for downloading images and for visualization.

The visualization code is adapted from the TF Object Detection API and reduced to the minimal functionality needed here.

def display_image(image):
    plt.figure(figsize=(20, 15))
    plt.grid(False)
    plt.imshow(image)

def download_and_resize_image(url, new_width=256, new_height=256,
                              display=False):
    _, filename = tempfile.mkstemp(suffix=".jpg")
    response = urlopen(url)
    image_data = response.read()
    image_data = BytesIO(image_data)
    pil_image = Image.open(image_data)
    # Crop to the target aspect ratio around the center, then resize.
    # Image.LANCZOS is the filter that the removed Image.ANTIALIAS alias named.
    pil_image = ImageOps.fit(pil_image, (new_width, new_height), Image.LANCZOS)
    pil_image_rgb = pil_image.convert("RGB")
    pil_image_rgb.save(filename, format="JPEG", quality=90)
    print("Image downloaded to %s." % filename)
    if display:
        display_image(pil_image)
    return filename
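`download_and_resize_image` relies on `ImageOps.fit`. That function crops the image to the requested aspect ratio around its center (the default `centering=(0.5, 0.5)`) and then resizes it, so the picture is trimmed rather than stretched. The sketch below reproduces only that crop geometry using plain arithmetic. `center_crop_box` is a hypothetical helper, not a Pillow function. Pillow's own implementation also supports a `bleed` border and rounds to whole pixels.

```python
def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (left, top, right, bottom) of the largest centered region of a
    src_w x src_h image that has the dst_w:dst_h aspect ratio."""
    src_ratio = src_w / src_h
    dst_ratio = dst_w / dst_h
    if src_ratio > dst_ratio:
        # Source is wider than the target: keep the full height, trim the sides.
        crop_w = src_h * dst_ratio
        crop_h = src_h
    else:
        # Source is taller (or equal): keep the full width, trim top and bottom.
        crop_w = src_w
        crop_h = src_w / dst_ratio
    left = (src_w - crop_w) / 2
    top = (src_h - crop_h) / 2
    return (left, top, left + crop_w, top + crop_h)


print(center_crop_box(1000, 500, 256, 256))  # (250.0, 0.0, 750.0, 500.0)
```

The crop box is then scaled down to `(new_width, new_height)`. This is why a 256×256 request on a wide photo drops its left and right edges.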

def draw_bounding_box_on_image(image,
                               ymin,
                               xmin,
                               ymax,
                               xmax,
                               color,
                               font,
                               thickness=4,
                               display_str_list=()):
    """Adds a bounding box to an image."""
    draw = ImageDraw.Draw(image)
    im_width, im_height = image.size
    (left, right, top, bottom) = (xmin * im_width, xmax * im_width,
                                  ymin * im_height, ymax * im_height)
    draw.line([(left, top), (left, bottom), (right, bottom), (right, top),
               (left, top)],
              width=thickness,
              fill=color)

    # If the total height of the display strings added to the top of the bounding
    # box exceeds the top of the image, stack the strings below the bounding box
    # instead of above.
    # font.getbbox() returns (left, top, right, bottom) relative to the drawing
    # origin; right and bottom give the extent that the removed getsize() reported.
    display_str_heights = [font.getbbox(ds)[3] for ds in display_str_list]
    # Each display_str has a top and bottom margin of 0.05x.
    total_display_str_height = (1 + 2 * 0.05) * sum(display_str_heights)

    if top > total_display_str_height:
        text_bottom = top
    else:
        text_bottom = top + total_display_str_height
    # Reverse list and print from bottom to top.
    for display_str in display_str_list[::-1]:
        _, _, text_width, text_height = font.getbbox(display_str)
        margin = np.ceil(0.05 * text_height)
        draw.rectangle([(left, text_bottom - text_height - 2 * margin),
                        (left + text_width, text_bottom)],
                       fill=color)
        draw.text((left + margin, text_bottom - text_height - margin),
                  display_str,
                  fill="black",
                  font=font)
        # Move up by the full label height: the text plus both margins.
        text_bottom -= text_height + 2 * margin
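The geometry in `draw_bounding_box_on_image` comes down to two rules. First, box corners arrive as normalized `[0, 1]` fractions and are scaled by the image width and height. Second, the label stack goes above the box only if it fits between the box and the top edge of the image, with each label padded by a 0.05 margin above and below. The sketch restates both rules as plain functions so they can be checked without Pillow. `to_pixel_box` and `label_bottom` are illustrative names, not part of any library.

```python
def to_pixel_box(ymin, xmin, ymax, xmax, im_width, im_height):
    """Scale normalized [0, 1] corners to pixels.

    Returns (left, right, top, bottom), the order used by
    draw_bounding_box_on_image.
    """
    return (xmin * im_width, xmax * im_width, ymin * im_height, ymax * im_height)


def label_bottom(top, label_heights, margin_frac=0.05):
    """Return the y coordinate where the label stack ends.

    Labels sit above the box when their padded total height fits above `top`;
    otherwise the stack is shifted down so that it starts at the box top.
    """
    total = (1 + 2 * margin_frac) * sum(label_heights)
    return top if top > total else top + total


left, right, top, bottom = to_pixel_box(0.1, 0.2, 0.5, 0.6, 1000, 500)
print(left, right, top, bottom)      # 200.0 600.0 50.0 250.0
print(label_bottom(top, [20]))       # 50.0 (the 20 px label fits above the box)
print(label_bottom(10.0, [20]))      # no room above: 10 + 1.1 * 20
```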

def draw_boxes(image, boxes, class_names, scores, max_boxes=10, min_score=0.1):
    """Overlay labeled boxes on an image with formatted scores and label names."""
    colors = list(ImageColor.colormap.values())

    try:
        font = ImageFont.truetype(
            "/usr/share/fonts/truetype/liberation/LiberationSansNarrow-Regular.ttf",
            25)
    except IOError:
        print("Font not found, using default font.")
        font = ImageFont.load_default()

    for i in range(min(boxes.shape[0], max_boxes)):
        if scores[i] >= min_score:
            ymin, xmin, ymax, xmax = tuple(boxes[i])
            display_str = "{}: {}%".format(class_names[i].decode("ascii"),
                                           int(100 * scores[i]))
            color = colors[hash(class_names[i]) % len(colors)]
            image_pil = Image.fromarray(np.uint8(image)).convert("RGB")
            draw_bounding_box_on_image(
                image_pil,
                ymin,
                xmin,
                ymax,
                xmax,
                color,
                font,
                display_str_list=[display_str])
            np.copyto(image, np.array(image_pil))
    return image
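`draw_boxes` examines only the first `max_boxes` detections and then skips any whose score is below `min_score`. It therefore shows the most confident boxes only when the detector returns scores in descending order. Each box is labeled `name: NN%`, with the percentage truncated by `int()`, and its color is chosen with `hash()`. Python salts `hash()` for `bytes` and `str` per process unless `PYTHONHASHSEED` is set, so a class can get a different color on each run. The sketch below mirrors the selection and labeling logic in plain Python. As a swapped-in alternative that is not in the original, it derives the color index from `zlib.crc32`, which is deterministic. All three function names are hypothetical.

```python
import zlib


def select_detections(scores, max_boxes=10, min_score=0.1):
    """Indices draw_boxes would draw: the first max_boxes entries, filtered by score."""
    return [i for i in range(min(len(scores), max_boxes)) if scores[i] >= min_score]


def format_label(class_name, score):
    """Build the 'Name: NN%' label; int() truncates rather than rounds."""
    return "{}: {}%".format(class_name.decode("ascii"), int(100 * score))


def stable_color_index(class_name, n_colors):
    """Hypothetical replacement for hash(): crc32 of the class name bytes gives
    the same index in every Python process."""
    return zlib.crc32(class_name) % n_colors


print(select_detections([0.9, 0.5, 0.05, 0.3], max_boxes=3))  # [0, 1]
print(format_label(b"Person", 0.879))                          # Person: 87%
```

In the first call, index 3 is never considered because it lies beyond `max_boxes=3`, even though its score passes the threshold.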

Apply module

Load a public image from Open Images v4, save it locally, and display it.

# By Heiko Gorski, Source: https://commons.wikimedia.org/wiki/File:Naxos_Taverna.jpg

image_url = "https://upload.wikimedia.org/wikipedia/commons/6/60/Naxos_Taverna.jpg"

downloaded_image_path = download_and_resize_image(image_url, 1280, 856, True)

Image downloaded to /tmp/tmpu_02gvdt.jpg.

Pick an object detection module and apply it to the downloaded image. Modules:

- FasterRCNN + InceptionResNet V2: high accuracy,

- SSD + MobileNet V2: small and fast.

module_handle = "https://tfhub.dev/google/faster_rcnn/openimages_v4/inception_resnet_v2/1"

detector = hub.load(module_handle).signatures['default']

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

def load_img(path):
    img = tf.io.read_file(path)
    img = tf.image.decode_jpeg(img, channels=3)
    return img
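`load_img` returns the decoded JPEG as a `uint8` tensor of shape `[H, W, 3]`. `run_detector` then passes it through `tf.image.convert_image_dtype(img, tf.float32)`, which rescales integer input by the maximum value of its source type. It also indexes with `tf.newaxis` to prepend a batch axis. For each channel value $x$, the transformation is:

$$
x' = \frac{x}{255}, \qquad x \in \{0, 1, \dots, 255\} \;\Rightarrow\; x' \in [0, 1],
\qquad [H, W, 3] \;\longrightarrow\; [1, H, W, 3].
$$

For the 1280×856 image downloaded above, the detector therefore receives a `float32` tensor of shape `[1, 856, 1280, 3]`.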

def run_detector(detector, path):
    img = load_img(path)

    # Scale uint8 pixels to float32 in [0, 1] and add a batch dimension.
    converted_img = tf.image.convert_image_dtype(img, tf.float32)[tf.newaxis, ...]
    start_time = time.time()
    result = detector(converted_img)
    end_time = time.time()

    result = {key: value.numpy() for key, value in result.items()}

    # Counts every returned detection, including low-scoring ones.
    print("Found %d objects." % len(result["detection_scores"]))
    print("Inference time: ", end_time - start_time)

    image_with_boxes = draw_boxes(
        img.numpy(), result["detection_boxes"],
        result["detection_class_entities"], result["detection_scores"])

    display_image(image_with_boxes)

run_detector(detector, downloaded_image_path)

Found 100 objects.
Inference time: 37.78577899932861

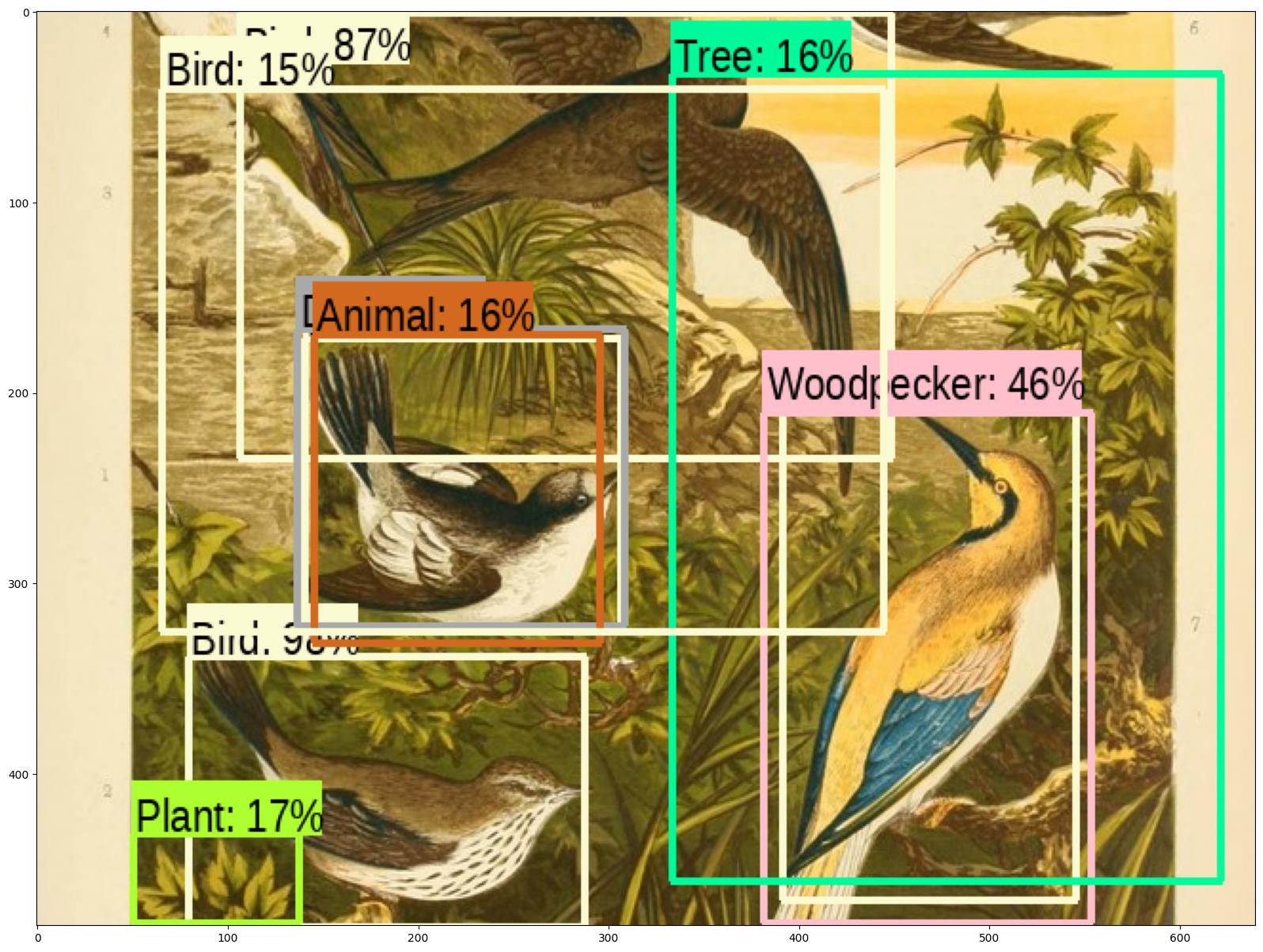

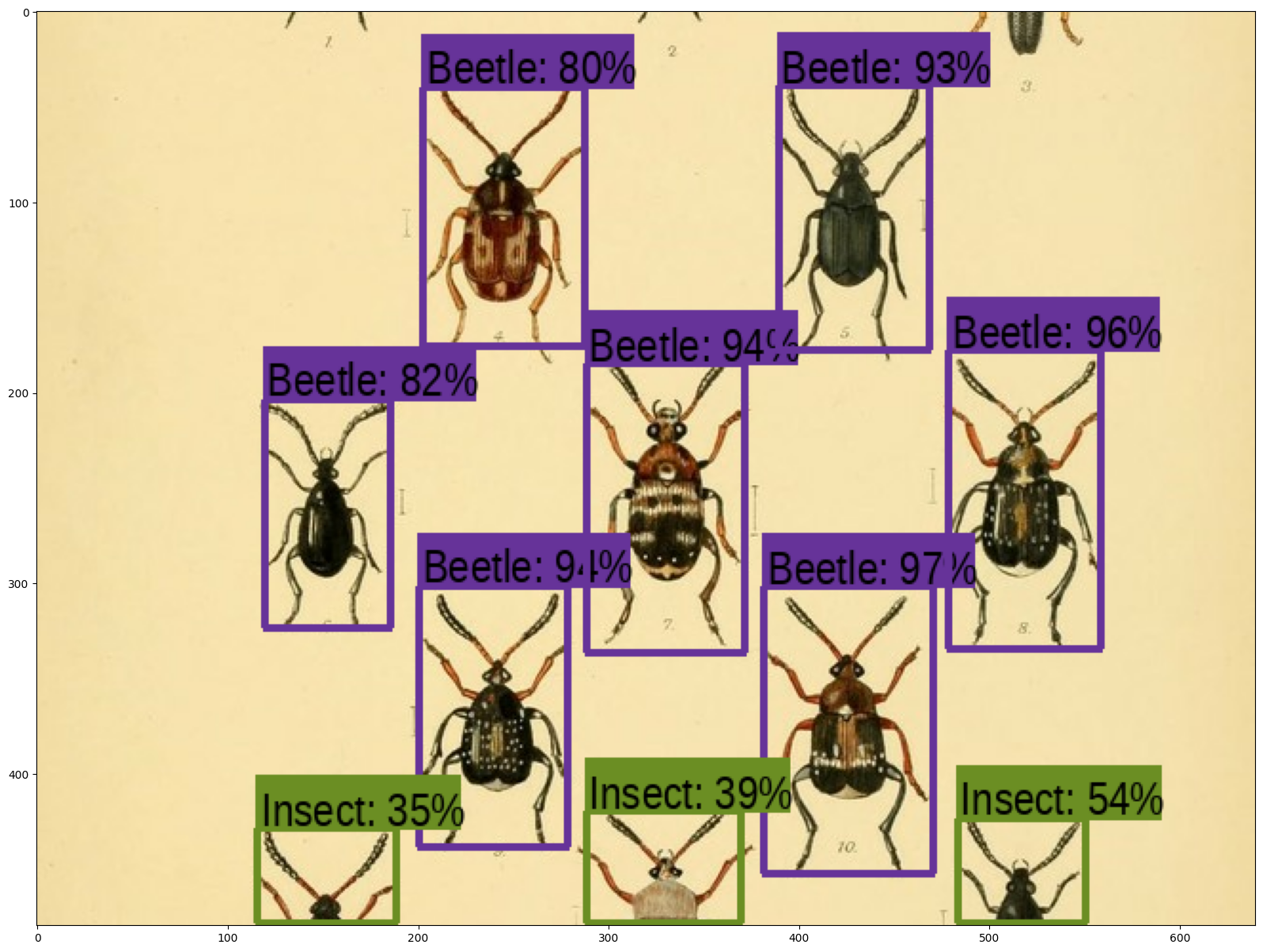

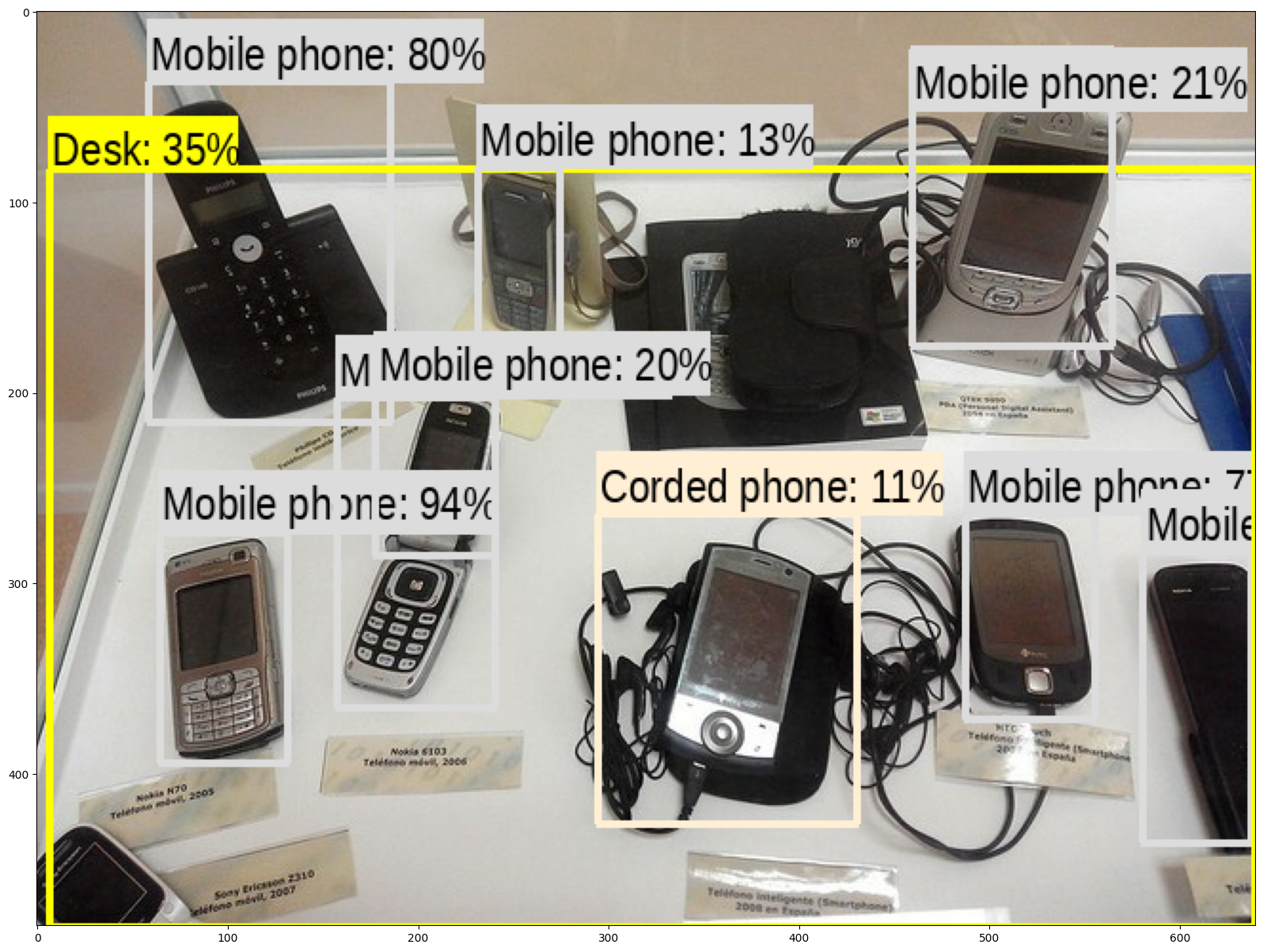
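The first call above reports about 37.8 s, while the later calls on 640×480 images report under 1.4 s. The first image is larger, and the first call to a freshly loaded model usually also pays one-time setup costs. A single timing of a first call therefore says little about steady-state speed. A common remedy, sketched here under that assumption, is to run a few untimed warm-up calls and then report the median of several timed calls. `benchmark` is a hypothetical helper. It uses `time.perf_counter()`, which is intended for measuring short intervals, instead of `time.time()`.

```python
import statistics
import time


def benchmark(fn, *args, warmup=1, repeats=5):
    """Return the median wall-clock time of fn(*args), in seconds.

    The first `warmup` calls run but are not timed, so one-time setup cost
    is excluded from the result.
    """
    for _ in range(warmup):
        fn(*args)
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Stand-in workload. With the notebook's objects, this would presumably be
# benchmark(detector, img), where img is prepared like converted_img in
# run_detector; that call is not run here.
print("Median time: %.6f s" % benchmark(sum, range(100_000)))
```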

More images

Run inference on a few additional images, with time tracking.

image_urls = [
    # Source: https://commons.wikimedia.org/wiki/File:The_Coleoptera_of_the_British_islands_(Plate_125)_(8592917784).jpg
    "https://upload.wikimedia.org/wikipedia/commons/1/1b/The_Coleoptera_of_the_British_islands_%28Plate_125%29_%288592917784%29.jpg",
    # By Américo Toledano, Source: https://commons.wikimedia.org/wiki/File:Biblioteca_Maim%C3%B3nides,_Campus_Universitario_de_Rabanales_007.jpg
    "https://upload.wikimedia.org/wikipedia/commons/thumb/0/0d/Biblioteca_Maim%C3%B3nides%2C_Campus_Universitario_de_Rabanales_007.jpg/1024px-Biblioteca_Maim%C3%B3nides%2C_Campus_Universitario_de_Rabanales_007.jpg",
    # Source: https://commons.wikimedia.org/wiki/File:The_smaller_British_birds_(8053836633).jpg
    "https://upload.wikimedia.org/wikipedia/commons/0/09/The_smaller_British_birds_%288053836633%29.jpg",
]
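The URLs in `image_urls` are percent-encoded. `%28` and `%29` stand for parentheses, `%2C` for a comma, and `%C3%B3` for the UTF-8 bytes of `ó`. `download_and_resize_image` saves each image under a random temporary name, so a readable name can help when logging which picture produced which output. Here is a minimal sketch using only the standard library; `readable_name` is a hypothetical helper:

```python
import posixpath
from urllib.parse import unquote, urlparse


def readable_name(url):
    """Return the percent-decoded file name at the end of a URL's path."""
    return unquote(posixpath.basename(urlparse(url).path))


url = ("https://upload.wikimedia.org/wikipedia/commons/0/09/"
       "The_smaller_British_birds_%288053836633%29.jpg")
print(readable_name(url))  # The_smaller_British_birds_(8053836633).jpg
```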

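def detect_img(image_url):
    start_time = time.time()
    image_path = download_and_resize_image(image_url, 640, 480)
    run_detector(detector, image_path)
    end_time = time.time()
    # This total also covers the download, the resize, and the drawing done in
    # run_detector, so it is larger than the inference time printed there.
    print("Inference time:", end_time - start_time)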

detect_img(image_urls[0])

Image downloaded to /tmp/tmpuxkybwg_.jpg.
Found 100 objects.
Inference time: 1.385962724685669
Inference time: 1.8049812316894531

detect_img(image_urls[1])

Image downloaded to /tmp/tmp3wrs8a5l.jpg.
Found 100 objects.
Inference time: 0.8379817008972168
Inference time: 1.2247464656829834

detect_img(image_urls[2])

Image downloaded to /tmp/tmpu5bhhlnw.jpg.
Found 100 objects.
Inference time: 0.8334732055664062
Inference time: 1.394953966140747
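In `detect_img`, the second `Inference time` line measures everything between its two `time.time()` calls. That span includes the download and resize, the detection itself, and the drawing and display done in `run_detector`. This is why it always exceeds the first line, which times only the `detector` call. One way to report each part separately is a small context manager that records named stages. `stage` is a hypothetical helper, and `time.sleep` stands in for the real work.

```python
import time
from contextlib import contextmanager


@contextmanager
def stage(timings, name):
    """Store the wall-clock duration of the enclosed block in timings[name]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = time.perf_counter() - start


timings = {}
with stage(timings, "download"):
    time.sleep(0.01)  # stand-in for download_and_resize_image(...)
with stage(timings, "inference"):
    time.sleep(0.02)  # stand-in for the detector call
print({name: round(seconds, 3) for name, seconds in timings.items()})
```

Because the duration is written in a `finally` clause, a stage that raises an exception still records how long it ran.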