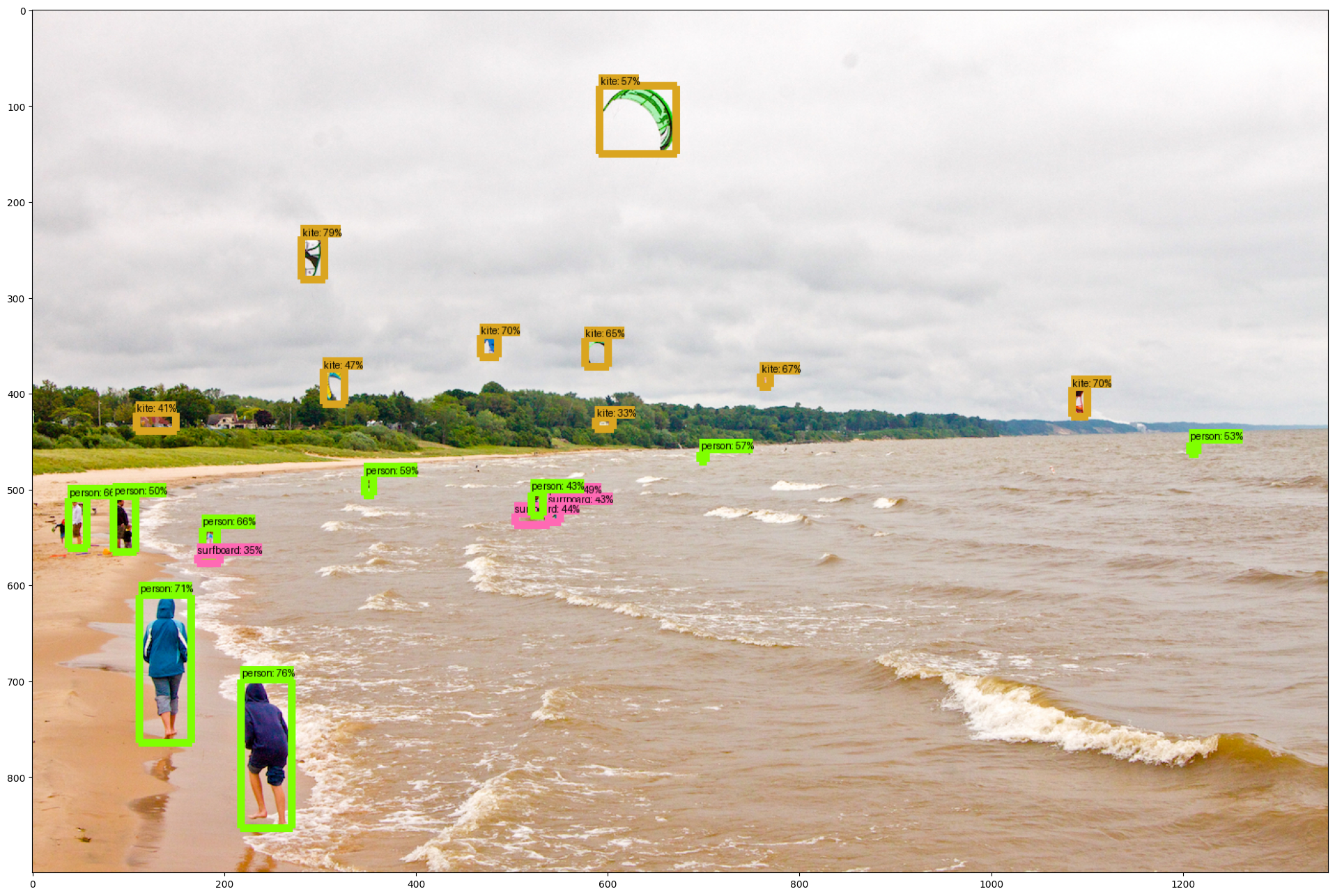

Welcome to the TensorFlow Hub Object Detection Colab! This notebook will take you through the steps of running an "out-of-the-box" object detection model on images.

More models

This collection contains TF2 object detection models that have been trained on the COCO 2017 dataset. Here you can find all object detection models that are currently hosted on tfhub.dev.

Imports and Setup

Let's start with the base imports.

# This Colab requires a recent numpy version.
!pip install numpy==1.24.3
!pip install protobuf==3.20.3

import os

import pathlib

import matplotlib

import matplotlib.pyplot as plt

import io

import scipy.misc

import numpy as np

from six import BytesIO

from PIL import Image, ImageDraw, ImageFont

from six.moves.urllib.request import urlopen

import tensorflow as tf

import tensorflow_hub as hub

tf.get_logger().setLevel('ERROR')

Utilities

Run the following cell to create some utils that will be needed later:

- Helper method to load an image

- Map of Model Name to TF Hub handle

- List of tuples with Human Keypoints for the COCO 2017 dataset. This is needed for models with keypoints.
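As a rough illustration of the array layout the image helper produces, here is a NumPy-only sketch that uses synthetic pixels instead of a real image. The function name `pixels_to_batch` and the tiny 2x3 image are assumptions made for this example; the point is only that a flat, row-by-row pixel sequence is reshaped to `(1, height, width, 3)` with dtype `uint8`.

```python
import numpy as np

def pixels_to_batch(flat_pixels, width, height):
    """Reshape a flat (height*width, 3) pixel list into a (1, H, W, 3) uint8 batch."""
    arr = np.array(flat_pixels)
    return arr.reshape((1, height, width, 3)).astype(np.uint8)

# Synthetic image with 2 rows and 3 columns, listed row by row
# (the same order in which PIL's getdata() yields pixels).
width, height = 3, 2
flat = [(r * 10 + c, 0, 255) for r in range(height) for c in range(width)]

batch = pixels_to_batch(flat, width, height)
print(batch.shape)     # (1, 2, 3, 3)
print(batch[0, 1, 2])  # pixel at row 1, column 2
```

Note that PIL reports `image.size` as `(width, height)`, while the array is indexed as `[batch, row, column, channel]`, which is why the two values swap places in the reshape.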

# @title Run this!!

def load_image_into_numpy_array(path):
  """Load an image from a file path or URL into a numpy array.

  Puts the image into a numpy array to feed into the TensorFlow graph.
  Note that by convention we put it into a numpy array with shape
  (batch, height, width, channels), where batch=1 and channels=3 for RGB.

  Args:
    path: the file path or http(s) URL of the image

  Returns:
    uint8 numpy array with shape (1, img_height, img_width, 3)
  """
  image = None
  if path.startswith('http'):
    response = urlopen(path)
    image_data = response.read()
    image_data = BytesIO(image_data)
    image = Image.open(image_data)
  else:
    image_data = tf.io.gfile.GFile(path, 'rb').read()
    image = Image.open(BytesIO(image_data))

  (im_width, im_height) = image.size
  return np.array(image.getdata()).reshape(
      (1, im_height, im_width, 3)).astype(np.uint8)

ALL_MODELS = {

'CenterNet HourGlass104 512x512' : 'https://tfhub.dev/tensorflow/centernet/hourglass_512x512/1',

'CenterNet HourGlass104 Keypoints 512x512' : 'https://tfhub.dev/tensorflow/centernet/hourglass_512x512_kpts/1',

'CenterNet HourGlass104 1024x1024' : 'https://tfhub.dev/tensorflow/centernet/hourglass_1024x1024/1',

'CenterNet HourGlass104 Keypoints 1024x1024' : 'https://tfhub.dev/tensorflow/centernet/hourglass_1024x1024_kpts/1',

'CenterNet Resnet50 V1 FPN 512x512' : 'https://tfhub.dev/tensorflow/centernet/resnet50v1_fpn_512x512/1',

'CenterNet Resnet50 V1 FPN Keypoints 512x512' : 'https://tfhub.dev/tensorflow/centernet/resnet50v1_fpn_512x512_kpts/1',

'CenterNet Resnet101 V1 FPN 512x512' : 'https://tfhub.dev/tensorflow/centernet/resnet101v1_fpn_512x512/1',

'CenterNet Resnet50 V2 512x512' : 'https://tfhub.dev/tensorflow/centernet/resnet50v2_512x512/1',

'CenterNet Resnet50 V2 Keypoints 512x512' : 'https://tfhub.dev/tensorflow/centernet/resnet50v2_512x512_kpts/1',

'EfficientDet D0 512x512' : 'https://tfhub.dev/tensorflow/efficientdet/d0/1',

'EfficientDet D1 640x640' : 'https://tfhub.dev/tensorflow/efficientdet/d1/1',

'EfficientDet D2 768x768' : 'https://tfhub.dev/tensorflow/efficientdet/d2/1',

'EfficientDet D3 896x896' : 'https://tfhub.dev/tensorflow/efficientdet/d3/1',

'EfficientDet D4 1024x1024' : 'https://tfhub.dev/tensorflow/efficientdet/d4/1',

'EfficientDet D5 1280x1280' : 'https://tfhub.dev/tensorflow/efficientdet/d5/1',

'EfficientDet D6 1280x1280' : 'https://tfhub.dev/tensorflow/efficientdet/d6/1',

'EfficientDet D7 1536x1536' : 'https://tfhub.dev/tensorflow/efficientdet/d7/1',

'SSD MobileNet v2 320x320' : 'https://tfhub.dev/tensorflow/ssd_mobilenet_v2/2',

'SSD MobileNet V1 FPN 640x640' : 'https://tfhub.dev/tensorflow/ssd_mobilenet_v1/fpn_640x640/1',

'SSD MobileNet V2 FPNLite 320x320' : 'https://tfhub.dev/tensorflow/ssd_mobilenet_v2/fpnlite_320x320/1',

'SSD MobileNet V2 FPNLite 640x640' : 'https://tfhub.dev/tensorflow/ssd_mobilenet_v2/fpnlite_640x640/1',

'SSD ResNet50 V1 FPN 640x640 (RetinaNet50)' : 'https://tfhub.dev/tensorflow/retinanet/resnet50_v1_fpn_640x640/1',

'SSD ResNet50 V1 FPN 1024x1024 (RetinaNet50)' : 'https://tfhub.dev/tensorflow/retinanet/resnet50_v1_fpn_1024x1024/1',

'SSD ResNet101 V1 FPN 640x640 (RetinaNet101)' : 'https://tfhub.dev/tensorflow/retinanet/resnet101_v1_fpn_640x640/1',

'SSD ResNet101 V1 FPN 1024x1024 (RetinaNet101)' : 'https://tfhub.dev/tensorflow/retinanet/resnet101_v1_fpn_1024x1024/1',

'SSD ResNet152 V1 FPN 640x640 (RetinaNet152)' : 'https://tfhub.dev/tensorflow/retinanet/resnet152_v1_fpn_640x640/1',

'SSD ResNet152 V1 FPN 1024x1024 (RetinaNet152)' : 'https://tfhub.dev/tensorflow/retinanet/resnet152_v1_fpn_1024x1024/1',

'Faster R-CNN ResNet50 V1 640x640' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet50_v1_640x640/1',

'Faster R-CNN ResNet50 V1 1024x1024' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet50_v1_1024x1024/1',

'Faster R-CNN ResNet50 V1 800x1333' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet50_v1_800x1333/1',

'Faster R-CNN ResNet101 V1 640x640' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet101_v1_640x640/1',

'Faster R-CNN ResNet101 V1 1024x1024' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet101_v1_1024x1024/1',

'Faster R-CNN ResNet101 V1 800x1333' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet101_v1_800x1333/1',

'Faster R-CNN ResNet152 V1 640x640' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet152_v1_640x640/1',

'Faster R-CNN ResNet152 V1 1024x1024' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet152_v1_1024x1024/1',

'Faster R-CNN ResNet152 V1 800x1333' : 'https://tfhub.dev/tensorflow/faster_rcnn/resnet152_v1_800x1333/1',

'Faster R-CNN Inception ResNet V2 640x640' : 'https://tfhub.dev/tensorflow/faster_rcnn/inception_resnet_v2_640x640/1',

'Faster R-CNN Inception ResNet V2 1024x1024' : 'https://tfhub.dev/tensorflow/faster_rcnn/inception_resnet_v2_1024x1024/1',

'Mask R-CNN Inception ResNet V2 1024x1024' : 'https://tfhub.dev/tensorflow/mask_rcnn/inception_resnet_v2_1024x1024/1'

}

IMAGES_FOR_TEST = {

'Beach' : 'models/research/object_detection/test_images/image2.jpg',

'Dogs' : 'models/research/object_detection/test_images/image1.jpg',

# By Heiko Gorski, Source: https://commons.wikimedia.org/wiki/File:Naxos_Taverna.jpg

'Naxos Taverna' : 'https://upload.wikimedia.org/wikipedia/commons/6/60/Naxos_Taverna.jpg',

# Source: https://commons.wikimedia.org/wiki/File:The_Coleoptera_of_the_British_islands_(Plate_125)_(8592917784).jpg

'Beatles' : 'https://upload.wikimedia.org/wikipedia/commons/1/1b/The_Coleoptera_of_the_British_islands_%28Plate_125%29_%288592917784%29.jpg',

# By Américo Toledano, Source: https://commons.wikimedia.org/wiki/File:Biblioteca_Maim%C3%B3nides,_Campus_Universitario_de_Rabanales_007.jpg

'Phones' : 'https://upload.wikimedia.org/wikipedia/commons/thumb/0/0d/Biblioteca_Maim%C3%B3nides%2C_Campus_Universitario_de_Rabanales_007.jpg/1024px-Biblioteca_Maim%C3%B3nides%2C_Campus_Universitario_de_Rabanales_007.jpg',

# Source: https://commons.wikimedia.org/wiki/File:The_smaller_British_birds_(8053836633).jpg

'Birds' : 'https://upload.wikimedia.org/wikipedia/commons/0/09/The_smaller_British_birds_%288053836633%29.jpg',

}

COCO17_HUMAN_POSE_KEYPOINTS = [(0, 1),

(0, 2),

(1, 3),

(2, 4),

(0, 5),

(0, 6),

(5, 7),

(7, 9),

(6, 8),

(8, 10),

(5, 6),

(5, 11),

(6, 12),

(11, 12),

(11, 13),

(13, 15),

(12, 14),

(14, 16)]
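Each tuple in the list above is an edge between two keypoint indices. The sketch below shows one way to read it, assuming the standard COCO keypoint order (index 0 is the nose, 5 and 6 are the shoulders, and so on). The helper `visible_edges`, the 0.5 threshold, and the scores are hypothetical and only serve the illustration.

```python
import numpy as np

# Standard COCO keypoint order (index -> body part).
COCO_KEYPOINT_NAMES = [
    'nose', 'left_eye', 'right_eye', 'left_ear', 'right_ear',
    'left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow',
    'left_wrist', 'right_wrist', 'left_hip', 'right_hip',
    'left_knee', 'right_knee', 'left_ankle', 'right_ankle',
]

# Same edges as COCO17_HUMAN_POSE_KEYPOINTS, repeated so this runs on its own.
EDGES = [(0, 1), (0, 2), (1, 3), (2, 4), (0, 5), (0, 6), (5, 7), (7, 9),
         (6, 8), (8, 10), (5, 6), (5, 11), (6, 12), (11, 12), (11, 13),
         (13, 15), (12, 14), (14, 16)]

def visible_edges(scores, edges, min_score=0.5):
    """Return the edges whose two endpoint keypoints both reach min_score."""
    return [(a, b) for a, b in edges
            if scores[a] >= min_score and scores[b] >= min_score]

# Hypothetical scores: only the nose, eyes and shoulders are confidently detected.
scores = np.zeros(17)
scores[[0, 1, 2, 5, 6]] = 0.9

for a, b in visible_edges(scores, EDGES):
    print(COCO_KEYPOINT_NAMES[a], '-', COCO_KEYPOINT_NAMES[b])
```

With these scores only the edges among the nose, eyes and shoulders are kept, which is the kind of filtering a skeleton overlay needs when part of a person is hidden.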

Visualization tools

To visualize the images with the proper detected boxes, keypoints and segmentation, we will use the TensorFlow Object Detection API. To install it we will clone the repo.

# Clone the tensorflow models repository
!git clone --depth 1 https://github.com/tensorflow/models

Cloning into 'models'... remote: Enumerating objects: 4084, done. remote: Counting objects: 100% (4084/4084), done. remote: Compressing objects: 100% (3076/3076), done. remote: Total 4084 (delta 1188), reused 2898 (delta 948), pack-reused 0 Receiving objects: 100% (4084/4084), 44.61 MiB | 49.66 MiB/s, done. Resolving deltas: 100% (1188/1188), done.

Installing the Object Detection API

%%bash
sudo apt install -y protobuf-compiler
cd models/research/
protoc object_detection/protos/*.proto --python_out=.
cp object_detection/packages/tf2/setup.py .
python -m pip install .

WARNING: apt does not have a stable CLI interface. Use with caution in scripts. dpkg-preconfigure: unable to re-open stdin: No such file or directory
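Before moving on, it can be useful to confirm that the installation made the package visible to Python. This is a small optional check that uses only the standard library; the helper name `is_importable` is an assumption for this sketch and is not part of the Object Detection API.

```python
import importlib.util

def is_importable(module_name):
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None

# Report the packages this Colab relies on.
for name in ('object_detection', 'tensorflow_hub'):
    status = 'found' if is_importable(name) else 'missing'
    print(f'{name}: {status}')
```

If `object_detection` is reported as missing, rerunning the installation cell (or restarting the runtime) is usually the first thing to try.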

Now we can import the dependencies we will need later:

from object_detection.utils import label_map_util

from object_detection.utils import visualization_utils as viz_utils

from object_detection.utils import ops as utils_ops

%matplotlib inline

Load label map data (for plotting).

Label maps map index numbers to category names, so that when our convolutional network predicts 5, we know that this corresponds to airplane. Here we use internal utility functions, but anything that returns a dictionary mapping integers to appropriate string labels would be fine.

For simplicity, we load the label map from the same repository we cloned for the Object Detection API code.

PATH_TO_LABELS = './models/research/object_detection/data/mscoco_label_map.pbtxt'

category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS, use_display_name=True)
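To make the "dictionary mapping integers to labels" idea concrete, here is a hand-built sketch with the same general shape as `category_index`: each integer id maps to a small record with `id` and `name` fields. The helper `build_category_index` and the variable `category_index_sketch` are hypothetical names, and only the first five COCO classes are listed.

```python
def build_category_index(id_name_pairs):
    """Map each integer class id to a {'id', 'name'} record."""
    return {class_id: {'id': class_id, 'name': name}
            for class_id, name in id_name_pairs}

# First five COCO classes only; the real label map has many more entries.
category_index_sketch = build_category_index([
    (1, 'person'), (2, 'bicycle'), (3, 'car'), (4, 'motorcycle'), (5, 'airplane'),
])

predicted_class = 5
print(category_index_sketch[predicted_class]['name'])  # airplane
```

A dictionary like this could stand in for the loaded label map when you only care about a handful of classes.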

Build a detection model and load pre-trained model weights

Here we choose which object detection model to use. Select the architecture and it will be loaded automatically. If you want to try another architecture later, just change the selection in the next cell and re-run the cells that follow it.

Tip: if you want to read more details about the selected model, you can follow the link (model handle) and read additional documentation on TF Hub. After you select a model, we will print the handle to make it easier.

Model Selection

model_display_name = 'CenterNet HourGlass104 Keypoints 512x512' # @param ['CenterNet HourGlass104 512x512','CenterNet HourGlass104 Keypoints 512x512','CenterNet HourGlass104 1024x1024','CenterNet HourGlass104 Keypoints 1024x1024','CenterNet Resnet50 V1 FPN 512x512','CenterNet Resnet50 V1 FPN Keypoints 512x512','CenterNet Resnet101 V1 FPN 512x512','CenterNet Resnet50 V2 512x512','CenterNet Resnet50 V2 Keypoints 512x512','EfficientDet D0 512x512','EfficientDet D1 640x640','EfficientDet D2 768x768','EfficientDet D3 896x896','EfficientDet D4 1024x1024','EfficientDet D5 1280x1280','EfficientDet D6 1280x1280','EfficientDet D7 1536x1536','SSD MobileNet v2 320x320','SSD MobileNet V1 FPN 640x640','SSD MobileNet V2 FPNLite 320x320','SSD MobileNet V2 FPNLite 640x640','SSD ResNet50 V1 FPN 640x640 (RetinaNet50)','SSD ResNet50 V1 FPN 1024x1024 (RetinaNet50)','SSD ResNet101 V1 FPN 640x640 (RetinaNet101)','SSD ResNet101 V1 FPN 1024x1024 (RetinaNet101)','SSD ResNet152 V1 FPN 640x640 (RetinaNet152)','SSD ResNet152 V1 FPN 1024x1024 (RetinaNet152)','Faster R-CNN ResNet50 V1 640x640','Faster R-CNN ResNet50 V1 1024x1024','Faster R-CNN ResNet50 V1 800x1333','Faster R-CNN ResNet101 V1 640x640','Faster R-CNN ResNet101 V1 1024x1024','Faster R-CNN ResNet101 V1 800x1333','Faster R-CNN ResNet152 V1 640x640','Faster R-CNN ResNet152 V1 1024x1024','Faster R-CNN ResNet152 V1 800x1333','Faster R-CNN Inception ResNet V2 640x640','Faster R-CNN Inception ResNet V2 1024x1024','Mask R-CNN Inception ResNet V2 1024x1024']

model_handle = ALL_MODELS[model_display_name]

print('Selected model:'+ model_display_name)

print('Model Handle at TensorFlow Hub: {}'.format(model_handle))

Selected model:CenterNet HourGlass104 Keypoints 512x512 Model Handle at TensorFlow Hub: https://tfhub.dev/tensorflow/centernet/hourglass_512x512_kpts/1
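When the display name is typed by hand rather than picked from the Colab form, a plain dictionary lookup fails with a bare `KeyError`. The following sketch wraps the lookup so that the error lists the valid choices. `MODELS_SUBSET` and `resolve_handle` are illustrative names, and the two entries are copied from `ALL_MODELS` so the snippet runs on its own.

```python
# Two entries copied from ALL_MODELS so the snippet is self-contained.
MODELS_SUBSET = {
    'CenterNet HourGlass104 Keypoints 512x512':
        'https://tfhub.dev/tensorflow/centernet/hourglass_512x512_kpts/1',
    'EfficientDet D0 512x512':
        'https://tfhub.dev/tensorflow/efficientdet/d0/1',
}

def resolve_handle(display_name, models):
    """Return the handle for display_name, or raise with the valid choices."""
    try:
        return models[display_name]
    except KeyError:
        choices = ', '.join(sorted(models))
        raise ValueError(
            f'Unknown model {display_name!r}; choose one of: {choices}') from None

print(resolve_handle('EfficientDet D0 512x512', MODELS_SUBSET))
```

In the notebook itself you would pass `ALL_MODELS` instead of the two-entry subset.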

Loading the selected model from TensorFlow Hub

Here we just need the model handle that was selected; we use the TensorFlow Hub library to load the model into memory.

print('loading model...')

hub_model = hub.load(model_handle)

print('model loaded!')

loading model... 2024-03-09 13:22:07.698952: E external/local_xla/xla/stream_executor/cuda/cuda_driver.cc:282] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_42408) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_209416) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_220336) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_39751) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_218416) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_encoder_decoder_block_layer_call_and_return_conditional_losses_191780) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_hourglass_network_layer_call_and_return_conditional_losses_95722) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_221896) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_convolutional_block_73_layer_call_and_return_conditional_losses_67031) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_216856) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_216256) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_207856) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_31659) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_217696) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_21463) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_33223) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_54689) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_convolutional_block_72_layer_call_and_return_conditional_losses_66888) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_208456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_35454) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_217216) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_208936) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_49960) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_26345) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_215056) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_42192) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_41531) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_216736) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_28786) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_220216) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_49286) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219136) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_37056) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_22124) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_209656) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_37952) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_21679) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_convolutional_block_36_layer_call_and_return_conditional_losses_203862) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_209056) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_209176) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_25671) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_48625) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_214696) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_214216) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_hourglass_network_layer_call_and_return_conditional_losses_158598) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_223816) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_206296) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_217336) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_215536) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_210256) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_41302) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_30553) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_45739) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_29892) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_221296) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_206536) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_211216) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_209296) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_47290) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_208336) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_38861) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_222136) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219616) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_51066) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_205936) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_215176) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_32778) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_24794) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_212776) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_205456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_26116) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_212656) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_27006) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_35238) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_23459) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_214936) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_44188) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219736) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_35918) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_51746) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_218656) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_34329) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_34793) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_29663) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_37488) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_46400) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_51282) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_210856) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_221656) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_205216) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_30782) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_52191) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219496) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_56043) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_30998) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_222616) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_215416) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_220576) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_42853) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_25900) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_214576) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_41086) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_29218) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_53348) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_25010) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_216976) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_40196) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219856) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_211096) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_36147) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_35009) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_54473) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_hourglass_network_layer_call_and_return_conditional_losses_177444) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_217456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_40641) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_222856) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_48180) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_32549) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_215776) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_219376) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_213136) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_47951) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_39090) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_28341) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_207496) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_33668) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_convolutional_block_72_layer_call_and_return_conditional_losses_204131) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_223456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_52884) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_encoder_decoder_block_5_layer_call_and_return_conditional_losses_200628) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_223576) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_30337) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_24565) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_210376) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_212296) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_222376) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_center_net_hourglass_feature_extractor_layer_call_and_return_conditional_losses_120906) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_220696) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_224176) with ops with unsaved custom gradients. Will likely fail if a gradient is requested. 
WARNING:absl:Importing a function (__inference_batchnorm_layer_call_and_return_conditional_losses_24120) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

model loaded!

Loading an image

Let's try the model on a simple image. To help with this, we provide a list of test images.

Here are some simple things to try out if you are curious:

- Try running inference on your own images: upload them to Colab and load them the same way as in the cell below.

- Modify some of the input images and see if detection still works. Some simple things to try out here include flipping the image horizontally, or converting to grayscale (note that we still expect the input image to have 3 channels).

Be careful when using images with an alpha channel: the model expects 3-channel images, and the alpha channel counts as a 4th.
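If one of your own images does carry an alpha channel, one way to handle it is to drop that channel before passing the array on. The snippet below is only a minimal sketch: `drop_alpha_channel` is a hypothetical helper that is not part of this notebook, and it assumes a single image array laid out as (height, width, channels).

```python
import numpy as np


def drop_alpha_channel(image):
    """Return a (height, width, 3) view of the image without its alpha channel.

    Hypothetical helper for illustration; not part of the original notebook.
    Assumes a single image laid out as (height, width, channels).
    """
    image = np.asarray(image)
    if image.ndim != 3 or image.shape[-1] not in (3, 4):
        raise ValueError(f"expected (H, W, 3) or (H, W, 4), got {image.shape}")
    # Keep only the first three channels (R, G, B).
    # A 3-channel input passes through unchanged.
    return image[..., :3]


# Synthetic 2x2 RGBA image standing in for an uploaded PNG with transparency.
rgba = np.zeros((2, 2, 4), dtype=np.uint8)
rgba[..., 3] = 255  # fully opaque alpha

rgb = drop_alpha_channel(rgba)
print(rgb.shape)  # (2, 2, 3)
```

Slicing simply discards the transparency information rather than blending it against a background, which is usually acceptable for a quick detection test. When loading with PIL, calling `.convert('RGB')` on the opened image before turning it into an array should have a similar effect.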

Image Selection (don't forget to execute the cell!)

selected_image = 'Beach' # @param ['Beach', 'Dogs', 'Naxos Taverna', 'Beatles', 'Phones', 'Birds']

flip_image_horizontally = False

convert_image_to_grayscale = False

image_path = IMAGES_FOR_TEST[selected_image]

image_np = load_image_into_numpy_array(image_path)

# Flip horizontally

if(flip_image_horizontally):

  image_np[0] = np.fliplr(image_np[0]).copy()

# Convert image to grayscale

if(convert_image_to_grayscale):

  image_np[0] = np.tile(

      np.mean(image_np[0], 2, keepdims=True), (1, 1, 3)).astype(np.uint8)

plt.figure(figsize=(24,32))

plt.imshow(image_np[0])

plt.show()

Doing the inference

To do the inference we just need to call our TF Hub loaded model.

Things you can try:

- Print out