When working on ML applications such as object detection and NLP, it is sometimes necessary to work with sub-sections (slices) of tensors. For example, your model architecture might include routing, where one layer controls which training example gets routed to the next layer. In that case, you can use tensor slicing ops to split the tensors up and put them back together in the right order.
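The routing idea can be sketched as follows. This is only an illustration under stated assumptions: the boolean gate `route_to_a` and the two stand-in branches (plain multiplications) are hypothetical, not part of any particular architecture. It splits a batch with `tf.gather_nd` and restores the original order with `tf.scatter_nd`.

```python
import tensorflow as tf

# Hypothetical batch of 5 examples with 2 features each.
batch = tf.constant([[1., 1.], [2., 2.], [3., 3.], [4., 4.], [5., 5.]])
# Assumed routing decision per example: True -> branch A, False -> branch B.
route_to_a = tf.constant([True, False, True, False, False])

# Row indices of the examples sent to each branch (int64, shape (n, 1)).
idx_a = tf.where(route_to_a)
idx_b = tf.where(tf.logical_not(route_to_a))

# Split the batch and apply a stand-in computation to each part.
out_a = tf.gather_nd(batch, idx_a) * 10.0
out_b = tf.gather_nd(batch, idx_b) * -1.0

# Scatter each part back to its original rows; the two results do not
# overlap, so adding them reassembles the batch in the original order.
shape = tf.shape(batch, out_type=tf.int64)
merged = tf.scatter_nd(idx_a, out_a, shape) + tf.scatter_nd(idx_b, out_b, shape)
print(merged)
```

Each example ends up in the row it started in, regardless of which branch processed it.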

In NLP applications, you can use tensor slicing to perform word masking while training. For example, you can generate training data from a list of sentences by choosing a word index to mask in each sentence, taking the word out as a label, and then replacing the chosen word with a mask token.
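A minimal sketch of the word-masking procedure, assuming sentences are already encoded as integer token ids. The ids, the mask positions, and `MASK_ID` are made up for illustration. It uses `tf.gather_nd` to pull out the labels and `tf.tensor_scatter_nd_update` to overwrite the chosen positions.

```python
import tensorflow as tf

# Assumed id of the mask token; real vocabularies define their own.
MASK_ID = 0

# Hypothetical token ids: 3 sentences of 5 tokens each.
sentences = tf.constant([[11, 12, 13, 14, 15],
                         [21, 22, 23, 24, 25],
                         [31, 32, 33, 34, 35]])
# One word index to mask in each sentence.
mask_positions = tf.constant([1, 4, 2])

# Build (row, column) pairs: [[0, 1], [1, 4], [2, 2]].
rows = tf.range(tf.shape(sentences)[0])
indices = tf.stack([rows, mask_positions], axis=1)

# Take the chosen words out as labels.
labels = tf.gather_nd(sentences, indices)

# Replace the chosen words with the mask token.
masked = tf.tensor_scatter_nd_update(
    sentences, indices, tf.fill(tf.shape(labels), MASK_ID))

print(labels)
print(masked)
```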

In this guide, you will learn how to use the TensorFlow APIs to:

- Extract slices from a tensor

- Insert data at specific indices in a tensor

This guide assumes familiarity with tensor indexing. Read the indexing sections of the Tensor and TensorFlow NumPy guides before getting started with this guide.

Setup

import tensorflow as tf

import numpy as np

Extract tensor slices

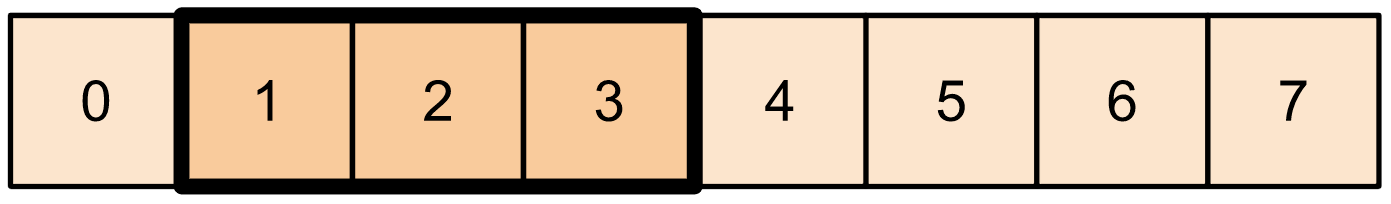

Perform NumPy-like tensor slicing using tf.slice.

t1 = tf.constant([0, 1, 2, 3, 4, 5, 6, 7])

print(tf.slice(t1,

begin=[1],

size=[3]))

tf.Tensor([1 2 3], shape=(3,), dtype=int32)

Alternatively, you can use the more Pythonic start:stop indexing syntax. Note that the elements of a slice are evenly spaced over the start-stop range.

print(t1[1:4])

tf.Tensor([1 2 3], shape=(3,), dtype=int32)

print(t1[-3:])

tf.Tensor([5 6 7], shape=(3,), dtype=int32)
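To make the relationship between the two notations concrete, here is a small sketch comparing `tf.slice` with Python-style indexing on the same tensor. It also shows two details not used above: a `size` of -1, which takes all remaining elements along that dimension, and an optional step in the Python syntax.

```python
import tensorflow as tf

t1 = tf.constant([0, 1, 2, 3, 4, 5, 6, 7])

# begin/size in tf.slice corresponds to start:start+size in Python syntax.
a = tf.slice(t1, begin=[1], size=[3])
b = t1[1:1 + 3]

# A size of -1 takes every remaining element along that dimension.
c = tf.slice(t1, begin=[5], size=[-1])
d = t1[5:]

# A third slice component sets the step between selected elements.
e = t1[1:7:2]

print(a, b)
print(c, d)
print(e)
```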

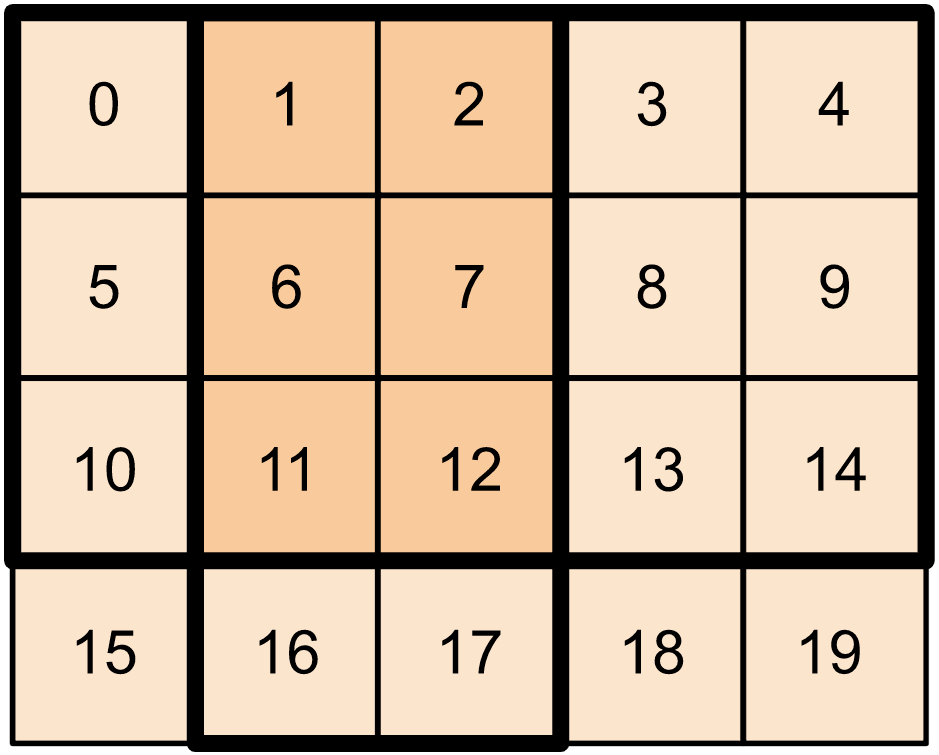

For 2-dimensional tensors, you can use something like:

t2 = tf.constant([[0, 1, 2, 3, 4],

[5, 6, 7, 8, 9],

[10, 11, 12, 13, 14],

[15, 16, 17, 18, 19]])

print(t2[:-1, 1:3])

tf.Tensor( [[ 1 2] [ 6 7] [11 12]], shape=(3, 2), dtype=int32)

You can use tf.slice on higher dimensional tensors as well.

t3 = tf.constant([[[1, 3, 5, 7],

[9, 11, 13, 15]],

[[17, 19, 21, 23],

[25, 27, 29, 31]]

])

print(tf.slice(t3,

begin=[1, 1, 0],

size=[1, 1, 2]))

tf.Tensor([[[25 27]]], shape=(1, 1, 2), dtype=int32)
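As a sketch of how this compares with Python-style indexing: range indexing keeps every dimension, just like `tf.slice`, whereas integer indexing drops the indexed dimensions. The variable names below are illustrative only.

```python
import tensorflow as tf

t3 = tf.constant([[[1, 3, 5, 7],
                   [9, 11, 13, 15]],
                  [[17, 19, 21, 23],
                   [25, 27, 29, 31]]])

# tf.slice always preserves the rank of the input.
sliced = tf.slice(t3, begin=[1, 1, 0], size=[1, 1, 2])

# Range indexing selects the same elements and also keeps all three axes.
ranged = t3[1:2, 1:2, 0:2]

# Integer indexing selects the same elements but drops the first two axes.
indexed = t3[1, 1, :2]

print(sliced.shape, ranged.shape, indexed.shape)
```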

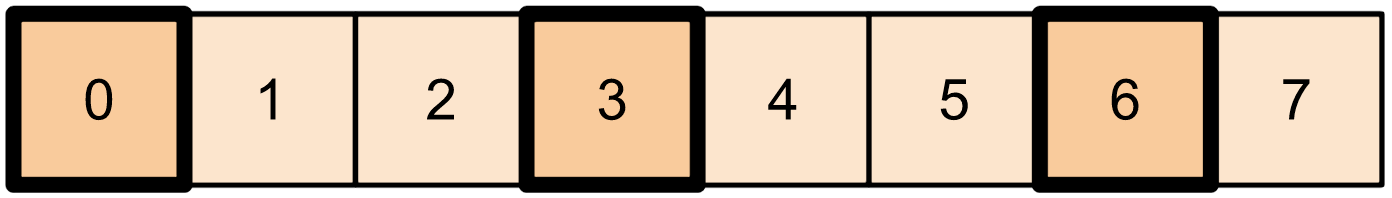

You can also use tf.strided_slice to extract slices of tensors by 'striding' over the tensor dimensions.
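A short sketch of `tf.strided_slice`, reusing the values of `t1` and `t2` from the examples above. The `end` index is exclusive and `strides` sets the step along each dimension, so the calls below should match the equivalent Python-style slices.

```python
import tensorflow as tf

t1 = tf.constant([0, 1, 2, 3, 4, 5, 6, 7])
t2 = tf.constant([[0, 1, 2, 3, 4],
                  [5, 6, 7, 8, 9],
                  [10, 11, 12, 13, 14],
                  [15, 16, 17, 18, 19]])

# Every second element from index 1 up to (but not including) index 7.
s1 = tf.strided_slice(t1, begin=[1], end=[7], strides=[2])

# Every second row and every second column of the matrix.
s2 = tf.strided_slice(t2, begin=[0, 0], end=[4, 5], strides=[2, 2])

print(s1)  # same elements as t1[1:7:2]
print(s2)  # same elements as t2[0:4:2, 0:5:2]
```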

Use tf.gather to extract specific indices from a single axis of a tensor.

print(tf.gather(t1,

indices=[0, 3, 6]))

# This is similar to doing

t1[::3]

tf.Tensor([0 3 6], shape=(3,), dtype=int32)

<tf.Tensor: shape=(3,), dtype=int32, numpy=array([0, 3, 6], dtype=int32)>

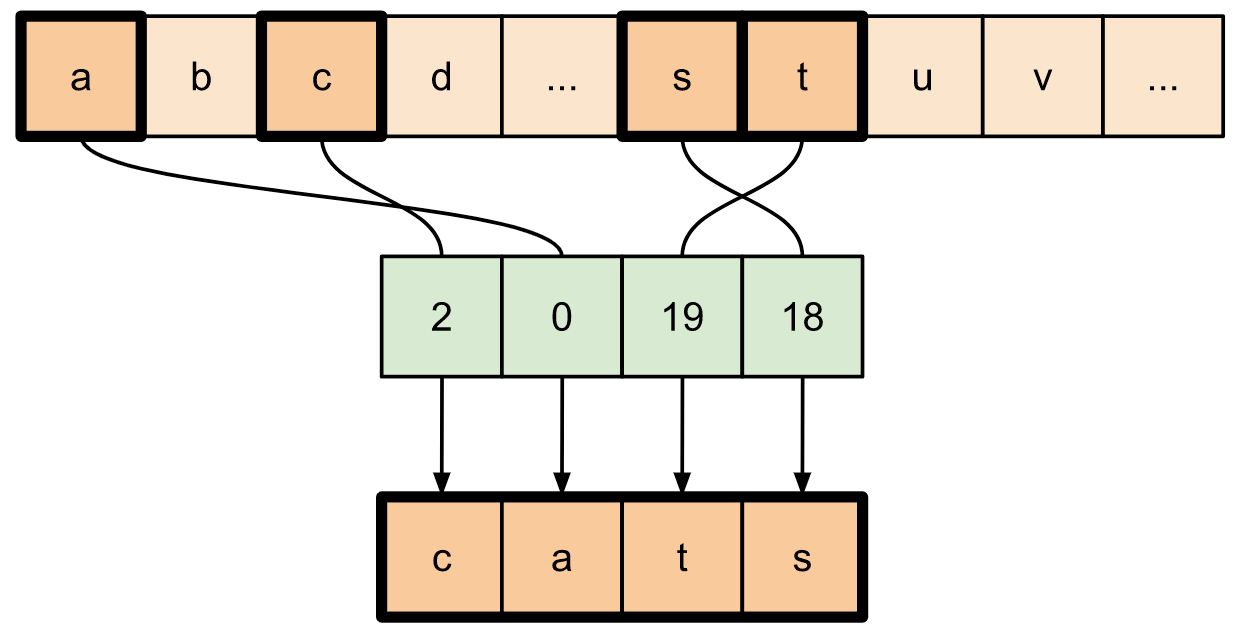

tf.gather does not require indices to be evenly spaced.

alphabet = tf.constant(list('abcdefghijklmnopqrstuvwxyz'))

print(tf.gather(alphabet,

indices=[2, 0, 19, 18]))

tf.Tensor([b'c' b'a' b't' b's'], shape=(4,), dtype=string)

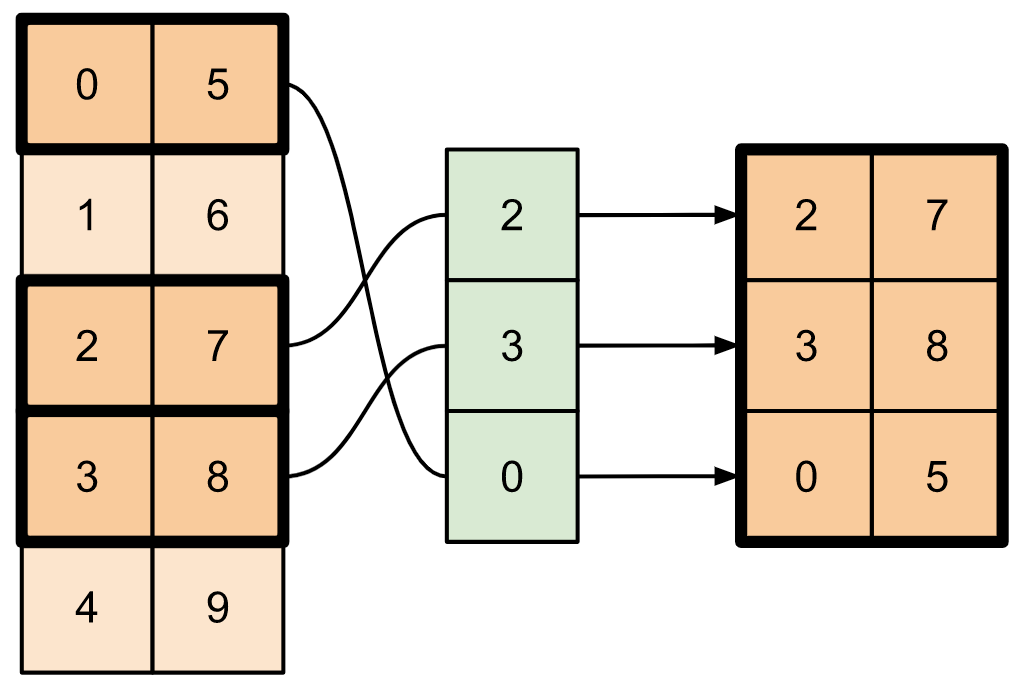
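The examples above gather along the default axis 0. As a sketch of the "single axis" behavior on a matrix, the `axis` argument of `tf.gather` selects which axis the indices refer to. The tensor reuses the values of `t2` defined earlier.

```python
import tensorflow as tf

t2 = tf.constant([[0, 1, 2, 3, 4],
                  [5, 6, 7, 8, 9],
                  [10, 11, 12, 13, 14],
                  [15, 16, 17, 18, 19]])

# Default axis=0: pick whole rows, in the order given.
rows = tf.gather(t2, indices=[3, 0])

# axis=1: pick whole columns instead.
cols = tf.gather(t2, indices=[0, 3], axis=1)

print(rows)
print(cols)
```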

To extract slices from multiple axes of a tensor, use tf.gather_nd. This is useful when you want to gather the elements of a matrix as opposed to just its rows or columns.

t4 = tf.constant([[0, 5],

[1, 6],

[2, 7],

[3, 8],

[4, 9]])

print(tf.gather_nd(t4,

indices=[[2], [3], [0]]))

tf.Tensor( [[2 7] [3 8] [0 5]], shape=(3, 2), dtype=int32)
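The call above uses one-element index vectors, so it returns whole rows. To gather individual elements of the matrix, give each index vector one entry per axis. A minimal sketch with the same values as `t4`:

```python
import tensorflow as tf

t4 = tf.constant([[0, 5],
                  [1, 6],
                  [2, 7],
                  [3, 8],
                  [4, 9]])

# Each index vector is a full (row, column) pair, so each one selects
# a single element rather than a row.
elements = tf.gather_nd(t4, indices=[[2, 1], [3, 0], [0, 1]])

print(elements)
```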

t5 = np.reshape(np.arange(18), [2, 3, 3])

print(tf.gather_nd(t5,

indices=[[0, 0, 0], [1, 2, 1]]))

tf.Tensor([ 0 16], shape=(2,), dtype=int64)

# Return a list of two matrices

print(tf.gather_nd(t5,

indices=[[[0, 0], [0, 2]], [[1, 0], [1, 2]]]))

tf.Tensor( [[[ 0 1 2] [ 6 7 8]] [[ 9 10 11] [15 16 17]]], shape=(2, 2, 3), dtype=int64)

# Return one matrix

print(tf.gather_nd(t5,

indices=[[0, 0], [0, 2], [1, 0], [1, 2]]))

tf.Tensor( [[ 0 1 2] [ 6 7 8] [ 9 10 11] [15 16 17]], shape=(4, 3), dtype=int64)
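The output shapes in the three `t5` examples follow one rule: the result has shape `indices.shape[:-1] + params.shape[indices.shape[-1]:]`. The sketch below checks that rule against `tf.gather_nd`; the helper `expected_gather_nd_shape` is hypothetical and written only for this illustration.

```python
import numpy as np
import tensorflow as tf

t5 = np.reshape(np.arange(18), [2, 3, 3])

def expected_gather_nd_shape(params_shape, indices_shape):
    """Hypothetical helper: predict the output shape of tf.gather_nd."""
    depth = indices_shape[-1]  # number of leading axes each index vector addresses
    return tuple(indices_shape[:-1]) + tuple(params_shape[depth:])

examples = [
    [[0, 0, 0], [1, 2, 1]],                    # full indices -> scalars
    [[[0, 0], [0, 2]], [[1, 0], [1, 2]]],      # two groups of row lookups
    [[0, 0], [0, 2], [1, 0], [1, 2]],          # flat list of row lookups
]

shapes = []
for indices in examples:
    actual = tuple(tf.gather_nd(t5, indices=indices).shape.as_list())
    predicted = expected_gather_nd_shape(t5.shape, np.shape(indices))
    shapes.append((actual, predicted))
    print(actual, predicted)
```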

Insert data into tensors

Use tf.scatter_nd to insert data at specific slices/indices of a tensor. Note that the tensor into which you insert values is zero-initialized.

# t6 holds the shape of the output tensor: a 1-D tensor with 10 elements

t6 = tf.constant([10])

indices = tf.constant([[1], [3], [5], [7], [9]])

data = tf.constant([2, 4, 6, 8, 10])

print(tf.scatter_nd(indices=indices,

updates=data,

shape=t6))

tf.Tensor([ 0 2 0 4 0 6 0 8 0 10], shape=(10,), dtype=int32)

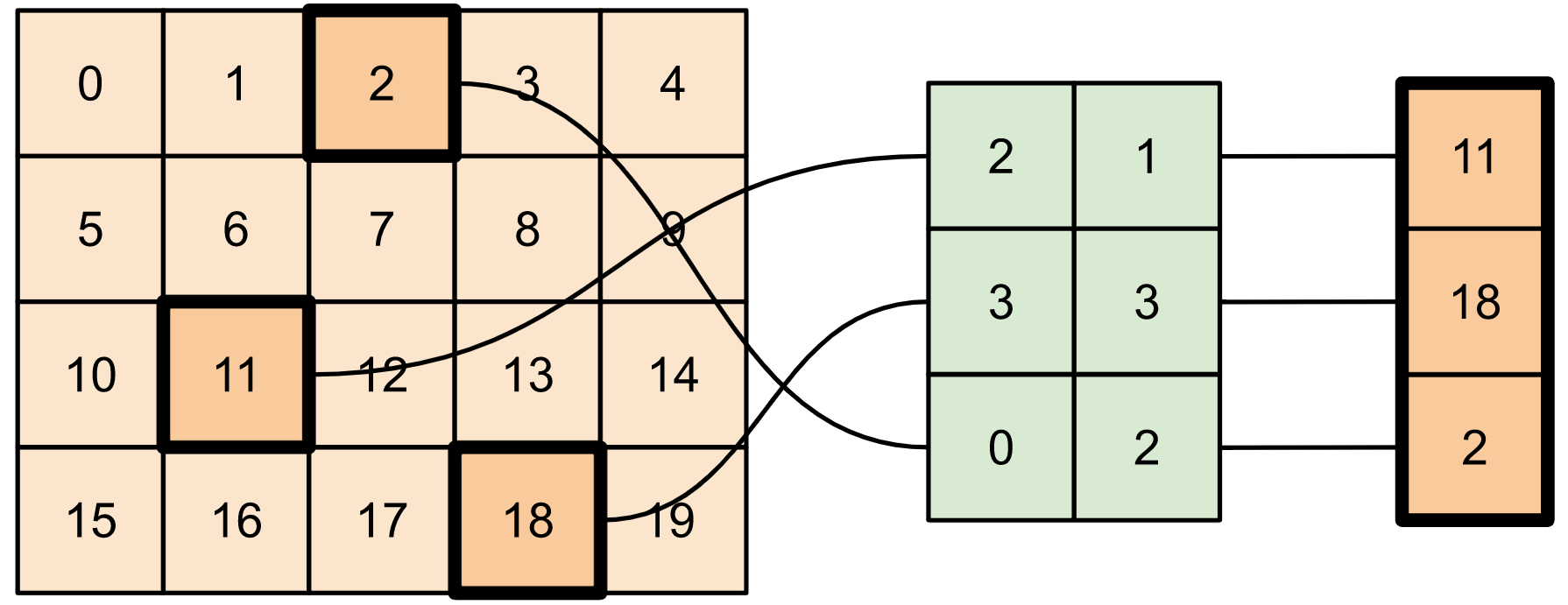
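One behavior worth knowing about, shown here as a small sketch: when the same index appears more than once, `tf.scatter_nd` accumulates (sums) the corresponding updates into the zero-initialized output rather than keeping only one of them. The values are made up for illustration.

```python
import tensorflow as tf

# Index 1 appears twice, so its two updates are summed.
result = tf.scatter_nd(indices=[[1], [1], [3]],
                       updates=[5, 7, 2],
                       shape=[5])

print(result)
```

Positions that receive no update stay at zero.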

Methods like tf.scatter_nd, which write into a zero-initialized tensor, are similar to sparse tensor initializers. You can use tf.gather_nd and tf.scatter_nd to mimic the behavior of sparse tensor ops.

Consider an example where you construct a sparse tensor using these two methods in conjunction.

# Gather values from one tensor by specifying indices

new_indices = tf.constant([[0, 2], [2, 1], [3, 3]])

t7 = tf.gather_nd(t2, indices=new_indices)

# Add these values into a new tensor

t8 = tf.scatter_nd(indices=new_indices, updates=t7, shape=tf.constant([4, 5]))

print(t8)

tf.Tensor( [[ 0 0 2 0 0] [ 0 0 0 0 0] [ 0 11 0 0 0] [ 0 0 0 18 0]], shape=(4, 5), dtype=int32)

This is similar to:

t9 = tf.SparseTensor(indices=[[0, 2], [2, 1], [3, 3]],

values=[2, 11, 18],

dense_shape=[4, 5])

print(t9)

SparseTensor(indices=tf.Tensor( [[0 2] [2 1] [3 3]], shape=(3, 2), dtype=int64), values=tf.Tensor([ 2 11 18], shape=(3,), dtype=int32), dense_shape=tf.Tensor([4 5], shape=(2,), dtype=int64))

# Convert the sparse tensor into a dense tensor

t10 = tf.sparse.to_dense(t9)

print(t10)

tf.Tensor( [[ 0 0 2 0 0] [ 0 0 0 0 0] [ 0 11 0 0 0] [ 0 0 0 18 0]], shape=(4, 5), dtype=int32)
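As a sketch, the equivalence of the two routes can also be checked programmatically instead of by comparing printed output. The variable names are illustrative; the tensor reuses the values of `t2`, and the indices are cast to int64 because `tf.SparseTensor` expects int64 indices.

```python
import tensorflow as tf

t2 = tf.constant([[0, 1, 2, 3, 4],
                  [5, 6, 7, 8, 9],
                  [10, 11, 12, 13, 14],
                  [15, 16, 17, 18, 19]])
new_indices = tf.constant([[0, 2], [2, 1], [3, 3]])

# Route 1: gather the values, then scatter them into a zero tensor.
values = tf.gather_nd(t2, indices=new_indices)
dense_from_scatter = tf.scatter_nd(new_indices, values, shape=[4, 5])

# Route 2: build a sparse tensor from the same indices and values.
sparse = tf.SparseTensor(indices=tf.cast(new_indices, tf.int64),
                         values=values,
                         dense_shape=[4, 5])
dense_from_sparse = tf.sparse.to_dense(sparse)

same = tf.reduce_all(tf.equal(dense_from_scatter, dense_from_sparse))
print(same)
```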

To insert data into a tensor with pre-existing values, use tf.tensor_scatter_nd_add, which adds the updates to the values already stored at the given indices.

t11 = tf.constant([[2, 7, 0],

[9, 0, 1],

[0, 3, 8]])

# Convert the tensor into a magic square by inserting numbers at appropriate indices

t12 = tf.tensor_scatter_nd_add(t11,

indices=[[0, 2], [1, 1], [2, 0]],

updates=[6, 5, 4])

print(t12)

tf.Tensor( [[2 7 6] [9 5 1] [4 3 8]], shape=(3, 3), dtype=int32)
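The magic-square example works as an insertion only because the targeted positions hold zeros. The sketch below contrasts `tf.tensor_scatter_nd_add` with `tf.tensor_scatter_nd_update` (a related op not covered above, which overwrites instead of adding) on a position that already holds a non-zero value. The indices and updates are made up for illustration.

```python
import tensorflow as tf

t11 = tf.constant([[2, 7, 0],
                   [9, 0, 1],
                   [0, 3, 8]])

indices = [[0, 0], [1, 1]]  # [0, 0] already holds 2; [1, 1] holds 0
updates = [5, 5]

# Adds to whatever is already stored: 2 + 5 = 7 and 0 + 5 = 5.
added = tf.tensor_scatter_nd_add(t11, indices, updates)

# Overwrites the stored values: both positions become 5.
replaced = tf.tensor_scatter_nd_update(t11, indices, updates)

print(added)
print(replaced)
```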

Similarly, use tf.tensor_scatter_nd_sub to subtract values from a tensor with pre-existing values.

# Convert the tensor into an identity matrix

t13 = tf.tensor_scatter_nd_sub(t11,

indices=[[0, 0], [0, 1], [1, 0], [1, 1], [1, 2], [2, 1], [2, 2]],

updates=[1, 7, 9, -1, 1, 3, 7])

print(t13)

tf.Tensor( [[1 0 0] [0 1 0] [0 0 1]], shape=(3, 3), dtype=int32)

Use tf.tensor_scatter_nd_min to take the element-wise minimum of the values at the given indices and the corresponding updates.

t14 = tf.constant([[-2, -7, 0],

[-9, 0, 1],

[0, -3, -8]])

t15 = tf.tensor_scatter_nd_min(t14,

indices=[[0, 2], [1, 1], [2, 0]],

updates=[-6, -5, -4])

print(t15)

tf.Tensor( [[-2 -7 -6] [-9 -5 1] [-4 -3 -8]], shape=(3, 3), dtype=int32)

Similarly, use tf.tensor_scatter_nd_max to take the element-wise maximum of the values at the given indices and the corresponding updates.

t16 = tf.tensor_scatter_nd_max(t14,

indices=[[0, 2], [1, 1], [2, 0]],

updates=[6, 5, 4])

print(t16)

tf.Tensor( [[-2 -7 6] [-9 5 1] [ 4 -3 -8]], shape=(3, 3), dtype=int32)
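In the two examples above, every update happens to win the comparison. As a sketch of the general behavior, the same updates applied with both ops show that an existing value is kept whenever it is already the smaller (for min) or the larger (for max) of the pair. The indices and updates are made up for illustration.

```python
import tensorflow as tf

t14 = tf.constant([[-2, -7, 0],
                   [-9, 0, 1],
                   [0, -3, -8]])

indices = [[0, 0], [0, 2]]  # existing values: -2 and 0
updates = [-5, 5]

# min(-2, -5) = -5 replaces the value; min(0, 5) = 0 keeps it.
lowest = tf.tensor_scatter_nd_min(t14, indices, updates)

# max(-2, -5) = -2 keeps the value; max(0, 5) = 5 replaces it.
highest = tf.tensor_scatter_nd_max(t14, indices, updates)

print(lowest)
print(highest)
```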

Further reading and resources

In this guide, you learned how to use the tensor slicing ops available with TensorFlow to exert finer control over the elements in your tensors.

Check out the slicing ops available with TensorFlow NumPy, such as tf.experimental.numpy.take_along_axis and tf.experimental.numpy.take.

Also check out the Tensor guide and the Variable guide.