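Caution: In addition to installing Python packages with pip, this notebook uses sudo apt install to install a system package: unzip.

This Colab demonstrates how to use TensorFlow Hub for text classification in non-English/local languages. Here we choose Bangla as the local language and use pretrained word embeddings to solve a multiclass classification task: sorting Bangla news articles into 5 categories. The pretrained Bangla embeddings come from fastText, a library created by Facebook that publishes pretrained word vectors for 157 languages.

We will use TF-Hub's pretrained embedding exporter to first convert the word embeddings into a text embedding module, and then use that module to train a classifier with tf.keras, TensorFlow's high-level, user-friendly API for building deep learning models. Although we use fastText embeddings here, you can export any other embeddings pretrained on other tasks and get results quickly with TensorFlow Hub.

Setup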

# https://github.com/pypa/setuptools/issues/1694#issuecomment-466010982
pip install gdown --no-use-pep517

sudo apt-get install -y unzip

Reading package lists...
Building dependency tree...
Reading state information...
unzip is already the newest version (6.0-25ubuntu1.1).
The following packages were automatically installed and are no longer required:
  libatasmart4 libblockdev-fs2 libblockdev-loop2 libblockdev-part-err2 libblockdev-part2 libblockdev-swap2 libblockdev-utils2 libblockdev2 libparted-fs-resize0 libxmlb2
Use 'sudo apt autoremove' to remove them.
0 upgraded, 0 newly installed, 0 to remove and 100 not upgraded.

import os

import tensorflow as tf

import tensorflow_hub as hub

import gdown

import numpy as np

from sklearn.metrics import classification_report

import matplotlib.pyplot as plt

import seaborn as sns


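Dataset

We will use BARD (Bangla Article Dataset), which contains about 376,226 articles collected from different Bangla news portals and labelled with 5 categories: economy, state, international, sports, and entertainment. We download the file from Google Drive; the bit.ly/BARD_DATASET link given in the dataset's GitHub repository points to it.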

gdown.download(

url='https://drive.google.com/uc?id=1Ag0jd21oRwJhVFIBohmX_ogeojVtapLy',

output='bard.zip',

quiet=True

)

'bard.zip'

unzip -qo bard.zip

Export pretrained word vectors to TF-Hub module

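TF-Hub provides some useful scripts for converting word embeddings to TF-Hub text embedding modules (see the examples/text_embeddings_v2 directory of the TF-Hub repository). To make a module for Bangla or any other language, we simply download the word embedding .txt or .vec file to the same directory as export_v2.py and run the script.

The exporter reads the embedding vectors and exports them to a TensorFlow SavedModel. A SavedModel contains a complete TensorFlow program, including the weights and the graph. TF-Hub can load the SavedModel as a module, which we will use to build the text classification model. Since we are using tf.keras to build the model, we will use hub.KerasLayer, which wraps a TF-Hub module so that it can be used as a Keras layer.

First, we get the word embeddings from fastText and the embedding exporter from the TF-Hub repo.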

curl -O https://dl.fbaipublicfiles.com/fasttext/vectors-crawl/cc.bn.300.vec.gz
curl -O https://raw.githubusercontent.com/tensorflow/hub/master/examples/text_embeddings_v2/export_v2.py
gunzip -qf cc.bn.300.vec.gz --k


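Next, we run the exporter script on our embedding file. Since fastText embeddings have a header line and are pretty large (around 3.3 GB for Bangla after converting to a module), we ignore the first line and export only the first 100,000 tokens to the text embedding module.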

python export_v2.py --embedding_file=cc.bn.300.vec --export_path=text_module --num_lines_to_ignore=1 --num_lines_to_use=100000

INFO:tensorflow:Assets written to: text_module/assets
I1107 18:41:50.590195 140024288720704 builder_impl.py:801] Assets written to: text_module/assets
I1107 18:41:50.593667 140024288720704 fingerprinting_utils.py:49] Writing fingerprint to text_module/fingerprint.pb
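As a rough illustration of what the two line-count flags select, the sketch below parses fastText's text format, in which the first line is a "vocabulary_size dimension" header and every other line is a token followed by its vector components. This is plain Python written for this explanation, not the code of export_v2.py; the helper name read_vec_lines and the tiny sample file are made up, and only the parameter names are borrowed from the exporter's flags.

```python
import io


def read_vec_lines(lines, num_lines_to_ignore=1, num_lines_to_use=None):
    """Parse fastText-style text vectors into (vocabulary, vectors).

    Hypothetical helper for illustration only; the logic is an assumption
    about what the flags mean, not the exporter's implementation.
    """
    vocabulary, vectors = [], []
    for index, line in enumerate(lines):
        if index < num_lines_to_ignore:
            continue  # skip the "count dim" header line
        if num_lines_to_use is not None and len(vocabulary) >= num_lines_to_use:
            break  # keep only the first N tokens
        token, *values = line.rstrip().split(" ")
        vocabulary.append(token)
        vectors.append([float(value) for value in values])
    return vocabulary, vectors


# Made-up file with 3 tokens of dimension 2; keep only the first 2 tokens.
sample = io.StringIO("3 2\nবাস 0.1 0.2\nট্রেন 0.3 0.4\nট্রাক 0.5 0.6\n")
vocabulary, vectors = read_vec_lines(sample, num_lines_to_ignore=1, num_lines_to_use=2)
print(vocabulary)
print(vectors)
```

Because fastText files list tokens roughly by frequency, truncating to the first N lines keeps the most common words, which is why a 100,000-token cut still covers most of the text.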

module_path = "text_module"

embedding_layer = hub.KerasLayer(module_path, trainable=False)

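The text embedding module takes a batch of sentences in a 1D tensor of strings as input and outputs embedding vectors of shape (batch_size, embedding_dim), one per sentence. It preprocesses the input by splitting on spaces. Word embeddings are combined into sentence embeddings with the sqrtn combiner (see the tf.nn.embedding_lookup_sparse documentation). For demonstration, we pass a list of Bangla words as input and get the corresponding embedding vectors.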

embedding_layer(['বাস', 'বসবাস', 'ট্রেন', 'যাত্রী', 'ট্রাক'])

<tf.Tensor: shape=(5, 300), dtype=float64, numpy=

array([[ 0.0462, -0.0355, 0.0129, ..., 0.0025, -0.0966, 0.0216],

[-0.0631, -0.0051, 0.085 , ..., 0.0249, -0.0149, 0.0203],

[ 0.1371, -0.069 , -0.1176, ..., 0.029 , 0.0508, -0.026 ],

[ 0.0532, -0.0465, -0.0504, ..., 0.02 , -0.0023, 0.0011],

[ 0.0908, -0.0404, -0.0536, ..., -0.0275, 0.0528, 0.0253]])>
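To make the sqrtn combiner concrete, here is a minimal pure-Python sketch. With unit weights, sqrtn divides the sum of the word vectors by the square root of the number of tokens. The function name, the toy two-dimensional vectors, and the choice to treat unknown tokens as zero vectors are all assumptions made for this example; the exported module may handle out-of-vocabulary tokens differently.

```python
import math


def sqrtn_sentence_embedding(sentence, word_vectors, dim):
    """Combine word vectors as sum / sqrt(number of tokens).

    Assumption for this sketch: unknown tokens contribute a zero vector.
    """
    tokens = sentence.split()  # whitespace tokenisation, as described above
    if not tokens:
        return [0.0] * dim
    total = [0.0] * dim
    for token in tokens:
        vector = word_vectors.get(token, [0.0] * dim)
        total = [t + v for t, v in zip(total, vector)]
    scale = math.sqrt(len(tokens))
    return [t / scale for t in total]


# Toy 2-dimensional vectors (made-up values, not real fastText embeddings).
bus, train = "বাস", "ট্রেন"
toy_vectors = {bus: [3.0, 0.0], train: [0.0, 4.0]}

single = sqrtn_sentence_embedding(bus, toy_vectors, dim=2)
pair = sqrtn_sentence_embedding(f"{bus} {train}", toy_vectors, dim=2)
print(single)
print(pair)
```

For a one-word input the scale factor is 1, so the sentence embedding is just that word's vector, which is the situation in the demonstration above where every input is a single word.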

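Convert to TensorFlow Dataset

Since the dataset is really large, we do not load all of it into memory. Instead, we use TensorFlow Dataset functions to read the articles and yield samples in batches at run time. The dataset is also very imbalanced, so we shuffle it before building the pipeline.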

dir_names = ['economy', 'sports', 'entertainment', 'state', 'international']

file_paths = []

labels = []

for i, dir in enumerate(dir_names):
  # Each class directory holds one text file per article.
  file_names = ["/".join([dir, name]) for name in os.listdir(dir)]
  file_paths += file_names
  labels += [i] * len(file_names)

np.random.seed(42)

permutation = np.random.permutation(len(file_paths))

file_paths = np.array(file_paths)[permutation]

labels = np.array(labels)[permutation]

After shuffling, we can look at the distribution of labels in the training and validation samples.

train_frac = 0.8

train_size = int(len(file_paths) * train_frac)

# plot training vs validation distribution

plt.subplot(1, 2, 1)

plt.hist(labels[0:train_size])

plt.title("Train labels")

plt.subplot(1, 2, 2)

plt.hist(labels[train_size:])

plt.title("Validation labels")

plt.tight_layout()
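The histograms give a visual check; the same comparison can be made numerically by computing the share of each class on both sides of the split. The sketch below does this with the standard library only. The toy label list and the helper name class_fractions are made up for illustration; in the notebook the inputs would be labels[:train_size] and labels[train_size:].

```python
from collections import Counter


def class_fractions(label_list, num_classes):
    """Return the fraction of samples belonging to each class index."""
    total = len(label_list)
    if total == 0:
        return [0.0] * num_classes
    counts = Counter(label_list)
    return [counts.get(class_index, 0) / total for class_index in range(num_classes)]


# Toy, deliberately imbalanced labels (class 3 dominates).
toy_labels = [3, 3, 0, 3, 1, 3, 2, 3, 4, 3]
toy_train_size = int(len(toy_labels) * 0.8)

train_fractions = class_fractions(toy_labels[:toy_train_size], num_classes=5)
validation_fractions = class_fractions(toy_labels[toy_train_size:], num_classes=5)
print(train_fractions)
print(validation_fractions)
```

With only ten toy samples the two splits differ noticeably; with hundreds of thousands of shuffled articles the per-class fractions of the two splits should be close to each other.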

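To build the datasets, we first write a load_file function that reads the article at a given path and returns it together with its label. We slice file_paths and labels at train_size to get an 80-20 split into training and validation sets, wrap each part with tf.data.Dataset.from_tensor_slices, and map load_file over it. Each sample is a tuple containing an article of tf.string data type and an integer class label. The training set is additionally shuffled with a buffer of 5,000 samples, and both sets are batched (256 samples per batch) and prefetched.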

def load_file(path, label):
  # Read the raw article text; the label is passed through unchanged.
  return tf.io.read_file(path), label

def make_datasets(train_size):
  batch_size = 256

  # Training split: read files lazily, shuffle, batch, and prefetch.
  train_files = file_paths[:train_size]
  train_labels = labels[:train_size]
  train_ds = tf.data.Dataset.from_tensor_slices((train_files, train_labels))
  train_ds = train_ds.map(load_file).shuffle(5000)
  train_ds = train_ds.batch(batch_size).prefetch(tf.data.AUTOTUNE)

  # Validation split: same pipeline without shuffling.
  test_files = file_paths[train_size:]
  test_labels = labels[train_size:]
  test_ds = tf.data.Dataset.from_tensor_slices((test_files, test_labels))
  test_ds = test_ds.map(load_file)
  test_ds = test_ds.batch(batch_size).prefetch(tf.data.AUTOTUNE)

  return train_ds, test_ds

train_data, validation_data = make_datasets(train_size)
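The point of the pipeline is that article text is read from disk only when a batch is needed. A plain-Python analogue of that idea is sketched below; it is not how tf.data is implemented, and the helper name iter_batches and the throwaway files are made up for this example.

```python
import os
import tempfile


def iter_batches(paths, label_list, batch_size):
    """Yield (texts, labels) batches, opening files only when a batch is requested."""
    for start in range(0, len(paths), batch_size):
        texts = []
        for path in paths[start:start + batch_size]:
            with open(path, encoding="utf-8") as handle:
                texts.append(handle.read())
        yield texts, list(label_list[start:start + batch_size])


# Demo with three temporary files standing in for articles.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_paths = []
    for index in range(3):
        path = os.path.join(tmp_dir, f"article_{index}.txt")
        with open(path, "w", encoding="utf-8") as handle:
            handle.write(f"article {index}")
        demo_paths.append(path)
    batches = list(iter_batches(demo_paths, [0, 1, 2], batch_size=2))

print(batches)
```

Only the paths and labels stay in memory for the whole run; the text of each article is held just long enough to form its batch. The tf.data version adds shuffling and prefetching on top of the same principle.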

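Model Training and Evaluation

Since we have already added a wrapper around our module so that it can be used like any other Keras layer, we can create a small Sequential model, which is a linear stack of layers. The text embedding module goes into that stack just like any other layer. We compile the model by specifying the loss and optimizer and train it for 5 epochs. The tf.keras API can take TensorFlow Datasets as input, so we can pass a Dataset instance to the fit method for model training; tf.data takes care of reading the samples, batching them, and feeding them to the model.

Model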

def create_model():
  model = tf.keras.Sequential([
    tf.keras.layers.Input(shape=[], dtype=tf.string),
    embedding_layer,
    tf.keras.layers.Dense(64, activation="relu"),
    tf.keras.layers.Dense(16, activation="relu"),
    tf.keras.layers.Dense(5),  # one logit per class, no softmax
  ])
  model.compile(loss=tf.losses.SparseCategoricalCrossentropy(from_logits=True),
      optimizer="adam", metrics=['accuracy'])
  return model

model = create_model()

# Create earlystopping callback

early_stopping_callback = tf.keras.callbacks.EarlyStopping(monitor='val_loss', min_delta=0, patience=3)
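The loss configured in create_model, SparseCategoricalCrossentropy(from_logits=True), takes an integer class label and the raw scores from the final Dense layer. For one sample it equals the negative log of the softmax probability assigned to the true class. The sketch below computes that quantity in plain Python for a single sample; it is an illustration of the formula with a made-up function name, not the Keras implementation.

```python
import math


def sparse_categorical_crossentropy_from_logits(logits, label):
    """Return -log(softmax(logits)[label]) using a numerically stable log-sum-exp."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[label]


# Five equal logits: every class gets probability 1/5.
uniform_loss = sparse_categorical_crossentropy_from_logits([0.0] * 5, label=2)
# A large logit on the true class drives the loss toward zero.
confident_loss = sparse_categorical_crossentropy_from_logits(
    [0.0, 0.0, 10.0, 0.0, 0.0], label=2)
print(uniform_loss)
print(confident_loss)
```

An untrained 5-class model that spreads probability evenly therefore starts near ln(5), about 1.61, which is a useful reference point when reading the loss values in a training log.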

Training

history = model.fit(train_data,

validation_data=validation_data,

epochs=5,

callbacks=[early_stopping_callback])

Epoch 1/5
1176/1176 [==============================] - 32s 26ms/step - loss: 0.2210 - accuracy: 0.9266 - val_loss: 0.1573 - val_accuracy: 0.9463
Epoch 2/5
1176/1176 [==============================] - 30s 26ms/step - loss: 0.1422 - accuracy: 0.9499 - val_loss: 0.1387 - val_accuracy: 0.9503
Epoch 3/5
1176/1176 [==============================] - 30s 25ms/step - loss: 0.1302 - accuracy: 0.9534 - val_loss: 0.1311 - val_accuracy: 0.9527
Epoch 4/5
1176/1176 [==============================] - 30s 25ms/step - loss: 0.1223 - accuracy: 0.9559 - val_loss: 0.1247 - val_accuracy: 0.9551
Epoch 5/5
1176/1176 [==============================] - 30s 25ms/step - loss: 0.1168 - accuracy: 0.9573 - val_loss: 0.1199 - val_accuracy: 0.9566
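The early-stopping callback configured earlier (monitor='val_loss', min_delta=0, patience=3) never fired in this run, because val_loss improved in every epoch. The following is a simplified sketch of the patience rule, written for this explanation rather than taken from Keras; the function name and the second, stalled loss sequence are made up.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the 0-based epoch at which training would stop, or None.

    Simplified rule: stop once `patience` consecutive epochs have failed to
    beat the best validation loss seen so far by more than `min_delta`.
    """
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# val_loss values from the training log above.
tutorial_val_losses = [0.1573, 0.1387, 0.1311, 0.1247, 0.1199]
# Made-up sequence that stalls after the second epoch.
stalled_val_losses = [0.50, 0.40, 0.41, 0.42, 0.43, 0.30]

tutorial_stop = early_stopping_epoch(tutorial_val_losses)
stalled_stop = early_stopping_epoch(stalled_val_losses)
print(tutorial_stop)
print(stalled_stop)
```

In the stalled sequence training would end before the last, better epoch is ever reached, which is the trade-off a small patience value makes.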

Evaluation

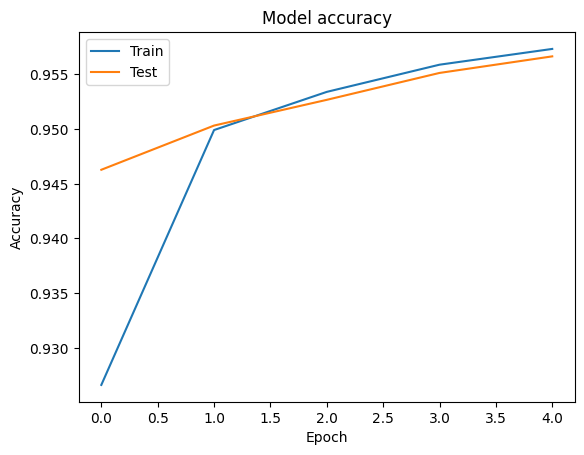

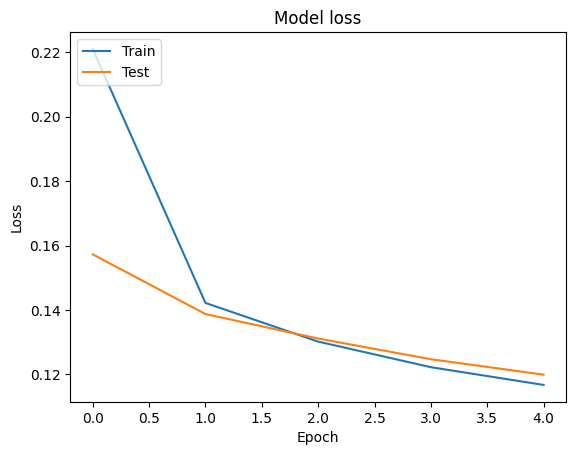

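We can visualize the accuracy and loss curves for the training and validation data using the tf.keras.callbacks.History object returned by the tf.keras.Model.fit method, which contains the loss and accuracy values for each epoch.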

# Plot training & validation accuracy values

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.title('Model accuracy')

plt.ylabel('Accuracy')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.show()

# Plot training & validation loss values

plt.plot(history.history['loss'])

plt.plot(history.history['val_loss'])

plt.title('Model loss')

plt.ylabel('Loss')

plt.xlabel('Epoch')

plt.legend(['Train', 'Test'], loc='upper left')

plt.show()

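Prediction

We can get predictions for the validation data and check the confusion matrix to see the model's performance on each of the 5 classes. Because the final Dense layer has no softmax, the tf.keras.Model.predict method returns an array of per-class scores (logits) rather than probabilities; np.argmax over the class axis converts them to class labels either way.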

y_pred = model.predict(validation_data)

294/294 [==============================] - 5s 18ms/step

y_pred = np.argmax(y_pred, axis=1)
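A confusion matrix counts, for every true class, how often each class was predicted. A minimal pure-Python sketch with toy labels follows; the function and variable names are chosen for this example.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for truth, guess in zip(y_true, y_pred):
        matrix[truth][guess] += 1
    return matrix


# Toy labels for 3 classes (made-up values).
toy_true = [0, 0, 1, 1, 2, 2, 2]
toy_pred = [0, 1, 1, 1, 2, 0, 2]
toy_matrix = confusion_matrix(toy_true, toy_pred, num_classes=3)
for row in toy_matrix:
    print(row)
```

Correct predictions sit on the diagonal. In the notebook itself, sklearn.metrics.confusion_matrix applied to the true validation labels and y_pred gives the same kind of table (rows are true classes), and sns.heatmap is one way to draw it.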

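# Note: y_pred holds predictions for file_paths[train_size:], while these
# samples are the first three entries of file_paths, so the pairing below is
# not aligned sample-for-sample.
samples = file_paths[0:3]
for i, sample in enumerate(samples):
  f = open(sample)
  text = f.read()
  print(text[0:100])
  print("True Class: ", sample.split("/")[0])
  print("Predicted Class: ", dir_names[y_pred[i]])
  f.close()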

রবীন্দ্রনাথ ছড়ার ছবি বইতে ‘মাধো’ নামের এক কিশোরের গল্প বলেছেন, যে বাড়ি পালিয়ে পাটকলে কাজ নেয়। পাটকল
True Class:  entertainment
Predicted Class:  state
সিটি করপোরেশন নির্বাচন শেষ হয়েছে প্রায় দুই বছর হলো। এই সময়ে রাজধানীর অনেক স্থানেই লেগেছে উন্নয়নের
True Class:  state
Predicted Class:  state
বিশ্বকাপ মানে যেন দক্ষিণ আফ্রিকার চিরস্থায়ী একটা দুঃখ। সেই দুঃখ কিছুটা হলেও ঘুচিয়ে দিয়েছিল প্রোট
True Class:  sports
Predicted Class:  state
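Predictions from model.predict(validation_data) follow the order of file_paths[train_size:], since the validation pipeline is not shuffled. A per-sample comparison therefore needs paths taken from that slice. The sketch below shows one way to pair them; the helper name and the toy paths, classes, and predictions are hypothetical stand-ins for the notebook's file_paths, dir_names, and y_pred.

```python
def pair_validation_predictions(all_paths, predictions, class_names, train_size, count=3):
    """Pair the first `count` validation paths with their predicted classes.

    Returns (path, true_class, predicted_class) tuples, where the true class
    is the directory name at the start of the path.
    """
    validation_paths = all_paths[train_size:]
    rows = []
    for path, predicted in zip(validation_paths[:count], predictions[:count]):
        true_class = path.split("/")[0]
        rows.append((path, true_class, class_names[predicted]))
    return rows


# Toy data: 2 training paths followed by 2 validation paths.
toy_classes = ["economy", "sports"]
toy_paths = ["economy/a.txt", "sports/b.txt", "sports/c.txt", "economy/d.txt"]
toy_predictions = [1, 0]  # predictions for the validation paths only
toy_rows = pair_validation_predictions(
    toy_paths, toy_predictions, toy_classes, train_size=2)
for row in toy_rows:
    print(row)
```

With the notebook's variables the equivalent call would pass file_paths, y_pred, dir_names, and train_size.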

Compare Performance

Now we can take the correct labels for the validation data from labels and compare them with our predictions to get a classification_report.

y_true = np.array(labels[train_size:])

print(classification_report(y_true, y_pred, target_names=dir_names))

               precision    recall  f1-score   support

      economy       0.81      0.78      0.80      3897
       sports       0.99      0.98      0.99     10204
entertainment       0.91      0.94      0.92      6256
        state       0.97      0.97      0.97     48512
international       0.93      0.92      0.93      6377

     accuracy                           0.96     75246
    macro avg       0.92      0.92      0.92     75246
 weighted avg       0.96      0.96      0.96     75246
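The two average rows differ because the classes are imbalanced: the macro average treats every class equally, while the weighted average weights each class by its support. The sketch below recomputes both for the f1-score column from the per-class rows of the report. The per-class inputs are the rounded values printed in the report, so the results are approximate; the variable and function names are chosen for this example.

```python
# (f1-score, support) per class, copied from the classification report.
report_rows = {
    "economy": (0.80, 3897),
    "sports": (0.99, 10204),
    "entertainment": (0.92, 6256),
    "state": (0.97, 48512),
    "international": (0.93, 6377),
}


def macro_average(rows):
    """Unweighted mean of the per-class scores."""
    scores = [score for score, _ in rows.values()]
    return sum(scores) / len(scores)


def weighted_average(rows):
    """Mean of the per-class scores weighted by class support."""
    total_support = sum(support for _, support in rows.values())
    return sum(score * support for score, support in rows.values()) / total_support


macro_f1 = macro_average(report_rows)
weighted_f1 = weighted_average(report_rows)
print(round(macro_f1, 2), round(weighted_f1, 2))
```

The weighted figure is pulled upward by the state class, which accounts for 48,512 of the 75,246 validation articles, while the weaker economy class lowers the macro figure more visibly.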

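We can also compare our model's performance with the published results from the original paper, which reported a precision of 0.96. The original authors described many preprocessing steps performed on the dataset, such as dropping punctuation and digits and removing the 25 most frequent stop words. As we can see in the classification_report, we also obtain 0.96 precision and accuracy after training for only 5 epochs, without any preprocessing!

In this example, when we created the Keras layer from our embedding module, we set the parameter trainable=False, which means the embedding weights are not updated during training. Try setting it to True to reach about 97% accuracy on this dataset after only 2 epochs.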