Visualizza su TensorFlow.org Visualizza su TensorFlow.org |  Esegui in Google Colab Esegui in Google Colab |  Visualizza la fonte su GitHub Visualizza la fonte su GitHub |  Scarica taccuino Scarica taccuino |

Questo notebook allena una sequenza per modello di sequenza (seq2seq) per lo spagnolo alla traduzione inglese sulla base di efficace Approcci alla Attenzione a base neurale traduzione automatica . Questo è un esempio avanzato che presuppone una certa conoscenza di:

- Da sequenza a sequenza di modelli

- Fondamenti di TensorFlow sotto lo strato keras:

- Lavorare direttamente con i tensori

- Scrivendo su misura

keras.Models ekeras.layers

Mentre questa architettura è un po 'antiquato, è ancora un progetto molto utile per il lavoro attraverso per ottenere una più profonda comprensione dei meccanismi di attenzione (prima di passare alla Transformers ).

Dopo l'allenamento il modello in questo notebook, si sarà in grado di inserire una frase spagnola, come ad esempio, e restituire la traduzione in inglese "¿todavia estan en casa?": "Sei ancora a casa"

Il modello risultante è esportabile come tf.saved_model , in modo che possa essere utilizzato in altri ambienti tensorflow.

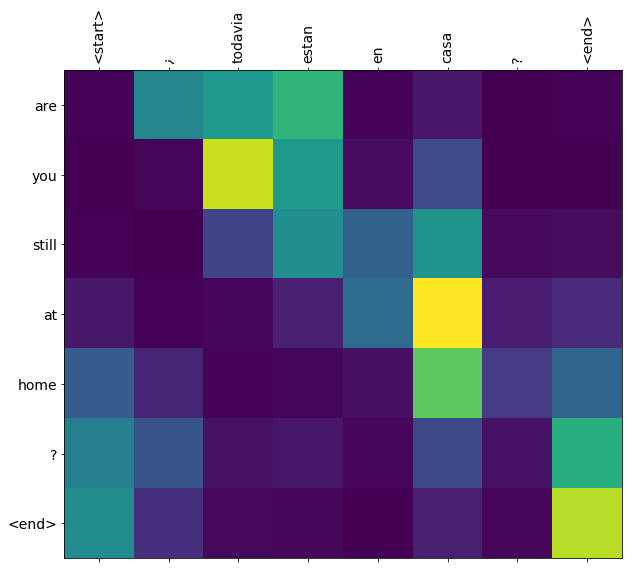

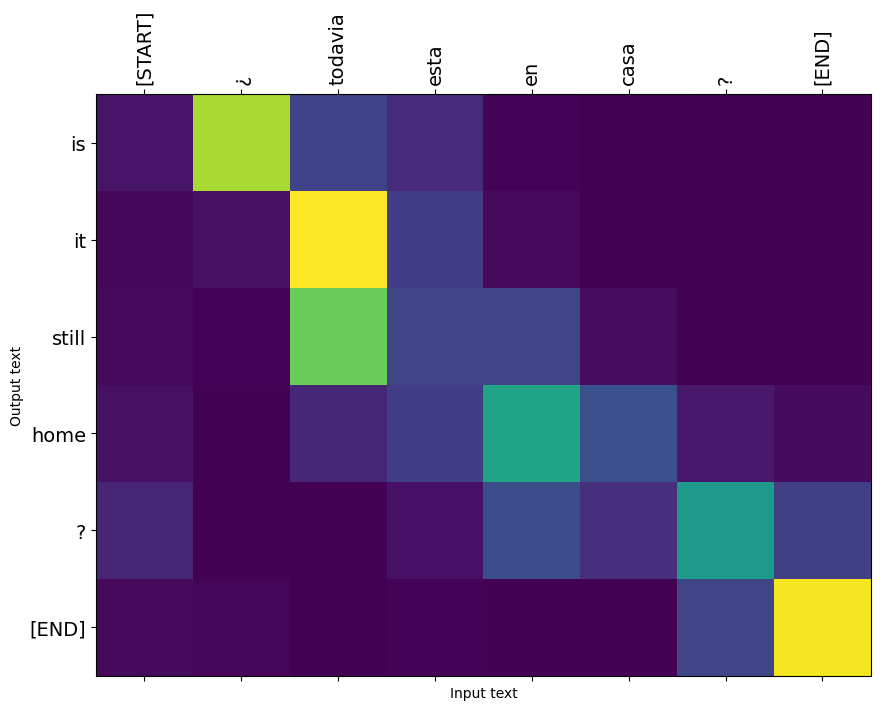

La qualità della traduzione è ragionevole per un esempio giocattolo, ma la trama dell'attenzione generata è forse più interessante. Questo mostra quali parti della frase di input hanno l'attenzione del modello durante la traduzione:

Impostare

pip install tensorflow_text

import numpy as np

import typing

from typing import Any, Tuple

import tensorflow as tf

import tensorflow_text as tf_text

import matplotlib.pyplot as plt

import matplotlib.ticker as ticker

Questo tutorial crea alcuni livelli da zero, usa questa variabile se vuoi passare dall'implementazione personalizzata a quella incorporata.

use_builtins = True

Questo tutorial utilizza molte API di basso livello in cui è facile sbagliare le forme. Questa classe viene utilizzata per controllare le forme durante l'esercitazione.

Controllo forma

class ShapeChecker():

def __init__(self):

# Keep a cache of every axis-name seen

self.shapes = {}

def __call__(self, tensor, names, broadcast=False):

if not tf.executing_eagerly():

return

if isinstance(names, str):

names = (names,)

shape = tf.shape(tensor)

rank = tf.rank(tensor)

if rank != len(names):

raise ValueError(f'Rank mismatch:\n'

f' found {rank}: {shape.numpy()}\n'

f' expected {len(names)}: {names}\n')

for i, name in enumerate(names):

if isinstance(name, int):

old_dim = name

else:

old_dim = self.shapes.get(name, None)

new_dim = shape[i]

if (broadcast and new_dim == 1):

continue

if old_dim is None:

# If the axis name is new, add its length to the cache.

self.shapes[name] = new_dim

continue

if new_dim != old_dim:

raise ValueError(f"Shape mismatch for dimension: '{name}'\n"

f" found: {new_dim}\n"

f" expected: {old_dim}\n")

I dati

Useremo un dataset lingua fornita da http://www.manythings.org/anki/ Questo set di dati contiene le coppie di traduzione lingua nel formato:

May I borrow this book? ¿Puedo tomar prestado este libro?

Hanno una varietà di lingue disponibili, ma utilizzeremo il set di dati inglese-spagnolo.

Scarica e prepara il set di dati

Per comodità, abbiamo ospitato una copia di questo set di dati su Google Cloud, ma puoi anche scaricare la tua copia. Dopo aver scaricato il set di dati, ecco i passaggi che eseguiremo per preparare i dati:

- Aggiungere un inizio e la fine token per ogni frase.

- Pulisci le frasi rimuovendo i caratteri speciali.

- Crea un indice di parole e inverti l'indice delle parole (mapping di dizionari da parola → id e id → parola).

- Riempi ogni frase fino alla lunghezza massima.

# Download the file

import pathlib

path_to_zip = tf.keras.utils.get_file(

'spa-eng.zip', origin='http://storage.googleapis.com/download.tensorflow.org/data/spa-eng.zip',

extract=True)

path_to_file = pathlib.Path(path_to_zip).parent/'spa-eng/spa.txt'

Downloading data from http://storage.googleapis.com/download.tensorflow.org/data/spa-eng.zip 2646016/2638744 [==============================] - 0s 0us/step 2654208/2638744 [==============================] - 0s 0us/step

def load_data(path):

text = path.read_text(encoding='utf-8')

lines = text.splitlines()

pairs = [line.split('\t') for line in lines]

inp = [inp for targ, inp in pairs]

targ = [targ for targ, inp in pairs]

return targ, inp

targ, inp = load_data(path_to_file)

print(inp[-1])

Si quieres sonar como un hablante nativo, debes estar dispuesto a practicar diciendo la misma frase una y otra vez de la misma manera en que un músico de banjo practica el mismo fraseo una y otra vez hasta que lo puedan tocar correctamente y en el tiempo esperado.

print(targ[-1])

If you want to sound like a native speaker, you must be willing to practice saying the same sentence over and over in the same way that banjo players practice the same phrase over and over until they can play it correctly and at the desired tempo.

Crea un set di dati tf.data

Da questi array di stringhe è possibile creare un tf.data.Dataset di stringhe che mescola e li lotti in modo efficiente:

BUFFER_SIZE = len(inp)

BATCH_SIZE = 64

dataset = tf.data.Dataset.from_tensor_slices((inp, targ)).shuffle(BUFFER_SIZE)

dataset = dataset.batch(BATCH_SIZE)

for example_input_batch, example_target_batch in dataset.take(1):

print(example_input_batch[:5])

print()

print(example_target_batch[:5])

break

tf.Tensor( [b'No s\xc3\xa9 lo que quiero.' b'\xc2\xbfDeber\xc3\xada repetirlo?' b'Tard\xc3\xa9 m\xc3\xa1s de 2 horas en traducir unas p\xc3\xa1ginas en ingl\xc3\xa9s.' b'A Tom comenz\xc3\xb3 a temerle a Mary.' b'Mi pasatiempo es la lectura.'], shape=(5,), dtype=string) tf.Tensor( [b"I don't know what I want." b'Should I repeat it?' b'It took me more than two hours to translate a few pages of English.' b'Tom became afraid of Mary.' b'My hobby is reading.'], shape=(5,), dtype=string)

Pre-elaborazione del testo

Uno degli obiettivi di questo tutorial è quello di costruire un modello che può essere esportato come tf.saved_model . Per effettuare tale modello esportato utile che dovrebbe assumere tf.string ingressi e ritorno tf.string uscite: tutte elaborazione testo avviene all'interno del modello.

Standardizzazione

Il modello si occupa di testo multilingue con un vocabolario limitato. Quindi sarà importante standardizzare il testo di input.

Il primo passo è la normalizzazione Unicode per dividere i caratteri accentati e sostituire i caratteri di compatibilità con i loro equivalenti ASCII.

Il tensorflow_text pacchetto contiene un'operazione unicode normalizzare:

example_text = tf.constant('¿Todavía está en casa?')

print(example_text.numpy())

print(tf_text.normalize_utf8(example_text, 'NFKD').numpy())

b'\xc2\xbfTodav\xc3\xada est\xc3\xa1 en casa?' b'\xc2\xbfTodavi\xcc\x81a esta\xcc\x81 en casa?'

La normalizzazione Unicode sarà il primo passo nella funzione di standardizzazione del testo:

def tf_lower_and_split_punct(text):

# Split accecented characters.

text = tf_text.normalize_utf8(text, 'NFKD')

text = tf.strings.lower(text)

# Keep space, a to z, and select punctuation.

text = tf.strings.regex_replace(text, '[^ a-z.?!,¿]', '')

# Add spaces around punctuation.

text = tf.strings.regex_replace(text, '[.?!,¿]', r' \0 ')

# Strip whitespace.

text = tf.strings.strip(text)

text = tf.strings.join(['[START]', text, '[END]'], separator=' ')

return text

print(example_text.numpy().decode())

print(tf_lower_and_split_punct(example_text).numpy().decode())

¿Todavía está en casa? [START] ¿ todavia esta en casa ? [END]

Vettorializzazione del testo

Questa funzione standardizzazione sarà avvolto in una tf.keras.layers.TextVectorization strato che gestirà l'estrazione vocabolario e conversione di testo di input a sequenze di gettoni.

max_vocab_size = 5000

input_text_processor = tf.keras.layers.TextVectorization(

standardize=tf_lower_and_split_punct,

max_tokens=max_vocab_size)

Il TextVectorization strato e molti altri strati di pre-elaborazione hanno un adapt metodo. Questo metodo legge un'epoca dei dati di addestramento, e funziona un po 'come Model.fix . Questo adapt metodo inizializza il livello in base ai dati. Qui determina il vocabolario:

input_text_processor.adapt(inp)

# Here are the first 10 words from the vocabulary:

input_text_processor.get_vocabulary()[:10]

['', '[UNK]', '[START]', '[END]', '.', 'que', 'de', 'el', 'a', 'no']

Questa è la spagnola TextVectorization livello, ora costruire e .adapt() quella inglese:

output_text_processor = tf.keras.layers.TextVectorization(

standardize=tf_lower_and_split_punct,

max_tokens=max_vocab_size)

output_text_processor.adapt(targ)

output_text_processor.get_vocabulary()[:10]

['', '[UNK]', '[START]', '[END]', '.', 'the', 'i', 'to', 'you', 'tom']

Ora questi livelli possono convertire un batch di stringhe in un batch di ID token:

example_tokens = input_text_processor(example_input_batch)

example_tokens[:3, :10]

<tf.Tensor: shape=(3, 10), dtype=int64, numpy=

array([[ 2, 9, 17, 22, 5, 48, 4, 3, 0, 0],

[ 2, 13, 177, 1, 12, 3, 0, 0, 0, 0],

[ 2, 120, 35, 6, 290, 14, 2134, 506, 2637, 14]])>

Il get_vocabulary metodo può essere utilizzato per convertire gli ID token torna al testo:

input_vocab = np.array(input_text_processor.get_vocabulary())

tokens = input_vocab[example_tokens[0].numpy()]

' '.join(tokens)

'[START] no se lo que quiero . [END] '

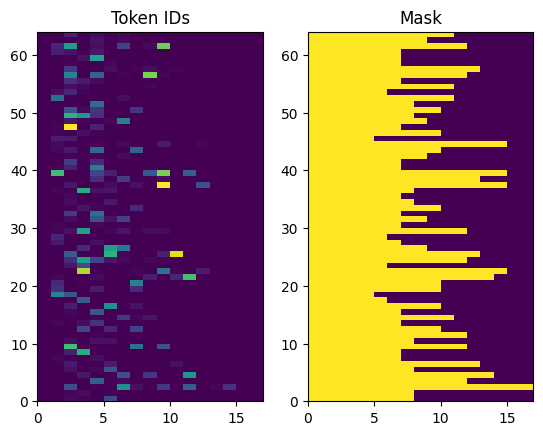

Gli ID token restituiti sono a zero. Questo può essere facilmente trasformato in una maschera:

plt.subplot(1, 2, 1)

plt.pcolormesh(example_tokens)

plt.title('Token IDs')

plt.subplot(1, 2, 2)

plt.pcolormesh(example_tokens != 0)

plt.title('Mask')

Text(0.5, 1.0, 'Mask')

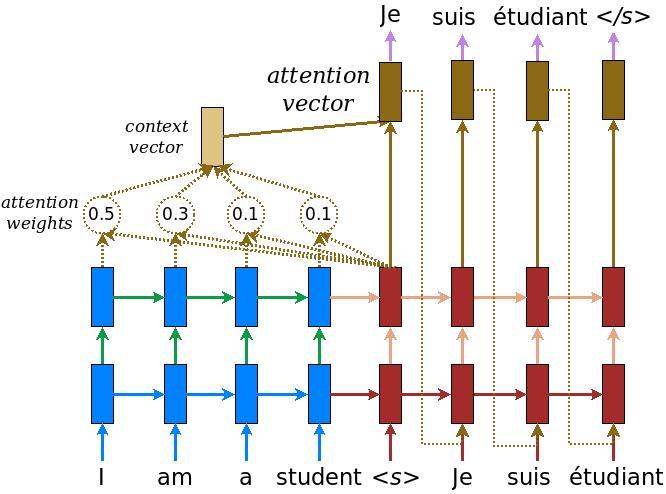

Il modello del codificatore/decodificatore

Il diagramma seguente mostra una panoramica del modello. Ad ogni passo l'uscita del decodificatore è combinata con una somma pesata sull'ingresso codificato, per prevedere la parola successiva. Il diagramma e formule sono da carta di Luong .

Prima di entrare in esso definire alcune costanti per il modello:

embedding_dim = 256

units = 1024

L'encoder

Inizia costruendo l'encoder, la parte blu del diagramma sopra.

Il codificatore:

- Prende un elenco di ID token (da

input_text_processor). - Guarda in alto un vettore di embedding per ogni token (Utilizzo di un

layers.Embedding). - Elabora le immersioni in una nuova sequenza (usando un

layers.GRU). - Ritorna:

- La sequenza elaborata. Questo sarà passato al capo dell'attenzione.

- Lo stato interno. Questo sarà usato per inizializzare il decoder

class Encoder(tf.keras.layers.Layer):

def __init__(self, input_vocab_size, embedding_dim, enc_units):

super(Encoder, self).__init__()

self.enc_units = enc_units

self.input_vocab_size = input_vocab_size

# The embedding layer converts tokens to vectors

self.embedding = tf.keras.layers.Embedding(self.input_vocab_size,

embedding_dim)

# The GRU RNN layer processes those vectors sequentially.

self.gru = tf.keras.layers.GRU(self.enc_units,

# Return the sequence and state

return_sequences=True,

return_state=True,

recurrent_initializer='glorot_uniform')

def call(self, tokens, state=None):

shape_checker = ShapeChecker()

shape_checker(tokens, ('batch', 's'))

# 2. The embedding layer looks up the embedding for each token.

vectors = self.embedding(tokens)

shape_checker(vectors, ('batch', 's', 'embed_dim'))

# 3. The GRU processes the embedding sequence.

# output shape: (batch, s, enc_units)

# state shape: (batch, enc_units)

output, state = self.gru(vectors, initial_state=state)

shape_checker(output, ('batch', 's', 'enc_units'))

shape_checker(state, ('batch', 'enc_units'))

# 4. Returns the new sequence and its state.

return output, state

Ecco come si combina finora:

# Convert the input text to tokens.

example_tokens = input_text_processor(example_input_batch)

# Encode the input sequence.

encoder = Encoder(input_text_processor.vocabulary_size(),

embedding_dim, units)

example_enc_output, example_enc_state = encoder(example_tokens)

print(f'Input batch, shape (batch): {example_input_batch.shape}')

print(f'Input batch tokens, shape (batch, s): {example_tokens.shape}')

print(f'Encoder output, shape (batch, s, units): {example_enc_output.shape}')

print(f'Encoder state, shape (batch, units): {example_enc_state.shape}')

Input batch, shape (batch): (64,) Input batch tokens, shape (batch, s): (64, 14) Encoder output, shape (batch, s, units): (64, 14, 1024) Encoder state, shape (batch, units): (64, 1024)

Il codificatore restituisce il suo stato interno in modo che il suo stato possa essere utilizzato per inizializzare il decodificatore.

È anche comune per un RNN restituire il suo stato in modo che possa elaborare una sequenza su più chiamate. Vedrai di più che costruisce il decoder.

La testa dell'attenzione

Il decodificatore utilizza l'attenzione per concentrarsi selettivamente su parti della sequenza di input. L'attenzione prende una sequenza di vettori come input per ogni esempio e restituisce un vettore di "attenzione" per ogni esempio. Questo strato attenzione è simile a un layers.GlobalAveragePoling1D ma lo strato attenzione esegue una media ponderata.

Diamo un'occhiata a come funziona:

Dove:

- \(s\) è l'indice dell'encoder.

- \(t\) è l'indice decoder.

- \(\alpha_{ts}\) sono i pesi di attenzione.

- \(h_s\) è la sequenza di uscite dell'encoder essendo curato ( "chiave" attenzione e "valore" nella terminologia trasformatore).

- \(h_t\) è stato il decodificatore partecipare alla sequenza (all'attenzione "query" nella terminologia trasformatore).

- \(c_t\) è il vettore risultante contesto.

- \(a_t\) è l'uscita finale combinando il "contesto" e "query".

Le equazioni:

- Calcola il peso attenzione, \(\alpha_{ts}\), come softmax attraverso sequenza di uscita del codificatore.

- Calcola il vettore di contesto come somma pesata degli output dell'encoder.

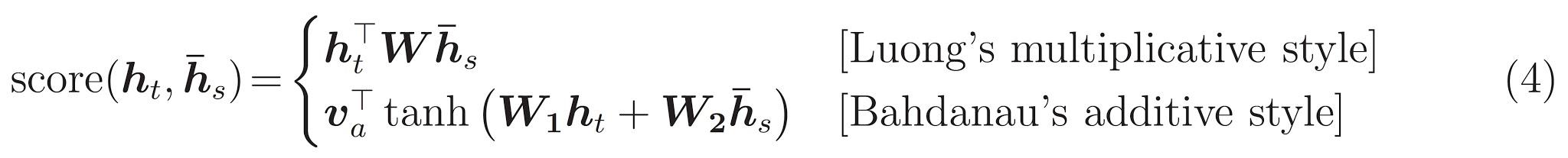

Ultimo è il \(score\) funzione. Il suo compito è calcolare un punteggio logit scalare per ogni coppia di query chiave. Esistono due approcci comuni:

Questo tutorial utilizza l'attenzione additivo di Bahdanau . Tensorflow include implementazioni di sia come layers.Attention e layers.AdditiveAttention . Classe inferiore maniglie le matrici di peso in una coppia di layers.Dense strati, e chiama l'implementazione integrata.

class BahdanauAttention(tf.keras.layers.Layer):

def __init__(self, units):

super().__init__()

# For Eqn. (4), the Bahdanau attention

self.W1 = tf.keras.layers.Dense(units, use_bias=False)

self.W2 = tf.keras.layers.Dense(units, use_bias=False)

self.attention = tf.keras.layers.AdditiveAttention()

def call(self, query, value, mask):

shape_checker = ShapeChecker()

shape_checker(query, ('batch', 't', 'query_units'))

shape_checker(value, ('batch', 's', 'value_units'))

shape_checker(mask, ('batch', 's'))

# From Eqn. (4), `W1@ht`.

w1_query = self.W1(query)

shape_checker(w1_query, ('batch', 't', 'attn_units'))

# From Eqn. (4), `W2@hs`.

w2_key = self.W2(value)

shape_checker(w2_key, ('batch', 's', 'attn_units'))

query_mask = tf.ones(tf.shape(query)[:-1], dtype=bool)

value_mask = mask

context_vector, attention_weights = self.attention(

inputs = [w1_query, value, w2_key],

mask=[query_mask, value_mask],

return_attention_scores = True,

)

shape_checker(context_vector, ('batch', 't', 'value_units'))

shape_checker(attention_weights, ('batch', 't', 's'))

return context_vector, attention_weights

Prova il livello Attenzione

Creare un BahdanauAttention livello:

attention_layer = BahdanauAttention(units)

Questo livello richiede 3 input:

- L'

query: Questo sarà generato dal decodificatore, in seguito. - Il

value: questo sarà l'uscita del codificatore. - La

mask: per escludere l'imbottitura,example_tokens != 0

(example_tokens != 0).shape

TensorShape([64, 14])

L'implementazione vettorizzata del livello di attenzione consente di passare un batch di sequenze di vettori di query e un batch di sequenze di vettori di valore. Il risultato è:

- Un batch di sequenze di risultati vettori la dimensione delle query.

- Un'attenzione lotto mappe, con dimensioni

(query_length, value_length).

# Later, the decoder will generate this attention query

example_attention_query = tf.random.normal(shape=[len(example_tokens), 2, 10])

# Attend to the encoded tokens

context_vector, attention_weights = attention_layer(

query=example_attention_query,

value=example_enc_output,

mask=(example_tokens != 0))

print(f'Attention result shape: (batch_size, query_seq_length, units): {context_vector.shape}')

print(f'Attention weights shape: (batch_size, query_seq_length, value_seq_length): {attention_weights.shape}')

Attention result shape: (batch_size, query_seq_length, units): (64, 2, 1024) Attention weights shape: (batch_size, query_seq_length, value_seq_length): (64, 2, 14)

I pesi attenzione deve sommare a 1.0 per ogni sequenza.

Qui ci sono i pesi di attenzione attraverso le sequenze a t=0 :

plt.subplot(1, 2, 1)

plt.pcolormesh(attention_weights[:, 0, :])

plt.title('Attention weights')

plt.subplot(1, 2, 2)

plt.pcolormesh(example_tokens != 0)

plt.title('Mask')

Text(0.5, 1.0, 'Mask')

A causa della piccola inizializzazione casuale i pesi attenzione sono tutti vicini a 1/(sequence_length) . Se si ingrandisce i pesi per una singola sequenza, si può vedere che c'è qualche piccola variazione che il modello può imparare ad espandersi, e sfruttare.

attention_weights.shape

TensorShape([64, 2, 14])

attention_slice = attention_weights[0, 0].numpy()

attention_slice = attention_slice[attention_slice != 0]

plt.suptitle('Attention weights for one sequence')

plt.figure(figsize=(12, 6))

a1 = plt.subplot(1, 2, 1)

plt.bar(range(len(attention_slice)), attention_slice)

# freeze the xlim

plt.xlim(plt.xlim())

plt.xlabel('Attention weights')

a2 = plt.subplot(1, 2, 2)

plt.bar(range(len(attention_slice)), attention_slice)

plt.xlabel('Attention weights, zoomed')

# zoom in

top = max(a1.get_ylim())

zoom = 0.85*top

a2.set_ylim([0.90*top, top])

a1.plot(a1.get_xlim(), [zoom, zoom], color='k')

[<matplotlib.lines.Line2D at 0x7fb42c5b1090>] <Figure size 432x288 with 0 Axes>

Il decoder

Il compito del decodificatore è generare previsioni per il prossimo token di output.

- Il decoder riceve l'intera uscita dell'encoder.

- Utilizza un RNN per tenere traccia di ciò che ha generato finora.

- Usa il suo output RNN come query per l'attenzione sull'output del codificatore, producendo il vettore di contesto.

- Combina l'output RNN e il vettore di contesto utilizzando l'equazione 3 (sotto) per generare il "vettore di attenzione".

- Genera previsioni logit per il token successivo in base al "vettore di attenzione".

Ecco il Decoder di classe e la sua inizializzazione. L'inizializzatore crea tutti i livelli necessari.

class Decoder(tf.keras.layers.Layer):

def __init__(self, output_vocab_size, embedding_dim, dec_units):

super(Decoder, self).__init__()

self.dec_units = dec_units

self.output_vocab_size = output_vocab_size

self.embedding_dim = embedding_dim

# For Step 1. The embedding layer convets token IDs to vectors

self.embedding = tf.keras.layers.Embedding(self.output_vocab_size,

embedding_dim)

# For Step 2. The RNN keeps track of what's been generated so far.

self.gru = tf.keras.layers.GRU(self.dec_units,

return_sequences=True,

return_state=True,

recurrent_initializer='glorot_uniform')

# For step 3. The RNN output will be the query for the attention layer.

self.attention = BahdanauAttention(self.dec_units)

# For step 4. Eqn. (3): converting `ct` to `at`

self.Wc = tf.keras.layers.Dense(dec_units, activation=tf.math.tanh,

use_bias=False)

# For step 5. This fully connected layer produces the logits for each

# output token.

self.fc = tf.keras.layers.Dense(self.output_vocab_size)

La call metodo per questo strato prende e restituisce più tensori. Organizzali in semplici classi contenitore:

class DecoderInput(typing.NamedTuple):

new_tokens: Any

enc_output: Any

mask: Any

class DecoderOutput(typing.NamedTuple):

logits: Any

attention_weights: Any

Qui è l'implementazione della call metodo:

def call(self,

inputs: DecoderInput,

state=None) -> Tuple[DecoderOutput, tf.Tensor]:

shape_checker = ShapeChecker()

shape_checker(inputs.new_tokens, ('batch', 't'))

shape_checker(inputs.enc_output, ('batch', 's', 'enc_units'))

shape_checker(inputs.mask, ('batch', 's'))

if state is not None:

shape_checker(state, ('batch', 'dec_units'))

# Step 1. Lookup the embeddings

vectors = self.embedding(inputs.new_tokens)

shape_checker(vectors, ('batch', 't', 'embedding_dim'))

# Step 2. Process one step with the RNN

rnn_output, state = self.gru(vectors, initial_state=state)

shape_checker(rnn_output, ('batch', 't', 'dec_units'))

shape_checker(state, ('batch', 'dec_units'))

# Step 3. Use the RNN output as the query for the attention over the

# encoder output.

context_vector, attention_weights = self.attention(

query=rnn_output, value=inputs.enc_output, mask=inputs.mask)

shape_checker(context_vector, ('batch', 't', 'dec_units'))

shape_checker(attention_weights, ('batch', 't', 's'))

# Step 4. Eqn. (3): Join the context_vector and rnn_output

# [ct; ht] shape: (batch t, value_units + query_units)

context_and_rnn_output = tf.concat([context_vector, rnn_output], axis=-1)

# Step 4. Eqn. (3): `at = tanh(Wc@[ct; ht])`

attention_vector = self.Wc(context_and_rnn_output)

shape_checker(attention_vector, ('batch', 't', 'dec_units'))

# Step 5. Generate logit predictions:

logits = self.fc(attention_vector)

shape_checker(logits, ('batch', 't', 'output_vocab_size'))

return DecoderOutput(logits, attention_weights), state

Decoder.call = call

Il codificatore elabora la sua sequenza di ingresso completo con una singola chiamata a sua RNN. Questa implementazione del decoder può fare anche questo per un allenamento efficace. Ma questo tutorial eseguirà il decoder in loop per alcuni motivi:

- Flessibilità: la scrittura del ciclo ti dà il controllo diretto sulla procedura di addestramento.

- Chiarezza: E 'possibile fare i trucchi di mascheramento e utilizzare

layers.RNN, otfa.seq2seqAPI per il confezionamento di tutto questo in una sola chiamata. Ma scriverlo come un ciclo potrebbe essere più chiaro.- Loop formazione gratuita è dimostrata nella generazione di testo tutiorial.

Ora prova a usare questo decoder.

decoder = Decoder(output_text_processor.vocabulary_size(),

embedding_dim, units)

Il decoder prende 4 ingressi.

-

new_tokens- L'ultimo token generato. Inizializzare il decoder con il"[START]"token. -

enc_output- Generato dalEncoder. -

mask- un tensore booleano che indica dovetokens != 0 -

state- Lo precedentestateuscita dal decodificatore (lo stato interno RNN del decoder). PassareNonea zero-inizializzarlo. Il documento originale lo inizializza dallo stato RNN finale del codificatore.

# Convert the target sequence, and collect the "[START]" tokens

example_output_tokens = output_text_processor(example_target_batch)

start_index = output_text_processor.get_vocabulary().index('[START]')

first_token = tf.constant([[start_index]] * example_output_tokens.shape[0])

# Run the decoder

dec_result, dec_state = decoder(

inputs = DecoderInput(new_tokens=first_token,

enc_output=example_enc_output,

mask=(example_tokens != 0)),

state = example_enc_state

)

print(f'logits shape: (batch_size, t, output_vocab_size) {dec_result.logits.shape}')

print(f'state shape: (batch_size, dec_units) {dec_state.shape}')

logits shape: (batch_size, t, output_vocab_size) (64, 1, 5000) state shape: (batch_size, dec_units) (64, 1024)

Campiona un token in base ai logit:

sampled_token = tf.random.categorical(dec_result.logits[:, 0, :], num_samples=1)

Decodifica il token come prima parola dell'output:

vocab = np.array(output_text_processor.get_vocabulary())

first_word = vocab[sampled_token.numpy()]

first_word[:5]

array([['already'],

['plants'],

['pretended'],

['convince'],

['square']], dtype='<U16')

Ora usa il decoder per generare un secondo set di logit.

- Passare lo stesso

enc_outputemask, questi non sono cambiati. - Passare il campionata token come

new_tokens. - Passare il

decoder_stateil decoder è tornato l'ultima volta, in modo che il RNN prosegue con una memoria di cui è stata interrotta l'ultima volta.

dec_result, dec_state = decoder(

DecoderInput(sampled_token,

example_enc_output,

mask=(example_tokens != 0)),

state=dec_state)

sampled_token = tf.random.categorical(dec_result.logits[:, 0, :], num_samples=1)

first_word = vocab[sampled_token.numpy()]

first_word[:5]

array([['nap'],

['mean'],

['worker'],

['passage'],

['baked']], dtype='<U16')

Formazione

Ora che hai tutti i componenti del modello, è il momento di iniziare ad addestrare il modello. Avrai bisogno:

- Una funzione di perdita e un ottimizzatore per eseguire l'ottimizzazione.

- Una funzione della fase di addestramento che definisce come aggiornare il modello per ogni batch di input/target.

- Un ciclo di formazione per guidare la formazione e salvare i checkpoint.

Definire la funzione di perdita

class MaskedLoss(tf.keras.losses.Loss):

def __init__(self):

self.name = 'masked_loss'

self.loss = tf.keras.losses.SparseCategoricalCrossentropy(

from_logits=True, reduction='none')

def __call__(self, y_true, y_pred):

shape_checker = ShapeChecker()

shape_checker(y_true, ('batch', 't'))

shape_checker(y_pred, ('batch', 't', 'logits'))

# Calculate the loss for each item in the batch.

loss = self.loss(y_true, y_pred)

shape_checker(loss, ('batch', 't'))

# Mask off the losses on padding.

mask = tf.cast(y_true != 0, tf.float32)

shape_checker(mask, ('batch', 't'))

loss *= mask

# Return the total.

return tf.reduce_sum(loss)

Implementare la fase di formazione

Inizia con una classe del modello, il processo di formazione sarà attuato come train_step metodo su questo modello. Vedere Personalizzazione adatta per i dettagli.

Qui il train_step metodo è un wrapper _train_step attuazione, che verrà più tardi. Questo involucro include un interruttore per accendere e spegnere tf.function compilazione, per rendere più facile il debug.

class TrainTranslator(tf.keras.Model):

def __init__(self, embedding_dim, units,

input_text_processor,

output_text_processor,

use_tf_function=True):

super().__init__()

# Build the encoder and decoder

encoder = Encoder(input_text_processor.vocabulary_size(),

embedding_dim, units)

decoder = Decoder(output_text_processor.vocabulary_size(),

embedding_dim, units)

self.encoder = encoder

self.decoder = decoder

self.input_text_processor = input_text_processor

self.output_text_processor = output_text_processor

self.use_tf_function = use_tf_function

self.shape_checker = ShapeChecker()

def train_step(self, inputs):

self.shape_checker = ShapeChecker()

if self.use_tf_function:

return self._tf_train_step(inputs)

else:

return self._train_step(inputs)

Nel complesso l'implementazione per il Model.train_step metodo è il seguente:

- Ricevere un lotto di

input_text, target_textdaltf.data.Dataset. - Converti quegli input di testo non elaborati in incorporamenti di token e maschere.

- Eseguire l'encoder sui

input_tokensper ottenereencoder_outputeencoder_state. - Inizializzare lo stato e la perdita del decoder.

- Loop nel corso degli

target_tokens:- Esegui il decoder un passo alla volta.

- Calcola la perdita per ogni passaggio.

- Accumula la perdita media.

- Calcolare il gradiente della perdita e usare l'ottimizzatore per applicare gli aggiornamenti del modello

trainable_variables.

Il _preprocess metodo, aggiunto sotto, implementa i passaggi 1 e # 2 #:

def _preprocess(self, input_text, target_text):

self.shape_checker(input_text, ('batch',))

self.shape_checker(target_text, ('batch',))

# Convert the text to token IDs

input_tokens = self.input_text_processor(input_text)

target_tokens = self.output_text_processor(target_text)

self.shape_checker(input_tokens, ('batch', 's'))

self.shape_checker(target_tokens, ('batch', 't'))

# Convert IDs to masks.

input_mask = input_tokens != 0

self.shape_checker(input_mask, ('batch', 's'))

target_mask = target_tokens != 0

self.shape_checker(target_mask, ('batch', 't'))

return input_tokens, input_mask, target_tokens, target_mask

TrainTranslator._preprocess = _preprocess

Il _train_step metodo, aggiunto sotto, gestisce i passaggi rimanenti eccetto per eseguire effettivamente il decoder:

def _train_step(self, inputs):

input_text, target_text = inputs

(input_tokens, input_mask,

target_tokens, target_mask) = self._preprocess(input_text, target_text)

max_target_length = tf.shape(target_tokens)[1]

with tf.GradientTape() as tape:

# Encode the input

enc_output, enc_state = self.encoder(input_tokens)

self.shape_checker(enc_output, ('batch', 's', 'enc_units'))

self.shape_checker(enc_state, ('batch', 'enc_units'))

# Initialize the decoder's state to the encoder's final state.

# This only works if the encoder and decoder have the same number of

# units.

dec_state = enc_state

loss = tf.constant(0.0)

for t in tf.range(max_target_length-1):

# Pass in two tokens from the target sequence:

# 1. The current input to the decoder.

# 2. The target for the decoder's next prediction.

new_tokens = target_tokens[:, t:t+2]

step_loss, dec_state = self._loop_step(new_tokens, input_mask,

enc_output, dec_state)

loss = loss + step_loss

# Average the loss over all non padding tokens.

average_loss = loss / tf.reduce_sum(tf.cast(target_mask, tf.float32))

# Apply an optimization step

variables = self.trainable_variables

gradients = tape.gradient(average_loss, variables)

self.optimizer.apply_gradients(zip(gradients, variables))

# Return a dict mapping metric names to current value

return {'batch_loss': average_loss}

TrainTranslator._train_step = _train_step

Il _loop_step metodo, aggiunto sotto, esegue il decoder e calcola la perdita incrementale e nuovo stato decodificatore ( dec_state ).

def _loop_step(self, new_tokens, input_mask, enc_output, dec_state):

input_token, target_token = new_tokens[:, 0:1], new_tokens[:, 1:2]

# Run the decoder one step.

decoder_input = DecoderInput(new_tokens=input_token,

enc_output=enc_output,

mask=input_mask)

dec_result, dec_state = self.decoder(decoder_input, state=dec_state)

self.shape_checker(dec_result.logits, ('batch', 't1', 'logits'))

self.shape_checker(dec_result.attention_weights, ('batch', 't1', 's'))

self.shape_checker(dec_state, ('batch', 'dec_units'))

# `self.loss` returns the total for non-padded tokens

y = target_token

y_pred = dec_result.logits

step_loss = self.loss(y, y_pred)

return step_loss, dec_state

TrainTranslator._loop_step = _loop_step

Prova la fase di allenamento

Costruire un TrainTranslator , e configurarlo per la formazione con il Model.compile metodo:

translator = TrainTranslator(

embedding_dim, units,

input_text_processor=input_text_processor,

output_text_processor=output_text_processor,

use_tf_function=False)

# Configure the loss and optimizer

translator.compile(

optimizer=tf.optimizers.Adam(),

loss=MaskedLoss(),

)

Testare la train_step . Per un modello di testo come questo la perdita dovrebbe iniziare vicino a:

np.log(output_text_processor.vocabulary_size())

8.517193191416236

%%time

for n in range(10):

print(translator.train_step([example_input_batch, example_target_batch]))

print()

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=7.5849695>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=7.55271>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=7.4929113>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=7.3296022>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=6.80437>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=5.000246>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=5.8740363>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.794589>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.3175836>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.108163>}

CPU times: user 5.49 s, sys: 0 ns, total: 5.49 s

Wall time: 5.45 s

Mentre è più facile eseguire il debug senza un tf.function lo fa dare un incremento delle prestazioni. Quindi, ora che il _train_step metodo funziona, provare il tf.function -wrapped _tf_train_step , per massimizzare le prestazioni durante l'allenamento:

@tf.function(input_signature=[[tf.TensorSpec(dtype=tf.string, shape=[None]),

tf.TensorSpec(dtype=tf.string, shape=[None])]])

def _tf_train_step(self, inputs):

return self._train_step(inputs)

TrainTranslator._tf_train_step = _tf_train_step

translator.use_tf_function = True

La prima chiamata sarà lenta, perché traccia la funzione.

translator.train_step([example_input_batch, example_target_batch])

2021-12-04 12:09:48.074769: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] function_optimizer failed: INVALID_ARGUMENT: Input 6 of node gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/PartitionedCall was passed variant from gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

2021-12-04 12:09:48.180156: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] layout failed: OUT_OF_RANGE: src_output = 25, but num_outputs is only 25

2021-12-04 12:09:48.285846: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] tfg_optimizer{} failed: INVALID_ARGUMENT: Input 6 of node gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/PartitionedCall was passed variant from gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

when importing GraphDef to MLIR module in GrapplerHook

2021-12-04 12:09:48.307794: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] function_optimizer failed: INVALID_ARGUMENT: Input 6 of node gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/PartitionedCall was passed variant from gradient_tape/while/while_grad/body/_531/gradient_tape/while/gradients/while/decoder_1/gru_3/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

2021-12-04 12:09:48.425447: W tensorflow/core/common_runtime/process_function_library_runtime.cc:866] Ignoring multi-device function optimization failure: INVALID_ARGUMENT: Input 1 of node while/body/_1/while/TensorListPushBack_56 was passed float from while/body/_1/while/decoder_1/gru_3/PartitionedCall:6 incompatible with expected variant.

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.045638>}

Ma dopo che di solito è più veloce rispetto al 2-3x desiderosi train_step metodo:

%%time

for n in range(10):

print(translator.train_step([example_input_batch, example_target_batch]))

print()

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.1098256>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.169871>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.139249>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=4.0410743>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.9664454>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.895707>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.8154407>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.7583396>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.6986444>}

{'batch_loss': <tf.Tensor: shape=(), dtype=float32, numpy=3.640298>}

CPU times: user 4.4 s, sys: 960 ms, total: 5.36 s

Wall time: 1.67 s

Un buon test di un nuovo modello è vedere che può sovrastimare un singolo batch di input. Provalo, la perdita dovrebbe andare rapidamente a zero:

losses = []

for n in range(100):

print('.', end='')

logs = translator.train_step([example_input_batch, example_target_batch])

losses.append(logs['batch_loss'].numpy())

print()

plt.plot(losses)

.................................................................................................... [<matplotlib.lines.Line2D at 0x7fb427edf210>]

Ora che sei sicuro che la fase di addestramento funzioni, crea una nuova copia del modello da addestrare da zero:

train_translator = TrainTranslator(

embedding_dim, units,

input_text_processor=input_text_processor,

output_text_processor=output_text_processor)

# Configure the loss and optimizer

train_translator.compile(

optimizer=tf.optimizers.Adam(),

loss=MaskedLoss(),

)

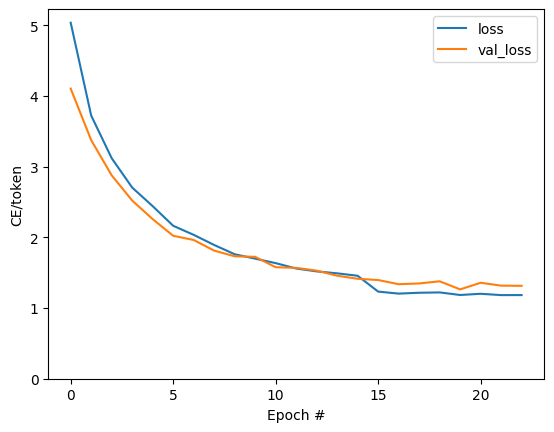

Allena il modello

Mentre non c'è niente di sbagliato con la scrittura del proprio ciclo di formazione personalizzato, l'attuazione del Model.train_step metodo, come nella sezione precedente, consente di eseguire Model.fit e evitare di riscrivere tutto il codice della caldaia-piatto.

Questo tutorial solo treni per un paio di epoche, in modo da utilizzare un callbacks.Callback per raccogliere la storia di perdite in batch, per la stampa:

class BatchLogs(tf.keras.callbacks.Callback):

def __init__(self, key):

self.key = key

self.logs = []

def on_train_batch_end(self, n, logs):

self.logs.append(logs[self.key])

batch_loss = BatchLogs('batch_loss')

train_translator.fit(dataset, epochs=3,

callbacks=[batch_loss])

Epoch 1/3

2021-12-04 12:10:11.617839: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] function_optimizer failed: INVALID_ARGUMENT: Input 6 of node StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/PartitionedCall was passed variant from StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

2021-12-04 12:10:11.737105: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] layout failed: OUT_OF_RANGE: src_output = 25, but num_outputs is only 25

2021-12-04 12:10:11.855054: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] tfg_optimizer{} failed: INVALID_ARGUMENT: Input 6 of node StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/PartitionedCall was passed variant from StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

when importing GraphDef to MLIR module in GrapplerHook

2021-12-04 12:10:11.878896: E tensorflow/core/grappler/optimizers/meta_optimizer.cc:812] function_optimizer failed: INVALID_ARGUMENT: Input 6 of node StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/PartitionedCall was passed variant from StatefulPartitionedCall/gradient_tape/while/while_grad/body/_589/gradient_tape/while/gradients/while/decoder_2/gru_5/PartitionedCall_grad/TensorListPopBack_2:1 incompatible with expected float.

2021-12-04 12:10:12.004755: W tensorflow/core/common_runtime/process_function_library_runtime.cc:866] Ignoring multi-device function optimization failure: INVALID_ARGUMENT: Input 1 of node StatefulPartitionedCall/while/body/_59/while/TensorListPushBack_56 was passed float from StatefulPartitionedCall/while/body/_59/while/decoder_2/gru_5/PartitionedCall:6 incompatible with expected variant.

1859/1859 [==============================] - 349s 185ms/step - batch_loss: 2.0443

Epoch 2/3

1859/1859 [==============================] - 350s 188ms/step - batch_loss: 1.0382

Epoch 3/3

1859/1859 [==============================] - 343s 184ms/step - batch_loss: 0.8085

<keras.callbacks.History at 0x7fb42c3eda10>

plt.plot(batch_loss.logs)

plt.ylim([0, 3])

plt.xlabel('Batch #')

plt.ylabel('CE/token')

Text(0, 0.5, 'CE/token')

I salti visibili nella trama sono ai confini dell'epoca.

Tradurre

Ora che il modello è addestrato, implementare una funzione per eseguire la completa text => text traduzione.

Per questo le esigenze del modello di invertire il text => token IDs mappatura fornita dal output_text_processor . Deve anche conoscere gli ID per i token speciali. Tutto questo è implementato nel costruttore per la nuova classe. Seguirà l'implementazione del metodo di traduzione effettivo.

Nel complesso questo è simile al ciclo di addestramento, tranne per il fatto che l'input al decodificatore in ogni fase temporale è un campione dell'ultima previsione del decodificatore.

class Translator(tf.Module):

def __init__(self, encoder, decoder, input_text_processor,

output_text_processor):

self.encoder = encoder

self.decoder = decoder

self.input_text_processor = input_text_processor

self.output_text_processor = output_text_processor

self.output_token_string_from_index = (

tf.keras.layers.StringLookup(

vocabulary=output_text_processor.get_vocabulary(),

mask_token='',

invert=True))

# The output should never generate padding, unknown, or start.

index_from_string = tf.keras.layers.StringLookup(

vocabulary=output_text_processor.get_vocabulary(), mask_token='')

token_mask_ids = index_from_string(['', '[UNK]', '[START]']).numpy()

token_mask = np.zeros([index_from_string.vocabulary_size()], dtype=np.bool)

token_mask[np.array(token_mask_ids)] = True

self.token_mask = token_mask

self.start_token = index_from_string(tf.constant('[START]'))

self.end_token = index_from_string(tf.constant('[END]'))

translator = Translator(

encoder=train_translator.encoder,

decoder=train_translator.decoder,

input_text_processor=input_text_processor,

output_text_processor=output_text_processor,

)

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:21: DeprecationWarning: `np.bool` is a deprecated alias for the builtin `bool`. To silence this warning, use `bool` by itself. Doing this will not modify any behavior and is safe. If you specifically wanted the numpy scalar type, use `np.bool_` here. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations

Converti ID token in testo

Il primo metodo per implementare è tokens_to_text che converte dagli ID di token a testo leggibile.

def tokens_to_text(self, result_tokens):

shape_checker = ShapeChecker()

shape_checker(result_tokens, ('batch', 't'))

result_text_tokens = self.output_token_string_from_index(result_tokens)

shape_checker(result_text_tokens, ('batch', 't'))

result_text = tf.strings.reduce_join(result_text_tokens,

axis=1, separator=' ')

shape_checker(result_text, ('batch'))

result_text = tf.strings.strip(result_text)

shape_checker(result_text, ('batch',))

return result_text

Translator.tokens_to_text = tokens_to_text

Inserisci alcuni ID token casuali e guarda cosa genera:

example_output_tokens = tf.random.uniform(

shape=[5, 2], minval=0, dtype=tf.int64,

maxval=output_text_processor.vocabulary_size())

translator.tokens_to_text(example_output_tokens).numpy()

array([b'vain mysteries', b'funny ham', b'drivers responding',

b'mysterious ignoring', b'fashion votes'], dtype=object)

Esempio dalle previsioni del decoder

Questa funzione prende le uscite logit del decoder e campiona gli ID token da quella distribuzione:

def sample(self, logits, temperature):

shape_checker = ShapeChecker()

# 't' is usually 1 here.

shape_checker(logits, ('batch', 't', 'vocab'))

shape_checker(self.token_mask, ('vocab',))

token_mask = self.token_mask[tf.newaxis, tf.newaxis, :]

shape_checker(token_mask, ('batch', 't', 'vocab'), broadcast=True)

# Set the logits for all masked tokens to -inf, so they are never chosen.

logits = tf.where(self.token_mask, -np.inf, logits)

if temperature == 0.0:

new_tokens = tf.argmax(logits, axis=-1)

else:

logits = tf.squeeze(logits, axis=1)

new_tokens = tf.random.categorical(logits/temperature,

num_samples=1)

shape_checker(new_tokens, ('batch', 't'))

return new_tokens

Translator.sample = sample

Prova a eseguire questa funzione su alcuni input casuali:

example_logits = tf.random.normal([5, 1, output_text_processor.vocabulary_size()])

example_output_tokens = translator.sample(example_logits, temperature=1.0)

example_output_tokens

<tf.Tensor: shape=(5, 1), dtype=int64, numpy=

array([[4506],

[3577],

[2961],

[4586],

[ 944]])>

Implementa il ciclo di traduzione

Ecco un'implementazione completa del ciclo di traduzione da testo a testo.

Questa implementazione raccoglie i risultati in liste di pitone, prima di utilizzare tf.concat di unirsi a loro in tensori.

Questa implementazione srotola staticamente grafico ad max_length iterazioni. Questo va bene con l'esecuzione ansiosa in Python.

def translate_unrolled(self,

input_text, *,

max_length=50,

return_attention=True,

temperature=1.0):

batch_size = tf.shape(input_text)[0]

input_tokens = self.input_text_processor(input_text)

enc_output, enc_state = self.encoder(input_tokens)

dec_state = enc_state

new_tokens = tf.fill([batch_size, 1], self.start_token)

result_tokens = []

attention = []

done = tf.zeros([batch_size, 1], dtype=tf.bool)

for _ in range(max_length):

dec_input = DecoderInput(new_tokens=new_tokens,

enc_output=enc_output,

mask=(input_tokens!=0))

dec_result, dec_state = self.decoder(dec_input, state=dec_state)

attention.append(dec_result.attention_weights)

new_tokens = self.sample(dec_result.logits, temperature)

# If a sequence produces an `end_token`, set it `done`

done = done | (new_tokens == self.end_token)

# Once a sequence is done it only produces 0-padding.

new_tokens = tf.where(done, tf.constant(0, dtype=tf.int64), new_tokens)

# Collect the generated tokens

result_tokens.append(new_tokens)

if tf.executing_eagerly() and tf.reduce_all(done):

break

# Convert the list of generates token ids to a list of strings.

result_tokens = tf.concat(result_tokens, axis=-1)

result_text = self.tokens_to_text(result_tokens)

if return_attention:

attention_stack = tf.concat(attention, axis=1)

return {'text': result_text, 'attention': attention_stack}

else:

return {'text': result_text}

Translator.translate = translate_unrolled

Eseguilo su un semplice input:

%%time

input_text = tf.constant([

'hace mucho frio aqui.', # "It's really cold here."

'Esta es mi vida.', # "This is my life.""

])

result = translator.translate(

input_text = input_text)

print(result['text'][0].numpy().decode())

print(result['text'][1].numpy().decode())

print()

its a long cold here . this is my life . CPU times: user 165 ms, sys: 4.37 ms, total: 169 ms Wall time: 164 ms

Se si desidera esportare questo modello è necessario avvolgere questo metodo in una tf.function . Questa implementazione di base presenta alcuni problemi se provi a farlo:

- I grafici risultanti sono molto grandi e richiedono alcuni secondi per essere compilati, salvati o caricati.

- Non si può rompere da un ciclo staticamente srotolato, così sarà sempre eseguito

max_lengthiterazioni, anche se tutte le uscite sono fatti. Ma anche in questo caso è marginalmente più veloce di un'esecuzione ansiosa.

@tf.function(input_signature=[tf.TensorSpec(dtype=tf.string, shape=[None])])

def tf_translate(self, input_text):

return self.translate(input_text)

Translator.tf_translate = tf_translate

Eseguire il tf.function una volta per compilarlo:

%%time

result = translator.tf_translate(

input_text = input_text)

CPU times: user 18.8 s, sys: 0 ns, total: 18.8 s Wall time: 18.7 s

%%time

result = translator.tf_translate(

input_text = input_text)

print(result['text'][0].numpy().decode())

print(result['text'][1].numpy().decode())

print()

its very cold here . this is my life . CPU times: user 175 ms, sys: 0 ns, total: 175 ms Wall time: 88 ms

[Facoltativo] Usa un ciclo simbolico

def translate_symbolic(self,

input_text,

*,

max_length=50,

return_attention=True,

temperature=1.0):

shape_checker = ShapeChecker()

shape_checker(input_text, ('batch',))

batch_size = tf.shape(input_text)[0]

# Encode the input

input_tokens = self.input_text_processor(input_text)

shape_checker(input_tokens, ('batch', 's'))

enc_output, enc_state = self.encoder(input_tokens)

shape_checker(enc_output, ('batch', 's', 'enc_units'))

shape_checker(enc_state, ('batch', 'enc_units'))

# Initialize the decoder

dec_state = enc_state

new_tokens = tf.fill([batch_size, 1], self.start_token)

shape_checker(new_tokens, ('batch', 't1'))

# Initialize the accumulators

result_tokens = tf.TensorArray(tf.int64, size=1, dynamic_size=True)

attention = tf.TensorArray(tf.float32, size=1, dynamic_size=True)

done = tf.zeros([batch_size, 1], dtype=tf.bool)

shape_checker(done, ('batch', 't1'))

for t in tf.range(max_length):

dec_input = DecoderInput(

new_tokens=new_tokens, enc_output=enc_output, mask=(input_tokens != 0))

dec_result, dec_state = self.decoder(dec_input, state=dec_state)

shape_checker(dec_result.attention_weights, ('batch', 't1', 's'))

attention = attention.write(t, dec_result.attention_weights)

new_tokens = self.sample(dec_result.logits, temperature)

shape_checker(dec_result.logits, ('batch', 't1', 'vocab'))

shape_checker(new_tokens, ('batch', 't1'))

# If a sequence produces an `end_token`, set it `done`

done = done | (new_tokens == self.end_token)

# Once a sequence is done it only produces 0-padding.

new_tokens = tf.where(done, tf.constant(0, dtype=tf.int64), new_tokens)

# Collect the generated tokens

result_tokens = result_tokens.write(t, new_tokens)

if tf.reduce_all(done):

break

# Convert the list of generated token ids to a list of strings.

result_tokens = result_tokens.stack()

shape_checker(result_tokens, ('t', 'batch', 't0'))

result_tokens = tf.squeeze(result_tokens, -1)

result_tokens = tf.transpose(result_tokens, [1, 0])

shape_checker(result_tokens, ('batch', 't'))

result_text = self.tokens_to_text(result_tokens)

shape_checker(result_text, ('batch',))

if return_attention:

attention_stack = attention.stack()

shape_checker(attention_stack, ('t', 'batch', 't1', 's'))

attention_stack = tf.squeeze(attention_stack, 2)

shape_checker(attention_stack, ('t', 'batch', 's'))

attention_stack = tf.transpose(attention_stack, [1, 0, 2])

shape_checker(attention_stack, ('batch', 't', 's'))

return {'text': result_text, 'attention': attention_stack}

else:

return {'text': result_text}

Translator.translate = translate_symbolic

L'implementazione iniziale utilizzava gli elenchi Python per raccogliere gli output. Questo utilizza tf.range come iteratore loop, permettendo tf.autograph per convertire il ciclo. Il cambiamento più grande in questa implementazione è l'uso di tf.TensorArray invece di pitone list di tensori accumularsi. tf.TensorArray è necessario raccogliere un numero variabile di tensori in modo grafico.

Con un'esecuzione impaziente, questa implementazione si comporta alla pari con l'originale:

%%time

result = translator.translate(

input_text = input_text)

print(result['text'][0].numpy().decode())

print(result['text'][1].numpy().decode())

print()

its very cold here . this is my life . CPU times: user 175 ms, sys: 0 ns, total: 175 ms Wall time: 170 ms

Ma quando si avvolgono in una tf.function noterete due differenze.

@tf.function(input_signature=[tf.TensorSpec(dtype=tf.string, shape=[None])])

def tf_translate(self, input_text):

return self.translate(input_text)

Translator.tf_translate = tf_translate

Primo: la creazione del grafico è molto più veloce (~ 10x), dal momento che non crea max_iterations copie del modello.

%%time

result = translator.tf_translate(

input_text = input_text)

CPU times: user 1.79 s, sys: 0 ns, total: 1.79 s Wall time: 1.77 s

Secondo: la funzione compilata è molto più veloce su input piccoli (5 volte in questo esempio), perché può uscire dal ciclo.

%%time

result = translator.tf_translate(

input_text = input_text)

print(result['text'][0].numpy().decode())

print(result['text'][1].numpy().decode())

print()

its very cold here . this is my life . CPU times: user 40.1 ms, sys: 0 ns, total: 40.1 ms Wall time: 17.1 ms

Visualizza il processo

I pesi di attenzione restituiti dal translate metodo show dove il modello è stato "guardare" quando si genera ogni token uscita.

Quindi la somma dell'attenzione sull'input dovrebbe restituire tutti quelli:

a = result['attention'][0]

print(np.sum(a, axis=-1))

[1.0000001 0.99999994 1. 0.99999994 1. 0.99999994]

Ecco la distribuzione dell'attenzione per la prima fase di output del primo esempio. Nota come l'attenzione sia ora molto più focalizzata di quanto non fosse per il modello non addestrato:

_ = plt.bar(range(len(a[0, :])), a[0, :])

Poiché c'è un allineamento approssimativo tra le parole di input e output, ti aspetti che l'attenzione sia focalizzata vicino alla diagonale:

plt.imshow(np.array(a), vmin=0.0)

<matplotlib.image.AxesImage at 0x7faf2886ced0>

Ecco del codice per creare una trama di attenzione migliore:

Grafici di attenzione etichettati

def plot_attention(attention, sentence, predicted_sentence):

sentence = tf_lower_and_split_punct(sentence).numpy().decode().split()

predicted_sentence = predicted_sentence.numpy().decode().split() + ['[END]']

fig = plt.figure(figsize=(10, 10))

ax = fig.add_subplot(1, 1, 1)

attention = attention[:len(predicted_sentence), :len(sentence)]

ax.matshow(attention, cmap='viridis', vmin=0.0)

fontdict = {'fontsize': 14}

ax.set_xticklabels([''] + sentence, fontdict=fontdict, rotation=90)

ax.set_yticklabels([''] + predicted_sentence, fontdict=fontdict)

ax.xaxis.set_major_locator(ticker.MultipleLocator(1))

ax.yaxis.set_major_locator(ticker.MultipleLocator(1))

ax.set_xlabel('Input text')

ax.set_ylabel('Output text')

plt.suptitle('Attention weights')

i=0

plot_attention(result['attention'][i], input_text[i], result['text'][i])

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:14: UserWarning: FixedFormatter should only be used together with FixedLocator /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:15: UserWarning: FixedFormatter should only be used together with FixedLocator from ipykernel import kernelapp as app

Traduci qualche altra frase e tracciale:

%%time

three_input_text = tf.constant([

# This is my life.

'Esta es mi vida.',

# Are they still home?

'¿Todavía están en casa?',

# Try to find out.'

'Tratar de descubrir.',

])

result = translator.tf_translate(three_input_text)

for tr in result['text']:

print(tr.numpy().decode())

print()

this is my life . are you still at home ? all about killed . CPU times: user 78 ms, sys: 23 ms, total: 101 ms Wall time: 23.1 ms

result['text']

<tf.Tensor: shape=(3,), dtype=string, numpy=

array([b'this is my life .', b'are you still at home ?',

b'all about killed .'], dtype=object)>

i = 0

plot_attention(result['attention'][i], three_input_text[i], result['text'][i])

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:14: UserWarning: FixedFormatter should only be used together with FixedLocator /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:15: UserWarning: FixedFormatter should only be used together with FixedLocator from ipykernel import kernelapp as app

i = 1

plot_attention(result['attention'][i], three_input_text[i], result['text'][i])

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:14: UserWarning: FixedFormatter should only be used together with FixedLocator /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:15: UserWarning: FixedFormatter should only be used together with FixedLocator from ipykernel import kernelapp as app

i = 2

plot_attention(result['attention'][i], three_input_text[i], result['text'][i])

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:14: UserWarning: FixedFormatter should only be used together with FixedLocator /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:15: UserWarning: FixedFormatter should only be used together with FixedLocator from ipykernel import kernelapp as app

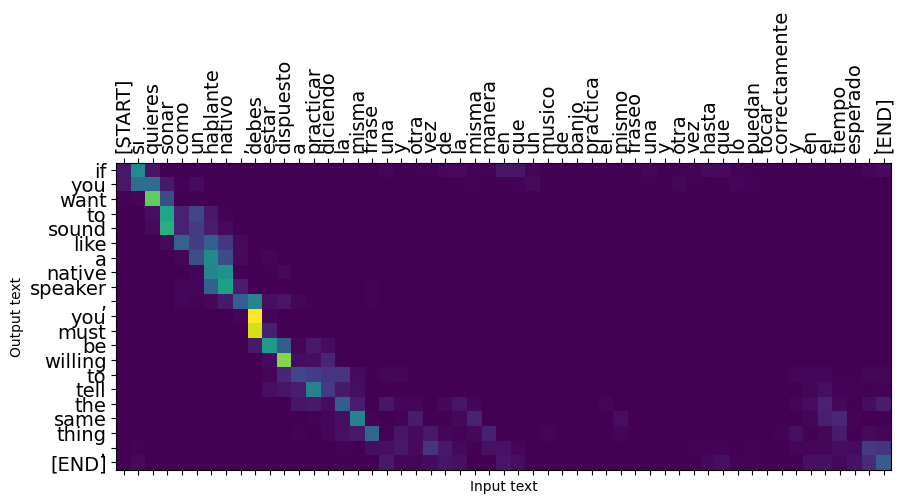

Le frasi brevi spesso funzionano bene, ma se l'input è troppo lungo il modello perde letteralmente il focus e smette di fornire previsioni ragionevoli. Ci sono due ragioni principali per questo:

- Il modello è stato addestrato con la forzatura dell'insegnante che alimenta il token corretto ad ogni passaggio, indipendentemente dalle previsioni del modello. Il modello potrebbe essere reso più robusto se a volte fosse alimentato dalle proprie previsioni.

- Il modello ha accesso solo all'output precedente tramite lo stato RNN. Se lo stato RNN viene danneggiato, non è possibile ripristinare il modello. Transformers risolvere questo utilizzando auto-attenzione nella encoder e decoder.

long_input_text = tf.constant([inp[-1]])

import textwrap

print('Expected output:\n', '\n'.join(textwrap.wrap(targ[-1])))

Expected output: If you want to sound like a native speaker, you must be willing to practice saying the same sentence over and over in the same way that banjo players practice the same phrase over and over until they can play it correctly and at the desired tempo.

result = translator.tf_translate(long_input_text)

i = 0

plot_attention(result['attention'][i], long_input_text[i], result['text'][i])

_ = plt.suptitle('This never works')

/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:14: UserWarning: FixedFormatter should only be used together with FixedLocator /tmpfs/src/tf_docs_env/lib/python3.7/site-packages/ipykernel_launcher.py:15: UserWarning: FixedFormatter should only be used together with FixedLocator from ipykernel import kernelapp as app

Esportare

Una volta che si dispone di un modello si è soddisfatti si potrebbe desiderare di esportarlo come tf.saved_model per l'uso al di fuori di questo programma Python che lo ha creato.

Dal momento che il modello è una sottoclasse di tf.Module (attraverso keras.Model ), e tutte le funzionalità per l'esportazione è compilato in un tf.function il modello dovrebbe esportare in modo pulito con tf.saved_model.save :

Ora che la funzione è stata tracciata può essere esportato utilizzando saved_model.save :

tf.saved_model.save(translator, 'translator',

signatures={'serving_default': translator.tf_translate})

2021-12-04 12:27:54.310890: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them. WARNING:absl:Found untraced functions such as encoder_2_layer_call_fn, encoder_2_layer_call_and_return_conditional_losses, decoder_2_layer_call_fn, decoder_2_layer_call_and_return_conditional_losses, embedding_4_layer_call_fn while saving (showing 5 of 60). These functions will not be directly callable after loading. INFO:tensorflow:Assets written to: translator/assets INFO:tensorflow:Assets written to: translator/assets

reloaded = tf.saved_model.load('translator')

result = reloaded.tf_translate(three_input_text)

%%time

result = reloaded.tf_translate(three_input_text)

for tr in result['text']:

print(tr.numpy().decode())

print()

this is my life . are you still at home ? find out about to find out . CPU times: user 42.8 ms, sys: 7.69 ms, total: 50.5 ms Wall time: 20 ms

Prossimi passi

- Scarica un set di dati diverso da esperimento con le traduzioni, per esempio, Inglese a Tedesco, o Inglese a Francese.

- Sperimenta con l'addestramento su un set di dati più grande o usando più epoche.

- Provare il trasformatore esercitazione che implementa il task simile ma utilizza uno strati trasformatore anziché RNR. Questa versione utilizza anche un

text.BertTokenizerper implementare wordpiece tokenizzazione. - Dai un'occhiata al tensorflow_addons.seq2seq per l'attuazione di questo tipo di sequenza per modello di sequenza. La

tfa.seq2seqpacchetto include funzionalità di livello superiore comeseq2seq.BeamSearchDecoder.