This notebook illustrates how to access the Universal Sentence Encoder and use it for sentence similarity and sentence classification tasks.

The Universal Sentence Encoder makes getting sentence-level embeddings as easy as it has historically been to look up the embeddings for individual words. The sentence embeddings can then be used directly to compute sentence-level meaning similarity, and to improve performance on downstream classification tasks with less supervised training data.

Setup

This section sets up the environment for access to the Universal Sentence Encoder on TF Hub and provides examples of applying the encoder to words, sentences, and paragraphs.

%%capture

!pip3 install seaborn

More detailed information about installing TensorFlow can be found at https://www.tensorflow.org/install/.

Load the Universal Sentence Encoder's TF Hub module

from absl import logging

import tensorflow as tf

import tensorflow_hub as hub

import matplotlib.pyplot as plt

import numpy as np

import os

import pandas as pd

import re

import seaborn as sns

module_url = "https://tfhub.dev/google/universal-sentence-encoder/4"

model = hub.load(module_url)

print("module %s loaded" % module_url)

def embed(input):

    return model(input)

2024-03-10 12:03:32.159319: E external/local_xla/xla/stream_executor/cuda/cuda_driver.cc:282] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected
module https://tfhub.dev/google/universal-sentence-encoder/4 loaded

Compute a representation for each message, showing various lengths supported.

word = "Elephant"

sentence = "I am a sentence for which I would like to get its embedding."

paragraph = (

"Universal Sentence Encoder embeddings also support short paragraphs. "

"There is no hard limit on how long the paragraph is. Roughly, the longer "

"the more 'diluted' the embedding will be.")

messages = [word, sentence, paragraph]

# Reduce logging output.

logging.set_verbosity(logging.ERROR)

message_embeddings = embed(messages)

for i, message_embedding in enumerate(np.array(message_embeddings).tolist()):

    print("Message: {}".format(messages[i]))

    print("Embedding size: {}".format(len(message_embedding)))

    message_embedding_snippet = ", ".join(

        (str(x) for x in message_embedding[:3]))

    print("Embedding: [{}, ...]\n".format(message_embedding_snippet))

Message: Elephant
Embedding size: 512
Embedding: [0.008344484493136406, 0.0004808559315279126, 0.06595249474048615, ...]

Message: I am a sentence for which I would like to get its embedding.
Embedding size: 512
Embedding: [0.050808604806661606, -0.016524329781532288, 0.01573779620230198, ...]

Message: Universal Sentence Encoder embeddings also support short paragraphs. There is no hard limit on how long the paragraph is. Roughly, the longer the more 'diluted' the embedding will be.
Embedding size: 512
Embedding: [-0.028332693502306938, -0.0558621808886528, -0.012941482476890087, ...]

Semantic Textual Similarity Task Example

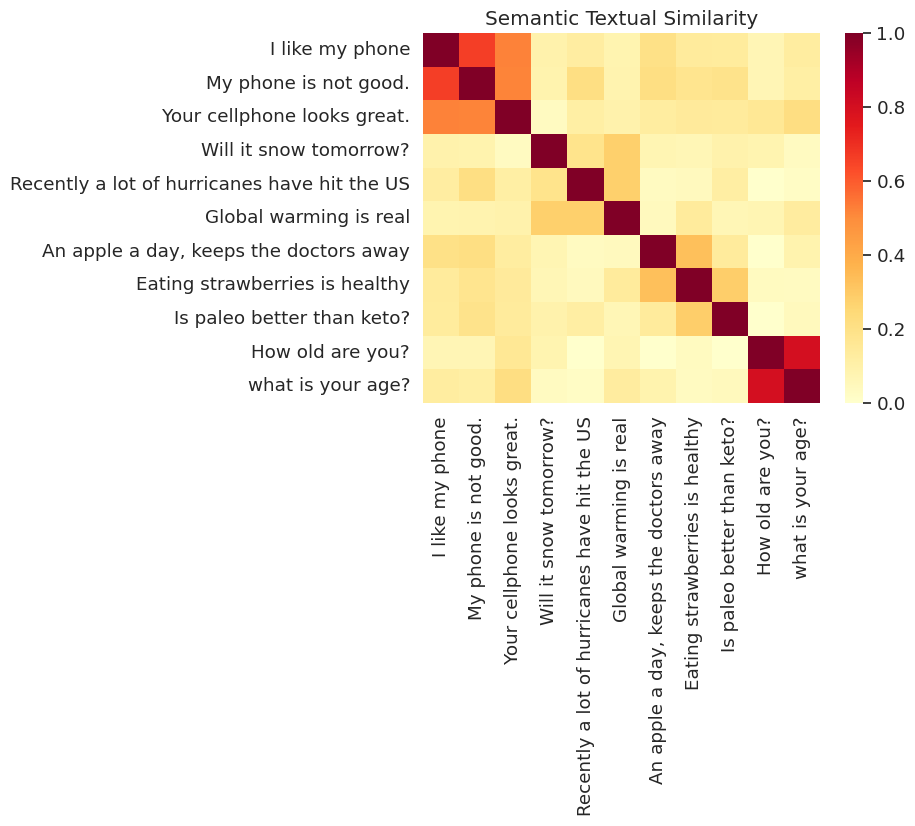

The embeddings produced by the Universal Sentence Encoder are approximately normalized, so the inner product of two encodings approximates their cosine similarity. The semantic similarity of two sentences can therefore be computed as the inner product of the encodings.
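As a rough illustration of why the inner product works as a similarity score, the sketch below uses toy 3-dimensional vectors in place of real 512-dimensional embeddings. The helper names (`l2_normalize`, `inner`, `cosine_similarity`) and the vectors are assumptions made for this example; they are not part of the encoder or of TF Hub. It shows that once vectors have unit length, the inner product equals the cosine similarity.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (L2 norm of 1)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def inner(u, v):
    """Inner (dot) product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine_similarity(u, v):
    """Cosine of the angle between u and v, for vectors of any length."""
    return inner(u, v) / (math.sqrt(inner(u, u)) * math.sqrt(inner(v, v)))


# Toy vectors standing in for sentence embeddings (hypothetical values).
a = [1.0, 2.0, 2.0]
b = [2.0, 1.0, 2.0]

a_unit = l2_normalize(a)
b_unit = l2_normalize(b)

# For unit-length vectors, the inner product is the cosine similarity.
similarity = inner(a_unit, b_unit)
print(similarity)
print(cosine_similarity(a, b))
```

Because the encoder's outputs are only approximately normalized, the inner product of raw embeddings is an approximation of cosine similarity rather than an exact match.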

def plot_similarity(labels, features, rotation):

    corr = np.inner(features, features)

    sns.set(font_scale=1.2)

    g = sns.heatmap(

        corr,

        xticklabels=labels,

        yticklabels=labels,

        vmin=0,

        vmax=1,

        cmap="YlOrRd")

    g.set_xticklabels(labels, rotation=rotation)

    g.set_title("Semantic Textual Similarity")

def run_and_plot(messages_):

    message_embeddings_ = embed(messages_)

    plot_similarity(messages_, message_embeddings_, 90)

Similarity Visualized

Here we show the similarity in a heat map. The final graph is an 11x11 matrix (one row and one column per sentence in the list below), where each entry [i, j] is colored based on the inner product of the encodings for sentences i and j.

messages = [

    # Smartphones

    "I like my phone",

    "My phone is not good.",

    "Your cellphone looks great.",

    # Weather

    "Will it snow tomorrow?",

    "Recently a lot of hurricanes have hit the US",

    "Global warming is real",

    # Food and health

    "An apple a day, keeps the doctors away",

    "Eating strawberries is healthy",

    "Is paleo better than keto?",

    # Asking about age

    "How old are you?",

    "what is your age?",

]

run_and_plot(messages)

Evaluation: STS (Semantic Textual Similarity) Benchmark

The STS Benchmark provides an intrinsic evaluation of the degree to which similarity scores computed using sentence embeddings align with human judgements. The benchmark requires systems to return similarity scores for a diverse selection of sentence pairs. Pearson correlation is then used to evaluate the quality of the machine similarity scores against human judgements.
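To make the evaluation metric concrete, here is a minimal pure-Python sketch of the Pearson correlation coefficient. The function name `pearson_r` and the two score lists are hypothetical and exist only for illustration; the values are not taken from the STS data, and the notebook itself relies on SciPy for this computation.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values for illustration only, not taken from the STS data.
human_scores = [0.0, 1.5, 3.0, 4.0, 5.0]       # made-up human ratings
model_scores = [0.10, 0.35, 0.60, 0.80, 0.95]  # made-up model similarities

r = pearson_r(model_scores, human_scores)
print("Pearson r = {:.4f}".format(r))
```

Pearson correlation is unchanged by shifting or positively rescaling either variable, so the model scores and the human ratings do not need to share a numeric range.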

Download data

import pandas

import scipy.stats

import math

import csv

sts_dataset = tf.keras.utils.get_file(

    fname="Stsbenchmark.tar.gz",

    origin="http://ixa2.si.ehu.es/stswiki/images/4/48/Stsbenchmark.tar.gz",

    extract=True)

sts_dev = pandas.read_table(

    os.path.join(os.path.dirname(sts_dataset), "stsbenchmark", "sts-dev.csv"),

    skip_blank_lines=True,

    usecols=[4, 5, 6],

    names=["sim", "sent_1", "sent_2"])

sts_test = pandas.read_table(

    os.path.join(

        os.path.dirname(sts_dataset), "stsbenchmark", "sts-test.csv"),

    quoting=csv.QUOTE_NONE,

    skip_blank_lines=True,

    usecols=[4, 5, 6],

    names=["sim", "sent_1", "sent_2"])

# cleanup some NaN values in sts_dev

sts_dev = sts_dev[[isinstance(s, str) for s in sts_dev['sent_2']]]

Downloading data from http://ixa2.si.ehu.es/stswiki/images/4/48/Stsbenchmark.tar.gz
409630/409630 ━━━━━━━━━━━━━━━━━━━━ 1s 2us/step

Evaluate Sentence Embeddings

sts_data = sts_dev

def run_sts_benchmark(batch):

    """Returns the similarity scores"""

    sts_encode1 = tf.nn.l2_normalize(embed(tf.constant(batch['sent_1'].tolist())), axis=1)

    sts_encode2 = tf.nn.l2_normalize(embed(tf.constant(batch['sent_2'].tolist())), axis=1)

    cosine_similarities = tf.reduce_sum(tf.multiply(sts_encode1, sts_encode2), axis=1)

    clip_cosine_similarities = tf.clip_by_value(cosine_similarities, -1.0, 1.0)

    scores = 1.0 - tf.acos(clip_cosine_similarities) / math.pi

    return scores

dev_scores = sts_data['sim'].tolist()

scores = []

for batch in np.array_split(sts_data, 10):

    scores.extend(run_sts_benchmark(batch))

pearson_correlation = scipy.stats.pearsonr(scores, dev_scores)

print('Pearson correlation coefficient = {0}\np-value = {1}'.format(

    pearson_correlation[0], pearson_correlation[1]))

/tmpfs/src/tf_docs_env/lib/python3.9/site-packages/numpy/core/fromnumeric.py:59: FutureWarning: 'DataFrame.swapaxes' is deprecated and will be removed in a future version. Please use 'DataFrame.transpose' instead.
  return bound(*args, **kwds)
Pearson correlation coefficient = 0.8036396940028219
p-value = 0.0
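The scoring step in run_sts_benchmark does not report the cosine similarity directly. It clips the value to [-1, 1], takes the arccosine to recover the angle between the two embeddings, and maps that angle to a score between 0 and 1. The sketch below mirrors that arithmetic for single values in plain Python. The function name `angular_similarity` is an assumption made for this example and does not appear in the notebook.

```python
import math


def angular_similarity(cos_sim):
    """Map a cosine similarity to 1 - angle/pi, a score in [0, 1]."""
    # Clip first: rounding error can push a cosine slightly outside
    # [-1, 1], which would make acos raise a ValueError.
    clipped = max(-1.0, min(1.0, cos_sim))
    return 1.0 - math.acos(clipped) / math.pi


# Identical direction, 60 degrees apart, orthogonal, opposite direction.
for c in (1.0, 0.5, 0.0, -1.0):
    print(c, angular_similarity(c))
```

Under this mapping, identical directions score 1, orthogonal vectors score 0.5, and opposite directions score 0.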