Voir sur TensorFlow.org Voir sur TensorFlow.org |  Exécuter dans Google Colab Exécuter dans Google Colab |  Voir sur GitHub Voir sur GitHub |  Télécharger le cahier Télécharger le cahier |  Voir les modèles TF Hub Voir les modèles TF Hub |

Cette collaboration montre comment :

- Modèles de charge BERT de tensorflow Hub qui ont été formés à différentes tâches , notamment Mnll, SQUAD et PubMed

- Utilisez un modèle de prétraitement correspondant pour tokeniser le texte brut et le convertir en identifiants

- Générer la sortie groupée et de séquence à partir des identifiants d'entrée de jeton à l'aide du modèle chargé

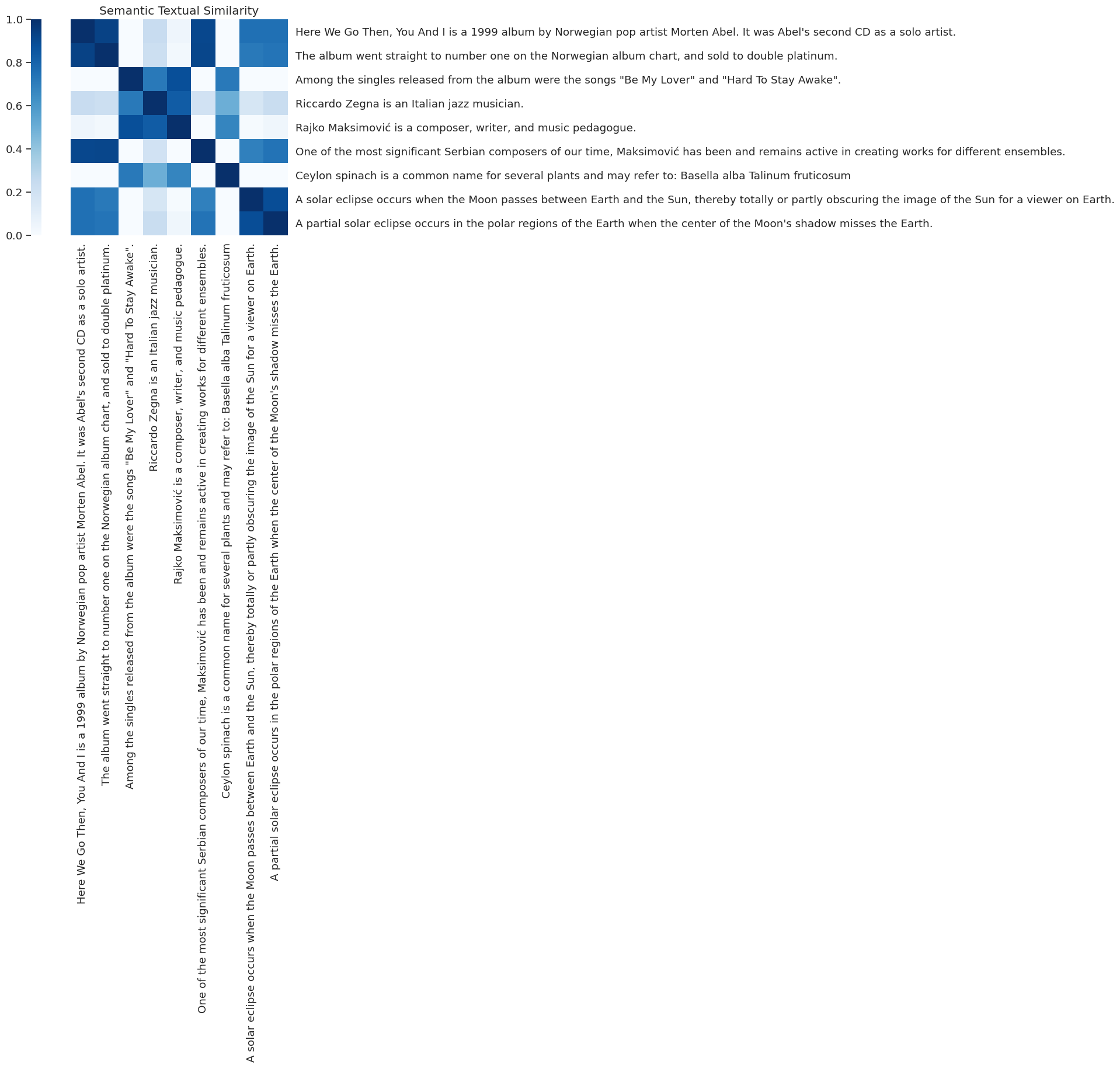

- Regardez la similitude sémantique des sorties groupées de différentes phrases

Remarque : Cette collaboration doit être exécutée avec un environnement d'exécution GPU

Configurer et importer

pip3 install --quiet tensorflowpip3 install --quiet tensorflow_text

import seaborn as sns

from sklearn.metrics import pairwise

import tensorflow as tf

import tensorflow_hub as hub

import tensorflow_text as text # Imports TF ops for preprocessing.

Configurer le modèle

BERT_MODEL = "https://tfhub.dev/google/experts/bert/wiki_books/2" # @param {type: "string"} ["https://tfhub.dev/google/experts/bert/wiki_books/2", "https://tfhub.dev/google/experts/bert/wiki_books/mnli/2", "https://tfhub.dev/google/experts/bert/wiki_books/qnli/2", "https://tfhub.dev/google/experts/bert/wiki_books/qqp/2", "https://tfhub.dev/google/experts/bert/wiki_books/squad2/2", "https://tfhub.dev/google/experts/bert/wiki_books/sst2/2", "https://tfhub.dev/google/experts/bert/pubmed/2", "https://tfhub.dev/google/experts/bert/pubmed/squad2/2"]

# Preprocessing must match the model, but all the above use the same.

PREPROCESS_MODEL = "https://tfhub.dev/tensorflow/bert_en_uncased_preprocess/3"

Phrases

Prenons quelques phrases de Wikipedia pour parcourir le modèle

sentences = [

"Here We Go Then, You And I is a 1999 album by Norwegian pop artist Morten Abel. It was Abel's second CD as a solo artist.",

"The album went straight to number one on the Norwegian album chart, and sold to double platinum.",

"Among the singles released from the album were the songs \"Be My Lover\" and \"Hard To Stay Awake\".",

"Riccardo Zegna is an Italian jazz musician.",

"Rajko Maksimović is a composer, writer, and music pedagogue.",

"One of the most significant Serbian composers of our time, Maksimović has been and remains active in creating works for different ensembles.",

"Ceylon spinach is a common name for several plants and may refer to: Basella alba Talinum fruticosum",

"A solar eclipse occurs when the Moon passes between Earth and the Sun, thereby totally or partly obscuring the image of the Sun for a viewer on Earth.",

"A partial solar eclipse occurs in the polar regions of the Earth when the center of the Moon's shadow misses the Earth.",

]

Exécuter le modèle

Nous allons charger le modèle BERT de TF-Hub, tokeniser nos phrases à l'aide du modèle de prétraitement correspondant de TF-Hub, puis introduire les phrases tokenisées dans le modèle. Pour que cette collaboration reste simple et rapide, nous vous recommandons de l'exécuter sur GPU.

Aller à Runtime → Changer le type d'exécution pour vous assurer que le GPU est sélectionné

preprocess = hub.load(PREPROCESS_MODEL)

bert = hub.load(BERT_MODEL)

inputs = preprocess(sentences)

outputs = bert(inputs)

print("Sentences:")

print(sentences)

print("\nBERT inputs:")

print(inputs)

print("\nPooled embeddings:")

print(outputs["pooled_output"])

print("\nPer token embeddings:")

print(outputs["sequence_output"])

Sentences:

["Here We Go Then, You And I is a 1999 album by Norwegian pop artist Morten Abel. It was Abel's second CD as a solo artist.", 'The album went straight to number one on the Norwegian album chart, and sold to double platinum.', 'Among the singles released from the album were the songs "Be My Lover" and "Hard To Stay Awake".', 'Riccardo Zegna is an Italian jazz musician.', 'Rajko Maksimović is a composer, writer, and music pedagogue.', 'One of the most significant Serbian composers of our time, Maksimović has been and remains active in creating works for different ensembles.', 'Ceylon spinach is a common name for several plants and may refer to: Basella alba Talinum fruticosum', 'A solar eclipse occurs when the Moon passes between Earth and the Sun, thereby totally or partly obscuring the image of the Sun for a viewer on Earth.', "A partial solar eclipse occurs in the polar regions of the Earth when the center of the Moon's shadow misses the Earth."]

BERT inputs:

{'input_word_ids': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=

array([[ 101, 2182, 2057, ..., 0, 0, 0],

[ 101, 1996, 2201, ..., 0, 0, 0],

[ 101, 2426, 1996, ..., 0, 0, 0],

...,

[ 101, 16447, 6714, ..., 0, 0, 0],

[ 101, 1037, 5943, ..., 0, 0, 0],

[ 101, 1037, 7704, ..., 0, 0, 0]], dtype=int32)>, 'input_type_ids': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=

array([[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

...,

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0],

[0, 0, 0, ..., 0, 0, 0]], dtype=int32)>, 'input_mask': <tf.Tensor: shape=(9, 128), dtype=int32, numpy=

array([[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

...,

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0],

[1, 1, 1, ..., 0, 0, 0]], dtype=int32)>}

Pooled embeddings:

tf.Tensor(

[[ 0.7975967 -0.48580563 0.49781477 ... -0.3448825 0.3972752

-0.2063976 ]

[ 0.57120323 -0.41205275 0.7048914 ... -0.35185075 0.19032307

-0.4041895 ]

[-0.699383 0.1586691 0.06569938 ... -0.0623244 -0.81550187

-0.07923658]

...

[-0.35727128 0.7708977 0.1575658 ... 0.44185698 -0.8644815

0.04504769]

[ 0.91077 0.41501352 0.5606345 ... -0.49263868 0.39640594

-0.05036103]

[ 0.90502906 -0.15505145 0.72672117 ... -0.34734493 0.5052651

-0.19543159]], shape=(9, 768), dtype=float32)

Per token embeddings:

tf.Tensor(

[[[ 1.0919718e+00 -5.3055555e-01 5.4639673e-01 ... -3.5962367e-01

4.2040938e-01 -2.0940571e-01]

[ 1.0143853e+00 7.8079259e-01 8.5375798e-01 ... 5.5282074e-01

-1.1245787e+00 5.6027526e-01]

[ 7.8862888e-01 7.7776514e-02 9.5150793e-01 ... -1.9075295e-01

5.9206045e-01 6.1910731e-01]

...

[-3.2203159e-01 -4.2521179e-01 -1.2823829e-01 ... -3.9094865e-01

-7.9097575e-01 4.2236605e-01]

[-3.1039350e-02 2.3985808e-01 -2.1994556e-01 ... -1.1440065e-01

-1.2680519e+00 -1.6136172e-01]

[-4.2063516e-01 5.4972863e-01 -3.2444897e-01 ... -1.8478543e-01

-1.1342984e+00 -5.8974154e-02]]

[[ 6.4930701e-01 -4.3808129e-01 8.7695646e-01 ... -3.6755449e-01

1.9267237e-01 -4.2864648e-01]

[-1.1248719e+00 2.9931602e-01 1.1799662e+00 ... 4.8729455e-01

5.3400528e-01 2.2836192e-01]

[-2.7057338e-01 3.2351881e-02 1.0425698e+00 ... 5.8993816e-01

1.5367918e+00 5.8425623e-01]

...

[-1.4762508e+00 1.8239072e-01 5.5875197e-02 ... -1.6733241e+00

-6.7398834e-01 -7.2449744e-01]

[-1.5138135e+00 5.8184558e-01 1.6141933e-01 ... -1.2640834e+00

-4.0272138e-01 -9.7197199e-01]

[-4.7153085e-01 2.2817247e-01 5.2776134e-01 ... -7.5483751e-01

-9.0903056e-01 -1.6954714e-01]]

[[-8.6609173e-01 1.6002113e-01 6.5794155e-02 ... -6.2405296e-02

-1.1432388e+00 -7.9403043e-02]

[ 7.7117836e-01 7.0804822e-01 1.1350115e-01 ... 7.8831035e-01

-3.1438148e-01 -9.7487110e-01]

[-4.4002479e-01 -3.0059522e-01 3.5479453e-01 ... 7.9739094e-02

-4.7393662e-01 -1.1001848e+00]

...

[-1.0205302e+00 2.6938522e-01 -4.7310370e-01 ... -6.6319543e-01

-1.4579915e+00 -3.4665459e-01]

[-9.7003460e-01 -4.5014530e-02 -5.9779549e-01 ... -3.0526626e-01

-1.2744237e+00 -2.8051588e-01]

[-7.3144108e-01 1.7699355e-01 -4.6257967e-01 ... -1.6062307e-01

-1.6346070e+00 -3.2060605e-01]]

...

[[-3.7375441e-01 1.0225365e+00 1.5888955e-01 ... 4.7453594e-01

-1.3108152e+00 4.5078207e-02]

[-4.1589144e-01 5.0019276e-01 -4.5844245e-01 ... 4.1482472e-01

-6.2065876e-01 -7.1555024e-01]

[-1.2504390e+00 5.0936425e-01 -5.7103634e-01 ... 3.5491806e-01

2.4368477e-01 -2.0577228e+00]

...

[ 1.3393667e-01 1.1859171e+00 -2.2169831e-01 ... -8.1946820e-01

-1.6737309e+00 -3.9692628e-01]

[-3.3662504e-01 1.6556220e+00 -3.7812781e-01 ... -9.6745497e-01

-1.4801039e+00 -8.3330971e-01]

[-2.2649485e-01 1.6178465e+00 -6.7044652e-01 ... -4.9078423e-01

-1.4535751e+00 -7.1707505e-01]]

[[ 1.5320227e+00 4.4165283e-01 6.3375801e-01 ... -5.3953874e-01

4.1937760e-01 -5.0403677e-02]

[ 8.9377600e-01 8.9395344e-01 3.0626178e-02 ... 5.9039176e-02

-2.0649448e-01 -8.4811246e-01]

[-1.8557828e-02 1.0479081e+00 -1.3329606e+00 ... -1.3869843e-01

-3.7879568e-01 -4.9068305e-01]

...

[ 1.4275622e+00 1.0696816e-01 -4.0635362e-02 ... -3.1778324e-02

-4.1460156e-01 7.0036823e-01]

[ 1.1286633e+00 1.4547651e-01 -6.1372471e-01 ... 4.7491628e-01

-3.9852056e-01 4.3124324e-01]

[ 1.4393284e+00 1.8030575e-01 -4.2854339e-01 ... -2.5022790e-01

-1.0000544e+00 3.5985461e-01]]

[[ 1.4993407e+00 -1.5631223e-01 9.2174333e-01 ... -3.6242130e-01

5.5635113e-01 -1.9797830e-01]

[ 1.1110539e+00 3.6651433e-01 3.5505858e-01 ... -5.4297698e-01

1.4471304e-01 -3.1675813e-01]

[ 2.4048802e-01 3.8115788e-01 -5.9182465e-01 ... 3.7410852e-01

-5.9829473e-01 -1.0166264e+00]

...

[ 1.0158644e+00 5.0260526e-01 1.0737082e-01 ... -9.5642781e-01

-4.1039532e-01 -2.6760197e-01]

[ 1.1848929e+00 6.5479934e-01 1.0166168e-03 ... -8.6154389e-01

-8.8036627e-02 -3.0636966e-01]

[ 1.2669108e+00 4.7768092e-01 6.6289604e-03 ... -1.1585802e+00

-7.0675731e-02 -1.8678737e-01]]], shape=(9, 128, 768), dtype=float32)

Similitude sémantique

Maintenant , nous allons jeter un oeil à la pooled_output incorporations de nos phrases et de comparer la façon dont ils sont semblables dans des phrases.

Fonctions d'assistance

def plot_similarity(features, labels):

"""Plot a similarity matrix of the embeddings."""

cos_sim = pairwise.cosine_similarity(features)

sns.set(font_scale=1.2)

cbar_kws=dict(use_gridspec=False, location="left")

g = sns.heatmap(

cos_sim, xticklabels=labels, yticklabels=labels,

vmin=0, vmax=1, cmap="Blues", cbar_kws=cbar_kws)

g.tick_params(labelright=True, labelleft=False)

g.set_yticklabels(labels, rotation=0)

g.set_title("Semantic Textual Similarity")

plot_similarity(outputs["pooled_output"], sentences)

Apprendre encore plus

- Trouver plus de modèles BERT sur tensorflow Hub

- Ce bloc - notes montre l' inférence simple avec BERT, vous pouvez trouver un tutoriel plus avancé au sujet BERT réglage fin à tensorflow.org/official_models/fine_tuning_bert

- Nous avons utilisé une seule puce GPU pour exécuter le modèle, vous pouvez en savoir plus sur les modèles de charge à l' aide tf.distribute à tensorflow.org/tutorials/distribute/save_and_load