Note: This tutorial uses deprecated TensorFlow 1 functionality. See the TensorFlow 2 version for a modern approach to this task.


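TensorFlow Hub (TF-Hub) is a platform for sharing machine learning expertise packaged in reusable resources, notably pre-trained modules. This tutorial is organized into two main parts.

Introduction: Training a text classifier with TF-Hub

We will use a TF-Hub text embedding module to train a simple sentiment classifier with a reasonable baseline accuracy. We will then analyze the predictions to make sure the model is reasonable and suggest improvements to increase its accuracy.

Advanced: Transfer learning analysis

In this section, we will use various TF-Hub modules to compare their effect on the accuracy of the estimator and to demonstrate the advantages and pitfalls of transfer learning.

Optional prerequisites

- A basic understanding of TensorFlow's premade Estimator framework.

- Familiarity with the Pandas library.

Setup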

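# Install seaborn, which is used below to plot the confusion matrix.
# In a Colab or Jupyter notebook cell, prefix the command with "!".
pip install seaborn

For more details on installing TensorFlow, see https://www.tensorflow.org/install/.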

from absl import logging

import tensorflow as tf
import tensorflow_hub as hub
import matplotlib.pyplot as plt
import numpy as np
import os
import pandas as pd
import re
import seaborn as sns


Getting started

Data

We will try to solve the Large Movie Review Dataset v1.0 task (Maas et al., 2011). The dataset consists of IMDB movie reviews labeled by positivity on a scale from 1 to 10. The task is to label each review as negative or positive.

# Load all files from a directory in a DataFrame.
def load_directory_data(directory):
    data = {}
    data["sentence"] = []
    data["sentiment"] = []
    for file_path in os.listdir(directory):
        with tf.io.gfile.GFile(os.path.join(directory, file_path), "r") as f:
            data["sentence"].append(f.read())
            # File names look like "<id>_<rating>.txt"; keep the rating (as a string).
            data["sentiment"].append(re.match(r"\d+_(\d+)\.txt", file_path).group(1))
    return pd.DataFrame.from_dict(data)

# Merge positive and negative examples, add a polarity column and shuffle.
def load_dataset(directory):
    pos_df = load_directory_data(os.path.join(directory, "pos"))
    neg_df = load_directory_data(os.path.join(directory, "neg"))
    pos_df["polarity"] = 1
    neg_df["polarity"] = 0
    return pd.concat([pos_df, neg_df]).sample(frac=1).reset_index(drop=True)

# Download and process the dataset files.
def download_and_load_datasets(force_download=False):
    dataset = tf.keras.utils.get_file(
        fname="aclImdb.tar.gz",
        origin="http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz",
        extract=True)

    train_df = load_dataset(os.path.join(os.path.dirname(dataset),
                                         "aclImdb", "train"))
    test_df = load_dataset(os.path.join(os.path.dirname(dataset),
                                        "aclImdb", "test"))

    return train_df, test_df

# Reduce logging output.
logging.set_verbosity(logging.ERROR)

train_df, test_df = download_and_load_datasets()
train_df.head()

Downloading data from http://ai.stanford.edu/~amaas/data/sentiment/aclImdb_v1.tar.gz 84125825/84125825 [==============================] - 3s 0us/step
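The loader reads each review's rating from its file name. Below is a minimal stdlib sketch of that parsing. It assumes names of the form `<id>_<rating>.txt`, as matched by the regex in `load_directory_data`.

- The tutorial itself keeps the captured rating as a string and takes polarity from the `pos`/`neg` directory.
- Both helper names are hypothetical.
- `polarity_from_rating` follows the dataset README's convention: labeled negative reviews are rated 4 or lower, and positive ones 7 or higher.

```python
import re

# Same pattern as in load_directory_data, written as a raw string.
FILENAME_RE = re.compile(r"\d+_(\d+)\.txt")


def rating_from_filename(file_name):
    """Return the 1-10 rating encoded in an aclImdb file name such as '12_9.txt'."""
    match = FILENAME_RE.match(file_name)
    if match is None:
        raise ValueError(f"unexpected file name: {file_name!r}")
    # group(1) is a string; convert it so it can be compared numerically.
    return int(match.group(1))


def polarity_from_rating(rating):
    """Hypothetical helper: 1 for ratings >= 7, 0 for ratings <= 4."""
    if rating >= 7:
        return 1
    if rating <= 4:
        return 0
    raise ValueError("neutral ratings (5-6) are not part of the labeled sets")


for name in ["0_3.txt", "12_9.txt"]:
    rating = rating_from_filename(name)
    print(name, rating, polarity_from_rating(rating))
```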

Model

Input functions

The Estimator framework provides input functions that wrap Pandas DataFrames.

# Training input on the whole training set with no limit on training epochs.
train_input_fn = tf.compat.v1.estimator.inputs.pandas_input_fn(
    train_df, train_df["polarity"], num_epochs=None, shuffle=True)

# Prediction on the whole training set.
predict_train_input_fn = tf.compat.v1.estimator.inputs.pandas_input_fn(
    train_df, train_df["polarity"], shuffle=False)

# Prediction on the test set.
predict_test_input_fn = tf.compat.v1.estimator.inputs.pandas_input_fn(
    test_df, test_df["polarity"], shuffle=False)

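Conceptually, `num_epochs=None` with `shuffle=True` produces an endless stream of shuffled batches. The prediction input functions instead make a single ordered pass.

The pure-Python generator below is a rough sketch of that behavior, not how `pandas_input_fn` works internally (it relies on queue runners). Some details:

- The batch size of 128 mirrors `pandas_input_fn`'s default.
- The function name `batches` is hypothetical.
- Unlike a real queue, batches here never straddle an epoch boundary.

```python
import itertools
import random


def batches(examples, batch_size=128, num_epochs=None, shuffle=True, seed=0):
    """Hypothetical sketch of an Estimator-style input stream.

    num_epochs=None repeats forever (like train_input_fn); num_epochs=1 makes
    one pass, and the final batch of each pass may be smaller than batch_size.
    """
    rng = random.Random(seed)
    epochs = itertools.count() if num_epochs is None else range(num_epochs)
    for _ in epochs:
        order = list(range(len(examples)))
        if shuffle:
            rng.shuffle(order)  # reshuffle at the start of every epoch
        for start in range(0, len(order), batch_size):
            yield [examples[i] for i in order[start:start + batch_size]]


sizes = [len(b) for b in batches(list(range(300)), num_epochs=1, shuffle=False)]
print(sizes)  # [128, 128, 44]
```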

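Feature columns

TF-Hub provides a feature column that applies a module to the given text feature and passes the module's outputs further down the model. In this tutorial we will use the nnlm-en-dim128 module. For the purpose of this tutorial, the most important facts are:

- The module takes a batch of sentences in a 1-D tensor of strings as input.

- The module is responsible for preprocessing the sentences (e.g. removing punctuation and splitting on spaces).

- The module works with any input (e.g. nnlm-en-dim128 hashes words not present in its vocabulary into ~20,000 buckets).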
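To make these points concrete, here is a toy, pure-Python sketch of what such a module does:

1. Split a sentence into tokens.
2. Look each token up in a vocabulary, hashing unknown tokens into a fixed number of extra buckets.
3. Pool the word vectors into one sentence vector.

Everything specific here is an illustrative assumption: the vocabulary, the 2-dimensional vectors, the punctuation rule, the CRC32 hash, and the plain mean used as the combiner (the tutorial later describes the module as averaging word embeddings). The real nnlm-en-dim128 module has its own vocabulary, 128-dimensional vectors and internal implementation.

```python
import re
import zlib

# Hypothetical toy vocabulary and 2-dimensional embedding table.
VOCAB = {"a": 0, "great": 1, "movie": 2, "boring": 3}
EMBEDDINGS = [[0.0, 0.1], [0.9, 0.2], [0.1, 0.8], [-0.7, 0.3]]
EMBEDDING_DIM = 2
NUM_OOV_BUCKETS = 20000  # bucket count quoted in the text above


def tokenize(sentence):
    """Assumed preprocessing: turn punctuation into spaces, then split on whitespace."""
    return re.sub(r"[^\w\s]", " ", sentence).split()


def token_id(token):
    """Vocabulary id, or a deterministic hash bucket placed after the vocabulary."""
    if token in VOCAB:
        return VOCAB[token]
    return len(VOCAB) + zlib.crc32(token.encode("utf-8")) % NUM_OOV_BUCKETS


def embed_sentence(sentence):
    """Mean-pool the word vectors into one fixed-size sentence vector."""
    ids = [token_id(t) for t in tokenize(sentence)]
    if not ids:
        return [0.0] * EMBEDDING_DIM
    # A real module would have learned vectors for OOV buckets; zeros stand in here.
    vectors = [EMBEDDINGS[i] if i < len(VOCAB) else [0.0] * EMBEDDING_DIM for i in ids]
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(EMBEDDING_DIM)]


print(tokenize("A great movie, really!"))
print(embed_sentence("a great movie!"))
```

Whatever the sentence length, the output has a fixed size. That is what lets a DNN classifier sit directly on top of the embedding column.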

embedded_text_feature_column = hub.text_embedding_column(
    key="sentence",
    module_spec="https://tfhub.dev/google/nnlm-en-dim128/1")

Estimator

For classification we can use a DNN classifier. (Note the further remarks about modeling the label function differently at the end of the tutorial.)

estimator = tf.estimator.DNNClassifier(
    hidden_units=[500, 100],
    feature_columns=[embedded_text_feature_column],
    n_classes=2,
    # `lr` is deprecated in Keras optimizers; use `learning_rate`.
    optimizer=tf.keras.optimizers.Adagrad(learning_rate=0.003))

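For a rough sense of scale, the dense layers on top of the 128-dimensional embedding can be counted by hand. This sketch rests on three assumptions:

- The embedding column is frozen (`hub.text_embedding_column` defaults to `trainable=False`).
- The binary (`n_classes=2`) head emits a single logit.
- Adagrad's per-variable accumulator slots are not counted.

The helper name is hypothetical.

```python
def dense_param_count(input_dim, hidden_units, logits_dim=1):
    """Count weights plus biases of a fully connected stack (hypothetical helper)."""
    total = 0
    fan_in = input_dim
    for units in list(hidden_units) + [logits_dim]:
        total += fan_in * units + units  # weight matrix plus bias vector
        fan_in = units
    return total


# 128 -> 500 -> 100 -> 1: (128*500 + 500) + (500*100 + 100) + (100*1 + 1)
print(dense_param_count(128, [500, 100]))  # 114701
```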

Training

Train the estimator for a reasonable number of steps.

# Training for 5,000 steps means 640,000 training examples with the default
# batch size. This is roughly equivalent to 25 epochs since the training dataset
# contains 25,000 examples.
estimator.train(input_fn=train_input_fn, steps=5000);

INFO:tensorflow:loss = 0.69700253, step = 0
INFO:tensorflow:loss = 0.6803105, step = 100 (0.822 sec)
INFO:tensorflow:loss = 0.6795012, step = 200 (0.734 sec)
INFO:tensorflow:loss = 0.66940796, step = 300 (0.719 sec)
INFO:tensorflow:loss = 0.66105497, step = 400 (0.738 sec)
INFO:tensorflow:loss = 0.64085764, step = 500 (0.740 sec)
INFO:tensorflow:loss = 0.6294505, step = 600 (0.740 sec)
INFO:tensorflow:loss = 0.6261533, step = 700 (0.745 sec)
INFO:tensorflow:loss = 0.59472835, step = 800 (0.727 sec)
INFO:tensorflow:loss = 0.61054045, step = 900 (0.707 sec)
INFO:tensorflow:loss = 0.6058329, step = 1000 (0.727 sec)
INFO:tensorflow:loss = 0.5740481, step = 1100 (0.747 sec)
INFO:tensorflow:loss = 0.5301008, step = 1200 (0.728 sec)
INFO:tensorflow:loss = 0.5786547, step = 1300 (0.738 sec)
INFO:tensorflow:loss = 0.58776206, step = 1400 (0.744 sec)
INFO:tensorflow:loss = 0.5249496, step = 1500 (0.731 sec)
INFO:tensorflow:loss = 0.538677, step = 1600 (0.748 sec)
INFO:tensorflow:loss = 0.5168773, step = 1700 (0.742 sec)
INFO:tensorflow:loss = 0.5466976, step = 1800 (0.772 sec)
INFO:tensorflow:loss = 0.5353106, step = 1900 (0.744 sec)
INFO:tensorflow:loss = 0.49088287, step = 2000 (0.742 sec)
INFO:tensorflow:loss = 0.47008997, step = 2100 (0.777 sec)
INFO:tensorflow:loss = 0.48619008, step = 2200 (0.747 sec)
INFO:tensorflow:loss = 0.46037608, step = 2300 (0.751 sec)
INFO:tensorflow:loss = 0.49235773, step = 2400 (0.727 sec)
INFO:tensorflow:loss = 0.45718938, step = 2500 (0.744 sec)
INFO:tensorflow:loss = 0.42642182, step = 2600 (0.731 sec)
INFO:tensorflow:loss = 0.5368844, step = 2700 (0.717 sec)
INFO:tensorflow:loss = 0.53780097, step = 2800 (0.751 sec)
INFO:tensorflow:loss = 0.4425622, step = 2900 (0.750 sec)
INFO:tensorflow:loss = 0.49331677, step = 3000 (0.724 sec)
INFO:tensorflow:loss = 0.49796364, step = 3100 (0.732 sec)
INFO:tensorflow:loss = 0.43429905, step = 3200 (0.743 sec)
INFO:tensorflow:loss = 0.4540514, step = 3300 (0.750 sec)
INFO:tensorflow:loss = 0.45045894, step = 3400 (0.736 sec)
INFO:tensorflow:loss = 0.39834836, step = 3500 (0.733 sec)
INFO:tensorflow:loss = 0.47742182, step = 3600 (0.730 sec)
INFO:tensorflow:loss = 0.39858812, step = 3700 (0.718 sec)
INFO:tensorflow:loss = 0.49839973, step = 3800 (0.762 sec)
INFO:tensorflow:loss = 0.4317188, step = 3900 (0.731 sec)
INFO:tensorflow:loss = 0.48535174, step = 4000 (0.720 sec)
INFO:tensorflow:loss = 0.42053157, step = 4100 (0.722 sec)
INFO:tensorflow:loss = 0.44434202, step = 4200 (0.750 sec)
INFO:tensorflow:loss = 0.48930025, step = 4300 (0.750 sec)
INFO:tensorflow:loss = 0.40035847, step = 4400 (0.789 sec)
INFO:tensorflow:loss = 0.5221117, step = 4500 (0.753 sec)
INFO:tensorflow:loss = 0.43918937, step = 4600 (0.750 sec)
INFO:tensorflow:loss = 0.4040125, step = 4700 (0.726 sec)
INFO:tensorflow:loss = 0.43220806, step = 4800 (0.742 sec)
INFO:tensorflow:loss = 0.45726532, step = 4900 (0.738 sec)
INFO:tensorflow:Loss for final step: 0.5594708.
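The relationship in the training comment (steps × batch size ÷ dataset size) is easy to check. This small sketch assumes `pandas_input_fn`'s default batch size of 128 and the 25,000-review training set. It also shows that the 1,000-step runs in the advanced section see only about 5 epochs.

```python
def epochs_seen(steps, batch_size=128, num_examples=25_000):
    """Number of passes over the training set implied by a step budget."""
    return steps * batch_size / num_examples


print(epochs_seen(5000))  # 25.6 (640,000 examples)
print(epochs_seen(1000))  # 5.12 (128,000 examples)
```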

Prediction

Evaluate the model on both the training set and the test set.

train_eval_result = estimator.evaluate(input_fn=predict_train_input_fn)
test_eval_result = estimator.evaluate(input_fn=predict_test_input_fn)

print("Training set accuracy: {accuracy}".format(**train_eval_result))
print("Test set accuracy: {accuracy}".format(**test_eval_result))

INFO:tensorflow:Saving dict for global step 5000: accuracy = 0.79344, accuracy_baseline = 0.5, auc = 0.8753907, auc_precision_recall = 0.87543654, average_loss = 0.44532335, global_step = 5000, label/mean = 0.5, loss = 0.4454927, precision = 0.78359365, prediction/mean = 0.51800334, recall = 0.8108
INFO:tensorflow:Saving dict for global step 5000: accuracy = 0.78744, accuracy_baseline = 0.5, auc = 0.8696759, auc_precision_recall = 0.87126565, average_loss = 0.45326608, global_step = 5000, label/mean = 0.5, loss = 0.45359227, precision = 0.7811424, prediction/mean = 0.5138652, recall = 0.79864
Training set accuracy: 0.7934399843215942
Test set accuracy: 0.7874400019645691
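`estimator.evaluate` reports precision and recall alongside accuracy. For reference, here is how those three are defined from the counts of true/false positives and negatives.

This is a plain-Python sketch with made-up toy labels, not the streaming metric implementation TensorFlow uses. The reported `accuracy_baseline` of 0.5 is what always predicting the more common class would score: `label/mean` is 0.5, so the classes are balanced.

```python
def binary_metrics(labels, predictions):
    """Accuracy, precision and recall for parallel lists of 0/1 labels and predictions."""
    pairs = list(zip(labels, predictions))
    if not pairs:
        raise ValueError("need at least one example")
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    return {
        # Fraction of all examples classified correctly.
        "accuracy": (tp + tn) / len(pairs),
        # Of the examples predicted positive, the fraction that really are positive.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of the truly positive examples, the fraction the model found.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Toy data: six reviews, one false negative and one false positive.
print(binary_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1]))
```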

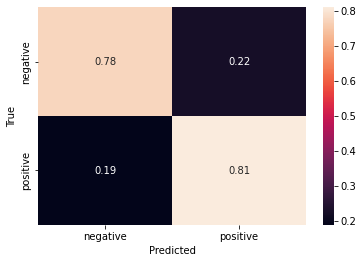

Confusion matrix

We can visually check the confusion matrix to understand the distribution of misclassifications.

def get_predictions(estimator, input_fn):
    return [x["class_ids"][0] for x in estimator.predict(input_fn=input_fn)]

LABELS = [
    "negative", "positive"
]

# Create a confusion matrix on training data.
cm = tf.math.confusion_matrix(train_df["polarity"],
                              get_predictions(estimator, predict_train_input_fn))

# Normalize the confusion matrix so that each row sums to 1.
cm = tf.cast(cm, dtype=tf.float32)
cm = cm / tf.math.reduce_sum(cm, axis=1)[:, np.newaxis]

sns.heatmap(cm, annot=True, xticklabels=LABELS, yticklabels=LABELS);
plt.xlabel("Predicted");
plt.ylabel("True");

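The normalization step divides each row by its sum. Row *i* then shows how the examples whose true label is *i* were distributed over the predicted labels. Rows are true labels and columns are predictions, matching the axis labels in the plot.

Below is a dependency-free sketch of the same computation on toy inputs. The function name is hypothetical.

```python
def normalized_confusion_matrix(labels, predictions, num_classes=2):
    """Rows = true labels, columns = predicted labels; each non-empty row sums to 1."""
    counts = [[0] * num_classes for _ in range(num_classes)]
    for y, p in zip(labels, predictions):
        counts[y][p] += 1
    normalized = []
    for row in counts:
        total = sum(row)
        # Guard against a class that never occurs in `labels`.
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized


print(normalized_confusion_matrix([0, 0, 1, 1], [0, 1, 1, 1]))
# [[0.5, 0.5], [0.0, 1.0]]
```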

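Further improvements

- Regression on sentiment: we used a classifier to assign each example to a polarity class. But we actually have another categorical feature at our disposal: sentiment. Here the classes actually represent a scale, and the underlying value (positive/negative) could be mapped well onto a continuous range. We could make use of this property by computing a regression (DNN Regressor) instead of a classification (DNN Classifier).

- Larger module: for the purposes of this tutorial we used a small module to restrict memory use. There are modules with larger vocabularies and larger embedding spaces that could add a few more points of accuracy.

- Parameter tuning: we can improve the accuracy by tuning meta-parameters such as the learning rate or the number of steps, especially if we use a different module. A validation set is essential for getting reasonable results, because it is very easy to set up a model that learns to predict the training data without generalizing well to the test set.

- More complex model: we used a module that computes a sentence embedding by embedding each individual word and then averaging the word vectors. One could also use a sequential module (e.g. the Universal Sentence Encoder module) to better capture the nature of sentences, or an ensemble of two or more TF-Hub modules.

- Regularization: to prevent overfitting, we could try an optimizer that performs some form of regularization, for example the Proximal Adagrad optimizer.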
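The first idea, treating sentiment as a scale, mainly changes the label. The sketch below rests on these assumptions, none of which the tutorial prescribes:

- The 1 to 10 rating (stored as a string in the `sentiment` column) is rescaled linearly to [0, 1] as a regression target.
- A predicted score is mapped back to a polarity by thresholding at 0.5.
- Both helper names and the linear scaling are hypothetical choices.

```python
def rating_to_target(rating):
    """Map a 1-10 rating (int or numeric string) linearly onto [0.0, 1.0]."""
    return (int(rating) - 1) / 9.0


def score_to_polarity(score, threshold=0.5):
    """Turn a regression output back into a 0/1 polarity label."""
    return 1 if score >= threshold else 0


# A threshold of 0.5 corresponds to a rating of 5.5, halfway along the 1-10 scale.
print([rating_to_target(r) for r in ["1", "4", "7", "10"]])
print(score_to_polarity(0.8), score_to_polarity(0.2))
```

The model would still be evaluated on polarity. The regressor simply gets to learn that a 9 is "more positive" than a 7.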

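Advanced: Transfer learning analysis

Transfer learning makes it possible to save training resources and to achieve good model generalization even when training on a small dataset. In this part, we will demonstrate this by training with two different TF-Hub modules:

- nnlm-en-dim128: a pretrained text embedding module.

- random-nnlm-en-dim128: a text embedding module that has the same vocabulary and network as nnlm-en-dim128, but whose weights were only randomly initialized and never trained on real data.

We train with each module in two modes:

- training only the classifier (i.e. freezing the module), and

- training the classifier together with the module.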
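The two modules and the two training modes form a 2 × 2 grid. The four `train_and_evaluate_with_module` calls that follow enumerate that grid one by one.

Below is a small sketch that generates the same four result keys and arguments with `itertools.product`. `experiment_grid` and `MODULES` are hypothetical names. The training call is left as a comment so the snippet runs without TensorFlow.

```python
import itertools

MODULES = {
    "nnlm-en-dim128": "https://tfhub.dev/google/nnlm-en-dim128/1",
    "random-nnlm-en-dim128": "https://tfhub.dev/google/random-nnlm-en-dim128/1",
}


def experiment_grid():
    """Yield (result_key, module_url, train_module) for every module/mode pair."""
    for (name, url), train_module in itertools.product(MODULES.items(), [False, True]):
        key = name + ("-with-module-training" if train_module else "")
        yield key, url, train_module


for key, url, train_module in experiment_grid():
    print(key, train_module)
    # results[key] = train_and_evaluate_with_module(url, train_module)
```

A loop like this keeps the result keys and the `trainable` flag in sync if more modules are added later.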

Let's run several trainings and evaluations to see how using various modules affects the accuracy.

def train_and_evaluate_with_module(hub_module, train_module=False):
  # Embed the raw "sentence" strings with the given TF-Hub module;
  # trainable decides whether the module weights are fine-tuned or frozen.
  embedded_text_feature_column = hub.text_embedding_column(
      key="sentence", module_spec=hub_module, trainable=train_module)

  # DNN classifier on top of the sentence embedding.
  estimator = tf.estimator.DNNClassifier(
      hidden_units=[500, 100],
      feature_columns=[embedded_text_feature_column],
      n_classes=2,
      optimizer=tf.keras.optimizers.Adagrad(learning_rate=0.003))

  estimator.train(input_fn=train_input_fn, steps=1000)

  # Evaluate on both the training set and the held-out test set.
  train_eval_result = estimator.evaluate(input_fn=predict_train_input_fn)
  test_eval_result = estimator.evaluate(input_fn=predict_test_input_fn)

  training_set_accuracy = train_eval_result["accuracy"]
  test_set_accuracy = test_eval_result["accuracy"]

  return {
      "Training accuracy": training_set_accuracy,
      "Test accuracy": test_set_accuracy
  }
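Conceptually, the `trainable=train_module` argument decides whether the optimizer may update the embedding module's weights. The framework-free sketch below illustrates only that idea, using plain SGD on a toy parameter dictionary. `sgd_step`, the `embedding`/`classifier` groups and the gradient values are made up for illustration. They do not reflect how TF-Hub or Estimators implement training internally.

```python
def sgd_step(params, grads, lr, trainable):
    """One plain SGD step that leaves frozen parameter groups unchanged."""
    updated = {}
    for name, values in params.items():
        if trainable[name]:
            updated[name] = [p - lr * g for p, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen: copied, never updated
    return updated


# Toy parameters: a 2-weight "embedding" and a 1-weight "classifier".
params = {"embedding": [0.2, -0.1], "classifier": [0.5]}
grads = {"embedding": [1.0, 1.0], "classifier": [1.0]}

# Like train_module=False: only the classifier moves.
frozen = sgd_step(params, grads, 0.1, {"embedding": False, "classifier": True})
# Like train_module=True: the embedding is fine-tuned as well.
tuned = sgd_step(params, grads, 0.1, {"embedding": True, "classifier": True})

print(frozen["embedding"] == params["embedding"])  # True
print(tuned["embedding"] == params["embedding"])   # False
```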

results = {}
# Pretrained embedding, module frozen.
results["nnlm-en-dim128"] = train_and_evaluate_with_module(
    "https://tfhub.dev/google/nnlm-en-dim128/1")
# Pretrained embedding, fine-tuned together with the classifier.
results["nnlm-en-dim128-with-module-training"] = train_and_evaluate_with_module(
    "https://tfhub.dev/google/nnlm-en-dim128/1", True)
# Randomly initialized embedding, module frozen.
results["random-nnlm-en-dim128"] = train_and_evaluate_with_module(
    "https://tfhub.dev/google/random-nnlm-en-dim128/1")
# Randomly initialized embedding, trained together with the classifier.
results["random-nnlm-en-dim128-with-module-training"] = train_and_evaluate_with_module(
    "https://tfhub.dev/google/random-nnlm-en-dim128/1", True)

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: /tmpfs/tmp/tmp8mlkkp1_

INFO:tensorflow:Using config: {'_model_dir': '/tmpfs/tmp/tmp8mlkkp1_', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:42:23.163719: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 0...

INFO:tensorflow:Saving checkpoints for 0 into /tmpfs/tmp/tmp8mlkkp1_/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 0...

INFO:tensorflow:loss = 0.69364965, step = 0

INFO:tensorflow:global_step/sec: 130.097

INFO:tensorflow:loss = 0.68727475, step = 100 (0.770 sec)

INFO:tensorflow:global_step/sec: 133.841

INFO:tensorflow:loss = 0.6822545, step = 200 (0.747 sec)

INFO:tensorflow:global_step/sec: 132.996

INFO:tensorflow:loss = 0.6543617, step = 300 (0.752 sec)

INFO:tensorflow:global_step/sec: 133.278

INFO:tensorflow:loss = 0.665762, step = 400 (0.750 sec)

INFO:tensorflow:global_step/sec: 132.978

INFO:tensorflow:loss = 0.65268624, step = 500 (0.752 sec)

INFO:tensorflow:global_step/sec: 135.71

INFO:tensorflow:loss = 0.6269785, step = 600 (0.737 sec)

INFO:tensorflow:global_step/sec: 132.638

INFO:tensorflow:loss = 0.61691236, step = 700 (0.754 sec)

INFO:tensorflow:global_step/sec: 138.206

INFO:tensorflow:loss = 0.5926987, step = 800 (0.723 sec)

INFO:tensorflow:global_step/sec: 133.056

INFO:tensorflow:loss = 0.6129915, step = 900 (0.752 sec)

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 1000...

INFO:tensorflow:Saving checkpoints for 1000 into /tmpfs/tmp/tmp8mlkkp1_/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 1000...

INFO:tensorflow:Loss for final step: 0.5936066.

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:42:33.750593: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:42:34

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmp8mlkkp1_/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.81515s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:42:37

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.72436, accuracy_baseline = 0.5, auc = 0.8015463, auc_precision_recall = 0.80141294, average_loss = 0.5847696, global_step = 1000, label/mean = 0.5, loss = 0.58462936, precision = 0.7376896, prediction/mean = 0.49329805, recall = 0.69632

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmp8mlkkp1_/model.ckpt-1000

INFO:tensorflow:Calling model_fn.

2022-08-08 16:42:37.652466: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:42:38

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmp8mlkkp1_/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.84917s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:42:41

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.7202, accuracy_baseline = 0.5, auc = 0.79454243, auc_precision_recall = 0.79342645, average_loss = 0.58867556, global_step = 1000, label/mean = 0.5, loss = 0.58878756, precision = 0.7400366, prediction/mean = 0.48992622, recall = 0.67888

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmp8mlkkp1_/model.ckpt-1000

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: /tmpfs/tmp/tmpquqexv2x

INFO:tensorflow:Using config: {'_model_dir': '/tmpfs/tmp/tmpquqexv2x', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:42:41.401985: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 0...

INFO:tensorflow:Saving checkpoints for 0 into /tmpfs/tmp/tmpquqexv2x/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 0...

INFO:tensorflow:loss = 0.677137, step = 0

INFO:tensorflow:global_step/sec: 125.961

INFO:tensorflow:loss = 0.6761261, step = 100 (0.795 sec)

INFO:tensorflow:global_step/sec: 142.587

INFO:tensorflow:loss = 0.6649024, step = 200 (0.701 sec)

INFO:tensorflow:global_step/sec: 131.438

INFO:tensorflow:loss = 0.6433133, step = 300 (0.761 sec)

INFO:tensorflow:global_step/sec: 136.106

INFO:tensorflow:loss = 0.65290105, step = 400 (0.735 sec)

INFO:tensorflow:global_step/sec: 137.7

INFO:tensorflow:loss = 0.6280735, step = 500 (0.726 sec)

INFO:tensorflow:global_step/sec: 133.427

INFO:tensorflow:loss = 0.61764765, step = 600 (0.750 sec)

INFO:tensorflow:global_step/sec: 136.516

INFO:tensorflow:loss = 0.60908824, step = 700 (0.733 sec)

INFO:tensorflow:global_step/sec: 135.482

INFO:tensorflow:loss = 0.63097423, step = 800 (0.738 sec)

INFO:tensorflow:global_step/sec: 138.322

INFO:tensorflow:loss = 0.5856416, step = 900 (0.723 sec)

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 1000...

INFO:tensorflow:Saving checkpoints for 1000 into /tmpfs/tmp/tmpquqexv2x/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 1000...

INFO:tensorflow:Loss for final step: 0.57468414.

INFO:tensorflow:Calling model_fn.

2022-08-08 16:42:51.851672: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:42:52

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpquqexv2x/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.78018s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:42:55

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.72984, accuracy_baseline = 0.5, auc = 0.8064427, auc_precision_recall = 0.80617565, average_loss = 0.58430856, global_step = 1000, label/mean = 0.5, loss = 0.5842167, precision = 0.71549654, prediction/mean = 0.51830983, recall = 0.76312

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpquqexv2x/model.ckpt-1000

INFO:tensorflow:Calling model_fn.

2022-08-08 16:42:55.969586: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:42:56

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpquqexv2x/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.79060s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:42:59

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.72392, accuracy_baseline = 0.5, auc = 0.79960686, auc_precision_recall = 0.798516, average_loss = 0.5881147, global_step = 1000, label/mean = 0.5, loss = 0.5882235, precision = 0.7135663, prediction/mean = 0.5156373, recall = 0.74816

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpquqexv2x/model.ckpt-1000

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: /tmpfs/tmp/tmpu5xr174t

INFO:tensorflow:Using config: {'_model_dir': '/tmpfs/tmp/tmpu5xr174t', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:43:04.374558: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 0...

INFO:tensorflow:Saving checkpoints for 0 into /tmpfs/tmp/tmpu5xr174t/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 0...

INFO:tensorflow:loss = 1.1687939, step = 0

INFO:tensorflow:global_step/sec: 126.886

INFO:tensorflow:loss = 0.6399313, step = 100 (0.790 sec)

INFO:tensorflow:global_step/sec: 141.84

INFO:tensorflow:loss = 0.61387503, step = 200 (0.705 sec)

INFO:tensorflow:global_step/sec: 136.975

INFO:tensorflow:loss = 0.6275979, step = 300 (0.730 sec)

INFO:tensorflow:global_step/sec: 135.727

INFO:tensorflow:loss = 0.61466575, step = 400 (0.737 sec)

INFO:tensorflow:global_step/sec: 138.437

INFO:tensorflow:loss = 0.6657936, step = 500 (0.722 sec)

INFO:tensorflow:global_step/sec: 132.964

INFO:tensorflow:loss = 0.6180428, step = 600 (0.752 sec)

INFO:tensorflow:global_step/sec: 139.58

INFO:tensorflow:loss = 0.60067225, step = 700 (0.716 sec)

INFO:tensorflow:global_step/sec: 136.251

INFO:tensorflow:loss = 0.6730646, step = 800 (0.734 sec)

INFO:tensorflow:global_step/sec: 141.721

INFO:tensorflow:loss = 0.6092864, step = 900 (0.706 sec)

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 1000...

INFO:tensorflow:Saving checkpoints for 1000 into /tmpfs/tmp/tmpu5xr174t/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 1000...

INFO:tensorflow:Loss for final step: 0.6206025.

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:43:14.797754: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:43:15

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpu5xr174t/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.74745s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:43:18

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.68276, accuracy_baseline = 0.5, auc = 0.7481813, auc_precision_recall = 0.7389629, average_loss = 0.5928112, global_step = 1000, label/mean = 0.5, loss = 0.5927259, precision = 0.67774063, prediction/mean = 0.50650775, recall = 0.69688

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpu5xr174t/model.ckpt-1000

INFO:tensorflow:Calling model_fn.

2022-08-08 16:43:18.561103: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:43:19

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpu5xr174t/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.76066s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:43:22

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.6636, accuracy_baseline = 0.5, auc = 0.7234778, auc_precision_recall = 0.7146655, average_loss = 0.61233413, global_step = 1000, label/mean = 0.5, loss = 0.6122198, precision = 0.65989053, prediction/mean = 0.5056227, recall = 0.6752

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpu5xr174t/model.ckpt-1000

INFO:tensorflow:Using default config.

WARNING:tensorflow:Using temporary folder as model directory: /tmpfs/tmp/tmpq7a3x2p6

INFO:tensorflow:Using config: {'_model_dir': '/tmpfs/tmp/tmpq7a3x2p6', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_experimental_max_worker_delay_secs': None, '_session_creation_timeout_secs': 7200, '_checkpoint_save_graph_def': True, '_service': None, '_cluster_spec': ClusterSpec({}), '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

2022-08-08 16:43:22.207633: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 0...

INFO:tensorflow:Saving checkpoints for 0 into /tmpfs/tmp/tmpq7a3x2p6/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 0...

INFO:tensorflow:loss = 0.8449179, step = 0

INFO:tensorflow:global_step/sec: 128.823

INFO:tensorflow:loss = 0.6730957, step = 100 (0.778 sec)

INFO:tensorflow:global_step/sec: 144.706

INFO:tensorflow:loss = 0.6316531, step = 200 (0.691 sec)

INFO:tensorflow:global_step/sec: 145.183

INFO:tensorflow:loss = 0.6283449, step = 300 (0.689 sec)

INFO:tensorflow:global_step/sec: 143.151

INFO:tensorflow:loss = 0.62578607, step = 400 (0.699 sec)

INFO:tensorflow:global_step/sec: 143.677

INFO:tensorflow:loss = 0.6280848, step = 500 (0.696 sec)

INFO:tensorflow:global_step/sec: 143.458

INFO:tensorflow:loss = 0.6259059, step = 600 (0.697 sec)

INFO:tensorflow:global_step/sec: 145.136

INFO:tensorflow:loss = 0.61442184, step = 700 (0.689 sec)

INFO:tensorflow:global_step/sec: 143.491

INFO:tensorflow:loss = 0.64296997, step = 800 (0.697 sec)

INFO:tensorflow:global_step/sec: 142.223

INFO:tensorflow:loss = 0.62556696, step = 900 (0.703 sec)

INFO:tensorflow:Calling checkpoint listeners before saving checkpoint 1000...

INFO:tensorflow:Saving checkpoints for 1000 into /tmpfs/tmp/tmpq7a3x2p6/model.ckpt.

INFO:tensorflow:Calling checkpoint listeners after saving checkpoint 1000...

INFO:tensorflow:Loss for final step: 0.68325025.

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

2022-08-08 16:43:32.504893: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:43:33

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpq7a3x2p6/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.80029s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:43:36

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.67568, accuracy_baseline = 0.5, auc = 0.7432403, auc_precision_recall = 0.73325217, average_loss = 0.5978181, global_step = 1000, label/mean = 0.5, loss = 0.59763205, precision = 0.67938244, prediction/mean = 0.4936379, recall = 0.66536

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpq7a3x2p6/model.ckpt-1000

INFO:tensorflow:Calling model_fn.

2022-08-08 16:43:36.343988: W tensorflow/core/common_runtime/graph_constructor.cc:1526] Importing a graph with a lower producer version 26 into an existing graph with producer version 1205. Shape inference will have run different parts of the graph with different producer versions.

INFO:tensorflow:Saver not created because there are no variables in the graph to restore

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2022-08-08T16:43:37

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from /tmpfs/tmp/tmpq7a3x2p6/model.ckpt-1000

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Inference Time : 2.75035s

INFO:tensorflow:Finished evaluation at 2022-08-08-16:43:39

INFO:tensorflow:Saving dict for global step 1000: accuracy = 0.66412, accuracy_baseline = 0.5, auc = 0.7257351, auc_precision_recall = 0.7147416, average_loss = 0.61066264, global_step = 1000, label/mean = 0.5, loss = 0.61058325, precision = 0.66756517, prediction/mean = 0.49435714, recall = 0.65384

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 1000: /tmpfs/tmp/tmpq7a3x2p6/model.ckpt-1000

Let's look at the results.

pd.DataFrame.from_dict(results, orient="index")
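With `orient="index"`, `pd.DataFrame.from_dict` treats each outer key of a dict of dicts as a row label and each inner key as a column, which is why a per-module results dict renders as one row per module. The minimal sketch below assumes `results` maps a module name to a dict of metrics; the module names, metric names, and values are hypothetical placeholders, not results from this tutorial.

```python
import pandas as pd

# Hypothetical results: outer keys are module names, inner keys are metric names.
# The values are placeholders for illustration, not real measurements.
results = {
    "module_a": {"Training accuracy": 0.80, "Test accuracy": 0.78},
    "module_b": {"Training accuracy": 0.70, "Test accuracy": 0.69},
}

# orient="index": outer keys become the row index, inner keys become columns.
df = pd.DataFrame.from_dict(results, orient="index")
print(df)
```

With the default `orient="columns"`, the same dict would instead produce one column per module, which is harder to compare row by row.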

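Several patterns are already visible, but first we should establish the baseline accuracy on the test set: the lower bound achievable by always outputting the label of the most frequent class.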

estimator.evaluate(input_fn=predict_test_input_fn)["accuracy_baseline"]
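As a minimal sketch, independent of the Estimator API, the majority-class baseline is the share of examples that carry the most frequent label. For binary labels this equals max(p, 1 - p), where p is the fraction of positive labels, so a balanced test set (the logs above report `label/mean = 0.5` and `accuracy_baseline = 0.5`) gives 0.5. The function name `majority_baseline_accuracy` and the toy label lists are hypothetical.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label (hypothetical helper)."""
    if not labels:
        raise ValueError("labels must be non-empty")
    # most_common(1) returns [(label, count)] for the most frequent label.
    _, majority_count = Counter(labels).most_common(1)[0]
    return majority_count / len(labels)


# Toy labels (1 = positive, 0 = negative); not the IMDB data.
balanced = [0, 1] * 5
skewed = [1, 1, 1, 0]
print(majority_baseline_accuracy(balanced))  # 0.5
print(majority_baseline_accuracy(skewed))    # 0.75
```

Any classifier worth keeping should beat this number; an accuracy near it means the model has learned little beyond the class prior.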