
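TensorFlow Hub (TF-Hub) is a platform for sharing machine learning expertise packaged in reusable resources, notably pre-trained modules.

In this Colab, we use a module that packages the DELF neural network together with the logic for processing images, in order to identify keypoints and their descriptors. The weights of the neural network were trained on images of landmarks, as described in this paper.

Setup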

pip install scikit-image

from absl import logging
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image, ImageOps
from scipy.spatial import cKDTree
from skimage.feature import plot_matches
from skimage.measure import ransac
from skimage.transform import AffineTransform
from six import BytesIO
import tensorflow as tf
import tensorflow_hub as hub
from six.moves.urllib.request import urlopen


The data

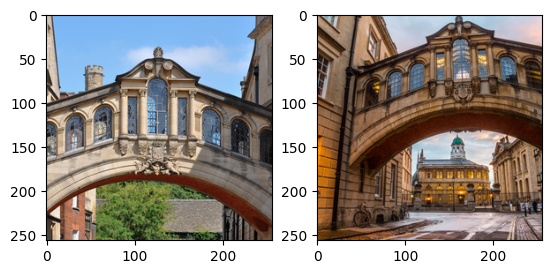

In the next cell, we specify the URLs of the two images that DELF will process so that they can be matched and compared.

Choose images

images = "Bridge of Sighs"
if images == "Bridge of Sighs":
  # from: https://commons.wikimedia.org/wiki/File:Bridge_of_Sighs,_Oxford.jpg
  # by: N.H. Fischer
  IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/2/28/Bridge_of_Sighs%2C_Oxford.jpg'
  # from https://commons.wikimedia.org/wiki/File:The_Bridge_of_Sighs_and_Sheldonian_Theatre,_Oxford.jpg
  # by: Matthew Hoser
  IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/c/c3/The_Bridge_of_Sighs_and_Sheldonian_Theatre%2C_Oxford.jpg'
elif images == "Golden Gate":
  IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/1/1e/Golden_gate2.jpg'
  IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/3/3e/GoldenGateBridge.jpg'
elif images == "Acropolis":
  IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/c/ce/2006_01_21_Ath%C3%A8nes_Parth%C3%A9non.JPG'
  IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/5/5c/ACROPOLIS_1969_-_panoramio_-_jean_melis.jpg'
else:
  IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/d/d8/Eiffel_Tower%2C_November_15%2C_2011.jpg'
  IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/a/a8/Eiffel_Tower_from_immediately_beside_it%2C_Paris_May_2008.jpg'

Download the images, resize them, save them, and display them.

def download_and_resize(name, url, new_width=256, new_height=256):
  # Download the file (cached locally by Keras) and open it with Pillow.
  path = tf.keras.utils.get_file(url.split('/')[-1], url)
  image = Image.open(path)
  # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter.
  image = ImageOps.fit(image, (new_width, new_height), Image.LANCZOS)
  return image

image1 = download_and_resize('image_1.jpg', IMAGE_1_URL)
image2 = download_and_resize('image_2.jpg', IMAGE_2_URL)

# Show the two resized images side by side.
plt.subplot(1,2,1)
plt.imshow(image1)
plt.subplot(1,2,2)
plt.imshow(image2)

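The resize step relies on `ImageOps.fit`, which crops the image to the target aspect ratio around its center before scaling it down. As a rough sketch of that cropping arithmetic, assuming the default centering and no bleed, the crop box can be computed as follows. The helper `center_crop_box` is hypothetical and is not part of Pillow.

```python
def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (left, top, right, bottom) of the centered crop whose aspect
    ratio matches dst_w:dst_h. Hypothetical helper, not a Pillow function."""
    src_ratio = src_w / src_h
    dst_ratio = dst_w / dst_h
    if src_ratio > dst_ratio:
        # Source is too wide: keep the full height and trim the sides.
        crop_w, crop_h = src_h * dst_ratio, src_h
    else:
        # Source is too tall (or already matches): keep the full width.
        crop_w, crop_h = src_w, src_w / dst_ratio
    left = (src_w - crop_w) / 2
    top = (src_h - crop_h) / 2
    return (left, top, left + crop_w, top + crop_h)


# A 400x300 landscape image cropped for a square 256x256 target.
box = center_crop_box(400, 300, 256, 256)
print(box)  # (50.0, 0.0, 350.0, 300.0)
```

The cropped region is then resampled to 256x256, so both images reach the matching step with the same size regardless of their original proportions.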

Apply the DELF module to the data

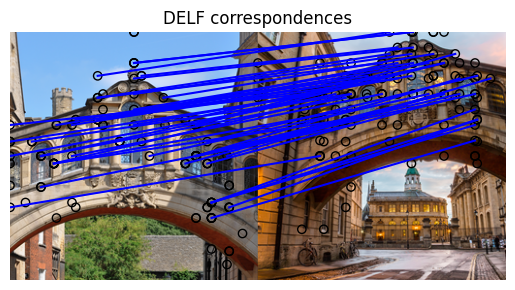

The DELF module takes an image as input and describes noteworthy points in it with vectors. The following cell contains the core of this Colab's logic.

delf = hub.load('https://tfhub.dev/google/delf/1').signatures['default']

def run_delf(image):
  np_image = np.array(image)
  float_image = tf.image.convert_image_dtype(np_image, tf.float32)

  return delf(
      image=float_image,
      score_threshold=tf.constant(100.0),
      image_scales=tf.constant([0.25, 0.3536, 0.5, 0.7071, 1.0, 1.4142, 2.0]),
      max_feature_num=tf.constant(1000))

result1 = run_delf(image1)
result2 = run_delf(image2)
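The seven values passed as `image_scales` are not arbitrary: numerically they form a geometric sequence that starts at 0.25 and grows by a factor of √2 at each step, so the image is examined from a quarter of its size up to twice its size. The sketch below regenerates the list. The helper `scale_pyramid` is a hypothetical name introduced here for illustration and is not part of the DELF module.

```python
def scale_pyramid(start=0.25, num_scales=7):
    """Return num_scales values, each sqrt(2) times the previous one,
    rounded to four decimals. Hypothetical helper for illustration."""
    return [round(start * 2 ** (k / 2), 4) for k in range(num_scales)]


scales = scale_pyramid()
print(scales)  # [0.25, 0.3536, 0.5, 0.7071, 1.0, 1.4142, 2.0]
```

Changing `start` or `num_scales` would give a different pyramid to experiment with. Whether other scale sets work well with this module is not something the source states.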

Use the locations and description vectors to match the images

TensorFlow is not needed for this post-processing and visualization

def match_images(image1, image2, result1, result2):
  distance_threshold = 0.8

  # Read features.
  num_features_1 = result1['locations'].shape[0]
  print("Loaded image 1's %d features" % num_features_1)

  num_features_2 = result2['locations'].shape[0]
  print("Loaded image 2's %d features" % num_features_2)

  # Find nearest-neighbor matches using a KD tree.
  d1_tree = cKDTree(result1['descriptors'])
  _, indices = d1_tree.query(
      result2['descriptors'],
      distance_upper_bound=distance_threshold)

  # Select feature locations for putative matches.
  locations_2_to_use = np.array([
      result2['locations'][i,]
      for i in range(num_features_2)
      if indices[i] != num_features_1
  ])
  locations_1_to_use = np.array([
      result1['locations'][indices[i],]
      for i in range(num_features_2)
      if indices[i] != num_features_1
  ])

  # Perform geometric verification using RANSAC.
  _, inliers = ransac(
      (locations_1_to_use, locations_2_to_use),
      AffineTransform,
      min_samples=3,
      residual_threshold=20,
      max_trials=1000)

  print('Found %d inliers' % sum(inliers))

  # Visualize correspondences.
  _, ax = plt.subplots()
  inlier_idxs = np.nonzero(inliers)[0]
  plot_matches(
      ax,
      image1,
      image2,
      locations_1_to_use,
      locations_2_to_use,
      np.column_stack((inlier_idxs, inlier_idxs)),
      matches_color='b')
  ax.axis('off')
  ax.set_title('DELF correspondences')

match_images(image1, image2, result1, result2)

Loaded image 1's 233 features

Loaded image 2's 262 features

Found 48 inliers
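The test `indices[i] != num_features_1` in the matching code may look odd at first. When `cKDTree.query` is given a `distance_upper_bound` and finds no neighbor within it, SciPy reports the number of points in the tree as the index, so that value acts as a "no match" sentinel. The sketch below mimics this convention with a brute-force search in plain Python. It is a stand-in for illustration, not the KD-tree itself, and `nearest_within` and the toy descriptors are hypothetical.

```python
import math


def nearest_within(reference, queries, upper_bound):
    """For each query vector, return the index of its nearest reference
    vector, or len(reference) when none lies within upper_bound."""
    missing = len(reference)  # sentinel index meaning "no match"
    result = []
    for q in queries:
        best_index, best_dist = missing, upper_bound
        for i, r in enumerate(reference):
            d = math.dist(q, r)  # Euclidean distance
            if d < best_dist:
                best_index, best_dist = i, d
        result.append(best_index)
    return result


# Toy 2-D "descriptors"; real DELF descriptors have many more dimensions.
descriptors_1 = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
descriptors_2 = [(0.1, 0.0), (5.0, 5.0), (0.9, 0.1)]

indices = nearest_within(descriptors_1, descriptors_2, upper_bound=0.8)
print(indices)  # [0, 3, 1]

# Keep only (index in image 1, index in image 2) pairs with a real match.
putative = [(indices[i], i) for i in range(len(descriptors_2))
            if indices[i] != len(descriptors_1)]
print(putative)  # [(0, 0), (1, 2)]
```

The second query has no reference point within 0.8, so it receives the sentinel index 3 and is dropped. Only the surviving pairs go on to the RANSAC step, which then checks whether they agree on a single affine transform.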