
Noise is present in modern-day quantum computers. Qubits are susceptible to interference from the surrounding environment, imperfect fabrication, two-level systems (TLS), and sometimes even gamma rays. Until large-scale error correction is reached, today's algorithms must be able to remain functional in the presence of noise. This makes testing algorithms under noise an important step in validating quantum algorithms and models that are meant to run on today's quantum computers.

In this tutorial you will explore the basics of noisy circuit simulation in TFQ via the high-level tfq.layers API.

Setup

pip install tensorflow==2.7.0 tensorflow-quantum

pip install -q git+https://github.com/tensorflow/docs

# Update package resources to account for version changes.

import importlib, pkg_resources

importlib.reload(pkg_resources)

<module 'pkg_resources' from '/tmpfs/src/tf_docs_env/lib/python3.7/site-packages/pkg_resources/__init__.py'>

import random

import cirq

import sympy

import tensorflow_quantum as tfq

import tensorflow as tf

import numpy as np

# Plotting

import matplotlib.pyplot as plt

import tensorflow_docs as tfdocs

import tensorflow_docs.plots

2022-02-04 12:35:30.853880: E tensorflow/stream_executor/cuda/cuda_driver.cc:271] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

1. Understanding quantum noise

1.1 Basic circuit noise

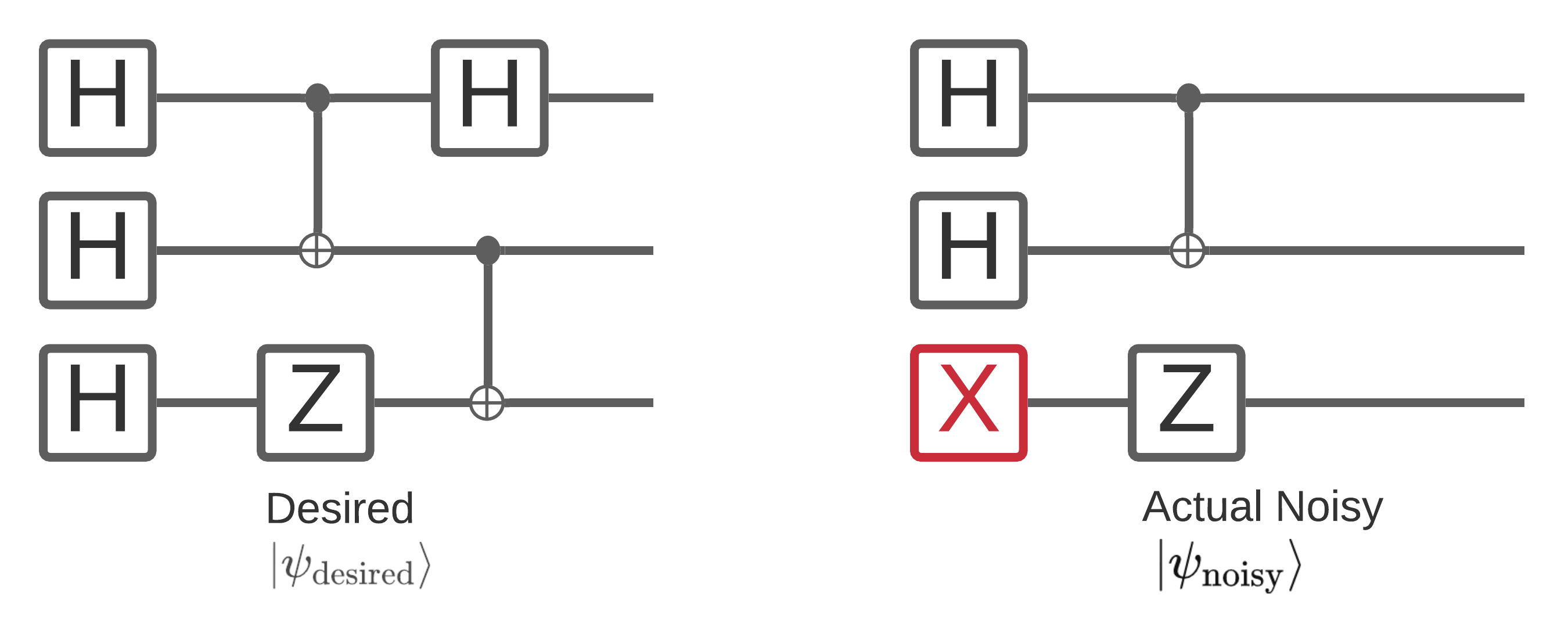

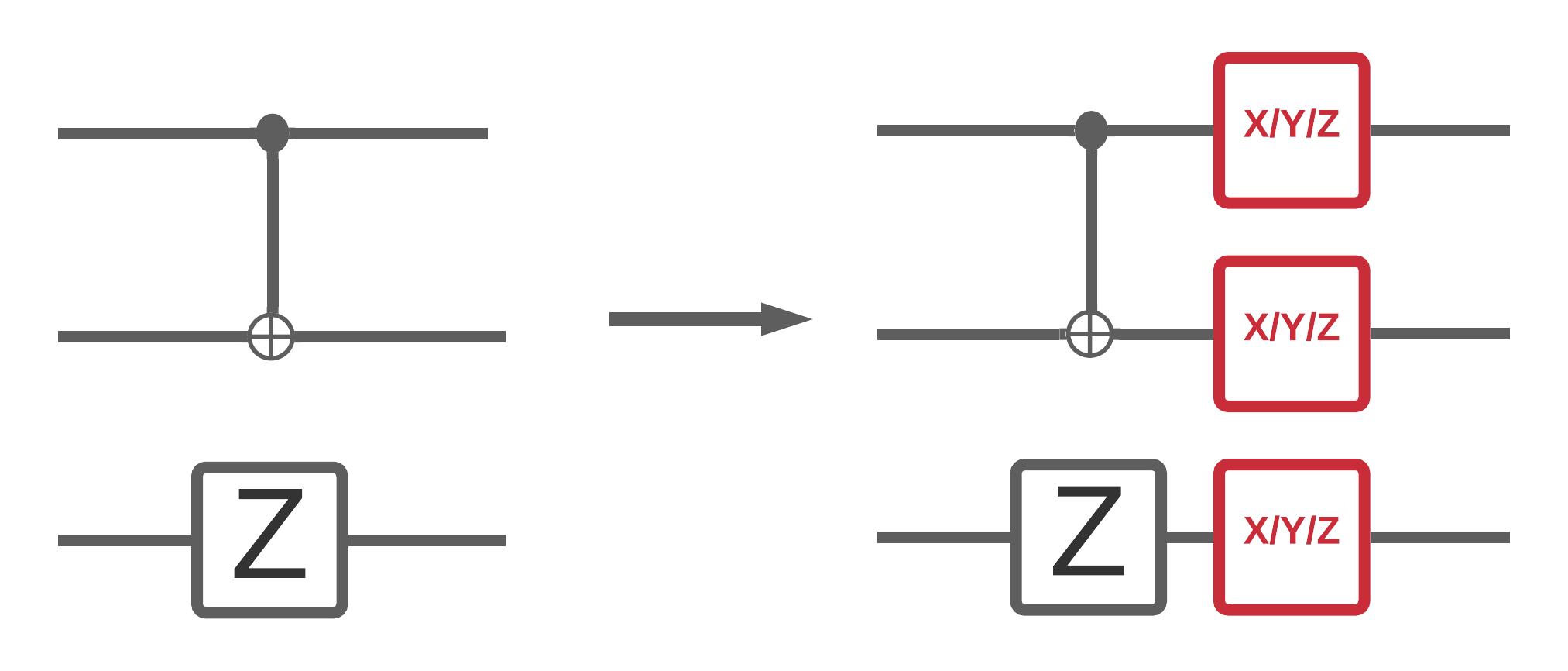

Noise on a quantum computer affects the bitstring samples you are able to measure from it. One intuitive way to start thinking about this is that a noisy quantum computer will "insert", "delete" or "replace" gates at random places, as shown in the accompanying diagram.

Building on this intuition, when dealing with noise you are no longer using a single pure state \(|\psi \rangle\) but instead dealing with an ensemble of all possible noisy realizations of your desired circuit: \(\rho = \sum_j p_j |\psi_j \rangle \langle \psi_j |\), where \(p_j\) gives the probability that the system is in \(|\psi_j \rangle\).

Revisiting the picture above, if we knew beforehand that our system executed perfectly 90% of the time and errored 10% of the time with just this one mode of failure, then our ensemble would be:

\(\rho = 0.9 |\psi_\text{desired} \rangle \langle \psi_\text{desired}| + 0.1 |\psi_\text{noisy} \rangle \langle \psi_\text{noisy}| \)

If there were more than one way in which our circuit could error, then the ensemble \(\rho\) would contain more than two terms (one for each new noisy realization that could happen). \(\rho\) is referred to as the density matrix describing your noisy system.
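To make the two-term ensemble concrete, here is a minimal NumPy sketch. The two state vectors are hypothetical single-qubit stand-ins chosen only for illustration (the tutorial does not specify them): the intended run ends in |1> and the faulty run ends in |0>.

```python
import numpy as np

# Hypothetical stand-ins (assumptions for illustration only):
# the intended state |1> and a faulty realization that ended in |0>.
psi_desired = np.array([0.0, 1.0], dtype=complex)
psi_noisy = np.array([1.0, 0.0], dtype=complex)


def projector(psi):
    """Return |psi><psi| for a normalized state vector."""
    return np.outer(psi, psi.conj())


# rho = 0.9 |desired><desired| + 0.1 |noisy><noisy|
rho = 0.9 * projector(psi_desired) + 0.1 * projector(psi_noisy)

trace = np.trace(rho).real          # equals 1 for any valid ensemble
purity = np.trace(rho @ rho).real   # equals 1 for a pure state, below 1 for a mixture

print(np.round(rho.real, 3))
print("trace:", round(trace, 3), "purity:", round(purity, 3))
```

The trace stays at 1 because the probabilities sum to 1, while the purity drops below 1 as soon as more than one realization has nonzero weight.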

1.2 Using channels to model circuit noise

Unfortunately, in practice it is nearly impossible to know all the ways your circuit might error and their exact probabilities. A simplifying assumption you can make is that after each operation in your circuit there is some kind of channel that roughly captures how that operation might error. You can quickly create a circuit with some noise:

def x_circuit(qubits):
    """Produces an X wall circuit on `qubits`."""
    return cirq.Circuit(cirq.X.on_each(*qubits))

def make_noisy(circuit, p):
    """Add a depolarization channel to all qubits in `circuit` before measurement."""
    return circuit + cirq.Circuit(cirq.depolarize(p).on_each(*circuit.all_qubits()))

my_qubits = cirq.GridQubit.rect(1, 2)

my_circuit = x_circuit(my_qubits)

my_noisy_circuit = make_noisy(my_circuit, 0.5)

my_circuit

my_noisy_circuit

You can examine the noiseless density matrix \(\rho\) with:

rho = cirq.final_density_matrix(my_circuit)

np.round(rho, 3)

array([[0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],

[0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],

[0.+0.j, 0.+0.j, 0.+0.j, 0.+0.j],

[0.+0.j, 0.+0.j, 0.+0.j, 1.+0.j]], dtype=complex64)

And the noisy density matrix \(\rho\) with:

rho = cirq.final_density_matrix(my_noisy_circuit)

np.round(rho, 3)

array([[0.111+0.j, 0. +0.j, 0. +0.j, 0. +0.j],

[0. +0.j, 0.222+0.j, 0. +0.j, 0. +0.j],

[0. +0.j, 0. +0.j, 0.222+0.j, 0. +0.j],

[0. +0.j, 0. +0.j, 0. +0.j, 0.444+0.j]], dtype=complex64)
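The diagonal of the noisy matrix can be reproduced by hand. The sketch below assumes the single-qubit depolarizing channel leaves the state alone with probability 1-p and applies X, Y or Z with probability p/3 each, which is consistent with the numbers printed above. The helper name `depolarize` is illustrative and is not the Cirq function.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)


def depolarize(rho, p):
    """Illustrative single-qubit depolarizing channel (not the Cirq API).

    Keeps rho with probability 1 - p and conjugates it by X, Y or Z
    with probability p / 3 each.
    """
    return (1 - p) * rho + (p / 3) * sum(P @ rho @ P.conj().T for P in (X, Y, Z))


# |1><1| is the state of each qubit after the X wall.
one = np.array([[0, 0], [0, 1]], dtype=complex)

rho_1q = depolarize(one, 0.5)
# The channel acts independently on each qubit, so the two-qubit
# state is the tensor product of the single-qubit results.
rho_2q = np.kron(rho_1q, rho_1q)
diag_2q = np.round(np.diag(rho_2q).real, 3)
print(diag_2q)
```

Each qubit ends up in |0> with probability 1/3 and in |1> with probability 2/3, so the four diagonal entries are the products 1/9, 2/9, 2/9 and 4/9.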

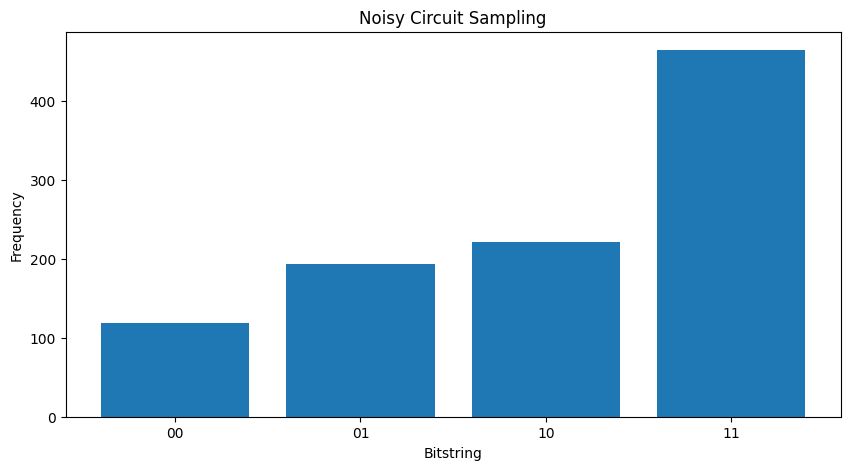

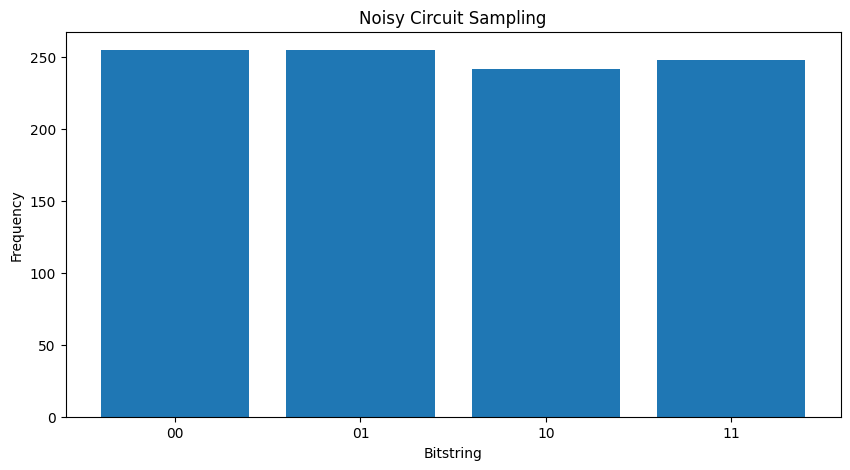

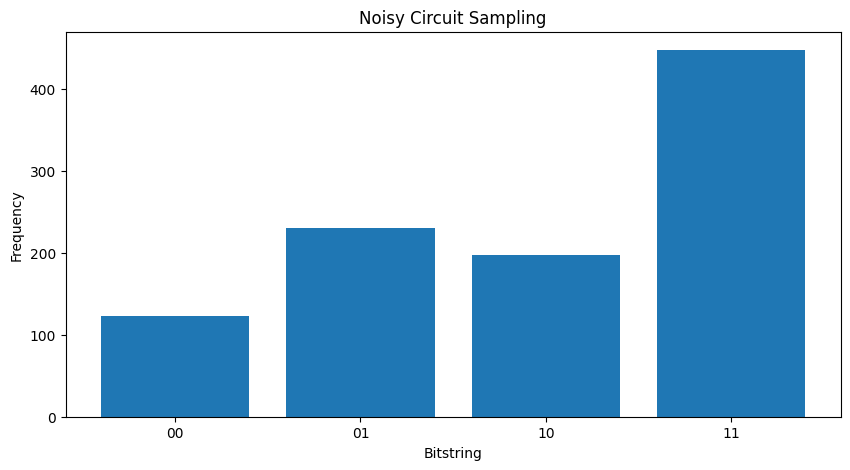

Comparing the two different \( \rho \)'s, you can see that the noise has impacted the amplitudes of the state (and consequently the sampling probabilities). In the noiseless case you would always expect to sample the \( |11\rangle \) state. In the noisy case there is now a nonzero probability of sampling \( |00\rangle \), \( |01\rangle \) or \( |10\rangle \) as well:

"""Sample from my_noisy_circuit."""

def plot_samples(circuit):
    samples = cirq.sample(circuit + cirq.measure(*circuit.all_qubits(), key='bits'), repetitions=1000)
    freqs, _ = np.histogram(samples.data['bits'], bins=[i+0.01 for i in range(-1,2** len(my_qubits))])
    plt.figure(figsize=(10,5))
    plt.title('Noisy Circuit Sampling')
    plt.xlabel('Bitstring')
    plt.ylabel('Frequency')
    plt.bar([i for i in range(2** len(my_qubits))], freqs, tick_label=['00','01','10','11'])

plot_samples(my_noisy_circuit)

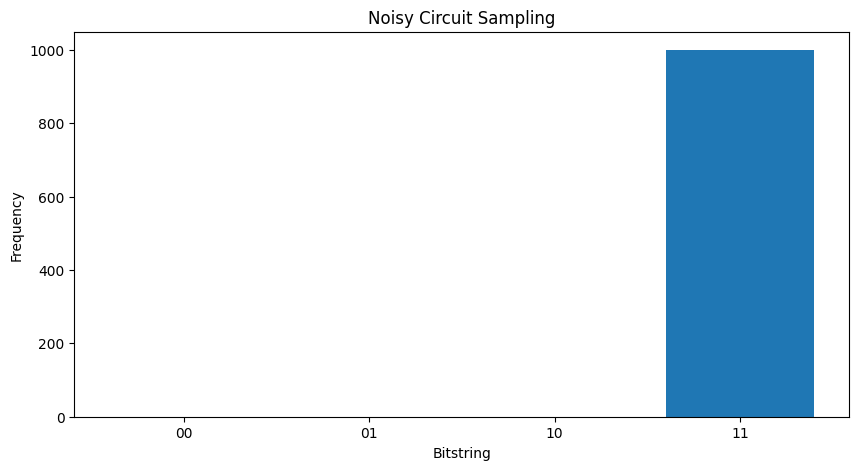

Without any noise you will always get \(|11\rangle\):

"""Sample from my_circuit."""

plot_samples(my_circuit)

If you increase the noise a little further, it becomes harder and harder to distinguish the desired behavior (sampling \(|11\rangle\)) from the noise:

my_really_noisy_circuit = make_noisy(my_circuit, 0.75)

plot_samples(my_really_noisy_circuit)

2. Basic noise in TFQ

With this understanding of how noise can impact circuit execution, you can explore how noise works in TFQ. TensorFlow Quantum uses Monte Carlo / trajectory-based simulation as an alternative to density matrix simulation. This is because the memory complexity of density matrix simulation limits large simulations to <= 20 qubits with traditional full density matrix simulation methods. Monte Carlo / trajectory simulation trades this cost in memory for additional cost in time. The backend='noisy' option is available to all of tfq.layers.Sample, tfq.layers.SampledExpectation and tfq.layers.Expectation (in the case of Expectation, this adds a required repetitions parameter).
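The trajectory idea can be sketched in a few lines of NumPy. This is only an illustration of the technique under stated assumptions, not how TFQ implements it internally: a single qubit is prepared in |1>, a depolarizing channel is assumed to apply X, Y or Z with probability p/3 each, and every trajectory keeps a plain state vector rather than a density matrix. The function name `trajectory_expectation_z` is hypothetical.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = (I2, X, Y, Z)


def trajectory_expectation_z(p, n_trajectories, seed=0):
    """Estimate <Z> after depolarizing |1> by averaging over random trajectories."""
    rng = np.random.default_rng(seed)
    psi0 = np.array([0, 1], dtype=complex)  # |1>
    # Assumed error model: identity w.p. 1 - p, each Pauli w.p. p / 3.
    choices = rng.choice(4, size=n_trajectories, p=[1 - p, p / 3, p / 3, p / 3])
    total = 0.0
    for k in choices:
        psi = PAULIS[k] @ psi0               # one noisy realization, still a pure state
        total += np.vdot(psi, Z @ psi).real  # <psi|Z|psi> for this trajectory
    return total / n_trajectories


p = 0.5
estimate = trajectory_expectation_z(p, n_trajectories=10_000)
exact = -(1 - 4 * p / 3)  # density-matrix result for the same channel
print("trajectory estimate:", round(estimate, 3), "exact:", round(exact, 3))
```

Each trajectory only stores 2^n amplitudes instead of the 4^n entries of a density matrix, and the price is that many trajectories must be averaged to reduce the statistical error.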

2.1 Noisy sampling in TFQ

To recreate the plots above using TFQ and trajectory simulation, you can use tfq.layers.Sample:

"""Draw bitstring samples from `my_noisy_circuit`"""

bitstrings = tfq.layers.Sample(backend='noisy')(my_noisy_circuit, repetitions=1000)

numeric_values = np.einsum('ijk,k->ij', bitstrings.to_tensor().numpy(), [1, 2])[0]

freqs, _ = np.histogram(numeric_values, bins=[i+0.01 for i in range(-1,2** len(my_qubits))])

plt.figure(figsize=(10,5))

plt.title('Noisy Circuit Sampling')

plt.xlabel('Bitstring')

plt.ylabel('Frequency')

plt.bar([i for i in range(2** len(my_qubits))], freqs, tick_label=['00','01','10','11'])

<BarContainer object of 4 artists>

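The `einsum` and `histogram` calls above can be hard to read at a glance. The following sketch runs the same two steps on a small hand-written array of measurement results (made-up data standing in for the `bitstrings` tensor) so the intermediate values are visible.

```python
import numpy as np

# Made-up measurement results with shape (1 circuit, 5 shots, 2 qubits).
bits = np.array([[[0, 0], [1, 0], [0, 1], [1, 1], [1, 1]]])

# Weighted sum over the last axis: value = 1 * bit[0] + 2 * bit[1].
# Note that with weights [1, 2] the first column is the least significant bit.
values = np.einsum('ijk,k->ij', bits, [1, 2])[0]

# Bin edges sit just above each integer, so every integer 0..3 gets its own bin.
bins = [i + 0.01 for i in range(-1, 2 ** 2)]
freqs, _ = np.histogram(values, bins=bins)

print("values:", values)
print("frequencies:", freqs)
```

Because the depolarized X wall is symmetric in the two qubits, the choice of which bit is most significant does not change the shape of the histogram in this tutorial.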
2.2 Noisy sample-based expectation

To do noisy sample-based expectation calculation, you can use tfq.layers.SampledExpectation:

some_observables = [cirq.X(my_qubits[0]), cirq.Z(my_qubits[0]), 3.0 * cirq.Y(my_qubits[1]) + 1]

some_observables

[cirq.X(cirq.GridQubit(0, 0)),

cirq.Z(cirq.GridQubit(0, 0)),

cirq.PauliSum(cirq.LinearDict({frozenset({(cirq.GridQubit(0, 1), cirq.Y)}): (3+0j), frozenset(): (1+0j)}))]

Compute the noiseless expectation estimates via sampling from the circuit:

noiseless_sampled_expectation = tfq.layers.SampledExpectation(backend='noiseless')(

my_circuit, operators=some_observables, repetitions=10000

)

noiseless_sampled_expectation.numpy()

array([[-0.0028, -1. , 1.0264]], dtype=float32)
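The small deviations from the ideal values 0, -1 and 1 are sampling error. As a rough check, the sketch below computes the standard error you would expect if each Pauli term is estimated from `repetitions` independent ±1 outcomes; that per-term sampling model is an assumption made here for illustration, and `standard_error` is a hypothetical helper.

```python
import math


def standard_error(expectation, repetitions, scale=1.0):
    """Standard error of a sampled Pauli expectation whose outcomes are +1 or -1."""
    variance = 1.0 - expectation ** 2
    return abs(scale) * math.sqrt(variance / repetitions)


repetitions = 10_000
se_x = standard_error(0.0, repetitions)              # <X> = 0 on |11>
se_z = standard_error(-1.0, repetitions)             # <Z> = -1, deterministic outcome
se_3y = standard_error(0.0, repetitions, scale=3.0)  # 3*Y + 1 with <Y> = 0

print("X:", se_x, "Z:", se_z, "3*Y + 1:", se_3y)
```

The observed deviations (0.0028 for X and 0.0264 for 3*Y + 1) are within about one such standard error, and the Z value is exact because every shot returns the same outcome.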

Compare those with the noisy versions:

noisy_sampled_expectation = tfq.layers.SampledExpectation(backend='noisy')(

[my_noisy_circuit, my_really_noisy_circuit], operators=some_observables, repetitions=10000

)

noisy_sampled_expectation.numpy()

array([[ 0.0242 , -0.33200002, 1.0138001 ],

[ 0.0108 , -0.0012 , 0.9502 ]], dtype=float32)

You can see that the noise has particularly impacted the accuracy of \(\langle \psi | Z | \psi \rangle\), with my_really_noisy_circuit concentrating very quickly towards 0.
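The size of this shift can be worked out by hand. The derivation assumes the single-qubit depolarizing channel applies each of X, Y and Z with probability \(p/3\), which is consistent with the density matrix printed in section 1.2:

\(\mathcal{E}(\rho) = (1-p)\,\rho + \frac{p}{3}\left(X\rho X + Y\rho Y + Z\rho Z\right)\)

Since \(XZX = -Z\), \(YZY = -Z\) and \(ZZZ = Z\), the expectation of Z after the channel is

\(\mathrm{Tr}[Z\,\mathcal{E}(\rho)] = (1-p)\langle Z \rangle + \frac{p}{3}\left(-\langle Z \rangle - \langle Z \rangle + \langle Z \rangle\right) = \left(1 - \frac{4p}{3}\right)\langle Z \rangle\)

With the ideal value \(\langle Z \rangle = -1\), this gives \(-1/3\) for \(p = 0.5\) and \(0\) for \(p = 0.75\), in line with the sampled values of roughly -0.332 and -0.0012 above.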

2.3 Noisy analytic expectation calculation

Doing noisy analytic expectation calculations is nearly identical to the above:

noiseless_analytic_expectation = tfq.layers.Expectation(backend='noiseless')(

my_circuit, operators=some_observables

)

noiseless_analytic_expectation.numpy()

array([[ 1.9106853e-15, -1.0000000e+00, 1.0000002e+00]], dtype=float32)

noisy_analytic_expectation = tfq.layers.Expectation(backend='noisy')(

[my_noisy_circuit, my_really_noisy_circuit], operators=some_observables, repetitions=10000

)

noisy_analytic_expectation.numpy()

array([[ 1.9106850e-15, -3.3359998e-01, 1.0000000e+00],

[ 1.9106857e-15, -3.8000005e-03, 1.0000001e+00]], dtype=float32)

3. Hybrid models and quantum data noise

Now that you have implemented some noisy circuit simulations in TFQ, you can experiment with how noise impacts quantum and hybrid quantum-classical models by comparing and contrasting their noisy and noiseless performance. A good first check to see whether a model or algorithm is robust to noise is to test it under a circuit-wide depolarizing model.

In this model, each time slice of the circuit (sometimes referred to as a moment) has a depolarizing channel appended after each gate operation in that time slice. The depolarizing channel applies one of \(\{X, Y, Z \}\), each with probability \(p/3\) (so an error occurs with total probability \(p\)), or applies nothing (keeping the original operation) with probability \(1-p\).
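Because a channel follows every time slice, errors accumulate with circuit size. The sketch below estimates the probability that no channel anywhere in the circuit introduces an error. It makes the simplifying assumption of one independent channel per qubit per moment, and the moment count of 13 is an illustrative figure rather than a measured property of any circuit in this tutorial.

```python
def no_error_probability(p, n_qubits, n_moments):
    """Probability that every depolarizing channel in the circuit acts as the identity.

    Assumes one independent channel per qubit per moment (a simplification).
    """
    n_channels = n_qubits * n_moments
    return (1.0 - p) ** n_channels


# Illustrative sizes: 8 qubits and 13 moments (hypothetical).
for p in (0.001, 0.01, 0.1):
    print(p, round(no_error_probability(p, n_qubits=8, n_moments=13), 4))
```

Even a per-channel error rate that looks small leaves a noticeable chance of at least one error once the number of channels reaches the hundreds, which is why the comparison later in this tutorial uses a small value of p.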

3.1 Data

For this example you can use some prepared circuits from the tfq.datasets module as training data:

qubits = cirq.GridQubit.rect(1, 8)

circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')

circuits[0]

Downloading data from https://storage.googleapis.com/download.tensorflow.org/data/quantum/spin_systems/XXZ_chain.zip 184451072/184449737 [==============================] - 2s 0us/step 184459264/184449737 [==============================] - 2s 0us/step

Writing a small helper function will help generate the data for the noisy and noiseless cases:

def get_data(qubits, depolarize_p=0.):
    """Return quantum data circuits and labels in `tf.Tensor` form."""
    circuits, labels, pauli_sums, _ = tfq.datasets.xxz_chain(qubits, 'closed')
    if depolarize_p >= 1e-5:
        circuits = [circuit.with_noise(cirq.depolarize(depolarize_p)) for circuit in circuits]
    tmp = list(zip(circuits, labels))
    random.shuffle(tmp)
    circuits_tensor = tfq.convert_to_tensor([x[0] for x in tmp])
    labels_tensor = tf.convert_to_tensor([x[1] for x in tmp])
    return circuits_tensor, labels_tensor

3.2 Define a model circuit

Now that you have quantum data in the form of circuits, you need a circuit to model this data. As with the data, you can write a helper function to generate this circuit, optionally containing noise:

def modelling_circuit(qubits, depth, depolarize_p=0.):
    """A simple classifier circuit."""
    dim = len(qubits)
    ret = cirq.Circuit(cirq.H.on_each(*qubits))

    for i in range(depth):
        # Entangle layer.
        ret += cirq.Circuit(cirq.CX(q1, q2) for (q1, q2) in zip(qubits[::2], qubits[1::2]))
        ret += cirq.Circuit(cirq.CX(q1, q2) for (q1, q2) in zip(qubits[1::2], qubits[2::2]))
        # Learnable rotation layer.
        # i_params = sympy.symbols(f'layer-{i}-0:{dim}')
        param = sympy.Symbol(f'layer-{i}')
        single_qb = cirq.X
        if i % 2 == 1:
            single_qb = cirq.Y
        ret += cirq.Circuit(single_qb(q) ** param for q in qubits)

    if depolarize_p >= 1e-5:
        ret = ret.with_noise(cirq.depolarize(depolarize_p))

    return ret, [op(q) for q in qubits for op in [cirq.X, cirq.Y, cirq.Z]]

modelling_circuit(qubits, 3)[0]
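The two `zip` expressions in the entangling layer produce a brick-wall pattern of CX gates. The sketch below reproduces just that slicing logic on plain integer indices (standing in for the `GridQubit` objects) and also lists the readout operators as strings, so you can see the pattern without building a circuit.

```python
def entangling_pairs(qubits):
    """Return the (control, target) pairs used by the two CX sub-layers."""
    even = list(zip(qubits[::2], qubits[1::2]))   # pairs starting at even positions
    odd = list(zip(qubits[1::2], qubits[2::2]))   # pairs starting at odd positions
    return even, odd


# Integer indices stand in for the 8 qubits of the 1 x 8 grid.
qubit_indices = list(range(8))
even_pairs, odd_pairs = entangling_pairs(qubit_indices)

# One X, Y and Z readout per qubit, in the same order as the list comprehension above.
readout_labels = [f"{op}{q}" for q in qubit_indices for op in "XYZ"]

print("even layer:", even_pairs)
print("odd layer: ", odd_pairs)
print("number of readouts:", len(readout_labels))
```

Every neighboring pair of qubits is coupled once per layer, and the readout list has three operators per qubit.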

3.3 Model building and training

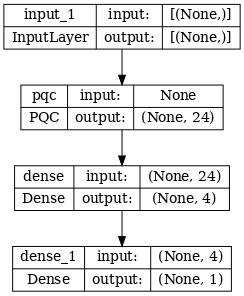

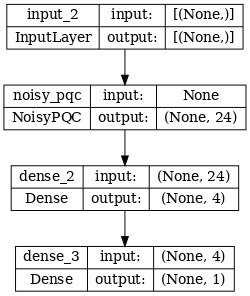

With your data and model circuit built, the final helper function you need is one that can assemble either a noisy or a noiseless hybrid quantum tf.keras.Model:

def build_keras_model(qubits, depolarize_p=0.):
    """Prepare a noisy hybrid quantum classical Keras model."""
    spin_input = tf.keras.Input(shape=(), dtype=tf.dtypes.string)

    circuit_and_readout = modelling_circuit(qubits, 4, depolarize_p)
    if depolarize_p >= 1e-5:
        quantum_model = tfq.layers.NoisyPQC(*circuit_and_readout, sample_based=False, repetitions=10)(spin_input)
    else:
        quantum_model = tfq.layers.PQC(*circuit_and_readout)(spin_input)

    intermediate = tf.keras.layers.Dense(4, activation='sigmoid')(quantum_model)
    post_process = tf.keras.layers.Dense(1)(intermediate)

    return tf.keras.Model(inputs=[spin_input], outputs=[post_process])
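It can help to count how few trainable parameters this hybrid model has. The arithmetic below assumes that the quantum layer holds one trainable weight per symbol in the model circuit and emits one value per readout operator; treat those two points as assumptions about the layer rather than a statement of its API. `dense_params` is a hypothetical helper.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


n_qubits = 8
depth = 4                       # build_keras_model calls modelling_circuit(qubits, 4, ...)
n_readouts = 3 * n_qubits       # X, Y and Z on every qubit

pqc_params = depth              # one shared symbol 'layer-i' per layer (assumption above)
head_params = dense_params(n_readouts, 4) + dense_params(4, 1)
total_params = pqc_params + head_params

print("quantum:", pqc_params, "classical head:", head_params, "total:", total_params)
```

Most of the parameters sit in the small classical head, while the quantum circuit contributes only one rotation angle per layer.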

4. Compare performance

4.1 Noiseless baseline

With your data generation and model building code in place, you can now compare and contrast model performance in the noiseless and noisy settings. First, run a reference noiseless training:

training_histories = dict()

depolarize_p = 0.

n_epochs = 50

phase_classifier = build_keras_model(qubits, depolarize_p)

phase_classifier.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

# Show the keras plot of the model

tf.keras.utils.plot_model(phase_classifier, show_shapes=True, dpi=70)

noiseless_data, noiseless_labels = get_data(qubits, depolarize_p)

training_histories['noiseless'] = phase_classifier.fit(x=noiseless_data,

y=noiseless_labels,

batch_size=16,

epochs=n_epochs,

validation_split=0.15,

verbose=1)

Epoch 1/50 4/4 [==============================] - 1s 133ms/step - loss: 0.7212 - accuracy: 0.4688 - val_loss: 0.6834 - val_accuracy: 0.5000 Epoch 2/50 4/4 [==============================] - 0s 80ms/step - loss: 0.6787 - accuracy: 0.4688 - val_loss: 0.6640 - val_accuracy: 0.5000 Epoch 3/50 4/4 [==============================] - 0s 76ms/step - loss: 0.6637 - accuracy: 0.4688 - val_loss: 0.6529 - val_accuracy: 0.5000 Epoch 4/50 4/4 [==============================] - 0s 78ms/step - loss: 0.6505 - accuracy: 0.4688 - val_loss: 0.6423 - val_accuracy: 0.5000 Epoch 5/50 4/4 [==============================] - 0s 77ms/step - loss: 0.6409 - accuracy: 0.4688 - val_loss: 0.6322 - val_accuracy: 0.5000 Epoch 6/50 4/4 [==============================] - 0s 77ms/step - loss: 0.6300 - accuracy: 0.4844 - val_loss: 0.6187 - val_accuracy: 0.5000 Epoch 7/50 4/4 [==============================] - 0s 77ms/step - loss: 0.6171 - accuracy: 0.5781 - val_loss: 0.6007 - val_accuracy: 0.5000 Epoch 8/50 4/4 [==============================] - 0s 79ms/step - loss: 0.6008 - accuracy: 0.6250 - val_loss: 0.5825 - val_accuracy: 0.5833 Epoch 9/50 4/4 [==============================] - 0s 76ms/step - loss: 0.5864 - accuracy: 0.6406 - val_loss: 0.5610 - val_accuracy: 0.6667 Epoch 10/50 4/4 [==============================] - 0s 77ms/step - loss: 0.5670 - accuracy: 0.6719 - val_loss: 0.5406 - val_accuracy: 0.8333 Epoch 11/50 4/4 [==============================] - 0s 79ms/step - loss: 0.5474 - accuracy: 0.6875 - val_loss: 0.5173 - val_accuracy: 0.9167 Epoch 12/50 4/4 [==============================] - 0s 77ms/step - loss: 0.5276 - accuracy: 0.7188 - val_loss: 0.4941 - val_accuracy: 0.9167 Epoch 13/50 4/4 [==============================] - 0s 75ms/step - loss: 0.5066 - accuracy: 0.7500 - val_loss: 0.4686 - val_accuracy: 0.9167 Epoch 14/50 4/4 [==============================] - 0s 76ms/step - loss: 0.4838 - accuracy: 0.7812 - val_loss: 0.4437 - val_accuracy: 0.9167 Epoch 15/50 4/4 
[==============================] - 0s 76ms/step - loss: 0.4618 - accuracy: 0.8281 - val_loss: 0.4182 - val_accuracy: 0.9167 Epoch 16/50 4/4 [==============================] - 0s 76ms/step - loss: 0.4386 - accuracy: 0.8281 - val_loss: 0.3930 - val_accuracy: 1.0000 Epoch 17/50 4/4 [==============================] - 0s 79ms/step - loss: 0.4158 - accuracy: 0.8438 - val_loss: 0.3673 - val_accuracy: 1.0000 Epoch 18/50 4/4 [==============================] - 0s 79ms/step - loss: 0.3944 - accuracy: 0.8438 - val_loss: 0.3429 - val_accuracy: 1.0000 Epoch 19/50 4/4 [==============================] - 0s 77ms/step - loss: 0.3735 - accuracy: 0.8594 - val_loss: 0.3203 - val_accuracy: 1.0000 Epoch 20/50 4/4 [==============================] - 0s 77ms/step - loss: 0.3535 - accuracy: 0.8750 - val_loss: 0.2998 - val_accuracy: 1.0000 Epoch 21/50 4/4 [==============================] - 0s 78ms/step - loss: 0.3345 - accuracy: 0.8906 - val_loss: 0.2815 - val_accuracy: 1.0000 Epoch 22/50 4/4 [==============================] - 0s 76ms/step - loss: 0.3168 - accuracy: 0.8906 - val_loss: 0.2640 - val_accuracy: 1.0000 Epoch 23/50 4/4 [==============================] - 0s 76ms/step - loss: 0.3017 - accuracy: 0.9062 - val_loss: 0.2465 - val_accuracy: 1.0000 Epoch 24/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2840 - accuracy: 0.9219 - val_loss: 0.2328 - val_accuracy: 1.0000 Epoch 25/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2700 - accuracy: 0.9219 - val_loss: 0.2181 - val_accuracy: 1.0000 Epoch 26/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2566 - accuracy: 0.9219 - val_loss: 0.2053 - val_accuracy: 1.0000 Epoch 27/50 4/4 [==============================] - 0s 77ms/step - loss: 0.2445 - accuracy: 0.9375 - val_loss: 0.1935 - val_accuracy: 1.0000 Epoch 28/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2332 - accuracy: 0.9375 - val_loss: 0.1839 - val_accuracy: 1.0000 Epoch 29/50 4/4 [==============================] - 0s 
78ms/step - loss: 0.2227 - accuracy: 0.9375 - val_loss: 0.1734 - val_accuracy: 1.0000 Epoch 30/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2145 - accuracy: 0.9375 - val_loss: 0.1630 - val_accuracy: 1.0000 Epoch 31/50 4/4 [==============================] - 0s 76ms/step - loss: 0.2047 - accuracy: 0.9375 - val_loss: 0.1564 - val_accuracy: 1.0000 Epoch 32/50 4/4 [==============================] - 0s 76ms/step - loss: 0.1971 - accuracy: 0.9375 - val_loss: 0.1525 - val_accuracy: 1.0000 Epoch 33/50 4/4 [==============================] - 0s 75ms/step - loss: 0.1894 - accuracy: 0.9531 - val_loss: 0.1464 - val_accuracy: 1.0000 Epoch 34/50 4/4 [==============================] - 0s 74ms/step - loss: 0.1825 - accuracy: 0.9531 - val_loss: 0.1407 - val_accuracy: 1.0000 Epoch 35/50 4/4 [==============================] - 0s 77ms/step - loss: 0.1771 - accuracy: 0.9531 - val_loss: 0.1330 - val_accuracy: 1.0000 Epoch 36/50 4/4 [==============================] - 0s 75ms/step - loss: 0.1704 - accuracy: 0.9531 - val_loss: 0.1288 - val_accuracy: 1.0000 Epoch 37/50 4/4 [==============================] - 0s 76ms/step - loss: 0.1647 - accuracy: 0.9531 - val_loss: 0.1237 - val_accuracy: 1.0000 Epoch 38/50 4/4 [==============================] - 0s 80ms/step - loss: 0.1603 - accuracy: 0.9531 - val_loss: 0.1221 - val_accuracy: 1.0000 Epoch 39/50 4/4 [==============================] - 0s 76ms/step - loss: 0.1551 - accuracy: 0.9688 - val_loss: 0.1177 - val_accuracy: 1.0000 Epoch 40/50 4/4 [==============================] - 0s 75ms/step - loss: 0.1509 - accuracy: 0.9688 - val_loss: 0.1136 - val_accuracy: 1.0000 Epoch 41/50 4/4 [==============================] - 0s 76ms/step - loss: 0.1466 - accuracy: 0.9688 - val_loss: 0.1110 - val_accuracy: 1.0000 Epoch 42/50 4/4 [==============================] - 0s 76ms/step - loss: 0.1426 - accuracy: 0.9688 - val_loss: 0.1083 - val_accuracy: 1.0000 Epoch 43/50 4/4 [==============================] - 0s 75ms/step - loss: 0.1386 - accuracy: 
0.9688 - val_loss: 0.1050 - val_accuracy: 1.0000 Epoch 44/50 4/4 [==============================] - 0s 83ms/step - loss: 0.1362 - accuracy: 0.9688 - val_loss: 0.0989 - val_accuracy: 1.0000 Epoch 45/50 4/4 [==============================] - 0s 78ms/step - loss: 0.1324 - accuracy: 0.9688 - val_loss: 0.0978 - val_accuracy: 1.0000 Epoch 46/50 4/4 [==============================] - 0s 77ms/step - loss: 0.1290 - accuracy: 0.9688 - val_loss: 0.0964 - val_accuracy: 1.0000 Epoch 47/50 4/4 [==============================] - 0s 75ms/step - loss: 0.1265 - accuracy: 0.9688 - val_loss: 0.0929 - val_accuracy: 1.0000 Epoch 48/50 4/4 [==============================] - 0s 77ms/step - loss: 0.1234 - accuracy: 0.9688 - val_loss: 0.0923 - val_accuracy: 1.0000 Epoch 49/50 4/4 [==============================] - 0s 77ms/step - loss: 0.1213 - accuracy: 0.9688 - val_loss: 0.0903 - val_accuracy: 1.0000 Epoch 50/50 4/4 [==============================] - 0s 77ms/step - loss: 0.1182 - accuracy: 0.9688 - val_loss: 0.0885 - val_accuracy: 1.0000
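The model ends in a Dense(1) layer with no activation, which is why the loss is built with from_logits=True: the sigmoid is folded into the loss. The sketch below is a plain-Python version of the standard numerically stable formula for binary cross-entropy on a logit, assuming labels in {0, 1}. It is an illustration of the formula, not the Keras source.

```python
import math


def bce_from_logits(logit, label):
    """Binary cross-entropy for one example, taking a raw logit instead of a probability.

    Equivalent to -[y * log(s) + (1 - y) * log(1 - s)] with s = sigmoid(logit),
    rearranged so that exp() never overflows.
    """
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


print("uninformed (logit 0):", round(bce_from_logits(0.0, 1.0), 4))
print("confident and right: ", round(bce_from_logits(5.0, 1.0), 4))
print("confident and wrong: ", round(bce_from_logits(5.0, 0.0), 4))
```

A logit of 0 corresponds to a predicted probability of 0.5 and a loss of ln 2, which is close to where the logged training loss starts in the first epochs.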

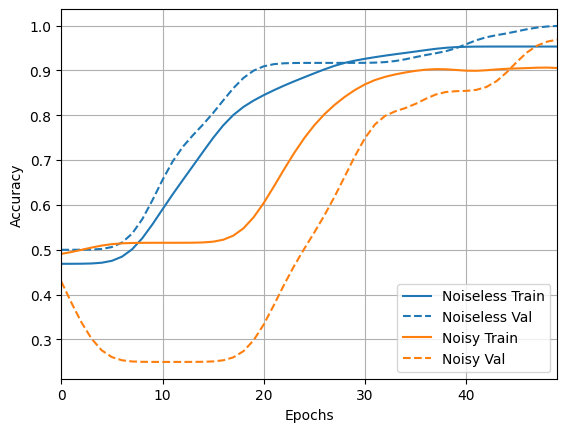

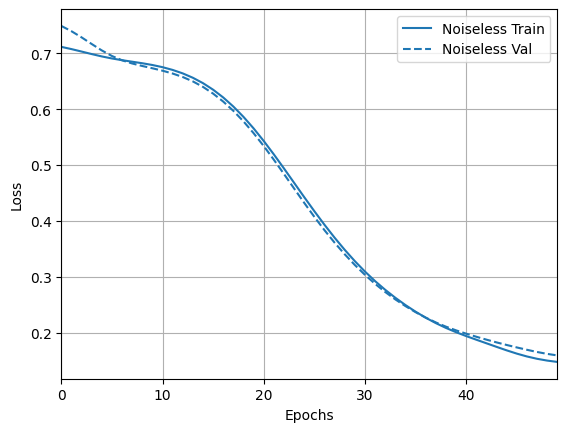

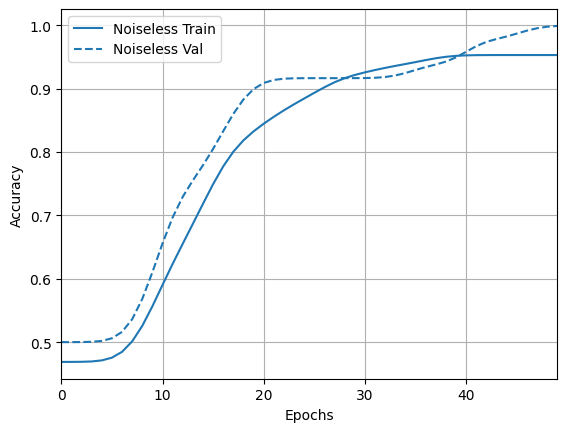

And explore the results and accuracy:

loss_plotter = tfdocs.plots.HistoryPlotter(metric = 'loss', smoothing_std=10)

loss_plotter.plot(training_histories)

acc_plotter = tfdocs.plots.HistoryPlotter(metric = 'accuracy', smoothing_std=10)

acc_plotter.plot(training_histories)

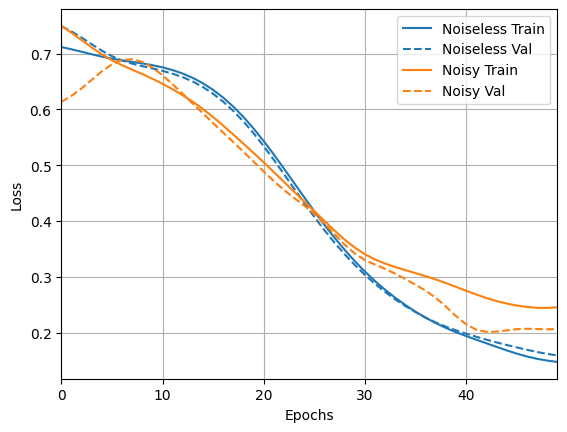

4.2 Noisy comparison

Now you can build a new model with noisy structure and compare it to the one above; the code is nearly identical:

depolarize_p = 0.001

n_epochs = 50

noisy_phase_classifier = build_keras_model(qubits, depolarize_p)

noisy_phase_classifier.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.02),

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

# Show the keras plot of the model

tf.keras.utils.plot_model(noisy_phase_classifier, show_shapes=True, dpi=70)

noisy_data, noisy_labels = get_data(qubits, depolarize_p)

training_histories['noisy'] = noisy_phase_classifier.fit(x=noisy_data,

y=noisy_labels,

batch_size=16,

epochs=n_epochs,

validation_split=0.15,

verbose=1)

Epoch 1/50 4/4 [==============================] - 8s 2s/step - loss: 0.8265 - accuracy: 0.4844 - val_loss: 0.8369 - val_accuracy: 0.4167 Epoch 2/50 4/4 [==============================] - 7s 2s/step - loss: 0.7613 - accuracy: 0.4844 - val_loss: 0.7695 - val_accuracy: 0.4167 Epoch 3/50 4/4 [==============================] - 7s 2s/step - loss: 0.7151 - accuracy: 0.4844 - val_loss: 0.7290 - val_accuracy: 0.4167 Epoch 4/50 4/4 [==============================] - 7s 2s/step - loss: 0.6915 - accuracy: 0.4844 - val_loss: 0.7014 - val_accuracy: 0.4167 Epoch 5/50 4/4 [==============================] - 7s 2s/step - loss: 0.6837 - accuracy: 0.4844 - val_loss: 0.6811 - val_accuracy: 0.4167 Epoch 6/50 4/4 [==============================] - 7s 2s/step - loss: 0.6717 - accuracy: 0.4844 - val_loss: 0.6801 - val_accuracy: 0.4167 Epoch 7/50 4/4 [==============================] - 7s 2s/step - loss: 0.6739 - accuracy: 0.4844 - val_loss: 0.6726 - val_accuracy: 0.4167 Epoch 8/50 4/4 [==============================] - 7s 2s/step - loss: 0.6713 - accuracy: 0.4844 - val_loss: 0.6661 - val_accuracy: 0.4167 Epoch 9/50 4/4 [==============================] - 7s 2s/step - loss: 0.6710 - accuracy: 0.4844 - val_loss: 0.6667 - val_accuracy: 0.4167 Epoch 10/50 4/4 [==============================] - 7s 2s/step - loss: 0.6669 - accuracy: 0.4844 - val_loss: 0.6627 - val_accuracy: 0.4167 Epoch 11/50 4/4 [==============================] - 7s 2s/step - loss: 0.6637 - accuracy: 0.4844 - val_loss: 0.6550 - val_accuracy: 0.4167 Epoch 12/50 4/4 [==============================] - 7s 2s/step - loss: 0.6616 - accuracy: 0.4844 - val_loss: 0.6593 - val_accuracy: 0.4167 Epoch 13/50 4/4 [==============================] - 7s 2s/step - loss: 0.6536 - accuracy: 0.4844 - val_loss: 0.6514 - val_accuracy: 0.4167 Epoch 14/50 4/4 [==============================] - 7s 2s/step - loss: 0.6489 - accuracy: 0.4844 - val_loss: 0.6481 - val_accuracy: 0.4167 Epoch 15/50 4/4 [==============================] - 7s 2s/step - loss: 0.6491 
- accuracy: 0.4844 - val_loss: 0.6484 - val_accuracy: 0.4167 Epoch 16/50 4/4 [==============================] - 7s 2s/step - loss: 0.6389 - accuracy: 0.4844 - val_loss: 0.6396 - val_accuracy: 0.4167 Epoch 17/50 4/4 [==============================] - 7s 2s/step - loss: 0.6307 - accuracy: 0.4844 - val_loss: 0.6337 - val_accuracy: 0.4167 Epoch 18/50 4/4 [==============================] - 7s 2s/step - loss: 0.6296 - accuracy: 0.4844 - val_loss: 0.6260 - val_accuracy: 0.4167 Epoch 19/50 4/4 [==============================] - 7s 2s/step - loss: 0.6194 - accuracy: 0.4844 - val_loss: 0.6282 - val_accuracy: 0.4167 Epoch 20/50 4/4 [==============================] - 7s 2s/step - loss: 0.6095 - accuracy: 0.4844 - val_loss: 0.6138 - val_accuracy: 0.4167 Epoch 21/50 4/4 [==============================] - 7s 2s/step - loss: 0.6075 - accuracy: 0.4844 - val_loss: 0.5874 - val_accuracy: 0.4167 Epoch 22/50 4/4 [==============================] - 7s 2s/step - loss: 0.5981 - accuracy: 0.4844 - val_loss: 0.5981 - val_accuracy: 0.4167 Epoch 23/50 4/4 [==============================] - 7s 2s/step - loss: 0.5823 - accuracy: 0.4844 - val_loss: 0.5818 - val_accuracy: 0.4167 Epoch 24/50 4/4 [==============================] - 7s 2s/step - loss: 0.5768 - accuracy: 0.4844 - val_loss: 0.5617 - val_accuracy: 0.4167 Epoch 25/50 4/4 [==============================] - 7s 2s/step - loss: 0.5651 - accuracy: 0.4844 - val_loss: 0.5638 - val_accuracy: 0.4167 Epoch 26/50 4/4 [==============================] - 7s 2s/step - loss: 0.5496 - accuracy: 0.4844 - val_loss: 0.5532 - val_accuracy: 0.4167 Epoch 27/50 4/4 [==============================] - 7s 2s/step - loss: 0.5340 - accuracy: 0.5000 - val_loss: 0.5345 - val_accuracy: 0.4167 Epoch 28/50 4/4 [==============================] - 7s 2s/step - loss: 0.5297 - accuracy: 0.5156 - val_loss: 0.5308 - val_accuracy: 0.4167 Epoch 29/50 4/4 [==============================] - 7s 2s/step - loss: 0.5120 - accuracy: 0.5312 - val_loss: 0.5224 - val_accuracy: 0.5000 Epoch 
30/50 4/4 [==============================] - 7s 2s/step - loss: 0.4992 - accuracy: 0.5781 - val_loss: 0.4921 - val_accuracy: 0.5833 Epoch 31/50 4/4 [==============================] - 7s 2s/step - loss: 0.4823 - accuracy: 0.5938 - val_loss: 0.4975 - val_accuracy: 0.5000 Epoch 32/50 4/4 [==============================] - 7s 2s/step - loss: 0.5025 - accuracy: 0.5781 - val_loss: 0.4814 - val_accuracy: 0.5000 Epoch 33/50 4/4 [==============================] - 7s 2s/step - loss: 0.4655 - accuracy: 0.6562 - val_loss: 0.4391 - val_accuracy: 0.6667 Epoch 34/50 4/4 [==============================] - 7s 2s/step - loss: 0.4552 - accuracy: 0.7031 - val_loss: 0.4528 - val_accuracy: 0.5833 Epoch 35/50 4/4 [==============================] - 7s 2s/step - loss: 0.4516 - accuracy: 0.6719 - val_loss: 0.3993 - val_accuracy: 0.8333 Epoch 36/50 4/4 [==============================] - 7s 2s/step - loss: 0.4320 - accuracy: 0.7656 - val_loss: 0.4225 - val_accuracy: 0.6667 Epoch 37/50 4/4 [==============================] - 7s 2s/step - loss: 0.4060 - accuracy: 0.7656 - val_loss: 0.4001 - val_accuracy: 0.9167 Epoch 38/50 4/4 [==============================] - 7s 2s/step - loss: 0.3858 - accuracy: 0.7812 - val_loss: 0.4152 - val_accuracy: 0.8333 Epoch 39/50 4/4 [==============================] - 7s 2s/step - loss: 0.3964 - accuracy: 0.7656 - val_loss: 0.3899 - val_accuracy: 0.7500 Epoch 40/50 4/4 [==============================] - 7s 2s/step - loss: 0.3640 - accuracy: 0.8125 - val_loss: 0.3689 - val_accuracy: 0.7500 Epoch 41/50 4/4 [==============================] - 7s 2s/step - loss: 0.3676 - accuracy: 0.7812 - val_loss: 0.3786 - val_accuracy: 0.7500 Epoch 42/50 4/4 [==============================] - 7s 2s/step - loss: 0.3466 - accuracy: 0.8281 - val_loss: 0.3313 - val_accuracy: 0.8333 Epoch 43/50 4/4 [==============================] - 7s 2s/step - loss: 0.3520 - accuracy: 0.8594 - val_loss: 0.3398 - val_accuracy: 0.8333 Epoch 44/50 4/4 [==============================] - 7s 2s/step - loss: 
0.3402 - accuracy: 0.8438 - val_loss: 0.3135 - val_accuracy: 0.9167 Epoch 45/50 4/4 [==============================] - 7s 2s/step - loss: 0.3253 - accuracy: 0.8281 - val_loss: 0.3469 - val_accuracy: 0.8333 Epoch 46/50 4/4 [==============================] - 7s 2s/step - loss: 0.3239 - accuracy: 0.8281 - val_loss: 0.3038 - val_accuracy: 0.9167 Epoch 47/50 4/4 [==============================] - 7s 2s/step - loss: 0.2948 - accuracy: 0.8594 - val_loss: 0.3056 - val_accuracy: 0.9167 Epoch 48/50 4/4 [==============================] - 7s 2s/step - loss: 0.2972 - accuracy: 0.9219 - val_loss: 0.2699 - val_accuracy: 0.9167 Epoch 49/50 4/4 [==============================] - 7s 2s/step - loss: 0.3041 - accuracy: 0.8281 - val_loss: 0.2754 - val_accuracy: 0.9167 Epoch 50/50 4/4 [==============================] - 7s 2s/step - loss: 0.2944 - accuracy: 0.8750 - val_loss: 0.2988 - val_accuracy: 0.9167
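The "4/4" step counter and the coarse accuracy values in both logs follow from the train/validation split. The sketch below assumes a dataset of 76 circuits, a figure inferred from the logs (accuracy moves in steps of 1/64 and validation accuracy in steps of 1/12) rather than taken from the dataset documentation, and assumes the split point is the floor of `n * (1 - validation_split)`. `split_sizes` is a hypothetical helper.

```python
import math


def split_sizes(n_samples, validation_split, batch_size):
    """Return (training samples, validation samples, training steps per epoch)."""
    n_train = int(math.floor(n_samples * (1.0 - validation_split)))
    n_val = n_samples - n_train
    steps = math.ceil(n_train / batch_size)
    return n_train, n_val, steps


# 76 is inferred from the logged metrics, not from the dataset docs.
n_train, n_val, steps = split_sizes(76, validation_split=0.15, batch_size=16)
print("train:", n_train, "validation:", n_val, "steps per epoch:", steps)

# Smallest possible change in each accuracy metric.
print("accuracy resolution:", round(1 / n_train, 4))
print("val_accuracy resolution:", round(1 / n_val, 4))
```

With only 12 validation examples, a single misclassified circuit moves val_accuracy by more than 8 percentage points, so small differences between the two runs should be read with care.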

loss_plotter.plot(training_histories)

acc_plotter.plot(training_histories)
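Besides the plots, you can compare the final-epoch numbers directly. The sketch below parses the metric portion of the last log line of each run, copied from the outputs above, using a small hypothetical helper `parse_metrics`.

```python
import re

# Metric portions of the "Epoch 50/50" lines from the two training logs above.
noiseless_last = "loss: 0.1182 - accuracy: 0.9688 - val_loss: 0.0885 - val_accuracy: 1.0000"
noisy_last = "loss: 0.2944 - accuracy: 0.8750 - val_loss: 0.2988 - val_accuracy: 0.9167"


def parse_metrics(line):
    """Turn 'name: value - name: value' log text into a dict of floats."""
    return {name: float(value) for name, value in re.findall(r"(\w+): ([0-9.]+)", line)}


noiseless = parse_metrics(noiseless_last)
noisy = parse_metrics(noisy_last)

for name in noiseless:
    delta = noisy[name] - noiseless[name]
    print(f"{name:>12}: noiseless={noiseless[name]:.4f} noisy={noisy[name]:.4f} delta={delta:+.4f}")
```

In these particular runs the noisy model finishes with a higher loss and lower accuracy after the same 50 epochs, and the logs also show each step taking about 2 s instead of roughly 77 ms.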