View on TensorFlow.org View on TensorFlow.org

|

Run in Google Colab Run in Google Colab

|

View source on GitHub View source on GitHub

|

Download notebook Download notebook

|

In this tutorial, you will learn how to classify images of cats and dogs by using transfer learning from a pre-trained network.

A pre-trained model is a saved network that was previously trained on a large dataset, typically on a large-scale image-classification task. You either use the pretrained model as is or use transfer learning to customize this model to a given task.

The intuition behind transfer learning for image classification is that if a model is trained on a large and general enough dataset, this model will effectively serve as a generic model of the visual world. You can then take advantage of these learned feature maps without having to start from scratch by training a large model on a large dataset.

In this notebook, you will try two ways to customize a pretrained model:

Feature Extraction: Use the representations learned by a previous network to extract meaningful features from new samples. You simply add a new classifier, which will be trained from scratch, on top of the pretrained model so that you can repurpose the feature maps learned previously for the dataset.

You do not need to (re)train the entire model. The base convolutional network already contains features that are generically useful for classifying pictures. However, the final, classification part of the pretrained model is specific to the original classification task, and subsequently specific to the set of classes on which the model was trained.

Fine-Tuning: Unfreeze a few of the top layers of a frozen model base and jointly train both the newly-added classifier layers and the last layers of the base model. This allows us to "fine-tune" the higher-order feature representations in the base model in order to make them more relevant for the specific task.

You will follow the general machine learning workflow.

- Examine and understand the data

- Build an input pipeline, in this case using Keras ImageDataGenerator

- Compose the model

- Load in the pretrained base model (and pretrained weights)

- Stack the classification layers on top

- Train the model

- Evaluate model

import matplotlib.pyplot as plt

import numpy as np

import os

import tensorflow as tf

Data preprocessing

Data download

In this tutorial, you will use a dataset containing several thousand images of cats and dogs. Download and extract a zip file containing the images, then create a tf.data.Dataset for training and validation using the tf.keras.utils.image_dataset_from_directory utility. You can learn more about loading images in this tutorial.

_URL = 'https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip'

path_to_zip = tf.keras.utils.get_file('cats_and_dogs.zip', origin=_URL, extract=True)

PATH = os.path.join(os.path.dirname(path_to_zip), 'cats_and_dogs_filtered')

train_dir = os.path.join(PATH, 'train')

validation_dir = os.path.join(PATH, 'validation')

BATCH_SIZE = 32

IMG_SIZE = (160, 160)

train_dataset = tf.keras.utils.image_dataset_from_directory(train_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

Downloading data from https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip 68606236/68606236 ━━━━━━━━━━━━━━━━━━━━ 0s 0us/step Found 2000 files belonging to 2 classes.

validation_dataset = tf.keras.utils.image_dataset_from_directory(validation_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

Found 1000 files belonging to 2 classes.

Show the first nine images and labels from the training set:

class_names = train_dataset.class_names

plt.figure(figsize=(10, 10))

for images, labels in train_dataset.take(1):

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(class_names[labels[i]])

plt.axis("off")

2024-04-16 01:23:05.140106: W tensorflow/core/framework/local_rendezvous.cc:404] Local rendezvous is aborting with status: OUT_OF_RANGE: End of sequence

As the original dataset doesn't contain a test set, you will create one. To do so, determine how many batches of data are available in the validation set using tf.data.experimental.cardinality, then move 20% of them to a test set.

val_batches = tf.data.experimental.cardinality(validation_dataset)

test_dataset = validation_dataset.take(val_batches // 5)

validation_dataset = validation_dataset.skip(val_batches // 5)

print('Number of validation batches: %d' % tf.data.experimental.cardinality(validation_dataset))

print('Number of test batches: %d' % tf.data.experimental.cardinality(test_dataset))

Number of validation batches: 26 Number of test batches: 6

Configure the dataset for performance

Use buffered prefetching to load images from disk without having I/O become blocking. To learn more about this method see the data performance guide.

AUTOTUNE = tf.data.AUTOTUNE

train_dataset = train_dataset.prefetch(buffer_size=AUTOTUNE)

validation_dataset = validation_dataset.prefetch(buffer_size=AUTOTUNE)

test_dataset = test_dataset.prefetch(buffer_size=AUTOTUNE)

Use data augmentation

When you don't have a large image dataset, it's a good practice to artificially introduce sample diversity by applying random, yet realistic, transformations to the training images, such as rotation and horizontal flipping. This helps expose the model to different aspects of the training data and reduce overfitting. You can learn more about data augmentation in this tutorial.

data_augmentation = tf.keras.Sequential([

tf.keras.layers.RandomFlip('horizontal'),

tf.keras.layers.RandomRotation(0.2),

])

Let's repeatedly apply these layers to the same image and see the result.

for image, _ in train_dataset.take(1):

plt.figure(figsize=(10, 10))

first_image = image[0]

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

augmented_image = data_augmentation(tf.expand_dims(first_image, 0))

plt.imshow(augmented_image[0] / 255)

plt.axis('off')

2024-04-16 01:23:07.046845: W tensorflow/core/framework/local_rendezvous.cc:404] Local rendezvous is aborting with status: OUT_OF_RANGE: End of sequence

Rescale pixel values

In a moment, you will download tf.keras.applications.MobileNetV2 for use as your base model. This model expects pixel values in [-1, 1], but at this point, the pixel values in your images are in [0, 255]. To rescale them, use the preprocessing method included with the model.

preprocess_input = tf.keras.applications.mobilenet_v2.preprocess_input

rescale = tf.keras.layers.Rescaling(1./127.5, offset=-1)

Create the base model from the pre-trained convnets

You will create the base model from the MobileNet V2 model developed at Google. This is pre-trained on the ImageNet dataset, a large dataset consisting of 1.4M images and 1000 classes. ImageNet is a research training dataset with a wide variety of categories like jackfruit and syringe. This base of knowledge will help us classify cats and dogs from our specific dataset.

First, you need to pick which layer of MobileNet V2 you will use for feature extraction. The very last classification layer (on "top", as most diagrams of machine learning models go from bottom to top) is not very useful. Instead, you will follow the common practice to depend on the very last layer before the flatten operation. This layer is called the "bottleneck layer". The bottleneck layer features retain more generality as compared to the final/top layer.

First, instantiate a MobileNet V2 model pre-loaded with weights trained on ImageNet. By specifying the include_top=False argument, you load a network that doesn't include the classification layers at the top, which is ideal for feature extraction.

# Create the base model from the pre-trained model MobileNet V2

IMG_SHAPE = IMG_SIZE + (3,)

base_model = tf.keras.applications.MobileNetV2(input_shape=IMG_SHAPE,

include_top=False,

weights='imagenet')

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/mobilenet_v2/mobilenet_v2_weights_tf_dim_ordering_tf_kernels_1.0_160_no_top.h5 9406464/9406464 ━━━━━━━━━━━━━━━━━━━━ 0s 0us/step

This feature extractor converts each 160x160x3 image into a 5x5x1280 block of features. Let's see what it does to an example batch of images:

image_batch, label_batch = next(iter(train_dataset))

feature_batch = base_model(image_batch)

print(feature_batch.shape)

(32, 5, 5, 1280)

Feature extraction

In this step, you will freeze the convolutional base created from the previous step and to use as a feature extractor. Additionally, you add a classifier on top of it and train the top-level classifier.

Freeze the convolutional base

It is important to freeze the convolutional base before you compile and train the model. Freezing (by setting layer.trainable = False) prevents the weights in a given layer from being updated during training. MobileNet V2 has many layers, so setting the entire model's trainable flag to False will freeze all of them.

base_model.trainable = False

Important note about BatchNormalization layers

Many models contain tf.keras.layers.BatchNormalization layers. This layer is a special case and precautions should be taken in the context of fine-tuning, as shown later in this tutorial.

When you set layer.trainable = False, the BatchNormalization layer will run in inference mode, and will not update its mean and variance statistics.

When you unfreeze a model that contains BatchNormalization layers in order to do fine-tuning, you should keep the BatchNormalization layers in inference mode by passing training = False when calling the base model. Otherwise, the updates applied to the non-trainable weights will destroy what the model has learned.

For more details, see the Transfer learning guide.

# Let's take a look at the base model architecture

base_model.summary()

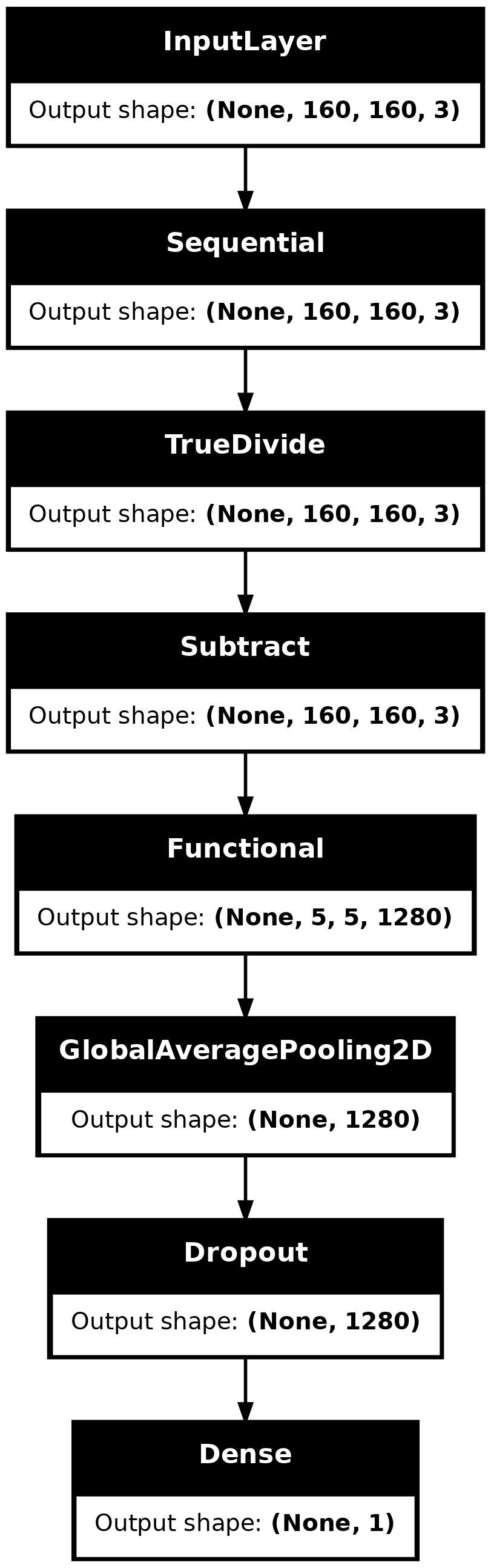

Add a classification head

To generate predictions from the block of features, average over the spatial 5x5 spatial locations, using a tf.keras.layers.GlobalAveragePooling2D layer to convert the features to a single 1280-element vector per image.

global_average_layer = tf.keras.layers.GlobalAveragePooling2D()

feature_batch_average = global_average_layer(feature_batch)

print(feature_batch_average.shape)

(32, 1280)

Apply a tf.keras.layers.Dense layer to convert these features into a single prediction per image. You don't need an activation function here because this prediction will be treated as a logit, or a raw prediction value. Positive numbers predict class 1, negative numbers predict class 0.

prediction_layer = tf.keras.layers.Dense(1, activation='sigmoid')

prediction_batch = prediction_layer(feature_batch_average)

print(prediction_batch.shape)

(32, 1)

Build a model by chaining together the data augmentation, rescaling, base_model and feature extractor layers using the Keras Functional API. As previously mentioned, use training=False as our model contains a BatchNormalization layer.

inputs = tf.keras.Input(shape=(160, 160, 3))

x = data_augmentation(inputs)

x = preprocess_input(x)

x = base_model(x, training=False)

x = global_average_layer(x)

x = tf.keras.layers.Dropout(0.2)(x)

outputs = prediction_layer(x)

model = tf.keras.Model(inputs, outputs)

model.summary()

The 8+ million parameters in MobileNet are frozen, but there are 1.2 thousand trainable parameters in the Dense layer. These are divided between two tf.Variable objects, the weights and biases.

len(model.trainable_variables)

2

tf.keras.utils.plot_model(model, show_shapes=True)

Compile the model

Compile the model before training it. Since there are two classes and a sigmoid oputput, use the BinaryAccuracy.

base_learning_rate = 0.0001

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=base_learning_rate),

loss=tf.keras.losses.BinaryCrossentropy(),

metrics=[tf.keras.metrics.BinaryAccuracy(threshold=0.5, name='accuracy')])

Train the model

After training for 10 epochs, you should see ~96% accuracy on the validation set.

initial_epochs = 10

loss0, accuracy0 = model.evaluate(validation_dataset)

26/26 ━━━━━━━━━━━━━━━━━━━━ 3s 48ms/step - accuracy: 0.6077 - loss: 0.6547

print("initial loss: {:.2f}".format(loss0))

print("initial accuracy: {:.2f}".format(accuracy0))

initial loss: 0.65 initial accuracy: 0.62

history = model.fit(train_dataset,

epochs=initial_epochs,

validation_data=validation_dataset)

Epoch 1/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 8s 52ms/step - accuracy: 0.6776 - loss: 0.6373 - val_accuracy: 0.8304 - val_loss: 0.4399 Epoch 2/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.7697 - loss: 0.4771 - val_accuracy: 0.9035 - val_loss: 0.3350 Epoch 3/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.8319 - loss: 0.4024 - val_accuracy: 0.9418 - val_loss: 0.2631 Epoch 4/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.8776 - loss: 0.3252 - val_accuracy: 0.9567 - val_loss: 0.2159 Epoch 5/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.8960 - loss: 0.2874 - val_accuracy: 0.9604 - val_loss: 0.1869 Epoch 6/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.9088 - loss: 0.2708 - val_accuracy: 0.9641 - val_loss: 0.1661 Epoch 7/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.8931 - loss: 0.2500 - val_accuracy: 0.9629 - val_loss: 0.1517 Epoch 8/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 43ms/step - accuracy: 0.9189 - loss: 0.2309 - val_accuracy: 0.9666 - val_loss: 0.1366 Epoch 9/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.9181 - loss: 0.2166 - val_accuracy: 0.9715 - val_loss: 0.1270 Epoch 10/10 63/63 ━━━━━━━━━━━━━━━━━━━━ 3s 42ms/step - accuracy: 0.9252 - loss: 0.2123 - val_accuracy: 0.9752 - val_loss: 0.1169

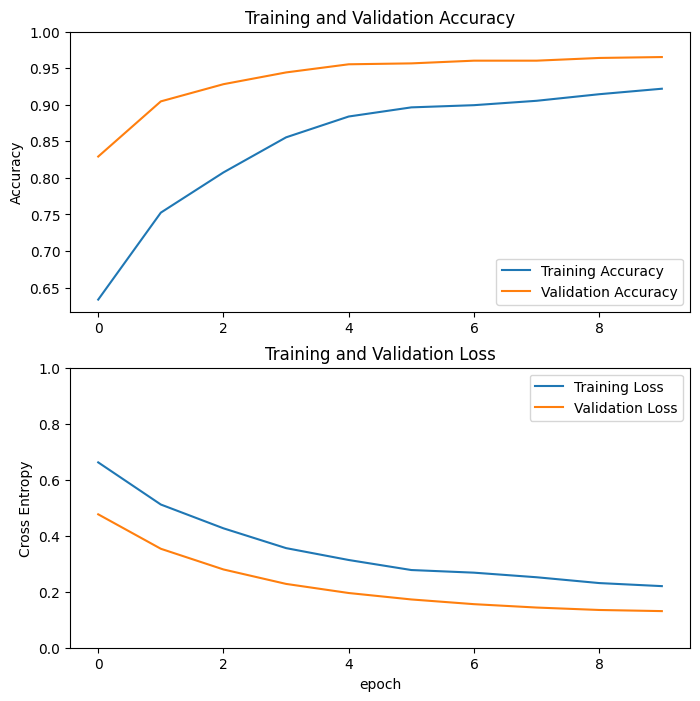

Learning curves

Let's take a look at the learning curves of the training and validation accuracy/loss when using the MobileNetV2 base model as a fixed feature extractor.

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.ylabel('Accuracy')

plt.ylim([min(plt.ylim()),1])

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.ylabel('Cross Entropy')

plt.ylim([0,1.0])

plt.title('Training and Validation Loss')

plt.xlabel('epoch')

plt.show()

To a lesser extent, it is also because training metrics report the average for an epoch, while validation metrics are evaluated after the epoch, so validation metrics see a model that has trained slightly longer.

Fine tuning

In the feature extraction experiment, you were only training a few layers on top of an MobileNetV2 base model. The weights of the pre-trained network were not updated during training.

One way to increase performance even further is to train (or "fine-tune") the weights of the top layers of the pre-trained model alongside the training of the classifier you added. The training process will force the weights to be tuned from generic feature maps to features associated specifically with the dataset.

Also, you should try to fine-tune a small number of top layers rather than the whole MobileNet model. In most convolutional networks, the higher up a layer is, the more specialized it is. The first few layers learn very simple and generic features that generalize to almost all types of images. As you go higher up, the features are increasingly more specific to the dataset on which the model was trained. The goal of fine-tuning is to adapt these specialized features to work with the new dataset, rather than overwrite the generic learning.

Un-freeze the top layers of the model

All you need to do is unfreeze the base_model and set the bottom layers to be un-trainable. Then, you should recompile the model (necessary for these changes to take effect), and resume training.

base_model.trainable = True

# Let's take a look to see how many layers are in the base model

print("Number of layers in the base model: ", len(base_model.layers))

# Fine-tune from this layer onwards

fine_tune_at = 100

# Freeze all the layers before the `fine_tune_at` layer

for layer in base_model.layers[:fine_tune_at]:

layer.trainable = False

Number of layers in the base model: 154

Compile the model

As you are training a much larger model and want to readapt the pretrained weights, it is important to use a lower learning rate at this stage. Otherwise, your model could overfit very quickly.

model.compile(loss=tf.keras.losses.BinaryCrossentropy(),

optimizer = tf.keras.optimizers.RMSprop(learning_rate=base_learning_rate/10),

metrics=[tf.keras.metrics.BinaryAccuracy(threshold=0.5, name='accuracy')])

model.summary()

len(model.trainable_variables)

56

Continue training the model

If you trained to convergence earlier, this step will improve your accuracy by a few percentage points.

fine_tune_epochs = 10

total_epochs = initial_epochs + fine_tune_epochs

history_fine = model.fit(train_dataset,

epochs=total_epochs,

initial_epoch=len(history.epoch),

validation_data=validation_dataset)

Epoch 11/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 15s 86ms/step - accuracy: 0.8169 - loss: 0.3828 - val_accuracy: 0.9827 - val_loss: 0.0775 Epoch 12/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 64ms/step - accuracy: 0.8731 - loss: 0.2787 - val_accuracy: 0.9827 - val_loss: 0.0679 Epoch 13/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9109 - loss: 0.2071 - val_accuracy: 0.9851 - val_loss: 0.0598 Epoch 14/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9256 - loss: 0.1930 - val_accuracy: 0.9889 - val_loss: 0.0481 Epoch 15/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 64ms/step - accuracy: 0.9403 - loss: 0.1629 - val_accuracy: 0.9889 - val_loss: 0.0462 Epoch 16/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9338 - loss: 0.1602 - val_accuracy: 0.9889 - val_loss: 0.0377 Epoch 17/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9399 - loss: 0.1416 - val_accuracy: 0.9876 - val_loss: 0.0382 Epoch 18/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9499 - loss: 0.1333 - val_accuracy: 0.9864 - val_loss: 0.0402 Epoch 19/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 63ms/step - accuracy: 0.9471 - loss: 0.1381 - val_accuracy: 0.9827 - val_loss: 0.0410 Epoch 20/20 63/63 ━━━━━━━━━━━━━━━━━━━━ 4s 64ms/step - accuracy: 0.9546 - loss: 0.1193 - val_accuracy: 0.9851 - val_loss: 0.0368

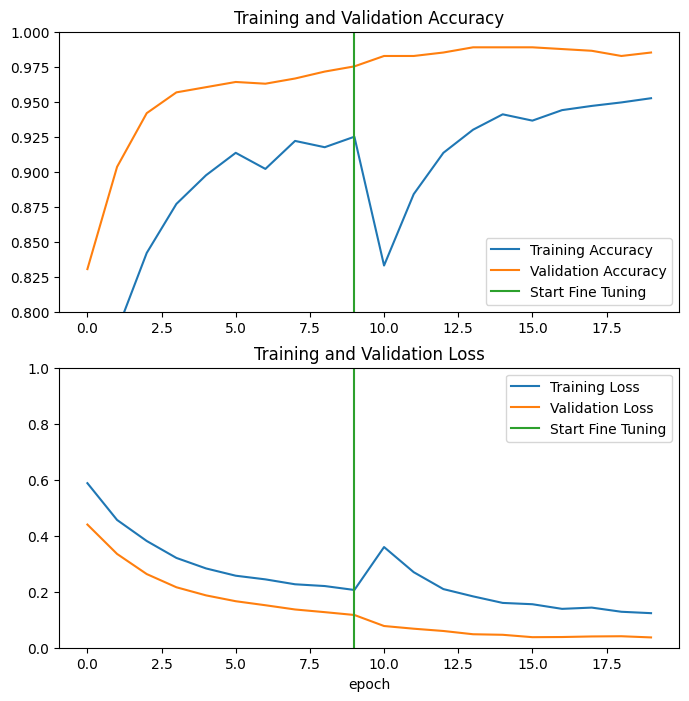

Let's take a look at the learning curves of the training and validation accuracy/loss when fine-tuning the last few layers of the MobileNetV2 base model and training the classifier on top of it. The validation loss is much higher than the training loss, so you may get some overfitting.

You may also get some overfitting as the new training set is relatively small and similar to the original MobileNetV2 datasets.

After fine tuning the model nearly reaches 98% accuracy on the validation set.

acc += history_fine.history['accuracy']

val_acc += history_fine.history['val_accuracy']

loss += history_fine.history['loss']

val_loss += history_fine.history['val_loss']

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.ylim([0.8, 1])

plt.plot([initial_epochs-1,initial_epochs-1],

plt.ylim(), label='Start Fine Tuning')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.ylim([0, 1.0])

plt.plot([initial_epochs-1,initial_epochs-1],

plt.ylim(), label='Start Fine Tuning')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.xlabel('epoch')

plt.show()

Evaluation and prediction

Finally you can verify the performance of the model on new data using test set.

loss, accuracy = model.evaluate(test_dataset)

print('Test accuracy :', accuracy)

6/6 ━━━━━━━━━━━━━━━━━━━━ 0s 31ms/step - accuracy: 0.9853 - loss: 0.0681 Test accuracy : 0.984375

And now you are all set to use this model to predict if your pet is a cat or dog.

# Retrieve a batch of images from the test set

image_batch, label_batch = test_dataset.as_numpy_iterator().next()

predictions = model.predict_on_batch(image_batch).flatten()

# Apply a sigmoid since our model returns logits

predictions = tf.nn.sigmoid(predictions)

predictions = tf.where(predictions < 0.5, 0, 1)

print('Predictions:\n', predictions.numpy())

print('Labels:\n', label_batch)

plt.figure(figsize=(10, 10))

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(image_batch[i].astype("uint8"))

plt.title(class_names[predictions[i]])

plt.axis("off")

Predictions: [1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1] Labels: [0 1 0 0 0 1 1 0 1 0 0 0 0 1 1 1 0 1 1 0 0 1 1 1 0 1 0 1 0 1 0 0]

Summary

Using a pre-trained model for feature extraction: When working with a small dataset, it is a common practice to take advantage of features learned by a model trained on a larger dataset in the same domain. This is done by instantiating the pre-trained model and adding a fully-connected classifier on top. The pre-trained model is "frozen" and only the weights of the classifier get updated during training. In this case, the convolutional base extracted all the features associated with each image and you just trained a classifier that determines the image class given that set of extracted features.

Fine-tuning a pre-trained model: To further improve performance, one might want to repurpose the top-level layers of the pre-trained models to the new dataset via fine-tuning. In this case, you tuned your weights such that your model learned high-level features specific to the dataset. This technique is usually recommended when the training dataset is large and very similar to the original dataset that the pre-trained model was trained on.

To learn more, visit the Transfer learning guide.

# MIT License

#

# Copyright (c) 2017 François Chollet # IGNORE_COPYRIGHT: cleared by OSS licensing

#

# Permission is hereby granted, free of charge, to any person obtaining a

# copy of this software and associated documentation files (the "Software"),

# to deal in the Software without restriction, including without limitation

# the rights to use, copy, modify, merge, publish, distribute, sublicense,

# and/or sell copies of the Software, and to permit persons to whom the

# Software is furnished to do so, subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in

# all copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

# DEALINGS IN THE SOFTWARE.