View on TensorFlow.org View on TensorFlow.org

|

Run in Google Colab Run in Google Colab

|

View source on GitHub View source on GitHub

|

Download notebook Download notebook

|

This tutorial demonstrates how to load and preprocess AVI video data using the UCF101 human action dataset. Once you have preprocessed the data, it can be used for such tasks as video classification/recognition, captioning or clustering. The original dataset contains realistic action videos collected from YouTube with 101 categories, including playing cello, brushing teeth, and applying eye makeup. You will learn how to:

Load the data from a zip file.

Read sequences of frames out of the video files.

Visualize the video data.

Wrap the frame-generator

tf.data.Dataset.

This video loading and preprocessing tutorial is the first part in a series of TensorFlow video tutorials. Here are the other three tutorials:

- Build a 3D CNN model for video classification: Note that this tutorial uses a (2+1)D CNN that decomposes the spatial and temporal aspects of 3D data; if you are using volumetric data such as an MRI scan, consider using a 3D CNN instead of a (2+1)D CNN.

- MoViNet for streaming action recognition: Get familiar with the MoViNet models that are available on TF Hub.

- Transfer learning for video classification with MoViNet: This tutorial explains how to use a pre-trained video classification model trained on a different dataset with the UCF-101 dataset.

Setup

Begin by installing and importing some necessary libraries, including:

remotezip to inspect the contents of a ZIP file, tqdm to use a progress bar, OpenCV to process video files, and tensorflow_docs for embedding data in a Jupyter notebook.

# The way this tutorial uses the `TimeDistributed` layer requires TF>=2.10pip install -U "tensorflow>=2.10.0"

pip install remotezip tqdm opencv-pythonpip install -q git+https://github.com/tensorflow/docs

import tqdm

import random

import pathlib

import itertools

import collections

import os

import cv2

import numpy as np

import remotezip as rz

import tensorflow as tf

# Some modules to display an animation using imageio.

import imageio

from IPython import display

from urllib import request

from tensorflow_docs.vis import embed

Download a subset of the UCF101 dataset

The UCF101 dataset contains 101 categories of different actions in video, primarily used in action recognition. You will use a subset of these categories in this demo.

URL = 'https://storage.googleapis.com/thumos14_files/UCF101_videos.zip'

The above URL contains a zip file with the UCF 101 dataset. Create a function that uses the remotezip library to examine the contents of the zip file in that URL:

def list_files_from_zip_url(zip_url):

""" List the files in each class of the dataset given a URL with the zip file.

Args:

zip_url: A URL from which the files can be extracted from.

Returns:

List of files in each of the classes.

"""

files = []

with rz.RemoteZip(zip_url) as zip:

for zip_info in zip.infolist():

files.append(zip_info.filename)

return files

files = list_files_from_zip_url(URL)

files = [f for f in files if f.endswith('.avi')]

files[:10]

Begin with a few videos and a limited number of classes for training. After running the above code block, notice that the class name is included in the filename of each video.

Define the get_class function that retrieves the class name from a filename. Then, create a function called get_files_per_class which converts the list of all files (files above) into a dictionary listing the files for each class:

def get_class(fname):

""" Retrieve the name of the class given a filename.

Args:

fname: Name of the file in the UCF101 dataset.

Returns:

Class that the file belongs to.

"""

return fname.split('_')[-3]

def get_files_per_class(files):

""" Retrieve the files that belong to each class.

Args:

files: List of files in the dataset.

Returns:

Dictionary of class names (key) and files (values).

"""

files_for_class = collections.defaultdict(list)

for fname in files:

class_name = get_class(fname)

files_for_class[class_name].append(fname)

return files_for_class

Once you have the list of files per class, you can choose how many classes you would like to use and how many videos you would like per class in order to create your dataset.

NUM_CLASSES = 10

FILES_PER_CLASS = 50

files_for_class = get_files_per_class(files)

classes = list(files_for_class.keys())

print('Num classes:', len(classes))

print('Num videos for class[0]:', len(files_for_class[classes[0]]))

Create a new function called select_subset_of_classes that selects a subset of the classes present within the dataset and a particular number of files per class:

def select_subset_of_classes(files_for_class, classes, files_per_class):

""" Create a dictionary with the class name and a subset of the files in that class.

Args:

files_for_class: Dictionary of class names (key) and files (values).

classes: List of classes.

files_per_class: Number of files per class of interest.

Returns:

Dictionary with class as key and list of specified number of video files in that class.

"""

files_subset = dict()

for class_name in classes:

class_files = files_for_class[class_name]

files_subset[class_name] = class_files[:files_per_class]

return files_subset

files_subset = select_subset_of_classes(files_for_class, classes[:NUM_CLASSES], FILES_PER_CLASS)

list(files_subset.keys())

Define helper functions that split the videos into training, validation, and test sets. The videos are downloaded from a URL with the zip file, and placed into their respective subdirectiories.

def download_from_zip(zip_url, to_dir, file_names):

""" Download the contents of the zip file from the zip URL.

Args:

zip_url: A URL with a zip file containing data.

to_dir: A directory to download data to.

file_names: Names of files to download.

"""

with rz.RemoteZip(zip_url) as zip:

for fn in tqdm.tqdm(file_names):

class_name = get_class(fn)

zip.extract(fn, str(to_dir / class_name))

unzipped_file = to_dir / class_name / fn

fn = pathlib.Path(fn).parts[-1]

output_file = to_dir / class_name / fn

unzipped_file.rename(output_file)

The following function returns the remaining data that hasn't already been placed into a subset of data. It allows you to place that remaining data in the next specified subset of data.

def split_class_lists(files_for_class, count):

""" Returns the list of files belonging to a subset of data as well as the remainder of

files that need to be downloaded.

Args:

files_for_class: Files belonging to a particular class of data.

count: Number of files to download.

Returns:

Files belonging to the subset of data and dictionary of the remainder of files that need to be downloaded.

"""

split_files = []

remainder = {}

for cls in files_for_class:

split_files.extend(files_for_class[cls][:count])

remainder[cls] = files_for_class[cls][count:]

return split_files, remainder

The following download_ucf_101_subset function allows you to download a subset of the UCF101 dataset and split it into the training, validation, and test sets. You can specify the number of classes that you would like to use. The splits argument allows you to pass in a dictionary in which the key values are the name of subset (example: "train") and the number of videos you would like to have per class.

def download_ucf_101_subset(zip_url, num_classes, splits, download_dir):

""" Download a subset of the UCF101 dataset and split them into various parts, such as

training, validation, and test.

Args:

zip_url: A URL with a ZIP file with the data.

num_classes: Number of labels.

splits: Dictionary specifying the training, validation, test, etc. (key) division of data

(value is number of files per split).

download_dir: Directory to download data to.

Return:

Mapping of the directories containing the subsections of data.

"""

files = list_files_from_zip_url(zip_url)

for f in files:

path = os.path.normpath(f)

tokens = path.split(os.sep)

if len(tokens) <= 2:

files.remove(f) # Remove that item from the list if it does not have a filename

files_for_class = get_files_per_class(files)

classes = list(files_for_class.keys())[:num_classes]

for cls in classes:

random.shuffle(files_for_class[cls])

# Only use the number of classes you want in the dictionary

files_for_class = {x: files_for_class[x] for x in classes}

dirs = {}

for split_name, split_count in splits.items():

print(split_name, ":")

split_dir = download_dir / split_name

split_files, files_for_class = split_class_lists(files_for_class, split_count)

download_from_zip(zip_url, split_dir, split_files)

dirs[split_name] = split_dir

return dirs

download_dir = pathlib.Path('./UCF101_subset/')

subset_paths = download_ucf_101_subset(URL,

num_classes = NUM_CLASSES,

splits = {"train": 30, "val": 10, "test": 10},

download_dir = download_dir)

After downloading the data, you should now have a copy of a subset of the UCF101 dataset. Run the following code to print the total number of videos you have amongst all your subsets of data.

video_count_train = len(list(download_dir.glob('train/*/*.avi')))

video_count_val = len(list(download_dir.glob('val/*/*.avi')))

video_count_test = len(list(download_dir.glob('test/*/*.avi')))

video_total = video_count_train + video_count_val + video_count_test

print(f"Total videos: {video_total}")

You can also preview the directory of data files now.

find ./UCF101_subsetCreate frames from each video file

The frames_from_video_file function splits the videos into frames, reads a randomly chosen span of n_frames out of a video file, and returns them as a NumPy array.

To reduce memory and computation overhead, choose a small number of frames. In addition, pick the same number of frames from each video, which makes it easier to work on batches of data.

def format_frames(frame, output_size):

"""

Pad and resize an image from a video.

Args:

frame: Image that needs to resized and padded.

output_size: Pixel size of the output frame image.

Return:

Formatted frame with padding of specified output size.

"""

frame = tf.image.convert_image_dtype(frame, tf.float32)

frame = tf.image.resize_with_pad(frame, *output_size)

return frame

def frames_from_video_file(video_path, n_frames, output_size = (224,224), frame_step = 15):

"""

Creates frames from each video file present for each category.

Args:

video_path: File path to the video.

n_frames: Number of frames to be created per video file.

output_size: Pixel size of the output frame image.

Return:

An NumPy array of frames in the shape of (n_frames, height, width, channels).

"""

# Read each video frame by frame

result = []

src = cv2.VideoCapture(str(video_path))

video_length = src.get(cv2.CAP_PROP_FRAME_COUNT)

need_length = 1 + (n_frames - 1) * frame_step

if need_length > video_length:

start = 0

else:

max_start = video_length - need_length

start = random.randint(0, max_start + 1)

src.set(cv2.CAP_PROP_POS_FRAMES, start)

# ret is a boolean indicating whether read was successful, frame is the image itself

ret, frame = src.read()

result.append(format_frames(frame, output_size))

for _ in range(n_frames - 1):

for _ in range(frame_step):

ret, frame = src.read()

if ret:

frame = format_frames(frame, output_size)

result.append(frame)

else:

result.append(np.zeros_like(result[0]))

src.release()

result = np.array(result)[..., [2, 1, 0]]

return result

Visualize video data

The frames_from_video_file function that returns a set of frames as a NumPy array. Try using this function on a new video from Wikimedia by Patrick Gillett:

curl -O https://upload.wikimedia.org/wikipedia/commons/8/86/End_of_a_jam.ogvvideo_path = "End_of_a_jam.ogv"

sample_video = frames_from_video_file(video_path, n_frames = 10)

sample_video.shape

def to_gif(images):

converted_images = np.clip(images * 255, 0, 255).astype(np.uint8)

imageio.mimsave('./animation.gif', converted_images, fps=10)

return embed.embed_file('./animation.gif')

to_gif(sample_video)

In addition to examining this video, you can also display the UCF-101 data. To do this, run the following code:

# docs-infra: no-execute

ucf_sample_video = frames_from_video_file(next(subset_paths['train'].glob('*/*.avi')), 50)

to_gif(ucf_sample_video)

Next, define the FrameGenerator class in order to create an iterable object that can feed data into the TensorFlow data pipeline. The generator (__call__) function yields the frame array produced by frames_from_video_file and a one-hot encoded vector of the label associated with the set of frames.

class FrameGenerator:

def __init__(self, path, n_frames, training = False):

""" Returns a set of frames with their associated label.

Args:

path: Video file paths.

n_frames: Number of frames.

training: Boolean to determine if training dataset is being created.

"""

self.path = path

self.n_frames = n_frames

self.training = training

self.class_names = sorted(set(p.name for p in self.path.iterdir() if p.is_dir()))

self.class_ids_for_name = dict((name, idx) for idx, name in enumerate(self.class_names))

def get_files_and_class_names(self):

video_paths = list(self.path.glob('*/*.avi'))

classes = [p.parent.name for p in video_paths]

return video_paths, classes

def __call__(self):

video_paths, classes = self.get_files_and_class_names()

pairs = list(zip(video_paths, classes))

if self.training:

random.shuffle(pairs)

for path, name in pairs:

video_frames = frames_from_video_file(path, self.n_frames)

label = self.class_ids_for_name[name] # Encode labels

yield video_frames, label

Test out the FrameGenerator object before wrapping it as a TensorFlow Dataset object. Moreover, for the training dataset, ensure you enable training mode so that the data will be shuffled.

fg = FrameGenerator(subset_paths['train'], 10, training=True)

frames, label = next(fg())

print(f"Shape: {frames.shape}")

print(f"Label: {label}")

Finally, create a TensorFlow data input pipeline. This pipeline that you create from the generator object allows you to feed in data to your deep learning model. In this video pipeline, each element is a single set of frames and its associated label.

# Create the training set

output_signature = (tf.TensorSpec(shape = (None, None, None, 3), dtype = tf.float32),

tf.TensorSpec(shape = (), dtype = tf.int16))

train_ds = tf.data.Dataset.from_generator(FrameGenerator(subset_paths['train'], 10, training=True),

output_signature = output_signature)

Check to see that the labels are shuffled.

for frames, labels in train_ds.take(10):

print(labels)

# Create the validation set

val_ds = tf.data.Dataset.from_generator(FrameGenerator(subset_paths['val'], 10),

output_signature = output_signature)

# Print the shapes of the data

train_frames, train_labels = next(iter(train_ds))

print(f'Shape of training set of frames: {train_frames.shape}')

print(f'Shape of training labels: {train_labels.shape}')

val_frames, val_labels = next(iter(val_ds))

print(f'Shape of validation set of frames: {val_frames.shape}')

print(f'Shape of validation labels: {val_labels.shape}')

Configure the dataset for performance

Use buffered prefetching such that you can yield data from the disk without having I/O become blocking. Two important functions to use while loading data are:

Dataset.cache: keeps the sets of frames in memory after they're loaded off the disk during the first epoch. This function ensures that the dataset does not become a bottleneck while training your model. If your dataset is too large to fit into memory, you can also use this method to create a performant on-disk cache.Dataset.prefetch: overlaps data preprocessing and model execution while training. Refer to Better performance with thetf.datafor details.

AUTOTUNE = tf.data.AUTOTUNE

train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size = AUTOTUNE)

val_ds = val_ds.cache().shuffle(1000).prefetch(buffer_size = AUTOTUNE)

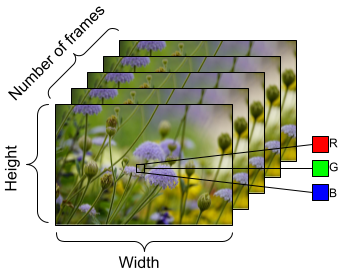

To prepare the data to be fed into the model, use batching as shown below. Notice that when working with video data, such as AVI files, the data should be shaped as a five dimensional object. These dimensions are as follows: [batch_size, number_of_frames, height, width, channels]. In comparison, an image would have four dimensions: [batch_size, height, width, channels]. The image below is an illustration of how the shape of video data is represented.

train_ds = train_ds.batch(2)

val_ds = val_ds.batch(2)

train_frames, train_labels = next(iter(train_ds))

print(f'Shape of training set of frames: {train_frames.shape}')

print(f'Shape of training labels: {train_labels.shape}')

val_frames, val_labels = next(iter(val_ds))

print(f'Shape of validation set of frames: {val_frames.shape}')

print(f'Shape of validation labels: {val_labels.shape}')

Next steps

Now that you have created a TensorFlow Dataset of video frames with their labels, you can use it with a deep learning model. The following classification model that uses a pre-trained EfficientNet trains to high accuracy in a few minutes:

net = tf.keras.applications.EfficientNetB0(include_top = False)

net.trainable = False

model = tf.keras.Sequential([

tf.keras.layers.Rescaling(scale=255),

tf.keras.layers.TimeDistributed(net),

tf.keras.layers.Dense(10),

tf.keras.layers.GlobalAveragePooling3D()

])

model.compile(optimizer = 'adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits = True),

metrics=['accuracy'])

model.fit(train_ds,

epochs = 10,

validation_data = val_ds,

callbacks = tf.keras.callbacks.EarlyStopping(patience = 2, monitor = 'val_loss'))

To learn more about working with video data in TensorFlow, check out the following tutorials: