Lihat di TensorFlow.org Lihat di TensorFlow.org |  Jalankan di Google Colab Jalankan di Google Colab |  Lihat sumber di GitHub Lihat sumber di GitHub |  Unduh buku catatan Unduh buku catatan |

Dalam tutorial ini, Anda akan belajar bagaimana mengklasifikasikan gambar kucing dan anjing dengan menggunakan pembelajaran transfer dari jaringan yang telah dilatih sebelumnya.

Model pra-pelatihan adalah jaringan tersimpan yang sebelumnya dilatih pada kumpulan data besar, biasanya pada tugas klasifikasi gambar skala besar. Anda dapat menggunakan model yang telah dilatih sebelumnya atau menggunakan pembelajaran transfer untuk menyesuaikan model ini dengan tugas yang diberikan.

Intuisi di balik pembelajaran transfer untuk klasifikasi gambar adalah bahwa jika model dilatih pada kumpulan data yang cukup besar dan umum, model ini akan secara efektif berfungsi sebagai model umum dunia visual. Anda kemudian dapat memanfaatkan peta fitur yang dipelajari ini tanpa harus memulai dari awal dengan melatih model besar pada kumpulan data yang besar.

Di notebook ini, Anda akan mencoba dua cara untuk menyesuaikan model yang sudah dilatih sebelumnya:

Ekstraksi Fitur: Gunakan representasi yang dipelajari oleh jaringan sebelumnya untuk mengekstrak fitur yang berarti dari sampel baru. Anda cukup menambahkan pengklasifikasi baru, yang akan dilatih dari awal, di atas model yang telah dilatih sebelumnya sehingga Anda dapat menggunakan kembali peta fitur yang dipelajari sebelumnya untuk kumpulan data.

Anda tidak perlu (kembali) melatih seluruh model. Jaringan konvolusi dasar sudah berisi fitur yang secara umum berguna untuk mengklasifikasikan gambar. Namun, bagian klasifikasi terakhir dari model pra-pelatihan khusus untuk tugas klasifikasi asli, dan selanjutnya khusus untuk kumpulan kelas tempat model dilatih.

Fine-Tuning: Mencairkan beberapa lapisan atas dari basis model yang dibekukan dan bersama-sama melatih lapisan pengklasifikasi yang baru ditambahkan dan lapisan terakhir dari model dasar. Ini memungkinkan kita untuk "menyempurnakan" representasi fitur tingkat tinggi dalam model dasar agar lebih relevan untuk tugas tertentu.

Anda akan mengikuti alur kerja machine learning umum.

- Pelajari dan pahami datanya

- Bangun saluran input, dalam hal ini menggunakan Keras ImageDataGenerator

- Buat modelnya

- Muat dalam model dasar yang telah dilatih sebelumnya (dan bobot yang telah dilatih sebelumnya)

- Tumpuk lapisan klasifikasi di atas

- Latih modelnya

- Evaluasi model

import matplotlib.pyplot as plt

import numpy as np

import os

import tensorflow as tf

Pra-pemrosesan data

Unduhan data

Dalam tutorial ini, Anda akan menggunakan kumpulan data yang berisi beberapa ribu gambar kucing dan anjing. Unduh dan ekstrak file zip yang berisi gambar, lalu buat tf.data.Dataset untuk pelatihan dan validasi menggunakan utilitas tf.keras.utils.image_dataset_from_directory . Anda dapat mempelajari lebih lanjut tentang memuat gambar dalam tutorial ini.

_URL = 'https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip'

path_to_zip = tf.keras.utils.get_file('cats_and_dogs.zip', origin=_URL, extract=True)

PATH = os.path.join(os.path.dirname(path_to_zip), 'cats_and_dogs_filtered')

train_dir = os.path.join(PATH, 'train')

validation_dir = os.path.join(PATH, 'validation')

BATCH_SIZE = 32

IMG_SIZE = (160, 160)

train_dataset = tf.keras.utils.image_dataset_from_directory(train_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

Downloading data from https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip 68608000/68606236 [==============================] - 1s 0us/step 68616192/68606236 [==============================] - 1s 0us/step Found 2000 files belonging to 2 classes.

validation_dataset = tf.keras.utils.image_dataset_from_directory(validation_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

Found 1000 files belonging to 2 classes.

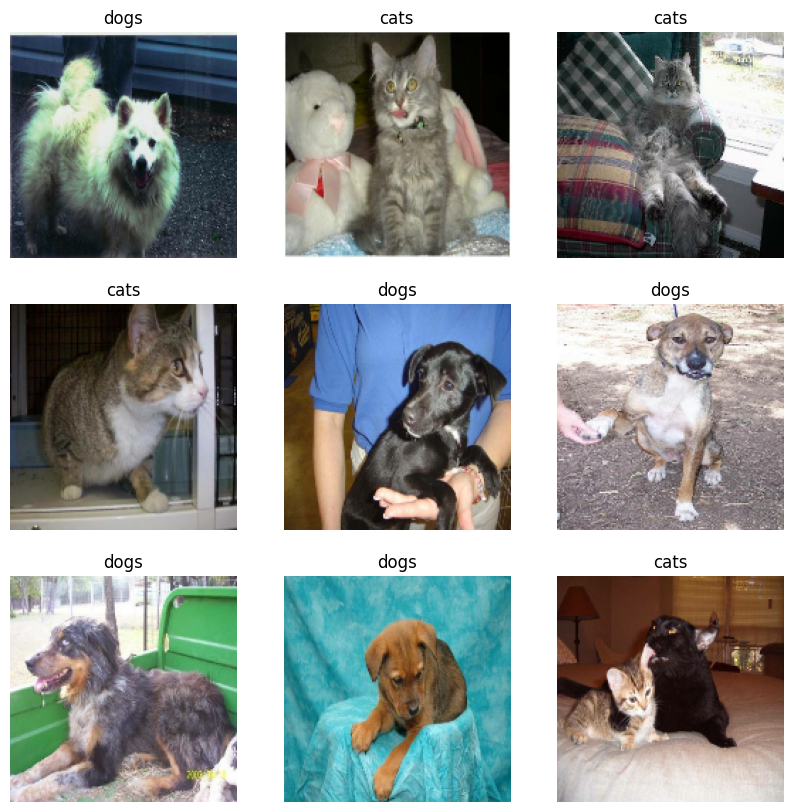

Tampilkan sembilan gambar dan label pertama dari set pelatihan:

class_names = train_dataset.class_names

plt.figure(figsize=(10, 10))

for images, labels in train_dataset.take(1):

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(images[i].numpy().astype("uint8"))

plt.title(class_names[labels[i]])

plt.axis("off")

Karena set data asli tidak berisi set pengujian, Anda akan membuatnya. Untuk melakukannya, tentukan berapa banyak kumpulan data yang tersedia di set validasi menggunakan tf.data.experimental.cardinality , lalu pindahkan 20% darinya ke set pengujian.

val_batches = tf.data.experimental.cardinality(validation_dataset)

test_dataset = validation_dataset.take(val_batches // 5)

validation_dataset = validation_dataset.skip(val_batches // 5)

print('Number of validation batches: %d' % tf.data.experimental.cardinality(validation_dataset))

print('Number of test batches: %d' % tf.data.experimental.cardinality(test_dataset))

Number of validation batches: 26 Number of test batches: 6

Konfigurasikan kumpulan data untuk kinerja

Gunakan buffered prefetching untuk memuat gambar dari disk tanpa I/O menjadi pemblokiran. Untuk mempelajari lebih lanjut tentang metode ini, lihat panduan kinerja data .

AUTOTUNE = tf.data.AUTOTUNE

train_dataset = train_dataset.prefetch(buffer_size=AUTOTUNE)

validation_dataset = validation_dataset.prefetch(buffer_size=AUTOTUNE)

test_dataset = test_dataset.prefetch(buffer_size=AUTOTUNE)

Gunakan augmentasi data

Bila Anda tidak memiliki kumpulan data gambar yang besar, merupakan praktik yang baik untuk memperkenalkan keragaman sampel secara artifisial dengan menerapkan transformasi acak, namun realistis, ke gambar pelatihan, seperti rotasi dan pembalikan horizontal. Ini membantu mengekspos model ke berbagai aspek data pelatihan dan mengurangi overfitting . Anda dapat mempelajari lebih lanjut tentang augmentasi data dalam tutorial ini.

data_augmentation = tf.keras.Sequential([

tf.keras.layers.RandomFlip('horizontal'),

tf.keras.layers.RandomRotation(0.2),

])

Mari kita berulang kali menerapkan lapisan ini ke gambar yang sama dan melihat hasilnya.

for image, _ in train_dataset.take(1):

plt.figure(figsize=(10, 10))

first_image = image[0]

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

augmented_image = data_augmentation(tf.expand_dims(first_image, 0))

plt.imshow(augmented_image[0] / 255)

plt.axis('off')

Skala ulang nilai piksel

Sebentar lagi, Anda akan mengunduh tf.keras.applications.MobileNetV2 untuk digunakan sebagai model dasar Anda. Model ini mengharapkan nilai piksel dalam [-1, 1] , tetapi pada titik ini, nilai piksel pada gambar Anda berada di [0, 255] . Untuk mengubah skalanya, gunakan metode prapemrosesan yang disertakan dengan model.

preprocess_input = tf.keras.applications.mobilenet_v2.preprocess_input

rescale = tf.keras.layers.Rescaling(1./127.5, offset=-1)

Buat model dasar dari konvnet yang telah dilatih sebelumnya

Anda akan membuat model dasar dari model MobileNet V2 yang dikembangkan di Google. Ini telah dilatih sebelumnya pada kumpulan data ImageNet, kumpulan data besar yang terdiri dari 1,4 juta gambar dan 1000 kelas. ImageNet adalah kumpulan data pelatihan penelitian dengan berbagai kategori seperti jackfruit dan syringe . Basis pengetahuan ini akan membantu kami mengklasifikasikan kucing dan anjing dari kumpulan data khusus kami.

Pertama, Anda harus memilih lapisan MobileNet V2 mana yang akan Anda gunakan untuk ekstraksi fitur. Lapisan klasifikasi terakhir (di "atas", karena sebagian besar diagram model pembelajaran mesin bergerak dari bawah ke atas) tidak terlalu berguna. Sebagai gantinya, Anda akan mengikuti praktik umum untuk bergantung pada lapisan terakhir sebelum operasi perataan. Lapisan ini disebut "lapisan bottleneck". Fitur lapisan bottleneck mempertahankan lebih umum dibandingkan dengan lapisan akhir/atas.

Pertama, buat instance model MobileNet V2 yang telah dimuat sebelumnya dengan bobot yang dilatih di ImageNet. Dengan menentukan argumen include_top=False , Anda memuat jaringan yang tidak menyertakan lapisan klasifikasi di bagian atas, yang ideal untuk ekstraksi fitur.

# Create the base model from the pre-trained model MobileNet V2

IMG_SHAPE = IMG_SIZE + (3,)

base_model = tf.keras.applications.MobileNetV2(input_shape=IMG_SHAPE,

include_top=False,

weights='imagenet')

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/mobilenet_v2/mobilenet_v2_weights_tf_dim_ordering_tf_kernels_1.0_160_no_top.h5 9412608/9406464 [==============================] - 0s 0us/step 9420800/9406464 [==============================] - 0s 0us/step

Ekstraktor fitur ini mengubah setiap gambar 160x160x3 menjadi blok fitur 5x5x1280 . Mari kita lihat apa yang dilakukannya pada kumpulan contoh gambar:

image_batch, label_batch = next(iter(train_dataset))

feature_batch = base_model(image_batch)

print(feature_batch.shape)

(32, 5, 5, 1280)

Ekstraksi fitur

Pada langkah ini, Anda akan membekukan basis konvolusi yang dibuat dari langkah sebelumnya dan untuk digunakan sebagai ekstraktor fitur. Selain itu, Anda menambahkan pengklasifikasi di atasnya dan melatih pengklasifikasi tingkat atas.

Bekukan basis konvolusi

Penting untuk membekukan basis konvolusi sebelum Anda mengompilasi dan melatih model. Pembekuan (dengan menyetel layer.trainable = False) mencegah pembobotan pada layer tertentu agar tidak diperbarui selama pelatihan. MobileNet V2 memiliki banyak lapisan, jadi menyetel seluruh flag yang trainable untuk model ke False akan membekukan semuanya.

base_model.trainable = False

Catatan penting tentang lapisan BatchNormalization

Banyak model berisi lapisan tf.keras.layers.BatchNormalization . Lapisan ini adalah kasus khusus dan tindakan pencegahan harus diambil dalam konteks fine-tuning, seperti yang ditunjukkan nanti dalam tutorial ini.

Saat Anda menyetel layer.trainable = False , lapisan BatchNormalization akan berjalan dalam mode inferensi, dan tidak akan memperbarui statistik rata-rata dan variansnya.

Saat Anda mencairkan model yang berisi lapisan BatchNormalization untuk melakukan fine-tuning, Anda harus menjaga lapisan BatchNormalization dalam mode inferensi dengan melewatkan training = False saat memanggil model dasar. Jika tidak, pembaruan yang diterapkan pada bobot yang tidak dapat dilatih akan menghancurkan apa yang telah dipelajari model.

Untuk lebih jelasnya, lihat panduan Transfer learning .

# Let's take a look at the base model architecture

base_model.summary()

Model: "mobilenetv2_1.00_160"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) [(None, 160, 160, 3 0 []

)]

Conv1 (Conv2D) (None, 80, 80, 32) 864 ['input_1[0][0]']

bn_Conv1 (BatchNormalization) (None, 80, 80, 32) 128 ['Conv1[0][0]']

Conv1_relu (ReLU) (None, 80, 80, 32) 0 ['bn_Conv1[0][0]']

expanded_conv_depthwise (Depth (None, 80, 80, 32) 288 ['Conv1_relu[0][0]']

wiseConv2D)

expanded_conv_depthwise_BN (Ba (None, 80, 80, 32) 128 ['expanded_conv_depthwise[0][0]']

tchNormalization)

expanded_conv_depthwise_relu ( (None, 80, 80, 32) 0 ['expanded_conv_depthwise_BN[0][0

ReLU) ]']

expanded_conv_project (Conv2D) (None, 80, 80, 16) 512 ['expanded_conv_depthwise_relu[0]

[0]']

expanded_conv_project_BN (Batc (None, 80, 80, 16) 64 ['expanded_conv_project[0][0]']

hNormalization)

block_1_expand (Conv2D) (None, 80, 80, 96) 1536 ['expanded_conv_project_BN[0][0]'

]

block_1_expand_BN (BatchNormal (None, 80, 80, 96) 384 ['block_1_expand[0][0]']

ization)

block_1_expand_relu (ReLU) (None, 80, 80, 96) 0 ['block_1_expand_BN[0][0]']

block_1_pad (ZeroPadding2D) (None, 81, 81, 96) 0 ['block_1_expand_relu[0][0]']

block_1_depthwise (DepthwiseCo (None, 40, 40, 96) 864 ['block_1_pad[0][0]']

nv2D)

block_1_depthwise_BN (BatchNor (None, 40, 40, 96) 384 ['block_1_depthwise[0][0]']

malization)

block_1_depthwise_relu (ReLU) (None, 40, 40, 96) 0 ['block_1_depthwise_BN[0][0]']

block_1_project (Conv2D) (None, 40, 40, 24) 2304 ['block_1_depthwise_relu[0][0]']

block_1_project_BN (BatchNorma (None, 40, 40, 24) 96 ['block_1_project[0][0]']

lization)

block_2_expand (Conv2D) (None, 40, 40, 144) 3456 ['block_1_project_BN[0][0]']

block_2_expand_BN (BatchNormal (None, 40, 40, 144) 576 ['block_2_expand[0][0]']

ization)

block_2_expand_relu (ReLU) (None, 40, 40, 144) 0 ['block_2_expand_BN[0][0]']

block_2_depthwise (DepthwiseCo (None, 40, 40, 144) 1296 ['block_2_expand_relu[0][0]']

nv2D)

block_2_depthwise_BN (BatchNor (None, 40, 40, 144) 576 ['block_2_depthwise[0][0]']

malization)

block_2_depthwise_relu (ReLU) (None, 40, 40, 144) 0 ['block_2_depthwise_BN[0][0]']

block_2_project (Conv2D) (None, 40, 40, 24) 3456 ['block_2_depthwise_relu[0][0]']

block_2_project_BN (BatchNorma (None, 40, 40, 24) 96 ['block_2_project[0][0]']

lization)

block_2_add (Add) (None, 40, 40, 24) 0 ['block_1_project_BN[0][0]',

'block_2_project_BN[0][0]']

block_3_expand (Conv2D) (None, 40, 40, 144) 3456 ['block_2_add[0][0]']

block_3_expand_BN (BatchNormal (None, 40, 40, 144) 576 ['block_3_expand[0][0]']

ization)

block_3_expand_relu (ReLU) (None, 40, 40, 144) 0 ['block_3_expand_BN[0][0]']

block_3_pad (ZeroPadding2D) (None, 41, 41, 144) 0 ['block_3_expand_relu[0][0]']

block_3_depthwise (DepthwiseCo (None, 20, 20, 144) 1296 ['block_3_pad[0][0]']

nv2D)

block_3_depthwise_BN (BatchNor (None, 20, 20, 144) 576 ['block_3_depthwise[0][0]']

malization)

block_3_depthwise_relu (ReLU) (None, 20, 20, 144) 0 ['block_3_depthwise_BN[0][0]']

block_3_project (Conv2D) (None, 20, 20, 32) 4608 ['block_3_depthwise_relu[0][0]']

block_3_project_BN (BatchNorma (None, 20, 20, 32) 128 ['block_3_project[0][0]']

lization)

block_4_expand (Conv2D) (None, 20, 20, 192) 6144 ['block_3_project_BN[0][0]']

block_4_expand_BN (BatchNormal (None, 20, 20, 192) 768 ['block_4_expand[0][0]']

ization)

block_4_expand_relu (ReLU) (None, 20, 20, 192) 0 ['block_4_expand_BN[0][0]']

block_4_depthwise (DepthwiseCo (None, 20, 20, 192) 1728 ['block_4_expand_relu[0][0]']

nv2D)

block_4_depthwise_BN (BatchNor (None, 20, 20, 192) 768 ['block_4_depthwise[0][0]']

malization)

block_4_depthwise_relu (ReLU) (None, 20, 20, 192) 0 ['block_4_depthwise_BN[0][0]']

block_4_project (Conv2D) (None, 20, 20, 32) 6144 ['block_4_depthwise_relu[0][0]']

block_4_project_BN (BatchNorma (None, 20, 20, 32) 128 ['block_4_project[0][0]']

lization)

block_4_add (Add) (None, 20, 20, 32) 0 ['block_3_project_BN[0][0]',

'block_4_project_BN[0][0]']

block_5_expand (Conv2D) (None, 20, 20, 192) 6144 ['block_4_add[0][0]']

block_5_expand_BN (BatchNormal (None, 20, 20, 192) 768 ['block_5_expand[0][0]']

ization)

block_5_expand_relu (ReLU) (None, 20, 20, 192) 0 ['block_5_expand_BN[0][0]']

block_5_depthwise (DepthwiseCo (None, 20, 20, 192) 1728 ['block_5_expand_relu[0][0]']

nv2D)

block_5_depthwise_BN (BatchNor (None, 20, 20, 192) 768 ['block_5_depthwise[0][0]']

malization)

block_5_depthwise_relu (ReLU) (None, 20, 20, 192) 0 ['block_5_depthwise_BN[0][0]']

block_5_project (Conv2D) (None, 20, 20, 32) 6144 ['block_5_depthwise_relu[0][0]']

block_5_project_BN (BatchNorma (None, 20, 20, 32) 128 ['block_5_project[0][0]']

lization)

block_5_add (Add) (None, 20, 20, 32) 0 ['block_4_add[0][0]',

'block_5_project_BN[0][0]']

block_6_expand (Conv2D) (None, 20, 20, 192) 6144 ['block_5_add[0][0]']

block_6_expand_BN (BatchNormal (None, 20, 20, 192) 768 ['block_6_expand[0][0]']

ization)

block_6_expand_relu (ReLU) (None, 20, 20, 192) 0 ['block_6_expand_BN[0][0]']

block_6_pad (ZeroPadding2D) (None, 21, 21, 192) 0 ['block_6_expand_relu[0][0]']

block_6_depthwise (DepthwiseCo (None, 10, 10, 192) 1728 ['block_6_pad[0][0]']

nv2D)

block_6_depthwise_BN (BatchNor (None, 10, 10, 192) 768 ['block_6_depthwise[0][0]']

malization)

block_6_depthwise_relu (ReLU) (None, 10, 10, 192) 0 ['block_6_depthwise_BN[0][0]']

block_6_project (Conv2D) (None, 10, 10, 64) 12288 ['block_6_depthwise_relu[0][0]']

block_6_project_BN (BatchNorma (None, 10, 10, 64) 256 ['block_6_project[0][0]']

lization)

block_7_expand (Conv2D) (None, 10, 10, 384) 24576 ['block_6_project_BN[0][0]']

block_7_expand_BN (BatchNormal (None, 10, 10, 384) 1536 ['block_7_expand[0][0]']

ization)

block_7_expand_relu (ReLU) (None, 10, 10, 384) 0 ['block_7_expand_BN[0][0]']

block_7_depthwise (DepthwiseCo (None, 10, 10, 384) 3456 ['block_7_expand_relu[0][0]']

nv2D)

block_7_depthwise_BN (BatchNor (None, 10, 10, 384) 1536 ['block_7_depthwise[0][0]']

malization)

block_7_depthwise_relu (ReLU) (None, 10, 10, 384) 0 ['block_7_depthwise_BN[0][0]']

block_7_project (Conv2D) (None, 10, 10, 64) 24576 ['block_7_depthwise_relu[0][0]']

block_7_project_BN (BatchNorma (None, 10, 10, 64) 256 ['block_7_project[0][0]']

lization)

block_7_add (Add) (None, 10, 10, 64) 0 ['block_6_project_BN[0][0]',

'block_7_project_BN[0][0]']

block_8_expand (Conv2D) (None, 10, 10, 384) 24576 ['block_7_add[0][0]']

block_8_expand_BN (BatchNormal (None, 10, 10, 384) 1536 ['block_8_expand[0][0]']

ization)

block_8_expand_relu (ReLU) (None, 10, 10, 384) 0 ['block_8_expand_BN[0][0]']

block_8_depthwise (DepthwiseCo (None, 10, 10, 384) 3456 ['block_8_expand_relu[0][0]']

nv2D)

block_8_depthwise_BN (BatchNor (None, 10, 10, 384) 1536 ['block_8_depthwise[0][0]']

malization)

block_8_depthwise_relu (ReLU) (None, 10, 10, 384) 0 ['block_8_depthwise_BN[0][0]']

block_8_project (Conv2D) (None, 10, 10, 64) 24576 ['block_8_depthwise_relu[0][0]']

block_8_project_BN (BatchNorma (None, 10, 10, 64) 256 ['block_8_project[0][0]']

lization)

block_8_add (Add) (None, 10, 10, 64) 0 ['block_7_add[0][0]',

'block_8_project_BN[0][0]']

block_9_expand (Conv2D) (None, 10, 10, 384) 24576 ['block_8_add[0][0]']

block_9_expand_BN (BatchNormal (None, 10, 10, 384) 1536 ['block_9_expand[0][0]']

ization)

block_9_expand_relu (ReLU) (None, 10, 10, 384) 0 ['block_9_expand_BN[0][0]']

block_9_depthwise (DepthwiseCo (None, 10, 10, 384) 3456 ['block_9_expand_relu[0][0]']

nv2D)

block_9_depthwise_BN (BatchNor (None, 10, 10, 384) 1536 ['block_9_depthwise[0][0]']

malization)

block_9_depthwise_relu (ReLU) (None, 10, 10, 384) 0 ['block_9_depthwise_BN[0][0]']

block_9_project (Conv2D) (None, 10, 10, 64) 24576 ['block_9_depthwise_relu[0][0]']

block_9_project_BN (BatchNorma (None, 10, 10, 64) 256 ['block_9_project[0][0]']

lization)

block_9_add (Add) (None, 10, 10, 64) 0 ['block_8_add[0][0]',

'block_9_project_BN[0][0]']

block_10_expand (Conv2D) (None, 10, 10, 384) 24576 ['block_9_add[0][0]']

block_10_expand_BN (BatchNorma (None, 10, 10, 384) 1536 ['block_10_expand[0][0]']

lization)

block_10_expand_relu (ReLU) (None, 10, 10, 384) 0 ['block_10_expand_BN[0][0]']

block_10_depthwise (DepthwiseC (None, 10, 10, 384) 3456 ['block_10_expand_relu[0][0]']

onv2D)

block_10_depthwise_BN (BatchNo (None, 10, 10, 384) 1536 ['block_10_depthwise[0][0]']

rmalization)

block_10_depthwise_relu (ReLU) (None, 10, 10, 384) 0 ['block_10_depthwise_BN[0][0]']

block_10_project (Conv2D) (None, 10, 10, 96) 36864 ['block_10_depthwise_relu[0][0]']

block_10_project_BN (BatchNorm (None, 10, 10, 96) 384 ['block_10_project[0][0]']

alization)

block_11_expand (Conv2D) (None, 10, 10, 576) 55296 ['block_10_project_BN[0][0]']

block_11_expand_BN (BatchNorma (None, 10, 10, 576) 2304 ['block_11_expand[0][0]']

lization)

block_11_expand_relu (ReLU) (None, 10, 10, 576) 0 ['block_11_expand_BN[0][0]']

block_11_depthwise (DepthwiseC (None, 10, 10, 576) 5184 ['block_11_expand_relu[0][0]']

onv2D)

block_11_depthwise_BN (BatchNo (None, 10, 10, 576) 2304 ['block_11_depthwise[0][0]']

rmalization)

block_11_depthwise_relu (ReLU) (None, 10, 10, 576) 0 ['block_11_depthwise_BN[0][0]']

block_11_project (Conv2D) (None, 10, 10, 96) 55296 ['block_11_depthwise_relu[0][0]']

block_11_project_BN (BatchNorm (None, 10, 10, 96) 384 ['block_11_project[0][0]']

alization)

block_11_add (Add) (None, 10, 10, 96) 0 ['block_10_project_BN[0][0]',

'block_11_project_BN[0][0]']

block_12_expand (Conv2D) (None, 10, 10, 576) 55296 ['block_11_add[0][0]']

block_12_expand_BN (BatchNorma (None, 10, 10, 576) 2304 ['block_12_expand[0][0]']

lization)

block_12_expand_relu (ReLU) (None, 10, 10, 576) 0 ['block_12_expand_BN[0][0]']

block_12_depthwise (DepthwiseC (None, 10, 10, 576) 5184 ['block_12_expand_relu[0][0]']

onv2D)

block_12_depthwise_BN (BatchNo (None, 10, 10, 576) 2304 ['block_12_depthwise[0][0]']

rmalization)

block_12_depthwise_relu (ReLU) (None, 10, 10, 576) 0 ['block_12_depthwise_BN[0][0]']

block_12_project (Conv2D) (None, 10, 10, 96) 55296 ['block_12_depthwise_relu[0][0]']

block_12_project_BN (BatchNorm (None, 10, 10, 96) 384 ['block_12_project[0][0]']

alization)

block_12_add (Add) (None, 10, 10, 96) 0 ['block_11_add[0][0]',

'block_12_project_BN[0][0]']

block_13_expand (Conv2D) (None, 10, 10, 576) 55296 ['block_12_add[0][0]']

block_13_expand_BN (BatchNorma (None, 10, 10, 576) 2304 ['block_13_expand[0][0]']

lization)

block_13_expand_relu (ReLU) (None, 10, 10, 576) 0 ['block_13_expand_BN[0][0]']

block_13_pad (ZeroPadding2D) (None, 11, 11, 576) 0 ['block_13_expand_relu[0][0]']

block_13_depthwise (DepthwiseC (None, 5, 5, 576) 5184 ['block_13_pad[0][0]']

onv2D)

block_13_depthwise_BN (BatchNo (None, 5, 5, 576) 2304 ['block_13_depthwise[0][0]']

rmalization)

block_13_depthwise_relu (ReLU) (None, 5, 5, 576) 0 ['block_13_depthwise_BN[0][0]']

block_13_project (Conv2D) (None, 5, 5, 160) 92160 ['block_13_depthwise_relu[0][0]']

block_13_project_BN (BatchNorm (None, 5, 5, 160) 640 ['block_13_project[0][0]']

alization)

block_14_expand (Conv2D) (None, 5, 5, 960) 153600 ['block_13_project_BN[0][0]']

block_14_expand_BN (BatchNorma (None, 5, 5, 960) 3840 ['block_14_expand[0][0]']

lization)

block_14_expand_relu (ReLU) (None, 5, 5, 960) 0 ['block_14_expand_BN[0][0]']

block_14_depthwise (DepthwiseC (None, 5, 5, 960) 8640 ['block_14_expand_relu[0][0]']

onv2D)

block_14_depthwise_BN (BatchNo (None, 5, 5, 960) 3840 ['block_14_depthwise[0][0]']

rmalization)

block_14_depthwise_relu (ReLU) (None, 5, 5, 960) 0 ['block_14_depthwise_BN[0][0]']

block_14_project (Conv2D) (None, 5, 5, 160) 153600 ['block_14_depthwise_relu[0][0]']

block_14_project_BN (BatchNorm (None, 5, 5, 160) 640 ['block_14_project[0][0]']

alization)

block_14_add (Add) (None, 5, 5, 160) 0 ['block_13_project_BN[0][0]',

'block_14_project_BN[0][0]']

block_15_expand (Conv2D) (None, 5, 5, 960) 153600 ['block_14_add[0][0]']

block_15_expand_BN (BatchNorma (None, 5, 5, 960) 3840 ['block_15_expand[0][0]']

lization)

block_15_expand_relu (ReLU) (None, 5, 5, 960) 0 ['block_15_expand_BN[0][0]']

block_15_depthwise (DepthwiseC (None, 5, 5, 960) 8640 ['block_15_expand_relu[0][0]']

onv2D)

block_15_depthwise_BN (BatchNo (None, 5, 5, 960) 3840 ['block_15_depthwise[0][0]']

rmalization)

block_15_depthwise_relu (ReLU) (None, 5, 5, 960) 0 ['block_15_depthwise_BN[0][0]']

block_15_project (Conv2D) (None, 5, 5, 160) 153600 ['block_15_depthwise_relu[0][0]']

block_15_project_BN (BatchNorm (None, 5, 5, 160) 640 ['block_15_project[0][0]']

alization)

block_15_add (Add) (None, 5, 5, 160) 0 ['block_14_add[0][0]',

'block_15_project_BN[0][0]']

block_16_expand (Conv2D) (None, 5, 5, 960) 153600 ['block_15_add[0][0]']

block_16_expand_BN (BatchNorma (None, 5, 5, 960) 3840 ['block_16_expand[0][0]']

lization)

block_16_expand_relu (ReLU) (None, 5, 5, 960) 0 ['block_16_expand_BN[0][0]']

block_16_depthwise (DepthwiseC (None, 5, 5, 960) 8640 ['block_16_expand_relu[0][0]']

onv2D)

block_16_depthwise_BN (BatchNo (None, 5, 5, 960) 3840 ['block_16_depthwise[0][0]']

rmalization)

block_16_depthwise_relu (ReLU) (None, 5, 5, 960) 0 ['block_16_depthwise_BN[0][0]']

block_16_project (Conv2D) (None, 5, 5, 320) 307200 ['block_16_depthwise_relu[0][0]']

block_16_project_BN (BatchNorm (None, 5, 5, 320) 1280 ['block_16_project[0][0]']

alization)

Conv_1 (Conv2D) (None, 5, 5, 1280) 409600 ['block_16_project_BN[0][0]']

Conv_1_bn (BatchNormalization) (None, 5, 5, 1280) 5120 ['Conv_1[0][0]']

out_relu (ReLU) (None, 5, 5, 1280) 0 ['Conv_1_bn[0][0]']

==================================================================================================

Total params: 2,257,984

Trainable params: 0

Non-trainable params: 2,257,984

__________________________________________________________________________________________________

Tambahkan kepala klasifikasi

Untuk menghasilkan prediksi dari blok fitur, rata-ratakan lokasi spasial 5x5 spasial, menggunakan lapisan tf.keras.layers.GlobalAveragePooling2D untuk mengonversi fitur menjadi satu vektor 1280 elemen per gambar.

global_average_layer = tf.keras.layers.GlobalAveragePooling2D()

feature_batch_average = global_average_layer(feature_batch)

print(feature_batch_average.shape)

(32, 1280)

Terapkan lapisan tf.keras.layers.Dense untuk mengonversi fitur ini menjadi satu prediksi per gambar. Anda tidak memerlukan fungsi aktivasi di sini karena prediksi ini akan diperlakukan sebagai logit , atau nilai prediksi mentah. Angka positif memprediksi kelas 1, angka negatif memprediksi kelas 0.

prediction_layer = tf.keras.layers.Dense(1)

prediction_batch = prediction_layer(feature_batch_average)

print(prediction_batch.shape)

(32, 1)

Bangun model dengan menyatukan lapisan augmentasi data, penskalaan ulang, base_model , dan ekstraktor fitur menggunakan Keras Functional API . Seperti yang disebutkan sebelumnya, gunakan training=False karena model kami berisi lapisan BatchNormalization .

inputs = tf.keras.Input(shape=(160, 160, 3))

x = data_augmentation(inputs)

x = preprocess_input(x)

x = base_model(x, training=False)

x = global_average_layer(x)

x = tf.keras.layers.Dropout(0.2)(x)

outputs = prediction_layer(x)

model = tf.keras.Model(inputs, outputs)

Kompilasi modelnya

Kompilasi model sebelum melatihnya. Karena ada dua kelas, gunakan kerugian tf.keras.losses.BinaryCrossentropy dengan from_logits=True karena model menyediakan output linier.

base_learning_rate = 0.0001

model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=base_learning_rate),

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

model.summary()

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 160, 160, 3)] 0

sequential (Sequential) (None, 160, 160, 3) 0

tf.math.truediv (TFOpLambda (None, 160, 160, 3) 0

)

tf.math.subtract (TFOpLambd (None, 160, 160, 3) 0

a)

mobilenetv2_1.00_160 (Funct (None, 5, 5, 1280) 2257984

ional)

global_average_pooling2d (G (None, 1280) 0

lobalAveragePooling2D)

dropout (Dropout) (None, 1280) 0

dense (Dense) (None, 1) 1281

=================================================================

Total params: 2,259,265

Trainable params: 1,281

Non-trainable params: 2,257,984

_________________________________________________________________

2,5 juta parameter di MobileNet dibekukan, tetapi ada 1,2 ribu parameter yang dapat dilatih di lapisan Padat. Ini dibagi antara dua objek tf.Variable , bobot dan bias.

len(model.trainable_variables)

2

Latih modelnya

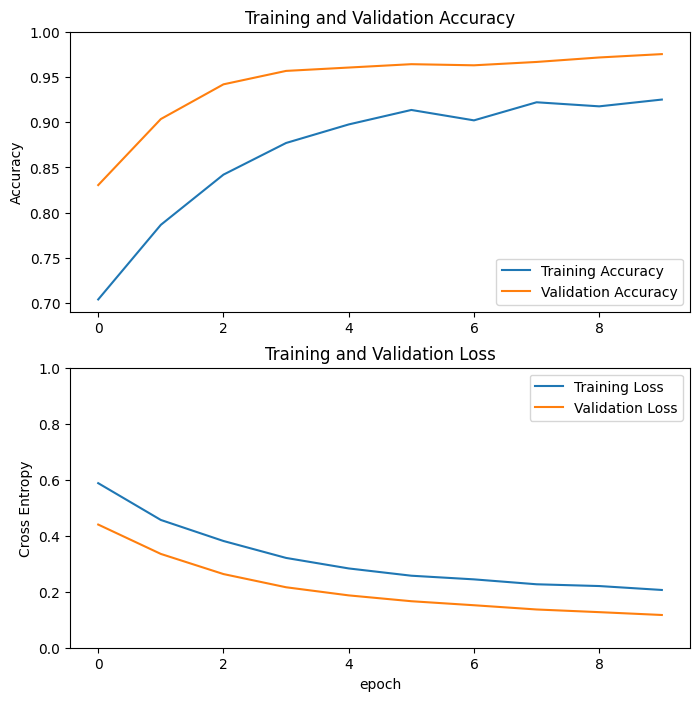

Setelah pelatihan selama 10 epoch, Anda akan melihat akurasi ~94% pada set validasi.

initial_epochs = 10

loss0, accuracy0 = model.evaluate(validation_dataset)

26/26 [==============================] - 2s 16ms/step - loss: 0.7428 - accuracy: 0.5186

print("initial loss: {:.2f}".format(loss0))

print("initial accuracy: {:.2f}".format(accuracy0))

initial loss: 0.74 initial accuracy: 0.52

history = model.fit(train_dataset,

epochs=initial_epochs,

validation_data=validation_dataset)

Epoch 1/10 63/63 [==============================] - 4s 23ms/step - loss: 0.6804 - accuracy: 0.5680 - val_loss: 0.4981 - val_accuracy: 0.7054 Epoch 2/10 63/63 [==============================] - 1s 22ms/step - loss: 0.5044 - accuracy: 0.7170 - val_loss: 0.3598 - val_accuracy: 0.8144 Epoch 3/10 63/63 [==============================] - 1s 21ms/step - loss: 0.4109 - accuracy: 0.7845 - val_loss: 0.2810 - val_accuracy: 0.8861 Epoch 4/10 63/63 [==============================] - 1s 21ms/step - loss: 0.3285 - accuracy: 0.8445 - val_loss: 0.2256 - val_accuracy: 0.9208 Epoch 5/10 63/63 [==============================] - 1s 21ms/step - loss: 0.3108 - accuracy: 0.8555 - val_loss: 0.1986 - val_accuracy: 0.9307 Epoch 6/10 63/63 [==============================] - 1s 21ms/step - loss: 0.2659 - accuracy: 0.8855 - val_loss: 0.1703 - val_accuracy: 0.9418 Epoch 7/10 63/63 [==============================] - 1s 21ms/step - loss: 0.2459 - accuracy: 0.8935 - val_loss: 0.1495 - val_accuracy: 0.9517 Epoch 8/10 63/63 [==============================] - 1s 21ms/step - loss: 0.2315 - accuracy: 0.8950 - val_loss: 0.1454 - val_accuracy: 0.9542 Epoch 9/10 63/63 [==============================] - 1s 21ms/step - loss: 0.2204 - accuracy: 0.9030 - val_loss: 0.1326 - val_accuracy: 0.9592 Epoch 10/10 63/63 [==============================] - 1s 21ms/step - loss: 0.2180 - accuracy: 0.9115 - val_loss: 0.1215 - val_accuracy: 0.9604

kurva belajar

Mari kita lihat kurva pembelajaran dari pelatihan dan akurasi/kerugian validasi saat menggunakan model dasar MobileNetV2 sebagai ekstraktor fitur tetap.

acc = history.history['accuracy']

val_acc = history.history['val_accuracy']

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.ylabel('Accuracy')

plt.ylim([min(plt.ylim()),1])

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.ylabel('Cross Entropy')

plt.ylim([0,1.0])

plt.title('Training and Validation Loss')

plt.xlabel('epoch')

plt.show()

Pada tingkat yang lebih rendah, ini juga karena metrik pelatihan melaporkan rata-rata untuk suatu epoch, sedangkan metrik validasi dievaluasi setelah epoch, jadi metrik validasi melihat model yang telah dilatih sedikit lebih lama.

Mencari setelan

Dalam eksperimen ekstraksi fitur, Anda hanya melatih beberapa lapisan di atas model dasar MobileNetV2. Bobot jaringan pra-terlatih tidak diperbarui selama pelatihan.

Salah satu cara untuk meningkatkan performa lebih jauh lagi adalah dengan melatih (atau "menyesuaikan") bobot lapisan atas model yang telah dilatih sebelumnya di samping pelatihan pengklasifikasi yang Anda tambahkan. Proses pelatihan akan memaksa bobot untuk disetel dari peta fitur umum ke fitur yang terkait secara khusus dengan kumpulan data.

Selain itu, Anda harus mencoba menyempurnakan sejumlah kecil lapisan atas daripada keseluruhan model MobileNet. Di sebagian besar jaringan konvolusi, semakin tinggi lapisannya, semakin terspesialisasi. Beberapa lapisan pertama mempelajari fitur yang sangat sederhana dan umum yang digeneralisasikan ke hampir semua jenis gambar. Saat Anda naik lebih tinggi, fitur-fiturnya semakin spesifik untuk kumpulan data tempat model dilatih. Tujuan fine-tuning adalah untuk mengadaptasi fitur-fitur khusus ini untuk bekerja dengan dataset baru, daripada menimpa pembelajaran generik.

Un-freeze lapisan atas model

Yang perlu Anda lakukan adalah mencairkan base_model dan mengatur lapisan bawah agar tidak dapat dilatih. Kemudian, Anda harus mengkompilasi ulang model (diperlukan agar perubahan ini diterapkan), dan melanjutkan pelatihan.

base_model.trainable = True

# Let's take a look to see how many layers are in the base model

print("Number of layers in the base model: ", len(base_model.layers))

# Fine-tune from this layer onwards

fine_tune_at = 100

# Freeze all the layers before the `fine_tune_at` layer

for layer in base_model.layers[:fine_tune_at]:

layer.trainable = False

Number of layers in the base model: 154

Kompilasi modelnya

Saat Anda melatih model yang jauh lebih besar dan ingin menyesuaikan kembali bobot yang telah dilatih sebelumnya, penting untuk menggunakan tingkat pembelajaran yang lebih rendah pada tahap ini. Jika tidak, model Anda bisa overfit dengan sangat cepat.

model.compile(loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

optimizer = tf.keras.optimizers.RMSprop(learning_rate=base_learning_rate/10),

metrics=['accuracy'])

model.summary()

Model: "model"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_2 (InputLayer) [(None, 160, 160, 3)] 0

sequential (Sequential) (None, 160, 160, 3) 0

tf.math.truediv (TFOpLambda (None, 160, 160, 3) 0

)

tf.math.subtract (TFOpLambd (None, 160, 160, 3) 0

a)

mobilenetv2_1.00_160 (Funct (None, 5, 5, 1280) 2257984

ional)

global_average_pooling2d (G (None, 1280) 0

lobalAveragePooling2D)

dropout (Dropout) (None, 1280) 0

dense (Dense) (None, 1) 1281

=================================================================

Total params: 2,259,265

Trainable params: 1,862,721

Non-trainable params: 396,544

_________________________________________________________________

len(model.trainable_variables)

56

Lanjutkan melatih model

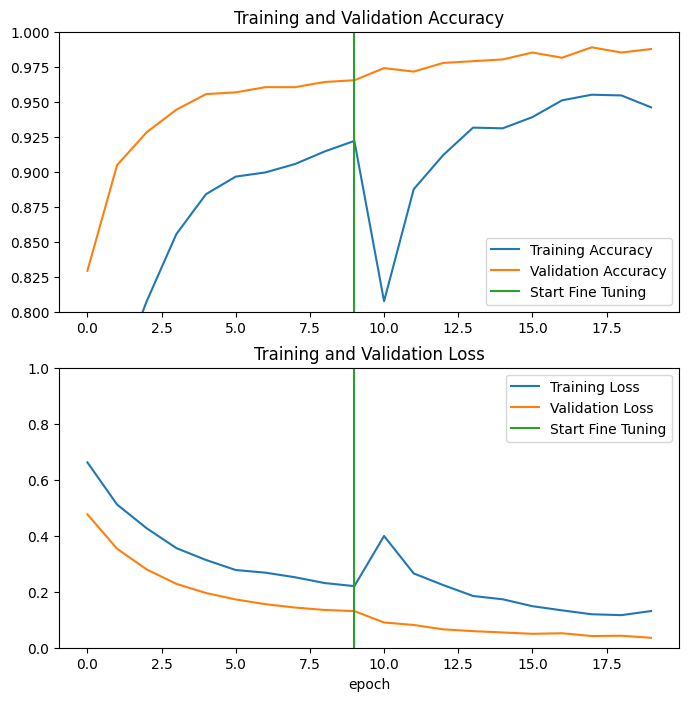

Jika Anda dilatih untuk konvergensi sebelumnya, langkah ini akan meningkatkan akurasi Anda dengan beberapa poin persentase.

fine_tune_epochs = 10

total_epochs = initial_epochs + fine_tune_epochs

history_fine = model.fit(train_dataset,

epochs=total_epochs,

initial_epoch=history.epoch[-1],

validation_data=validation_dataset)

Epoch 10/20 63/63 [==============================] - 7s 40ms/step - loss: 0.1545 - accuracy: 0.9335 - val_loss: 0.0531 - val_accuracy: 0.9864 Epoch 11/20 63/63 [==============================] - 2s 28ms/step - loss: 0.1161 - accuracy: 0.9540 - val_loss: 0.0500 - val_accuracy: 0.9814 Epoch 12/20 63/63 [==============================] - 2s 28ms/step - loss: 0.1125 - accuracy: 0.9525 - val_loss: 0.0379 - val_accuracy: 0.9876 Epoch 13/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0891 - accuracy: 0.9625 - val_loss: 0.0472 - val_accuracy: 0.9889 Epoch 14/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0844 - accuracy: 0.9680 - val_loss: 0.0478 - val_accuracy: 0.9889 Epoch 15/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0857 - accuracy: 0.9645 - val_loss: 0.0354 - val_accuracy: 0.9839 Epoch 16/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0785 - accuracy: 0.9690 - val_loss: 0.0449 - val_accuracy: 0.9864 Epoch 17/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0669 - accuracy: 0.9740 - val_loss: 0.0375 - val_accuracy: 0.9839 Epoch 18/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0701 - accuracy: 0.9695 - val_loss: 0.0324 - val_accuracy: 0.9864 Epoch 19/20 63/63 [==============================] - 2s 28ms/step - loss: 0.0636 - accuracy: 0.9760 - val_loss: 0.0465 - val_accuracy: 0.9790 Epoch 20/20 63/63 [==============================] - 2s 29ms/step - loss: 0.0585 - accuracy: 0.9765 - val_loss: 0.0392 - val_accuracy: 0.9851

Mari kita lihat kurva pembelajaran dari pelatihan dan akurasi/kerugian validasi saat menyempurnakan beberapa lapisan terakhir model dasar MobileNetV2 dan melatih pengklasifikasi di atasnya. Kehilangan validasi jauh lebih tinggi daripada kerugian pelatihan, jadi Anda mungkin mendapatkan beberapa overfitting.

Anda juga mungkin mendapatkan beberapa overfitting karena set pelatihan baru relatif kecil dan mirip dengan set data MobileNetV2 asli.

Setelah fine tuning, model hampir mencapai akurasi 98% pada set validasi.

acc += history_fine.history['accuracy']

val_acc += history_fine.history['val_accuracy']

loss += history_fine.history['loss']

val_loss += history_fine.history['val_loss']

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.ylim([0.8, 1])

plt.plot([initial_epochs-1,initial_epochs-1],

plt.ylim(), label='Start Fine Tuning')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.ylim([0, 1.0])

plt.plot([initial_epochs-1,initial_epochs-1],

plt.ylim(), label='Start Fine Tuning')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.xlabel('epoch')

plt.show()

Evaluasi dan prediksi

Akhirnya Anda dapat memverifikasi kinerja model pada data baru menggunakan test set.

loss, accuracy = model.evaluate(test_dataset)

print('Test accuracy :', accuracy)

6/6 [==============================] - 0s 13ms/step - loss: 0.0281 - accuracy: 0.9948 Test accuracy : 0.9947916865348816

Dan sekarang Anda siap menggunakan model ini untuk memprediksi apakah hewan peliharaan Anda adalah kucing atau anjing.

# Retrieve a batch of images from the test set

image_batch, label_batch = test_dataset.as_numpy_iterator().next()

predictions = model.predict_on_batch(image_batch).flatten()

# Apply a sigmoid since our model returns logits

predictions = tf.nn.sigmoid(predictions)

predictions = tf.where(predictions < 0.5, 0, 1)

print('Predictions:\n', predictions.numpy())

print('Labels:\n', label_batch)

plt.figure(figsize=(10, 10))

for i in range(9):

ax = plt.subplot(3, 3, i + 1)

plt.imshow(image_batch[i].astype("uint8"))

plt.title(class_names[predictions[i]])

plt.axis("off")

Predictions: [0 1 1 1 1 0 1 1 1 0 1 1 0 1 1 1 0 0 0 1 0 1 0 0 1 1 1 0 0 0 1 0] Labels: [0 1 1 1 1 0 1 1 1 0 1 1 0 1 1 1 0 0 0 1 0 1 0 0 1 1 1 0 0 0 1 0]

Ringkasan

Menggunakan model yang telah dilatih sebelumnya untuk ekstraksi fitur : Saat bekerja dengan kumpulan data kecil, merupakan praktik umum untuk memanfaatkan fitur yang dipelajari oleh model yang dilatih pada kumpulan data yang lebih besar dalam domain yang sama. Ini dilakukan dengan membuat instance model yang telah dilatih sebelumnya dan menambahkan classifier yang terhubung penuh di atasnya. Model pra-pelatihan "dibekukan" dan hanya bobot pengklasifikasi yang diperbarui selama pelatihan. Dalam hal ini, basis konvolusi mengekstrak semua fitur yang terkait dengan setiap gambar dan Anda baru saja melatih pengklasifikasi yang menentukan kelas gambar yang diberikan kumpulan fitur yang diekstraksi.

Menyesuaikan model yang telah dilatih sebelumnya : Untuk lebih meningkatkan kinerja, seseorang mungkin ingin menggunakan kembali lapisan tingkat atas dari model yang telah dilatih sebelumnya ke kumpulan data baru melalui penyetelan halus. Dalam hal ini, Anda menyetel bobot Anda sedemikian rupa sehingga model Anda mempelajari fitur tingkat tinggi khusus untuk kumpulan data. Teknik ini biasanya direkomendasikan ketika dataset pelatihan besar dan sangat mirip dengan dataset asli yang digunakan untuk melatih model pra-pelatihan.

Untuk mempelajari lebih lanjut, kunjungi panduan pembelajaran Transfer .

# MIT License

#

# Copyright (c) 2017 François Chollet # IGNORE_COPYRIGHT: cleared by OSS licensing

#

# Permission is hereby granted, free of charge, to any person obtaining a

# copy of this software and associated documentation files (the "Software"),

# to deal in the Software without restriction, including without limitation

# the rights to use, copy, modify, merge, publish, distribute, sublicense,

# and/or sell copies of the Software, and to permit persons to whom the

# Software is furnished to do so, subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in

# all copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL

# THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER

# DEALINGS IN THE SOFTWARE.